Authors: Shoubin Yu*, Jaehong Yoon*, Mohit Bansal

- Jun 14, 2024. Check our new arXiv-version2 for exciting additions to CREMA:

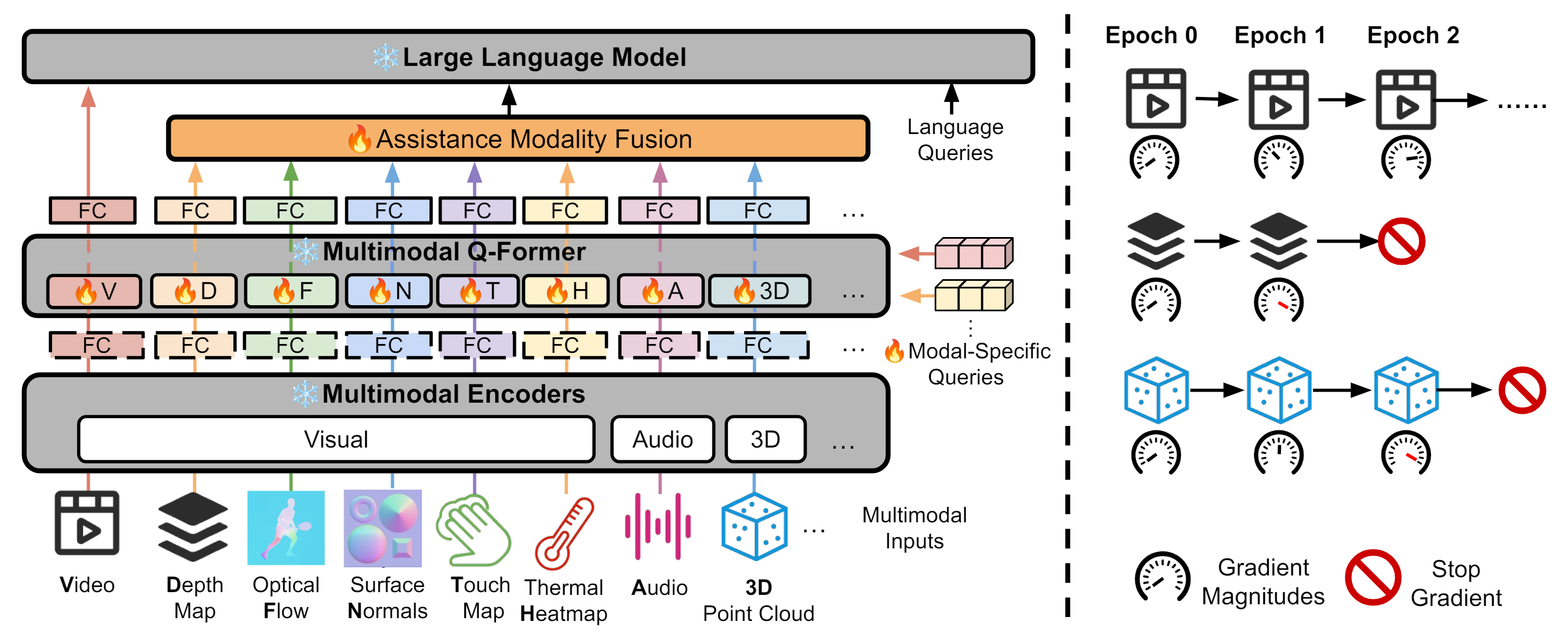

- New modality-sequential modular training & modality-adaptive early exit strategy to handle learning with many modalities.

- More unique/rare multimodal reasoning tasks (video-touch and video-thermal QA) to further demonstrate the generalizability of CREMA.

- `./lavis/`: CREMA code

- `./run_scripts`: running scripts for CREMA training/inference

- (Optional) Create a conda environment:

`conda create -n crema python=3.8`

`conda activate crema`

- Build from source:

`pip install -e .`

Visual Encoder: we adopt pre-trained ViT-G (1B); the codebase downloads the model automatically.

Audio Encoder: we use pre-trained BEATs (iter3+). Please download the model here and update the path in the code.

3D Encoder: we conduct offline feature extraction following 3D-LLM. Please refer to this page for pre-extracted features, and update the storage path in the dataset config.

Multimodal Q-Former: we initialize the query tokens and FC layer for each MMQA in the Multimodal Q-Former from pre-trained BLIP-2 model checkpoints. We host the Multimodal Q-Former with pre-trained MMQA-audio and MMQA-3D on HuggingFace, and the Multimodal Q-Former initialized from BLIP-2 can be found here.

| Dataset | Modalities |
|---|---|
| SQA3D | Video+3D+Depth+Normal |
| MUSIC-AVQA | Video+Audio+Flow+Normal+Depth |
| NExT-QA | Video+Flow+Depth+Normal |

We test our model on:

- MUSIC-AVQA: we follow the original MUSIC-AVQA data format.

- Touch-QA (reformulated from Touch&Go): we follow the SeViLA data format and release our data here.

- Thermal-QA (reformulated from Thermal-IM): we follow the SeViLA data format and release our data here.

To get trimmed Touch-QA and Thermal-QA video frames, first download the raw videos from each original data project, set the custom data path in our scripts, and then run:

`python trim_video.py`

`python decode_frames.py`

We extract various extra modalities from raw video with pre-trained models; please refer to each model repo and the paper appendix for more details.
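For readers who want to see what the trimming and frame-decoding step amounts to, here is a minimal sketch that builds the corresponding `ffmpeg` commands. It is not the repo's `trim_video.py` or `decode_frames.py`; the function names, the output file pattern, the codec choice, and the default sampling rate are all assumptions made for illustration.

```python
from pathlib import Path


def trim_command(src, dst, start_s, end_s):
    """Build an ffmpeg command that cuts the span [start_s, end_s] out of src.

    Hypothetical helper: the repo's own script may trim differently.
    """
    if end_s <= start_s:
        raise ValueError("end_s must be greater than start_s")
    return [
        "ffmpeg", "-y",                     # overwrite the output if it exists
        "-ss", f"{start_s:.3f}",            # seek to the clip start (seconds)
        "-i", str(src),
        "-t", f"{end_s - start_s:.3f}",     # clip duration (seconds)
        "-c:v", "libx264", "-an",           # re-encode video, drop audio
        str(dst),
    ]


def decode_command(clip, frame_dir, fps=1.0):
    """Build an ffmpeg command that dumps frames as numbered JPEG files.

    The frame naming pattern and fps default are assumptions.
    """
    pattern = Path(frame_dir) / "frame_%05d.jpg"
    return ["ffmpeg", "-y", "-i", str(clip), "-vf", f"fps={fps}", str(pattern)]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` once `ffmpeg` is installed and the output directories exist. Printing `shlex.join(cmd)` first is a cheap way to inspect a command before running it on a whole dataset.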

We will share extracted features in the following table.

| Dataset | Multimodal Features |
|---|---|
| SQA3D | Video Frames, Depth Map, Surface Normals |
| MUSIC-AVQA | Video Frames, Optical Flow, Depth Map, Surface Normals |
| NExT-QA | Video Frames, Depth Map, Optical Flow, Surface Normals |
| Touch-QA | Video Frames, Surface Normals |
| Thermal-QA | Video Frames, Depth Map |
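Before launching training, it can help to check that every feature type listed in the table above is actually present on disk. The sketch below assumes a hypothetical layout of `<root>/<dataset>/<feature>/`; the sub-directory names (`frames`, `depth`, `flow`, `normal`) are illustrative and are not the names used by the CREMA dataset configs.

```python
from pathlib import Path

# Feature types per dataset, copied from the table above.
# The directory names are hypothetical; adapt them to your own layout.
FEATURES = {
    "SQA3D": ["frames", "depth", "normal"],
    "MUSIC-AVQA": ["frames", "flow", "depth", "normal"],
    "NExT-QA": ["frames", "depth", "flow", "normal"],
    "Touch-QA": ["frames", "normal"],
    "Thermal-QA": ["frames", "depth"],
}


def missing_feature_dirs(root, dataset):
    """Return the expected feature sub-directories that are absent or empty."""
    base = Path(root) / dataset
    missing = []
    for name in FEATURES[dataset]:
        feature_dir = base / name
        # A directory counts as present only if it exists and holds a file.
        if not feature_dir.is_dir() or not any(feature_dir.iterdir()):
            missing.append(name)
    return missing
```

For example, `missing_feature_dirs("/data/crema", "Touch-QA")` returns an empty list only when both feature folders exist and are non-empty (the root path here is a placeholder).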

We pre-train MMQA in our CREMA framework with public modality-specific datasets.

We provide CREMA training and inference script examples as follows.

`sh run_scripts/crema/finetune/sqa3d.sh`

`sh run_scripts/crema/inference/sqa3d.sh`

We thank the developers of LAVIS, BLIP-2, CLIP, and X-InstructBLIP for their public code release.

Please cite our paper if you use our models in your work:

@article{yu2024crema,
  title={CREMA: Generalizable and Efficient Video-Language Reasoning via Multimodal Modular Fusion},
  author={Yu, Shoubin and Yoon, Jaehong and Bansal, Mohit},
  journal={arXiv preprint arXiv:2402.05889},
  year={2024}
}