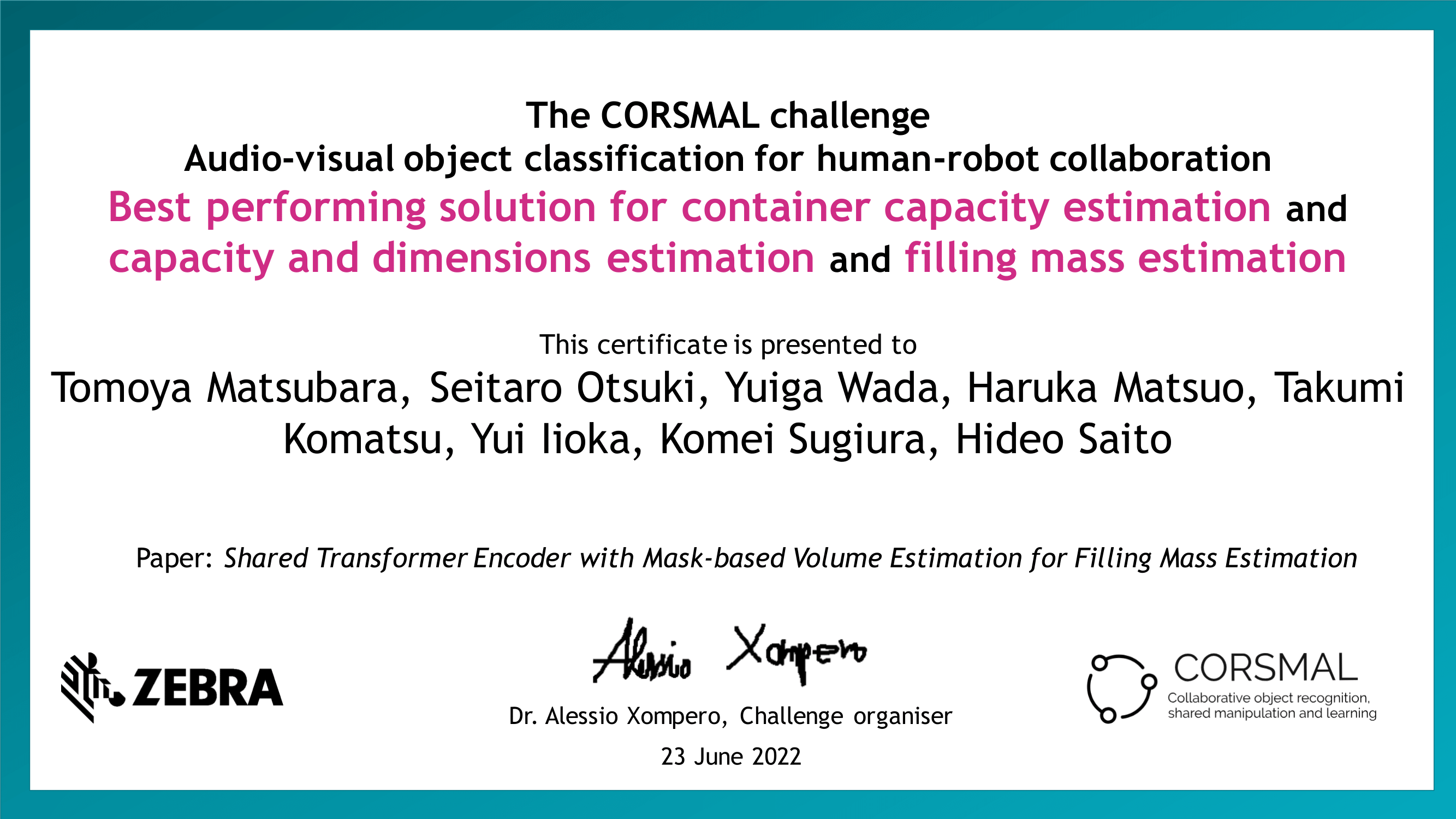

- Team KEIO-ICS repository for the CORSMAL Challenge.

- [paper]

- Shared Transformer Encoder with Mask-Based 3d Model Estimation for Container Mass Estimation

- *Tomoya Matsubara

- *Seitaro Otsuki

- *Yuiga Wada

- Haruka Matsuo

- Takumi Komatsu

- Yui Iioka

- Komei Sugiura

- Hideo Saito

(* Equal contribution)

Requirements

- python=3.7.11
- numpy=1.21.2
- scipy=1.6.2
- pandas=1.3.5
- matplotlib=3.5.0
- opencv-python=4.5.5.62
- torch=1.8.2
- torchaudio=0.8.2
- torchvision=0.9.2
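As a quick sanity check before installing anything by hand, the sketch below compares the installed package versions with the pins listed above. It is not part of the repository. The helper names are hypothetical, `PINNED` simply copies the list, and the lookup uses `importlib.metadata`, which is only in the standard library from Python 3.8 (on Python 3.7 it assumes the `importlib_metadata` backport is installed).

```python
"""Hypothetical helper: compare installed package versions with the README pins."""
try:
    from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
except ImportError:  # Python 3.7: assumes the importlib_metadata backport
    from importlib_metadata import PackageNotFoundError, version

# Pins copied from the requirements list (Python itself is only printed, not compared).
PINNED = {
    "numpy": "1.21.2",
    "scipy": "1.6.2",
    "pandas": "1.3.5",
    "matplotlib": "3.5.0",
    "opencv-python": "4.5.5.62",
    "torch": "1.8.2",
    "torchaudio": "0.8.2",
    "torchvision": "0.9.2",
}


def find_mismatches(installed, pinned):
    """Return {package: (installed_or_None, expected)} for packages that differ.

    A local version suffix such as "+cu111" is ignored, so "1.8.2+cu111"
    matches a pin of "1.8.2".
    """
    mismatches = {}
    for name, expected in pinned.items():
        found = installed.get(name)
        base = found.split("+", 1)[0] if found is not None else None
        if base != expected:
            mismatches[name] = (found, expected)
    return mismatches


def installed_versions(names):
    """Look up the installed version of each distribution, or None if missing."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    import sys

    print("python", sys.version.split()[0], "(expected 3.7.11)")
    mismatches = find_mismatches(installed_versions(PINNED), PINNED)
    for name, (found, expected) in mismatches.items():
        print(f"{name}: found {found}, expected {expected}")
```

If OpenCV was installed through conda rather than pip, it may not be registered under the `opencv-python` distribution name and can show up as missing even when `import cv2` works.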

Clone the repository.

git clone https://github.com/YuigaWada/CORSMAL2021.git

Create a new conda environment and activate it.

conda env create -f env.yml && conda activate corsmal

The command above may fail depending on your environment. If it does, install the missing libraries manually:

- If you do not have torch, torchaudio, or torchvision installed, install them with pip. See https://pytorch.org/ and check your hardware requirements.
- To install pandas, run `conda install pandas` or `conda install pandas=1.3.5=py37h8c16a72_0`.
- To install scipy, run `conda install scipy` or `conda install scipy=1.6.2=py37had2a1c9_1`.
- To install matplotlib, run `conda install matplotlib` or `conda install matplotlib=3.5.0=py37h06a4308_0`.
- To install opencv-python, run `conda install opencv-python` or `pip install opencv-python==4.5.5.62`.

To output the estimations as a .csv file, run the following command:

python run.py [test_data_path] [output_path] -m12 [task1and2_model] -m4 [task4_model]

For example,

python run.py ./test ./output.csv -m12 task1and2.pt -m4 task4.pt
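If you drive inference from another Python script, a thin wrapper can assemble the same command. The sketch below is hypothetical (`build_run_command` and `run_inference` are not part of the repository); it only reproduces the argument order shown above and knows nothing about any other options `run.py` may accept.

```python
import subprocess
import sys


def build_run_command(test_data_path, output_path, task1and2_model, task4_model):
    """Assemble the run.py invocation documented in this README."""
    return [
        sys.executable,  # use the interpreter of the active (e.g. corsmal) environment
        "run.py",
        str(test_data_path),
        str(output_path),
        "-m12", str(task1and2_model),
        "-m4", str(task4_model),
    ]


def run_inference(test_data_path, output_path, task1and2_model, task4_model):
    """Run run.py from the repository root; raises CalledProcessError on failure."""
    cmd = build_run_command(test_data_path, output_path, task1and2_model, task4_model)
    subprocess.run(cmd, check=True)
```

Called from the repository root, `run_inference("./test", "./output.csv", "task1and2.pt", "task4.pt")` would mirror the example command. Using `sys.executable` rather than a bare `python` avoids accidentally picking up an interpreter outside the conda environment.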

The pre-trained models are available here.

The directory of test data should be organized as:

- test
  - audio
  - view1
    - rgb
    - calib
  - view2
    - rgb
    - calib
  - view3
    - rgb
    - calib
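Before running inference, it can help to confirm that the test directory matches this layout. The sketch below is a hypothetical helper, not part of the repository; it only checks that the expected subdirectories exist and does not inspect file names or contents inside them.

```python
from pathlib import Path

# Expected layout of the test directory, as described above.
VIEWS = ("view1", "view2", "view3")
VIEW_SUBDIRS = ("rgb", "calib")


def missing_test_dirs(root):
    """Return the expected subdirectories that are missing under `root`."""
    root = Path(root)
    expected = [root / "audio"]
    for view in VIEWS:
        # Each camera view needs both an rgb and a calib folder.
        expected.extend(root / view / sub for sub in VIEW_SUBDIRS)
    return [p for p in expected if not p.is_dir()]
```

For example, `missing_test_dirs("./test")` returns an empty list when all seven folders are present.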

To train the model, organize your training data as:

- train
  - audio
  - view1
  - view2
  - view3
  - ccm_train_annotation.json

To train the model for Tasks 1 and 2, run:

python train.py [train_data_path] --task1and2

For example,

python train.py ./train --task1and2

To train the model for Task 4, run:

python train.py [train_data_path] --task4

For example,

python train.py ./train --task4
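The training layout check and both training runs can be combined in one script. The sketch below is a hypothetical wrapper, not part of the repository: it verifies the top-level entries listed above, then calls `train.py` once with `--task1and2` and once with `--task4`, one after the other, stopping at the first failure.

```python
import subprocess
import sys
from pathlib import Path

# Top-level entries expected in the training directory (see the layout above).
TRAIN_DIRS = ("audio", "view1", "view2", "view3")
ANNOTATION_FILE = "ccm_train_annotation.json"
TRAIN_FLAGS = ("--task1and2", "--task4")


def check_train_layout(root):
    """Return a list of missing entries under the training directory."""
    root = Path(root)
    missing = [name for name in TRAIN_DIRS if not (root / name).is_dir()]
    if not (root / ANNOTATION_FILE).is_file():
        missing.append(ANNOTATION_FILE)
    return missing


def train_commands(train_data_path):
    """One train.py invocation per trainable task group (Tasks 1 and 2, then Task 4)."""
    return [
        [sys.executable, "train.py", str(train_data_path), flag]
        for flag in TRAIN_FLAGS
    ]


def train_all(train_data_path):
    """Validate the layout, then run both trainings sequentially."""
    missing = check_train_layout(train_data_path)
    if missing:
        raise FileNotFoundError(f"missing in {train_data_path}: {missing}")
    for cmd in train_commands(train_data_path):
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

Calling `train_all("./train")` from the repository root corresponds to the two example commands above.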

Tasks 3 and 5 require no training, because the algorithms used for them are not based on deep learning.

Hardware

- CentOS Linux release 7.7.1908 (server machine)

- Kernel: 3.10.0-1062.el7.x86_64

- GPU: (4) GeForce GTX 1080 Ti

- GPU RAM: 48 GB

- CPU: (2) Xeon(R) Silver 4112 @ 2.60GHz

- RAM: 64 GB

- Cores: 24

Libraries

- Anaconda 3 (conda 4.7.12)

- CUDA 7-10.2

- Miniconda 4.7.12

Hardware

- Ubuntu 20.04LTS

- GPU: GeForce RTX 3080 laptop

- GPU RAM: 16GB GDDR6

- CPU: Core i9 11980HK

- RAM: 64 GB

Libraries

- CUDA 11.1

- Miniconda 4.7.12 (pyenv)

We installed PyTorch for CUDA 11.1 with pip:

- torch==1.8.2+cu111

- torchaudio==0.8.2

- torchvision==0.9.2+cu111
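After installing the `+cu111` wheels, a short check can confirm that PyTorch was built against CUDA 11.1 and can see the GPU. The helper names below are hypothetical; `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()` are standard PyTorch attributes.

```python
def split_local_version(ver):
    """Split a version string into (public, local), e.g. "1.8.2+cu111" -> ("1.8.2", "cu111")."""
    public, _, local = str(ver).partition("+")
    return public, local or None


def describe_torch_build():
    """Summarize the installed torch build, or return None if torch is not importable."""
    try:
        import torch
    except ImportError:
        return None
    public, local = split_local_version(torch.__version__)
    return {
        "version": public,
        "local_tag": local,                     # e.g. "cu111" for the CUDA 11.1 wheels
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
```

On a setup like the laptop above, `describe_torch_build()` should report a `local_tag` of `cu111`; if `cuda_available` is `False`, the driver or wheel selection is worth rechecking.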

- LoDE (Creative Commons Attribution-NonCommercial 4.0)
  - We use LoDE with some modifications to its formulas.

This work is licensed under the MIT License. To view a copy of this license, see LICENSE.