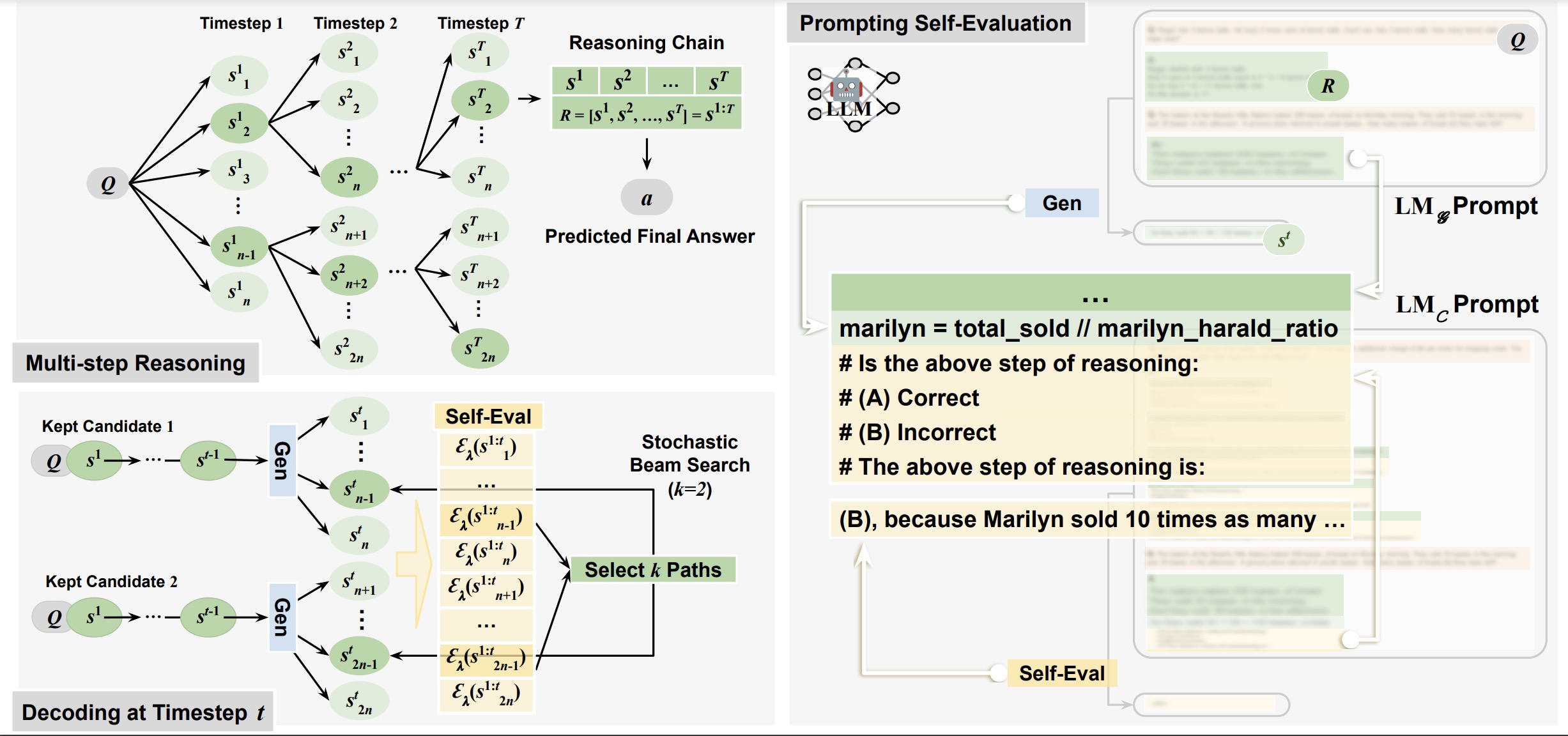

This repository contains code and analysis for the paper: Decomposition Enhances Reasoning via Self-Evaluation Guided Decoding. The figure above shows the framework of our proposed method (left) together with a prompting example of self-evaluation (right).

09/2023: Llama-2 is supported. Please check the example scripts for details.

07/2023: Our Guided Decoding algorithm is supported by LLM-Reasoners. You can use the library to compare our method with other cutting-edge reasoning algorithms.

05/2023: First release of the SelfEval Guided Decoding pipeline and preprint.

openai 0.27.1

matplotlib 3.3.4

numpy 1.20.1

ipdb 0.13.9

tqdm 4.64.1
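To confirm that an environment matches the versions listed above, a small check like the following can help. This is only a sketch: the helper name `check_versions` and the `PINNED` dictionary are illustrative and not part of this repository.

```python
from importlib import metadata

# Versions copied from the requirements listed above.
PINNED = {
    "openai": "0.27.1",
    "matplotlib": "3.3.4",
    "numpy": "1.20.1",
    "ipdb": "0.13.9",
    "tqdm": "4.64.1",
}


def check_versions(pinned=PINNED):
    """Return {package: (expected, found)} for every package that deviates.

    `found` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, expected in pinned.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None
        if found != expected:
            mismatches[name] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for name, (expected, found) in check_versions().items():
        print(f"{name}: expected {expected}, found {found or 'not installed'}")
```

An empty result means every listed package is installed at the listed version.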

We provide example formats of the input dataset in the folder data.

For other datasets, please check the details of prompt construction, where we show the specific attributes each data point should contain.

In the current version of our main method (in generate_code.py), we adopt Codex as our backend LLM.

However, OpenAI has discontinued public access to this model.

To address this, you can either (1) apply for research access to Codex (code-davinci-002) to run our approach, or (2) use text-davinci-003 as an alternative backbone.

We will also release results obtained with the text-davinci models for reference.
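The choice between the two backbones can be sketched as below, using the `openai.Completion.create` call available in openai 0.27.1. The helper names `build_completion_request` and `complete`, along with the default `max_tokens` and `temperature` values, are assumptions for illustration; they do not reflect the actual interface of generate_code.py.

```python
def build_completion_request(prompt, use_codex=False, max_tokens=256, temperature=0.0):
    """Build keyword arguments for a completion call.

    use_codex=True selects code-davinci-002 (requires research access);
    otherwise the alternative backbone text-davinci-003 is used.
    The defaults for max_tokens and temperature are illustrative only.
    """
    model = "code-davinci-002" if use_codex else "text-davinci-003"
    return {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def complete(prompt, **kwargs):
    """Send the request and return the generated text (needs network access)."""
    # Imported lazily so the request builder can be used without the package.
    # The library reads the API key from the OPENAI_API_KEY environment variable.
    import openai

    response = openai.Completion.create(**build_completion_request(prompt, **kwargs))
    return response["choices"][0]["text"]
```

Keeping the request construction separate from the network call makes it easy to inspect which model a run will use before any tokens are spent.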

We show examples of how to run our method on different datasets in scripts. Specifically, scripts with names starting with run_generation_ are for running our methods with either PAL or CoT as basic prompting methods.

Please find in src/execute_and_evaluate how to extract and evaluate the outputs of different methods on different datasets.

@misc{xie2023decomposition,

title={Decomposition Enhances Reasoning via Self-Evaluation Guided Decoding},

author={Yuxi Xie and Kenji Kawaguchi and Yiran Zhao and Xu Zhao and Min-Yen Kan and Junxian He and Qizhe Xie},

year={2023},

eprint={2305.00633},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

This repository is adapted from the code of the works PaL: Program-Aided Language Model and Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks.