CapsNet-Tensorflow

A TensorFlow implementation of CapsNet, based on Geoffrey Hinton's paper Dynamic Routing Between Capsules

Status:

- The MNIST version of CapsNet is finished. The current test accuracy is 99.57%; see details in the Results section

Daily task

- multi-GPU support

- Improving the reusability of `capsLayer.py`: all you need is to import `capsLayer.fully_connected` or `capsLayer.conv2d` in your code

Others

- Here (Zhihu, in Chinese) is an answer explaining my understanding of the paper. It may be helpful in understanding the code.

- If you find any problems, please let me know. I will try my best to 'kill' them ASAP.

Requirements

- Python

- NumPy

- TensorFlow (I'm using 1.3.0; not yet tested with older versions)

- tqdm (for displaying training progress info)

- scipy (for saving images)

Usage

Step 1.

Clone this repository with git.

$ git clone https://github.com/naturomics/CapsNet-Tensorflow.git

$ cd CapsNet-Tensorflow

Step 2.

Download the MNIST dataset, then move and extract it into the `data/mnist` directory. (Be careful: if backslashes appear around the curly braces when you copy the `wget` command to your terminal, remove them.)

$ mkdir -p data/mnist

$ wget -c -P data/mnist http://yann.lecun.com/exdb/mnist/{train-images-idx3-ubyte.gz,train-labels-idx1-ubyte.gz,t10k-images-idx3-ubyte.gz,t10k-labels-idx1-ubyte.gz}

$ gunzip data/mnist/*.gz
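To confirm that the extraction worked, the header of each file can be inspected. The following is a minimal sketch, assuming the files are in the standard MNIST IDX format (big-endian header, magic number 2051 for image files and 2049 for label files). The helper names `read_idx_header` and `check_mnist_file` are hypothetical and are not part of this repository.

```python
import struct

# Magic numbers defined by the IDX format used for the MNIST files.
IDX_IMAGES_MAGIC = 2051  # idx3-ubyte: images
IDX_LABELS_MAGIC = 2049  # idx1-ubyte: labels


def read_idx_header(raw):
    """Parse the header of an IDX file from its first bytes.

    Returns (magic, dims), where dims is a tuple of dimension sizes.
    """
    # The magic number is a big-endian uint32; its lowest byte is the
    # number of dimensions, each stored as a further big-endian uint32.
    magic, = struct.unpack(">I", raw[:4])
    ndim = magic & 0xFF
    dims = struct.unpack(">" + "I" * ndim, raw[4:4 + 4 * ndim])
    return magic, dims


def check_mnist_file(path, expected_magic):
    """Hypothetical helper: verify one extracted file and return its dimensions."""
    with open(path, "rb") as f:
        raw = f.read(16)  # enough for the largest (image) header
    magic, dims = read_idx_header(raw)
    if magic != expected_magic:
        raise ValueError("%s: unexpected magic number %d" % (path, magic))
    return dims
```

For example, `check_mnist_file("data/mnist/train-images-idx3-ubyte", IDX_IMAGES_MAGIC)` should return `(60000, 28, 28)` for the standard training images file.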

Step 3. Start the training:

$ pip install tqdm # install it if you haven't installed yet

$ python main.py

The default batch size is 128 and the default number of epochs is 50. You may need to modify the `config.py` file or use command line parameters to suit your case. In my case, I run `python main.py --test_sum_freq=200 --batch_size=48` for my 4G GPU (~10 min/epoch).
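The way command line parameters override defaults can be illustrated with a small sketch. It uses Python's standard `argparse` module purely for illustration; it is not the code in `config.py`, which may define its options differently. The flag name `--epoch` and the default value for `--test_sum_freq` are assumptions; only the batch size default (128), the epoch default (50), and the two flags in the command above come from this README.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(description="Sketch of training options")
    # Defaults stated in this README.
    parser.add_argument("--batch_size", type=int, default=128)
    parser.add_argument("--epoch", type=int, default=50)  # flag name is assumed
    # Hypothetical default: the README only shows this flag being set to 200.
    parser.add_argument("--test_sum_freq", type=int, default=500)
    return parser


def parse_config(argv=None):
    """Return the options; flags given in argv override the defaults."""
    return build_parser().parse_args(argv)


# Same flags as in the command above; --epoch keeps its default.
cfg = parse_config(["--test_sum_freq=200", "--batch_size=48"])
print(cfg.batch_size, cfg.epoch, cfg.test_sum_freq)  # prints: 48 50 200
```

Any option not given on the command line keeps its default, so a smaller GPU only needs the flags that actually change.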

Results

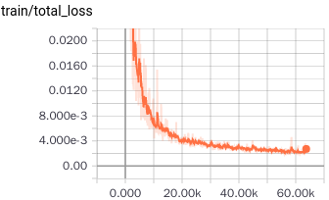

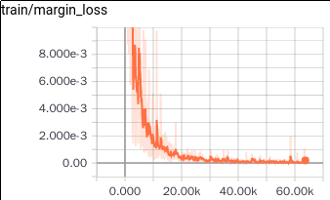

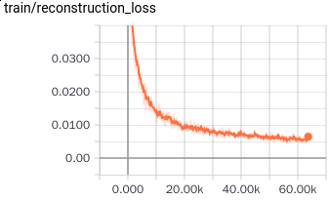

- training loss

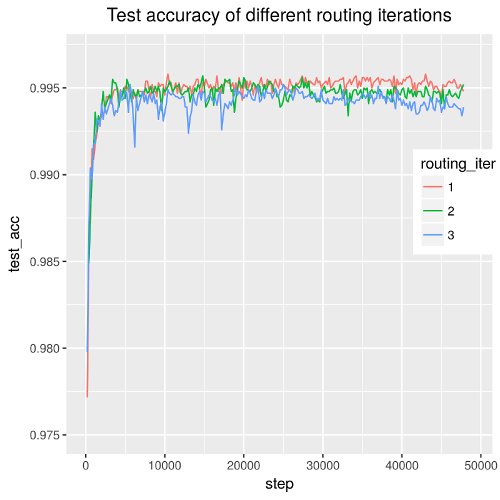

- test error in % (using reconstruction)

| Routing iteration | 1 | 2 | 3 |
|---|---|---|---|
| Test error (%) | 0.43 | 0.44 | 0.49 |
| Paper | 0.29 | - | 0.25 |

My simple comments on capsules

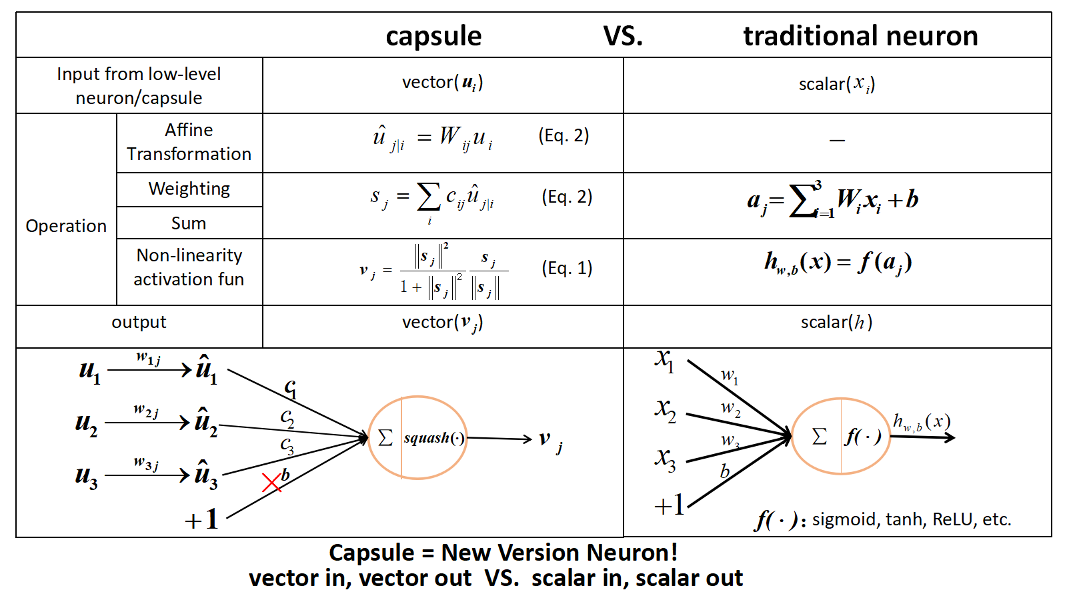

- A new kind of neural unit (vector in, vector out; not scalar in, scalar out)

- The routing algorithm is similar to the attention mechanism

- Anyway, work with great potential, with a lot to be built upon
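To make the "vector in, vector out" idea and the attention-like routing concrete, here is a minimal NumPy sketch of the squash non-linearity and routing-by-agreement as described in the paper. It is an illustration for a single example, not the TensorFlow code in `capsLayer.py`; the function names, the tensor layout `[num_in, num_out, dim_out]`, and the small `eps` term are assumptions made for this sketch.

```python
import numpy as np


def squash(s, axis=-1, eps=1e-9):
    """Shrink short vectors toward zero and long vectors toward unit length."""
    sq_norm = np.sum(np.square(s), axis=axis, keepdims=True)
    # ||s||^2 / (1 + ||s||^2) * s / ||s||; eps avoids division by zero.
    scale = sq_norm / (1.0 + sq_norm) / np.sqrt(sq_norm + eps)
    return scale * s


def softmax(x, axis):
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))
    return e / np.sum(e, axis=axis, keepdims=True)


def dynamic_routing(u_hat, num_iters=3):
    """Route prediction vectors u_hat of shape [num_in, num_out, dim_out].

    Returns the output capsules v, shape [num_out, dim_out], and the
    coupling coefficients c, shape [num_in, num_out].
    """
    num_in, num_out, _ = u_hat.shape
    b = np.zeros((num_in, num_out))  # routing logits; zero means uniform coupling
    for _ in range(num_iters):
        c = softmax(b, axis=1)  # each input capsule spreads itself over the outputs
        s = np.sum(c[:, :, None] * u_hat, axis=0)  # weighted sum per output capsule
        v = squash(s)  # vector output with length in [0, 1)
        b = b + np.sum(u_hat * v[None, :, :], axis=-1)  # agreement: u_hat . v
    return v, c


rng = np.random.RandomState(0)
u_hat = rng.randn(6, 3, 4)  # 6 input capsules, 3 output capsules of dimension 4
v, c = dynamic_routing(u_hat)
print(v.shape, c.shape)  # prints: (3, 4) (6, 3)
```

The coupling coefficients `c` are a softmax over the output capsules for each input capsule, which is where the resemblance to attention weights comes from; unlike typical attention, they are refined iteratively from the agreement between predictions and outputs.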

TODO:

- Finish the MNIST version of capsNet (progress: 90%)

- Do some different experiments for capsNet:

  - Try using other datasets

  - Adjusting the model structure

- There is another new paper about capsules (submitted to ICLR 2018), a follow-up of the CapsNet paper.


Reference

- XifengGuo/CapsNet-Keras: referred to for code optimization