- Code demo for Chapter 8, Reinforcement Learning and Control.

- Methods: Approximate Dynamic Programming, Model Predictive Control

PyTorch 1.4.0

- To train an agent, follow the example code in `main.py` and tune the parameters. Change the `METHODS` variable to select which methods are compared in the simulation stage.
- Simulations are executed automatically after training finishes. To start a simulation separately from trained results and compare the performance of ADP and MPC, run `simulation.py`. Change the `LOG_DIR` variable to set which results are loaded.
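The role of `METHODS` can be illustrated with a toy comparison loop. The sketch below is not taken from `simulation.py`: the scalar system, the controller functions, the fixed ADP gain, and the string values in `METHODS` are all assumptions made for illustration. Each label in `METHODS` selects one controller, which is rolled out from the same initial state so the accumulated costs can be compared.

```python
# Toy comparison loop; every name and value here is hypothetical.
A, B, Q, R = 1.0, 1.0, 1.0, 1.0  # scalar system x' = A*x + B*u, stage cost Q*x^2 + R*u^2

METHODS = ["ADP", "MPC"]  # assumed format: one label per controller to compare


def adp_policy(x):
    """Stand-in for a trained policy: fixed linear feedback u = -k*x."""
    k = 0.618  # assumed gain, close to the infinite-horizon optimum for this system
    return -k * x


def mpc_policy(x, horizon=5):
    """Finite-horizon LQ control via backward Riccati recursion; returns the first input."""
    p = Q  # terminal cost weight
    k = 0.0
    for _ in range(horizon):
        k = A * B * p / (R + B * B * p)
        p = Q + A * A * p - A * B * p * k
    return -k * x


CONTROLLERS = {"ADP": adp_policy, "MPC": mpc_policy}


def simulate(method, x0=1.0, steps=50):
    """Roll out one controller and return the accumulated cost."""
    policy = CONTROLLERS[method]
    x, cost = x0, 0.0
    for _ in range(steps):
        u = policy(x)
        cost += Q * x * x + R * u * u
        x = A * x + B * u
    return cost


results = {m: simulate(m) for m in METHODS}
for name, cost in results.items():
    print(f"{name}: total cost = {cost:.4f}")
```

Removing a label from `METHODS` drops that controller from the comparison without touching the rollout code, which is the kind of switch the variable is described as providing.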

Approximate-Dynamic-Programming

│ main.py - Main script

│ plot.py - To plot comparison between ADP and MPC

│ train.py - To execute PEV and PIM

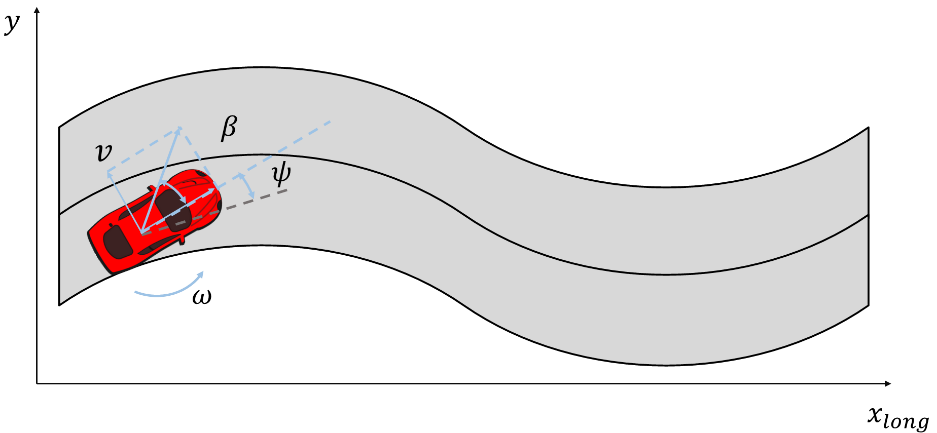

│ dynamics.py - Vehicle model

│ network.py - Network structure

│ solver.py - Solvers for MPC using CasADi

│ config.py - Configurations about training and vehicle model

│ simulation.py - Run experiment to compare ADP and MPC

│ readme.md

│ requirements.txt

│

├─Results_dir - store trained results

│

└─Simulation_dir - store simulation data and plots
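The tree lists `train.py` as executing PEV (policy evaluation) and PIM (policy improvement). How these two steps alternate can be sketched on a scalar linear-quadratic problem, where both have closed forms. This is a simplified stand-in rather than the repository's implementation, which presumably trains the networks from `network.py` on the vehicle model; the system parameters, function names, and initial gain below are assumptions.

```python
# Policy iteration on a scalar LQ problem (illustrative; all names are hypothetical).
# System: x' = a*x + b*u, stage cost: q*x^2 + r*u^2,
# policy: u = -k*x, value function: V(x) = p*x^2.


def policy_evaluation(k, a, b, q, r):
    """PEV: solve p = q + r*k^2 + p*(a - b*k)^2 for the current gain k."""
    c = a - b * k  # closed-loop factor
    if abs(c) >= 1.0:
        raise ValueError("gain k is not stabilizing; the value is unbounded")
    return (q + r * k * k) / (1.0 - c * c)


def policy_improvement(p, a, b, r):
    """PIM: minimize r*u^2 + p*(a*x + b*u)^2 over u, which gives u = -k*x."""
    return a * b * p / (r + b * b * p)


def train(k0, a=1.0, b=1.0, q=1.0, r=1.0, iterations=20):
    """Alternate PEV and PIM, starting from a stabilizing gain k0."""
    k = k0
    p = policy_evaluation(k, a, b, q, r)
    for _ in range(iterations):
        k = policy_improvement(p, a, b, r)
        p = policy_evaluation(k, a, b, q, r)
    return k, p


k, p = train(k0=0.5)
print(f"gain k = {k:.6f}, value weight p = {p:.6f}")
```

For these parameters the iteration settles at the solution of the scalar Riccati equation, which reduces to p² = p + 1. With neural networks, each closed-form step would be replaced by gradient updates on a critic loss and an actor loss.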

Reinforcement Learning and Control. Tsinghua University Lecture Notes, 2020.

CasADi: a software framework for nonlinear optimization and optimal control