This repository contains the implementation of the paper:

Deep Implicit Moving Least-Squares Functions for 3D Reconstruction [arXiv]

Shi-Lin Liu, Hao-Xiang Guo, Hao Pan, Pengshuai Wang, Xin Tong, Yang Liu.

If you find our code or paper useful, please consider citing:

```
@inproceedings{Liu2021MLS,
  author = {Shi-Lin Liu and Hao-Xiang Guo and Hao Pan and Pengshuai Wang and Xin Tong and Yang Liu},
  title = {Deep Implicit Moving Least-Squares Functions for 3D Reconstruction},
  booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year = {2021}
}
```

First, make sure that all dependencies are in place. The simplest way to do so is to use Anaconda.

You can create and activate an Anaconda environment called `deep_mls` using:

```
conda env create -f environment.yml
conda activate deep_mls
```

Next, install a few customized TensorFlow modules:

O-CNN Module

O-CNN is an octree-based convolution module. Take the following steps to install it:

```
cd Octree && git clone https://github.com/microsoft/O-CNN/
cd O-CNN/octree/external && git clone --recursive https://github.com/wang-ps/octree-ext.git
cd .. && mkdir build && cd build
cmake .. && cmake --build . --config Release
export PATH=`pwd`:$PATH
cd ../../tensorflow/libs && python build.py --cuda /usr/local/cuda-10.0
cp libocnn.so ../../../ocnn-tf/libs
```
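As we understand the O-CNN paper, the octree is stored level by level, with nodes sorted by "shuffled" (Morton, or Z-order) keys that interleave the bits of a cell's integer coordinates. The sketch below only illustrates that idea and is not O-CNN's API. It assumes points normalized to [-1, 1]^3, and the helper names and the x-y-z bit order are our own. It quantizes a point to a cell at a given depth and builds its key, so a node's parent is found by dropping three bits.

```python
from typing import Sequence


def morton_key(x: int, y: int, z: int, depth: int) -> int:
    """Interleave the low `depth` bits of cell coordinates (x, y, z) into one Z-order key.

    Bit i of x, y and z is placed at positions 3*i + 2, 3*i + 1 and 3*i respectively
    (this x-y-z ordering is an illustrative choice).
    """
    key = 0
    for i in range(depth):
        key |= ((x >> i) & 1) << (3 * i + 2)
        key |= ((y >> i) & 1) << (3 * i + 1)
        key |= ((z >> i) & 1) << (3 * i)
    return key


def point_to_key(p: Sequence[float], depth: int) -> int:
    """Quantize a point in [-1, 1]^3 to a cell of the 2^depth grid and return its key."""
    res = 1 << depth
    cell = []
    for c in p:
        idx = int((c + 1.0) * 0.5 * res)        # map [-1, 1] onto [0, res]
        cell.append(min(max(idx, 0), res - 1))  # clamp so c == 1 falls in the last cell
    return morton_key(cell[0], cell[1], cell[2], depth)


def parent_key(key: int) -> int:
    """Key of the enclosing octant one level up: drop the lowest 3 bits."""
    return key >> 3
```

For example, `point_to_key((1.0, 1.0, 1.0), 2)` is 63, the last of the 64 cells at depth 2. Sorting keys at one level keeps the eight children of each parent contiguous.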

Neighbor searching is used intensively in DeepMLS. For efficiency, we provide several customized neighbor-searching ops, which can be built as follows:

```
cd points3d-tf/points3d
bash build.sh
```

During this step, you may encounter an error like the following:

```
tensorflow_core/include/tensorflow/core/util/gpu_kernel_helper.h:22:10: fatal error: third_party/gpus/cuda/include/cuda_fp16.h: No such file or directory
#include "third_party/gpus/cuda/include/cuda_fp16.h"
```

To solve this, please refer to this issue.
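The header fix for this error only strips the `third_party/gpus/cuda/include/` prefix from three `#include` lines in two TensorFlow headers, so it can also be scripted. The following is a minimal sketch of our own, not part of the repository. `patch_headers` and `EDITS` are hypothetical names. We assume the include directory is the `tensorflow_core/include` folder inside `site-packages` that holds both headers. Each changed file is backed up as `<file>.bak`.

```python
import os
from typing import Dict, List

# Header (relative to the TensorFlow include dir) -> CUDA headers whose
# "third_party/gpus/cuda/include/" prefix must be removed.
EDITS: Dict[str, List[str]] = {
    os.path.join("tensorflow", "core", "util", "gpu_kernel_helper.h"): ["cuda_fp16.h"],
    os.path.join("tensorflow", "core", "util", "gpu_device_functions.h"): ["cuComplex.h", "cuda.h"],
}
PREFIX = "third_party/gpus/cuda/include/"


def patch_headers(include_dir: str) -> List[str]:
    """Apply the include-path edits under include_dir; return the files that changed.

    The unmodified text of every changed file is saved alongside it as <file>.bak.
    """
    changed = []
    for rel_path, names in EDITS.items():
        path = os.path.join(include_dir, rel_path)
        if not os.path.isfile(path):
            continue
        with open(path, encoding="utf-8") as f:
            original = f.read()
        patched = original
        for name in names:
            # Only the exact include lines listed in EDITS are rewritten.
            patched = patched.replace(f'#include "{PREFIX}{name}"', f'#include "{name}"')
        if patched != original:
            with open(path + ".bak", "w", encoding="utf-8") as f:
                f.write(original)
            with open(path, "w", encoding="utf-8") as f:
                f.write(patched)
            changed.append(path)
    return changed
```

Inside the `deep_mls` environment, `patch_headers(tf.sysconfig.get_include())` should target that folder (after `import tensorflow as tf`), assuming the call resolves to `tensorflow_core/include` for your TensorFlow version. Running the script a second time changes nothing.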

In short, you need to edit two headers in the TensorFlow installation. In `site-packages/tensorflow_core/include/tensorflow/core/util/gpu_kernel_helper.h`, change

```
#include "third_party/gpus/cuda/include/cuda_fp16.h"
```

to

```
#include "cuda_fp16.h"
```

and in `site-packages/tensorflow_core/include/tensorflow/core/util/gpu_device_functions.h`, change

```
#include "third_party/gpus/cuda/include/cuComplex.h"
#include "third_party/gpus/cuda/include/cuda.h"
```

to

```
#include "cuComplex.h"
#include "cuda.h"
```

We have modified PyMCubes to provide a more efficient marching cubes method for extracting the 0-isosurface defined by the MLS points.

To install it:

```
git clone https://github.com/Andy97/PyMCubes
cd PyMCubes && python setup.py install
```
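The "0-isosurface defined by the MLS points" is the zero level set of an implicit function built from oriented points, each with a position p_i, a normal n_i and a radius r_i. The sketch below evaluates this function at a query point using the common implicit MLS form f(x) = Σ_i w_i(x) ⟨x − p_i, n_i⟩ / Σ_i w_i(x). We assume Gaussian weights w_i(x) = exp(−‖x − p_i‖² / r_i²) with a per-point radius. `imls_value` is a hypothetical helper, and the actual DeepMLS code may weight or truncate neighbors differently.

```python
import math
from typing import List, Sequence

Vec3 = Sequence[float]


def imls_value(x: Vec3, points: List[Vec3], normals: List[Vec3], radii: List[float]) -> float:
    """Evaluate an implicit MLS function at query x.

    f(x) = sum_i w_i(x) * <x - p_i, n_i> / sum_i w_i(x),
    with (assumed) Gaussian weights w_i(x) = exp(-|x - p_i|^2 / r_i^2).
    Negative values lie behind the oriented points (inside), positive in front (outside).
    """
    num = 0.0
    den = 0.0
    for p, n, r in zip(points, normals, radii):
        d = [x[k] - p[k] for k in range(3)]
        dist2 = sum(c * c for c in d)
        w = math.exp(-dist2 / (r * r))
        num += w * sum(d[k] * n[k] for k in range(3))
        den += w
    # Far from every point all weights underflow to 0; the value is then undefined.
    return num / den if den > 0.0 else float("nan")
```

Sampling such a function on a regular grid and running marching cubes at level 0 yields the surface. The repository performs that step with the modified PyMCubes.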

We provide the processed tfrecords file, which can be used directly.

Our training data is now available (130 GB+ in total).

Please download all of the following zip parts before extracting:

ShapeNet_points_all_train.zip.001

ShapeNet_points_all_train.zip.002

ShapeNet_points_all_train.zip.003
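The `.001`/`.002`/`.003` suffixes suggest volumes of one split archive. If the volumes were produced by 7-Zip (an assumption), `7z x ShapeNet_points_all_train.zip.001` extracts them directly. Alternatively, if they are plain byte-level splits, joining them in order restores the original zip. The sketch below assumes the latter. `join_parts` and `extract` are hypothetical helpers built on the standard library.

```python
import shutil
import zipfile
from typing import List


def join_parts(parts: List[str], out_path: str) -> str:
    """Concatenate split-archive volumes in order (.001, .002, ...) into one file."""
    with open(out_path, "wb") as out:
        for part in sorted(parts):  # zero-padded suffixes sort correctly as strings
            with open(part, "rb") as f:
                shutil.copyfileobj(f, out)  # stream, so huge parts are not loaded into memory
    return out_path


def extract(zip_path: str, dest_dir: str) -> List[str]:
    """Extract the joined archive into dest_dir and return the member names."""
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)
        return zf.namelist()
```

For example, `extract(join_parts(["ShapeNet_points_all_train.zip.001", "ShapeNet_points_all_train.zip.002", "ShapeNet_points_all_train.zip.003"], "ShapeNet_points_all_train.zip"), ".")`. Joining needs roughly as much free disk space again as the parts themselves.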

After extraction, set the `train_data` field in the experiment config JSON file to the name of the extracted tfrecords file.
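This edit can also be made programmatically. The minimal sketch below assumes `train_data` is a top-level key of the config's JSON object holding a single path string. `set_train_data` is a hypothetical helper. Every other field is written back unchanged.

```python
import json


def set_train_data(config_path: str, tfrecords_path: str) -> dict:
    """Point the "train_data" field of an experiment config at a tfrecords file.

    Assumes "train_data" is a top-level key; all other fields are preserved.
    """
    with open(config_path, "r", encoding="utf-8") as f:
        config = json.load(f)
    if "train_data" not in config:
        raise KeyError(f'no top-level "train_data" field in {config_path}')
    config["train_data"] = tfrecords_path
    with open(config_path, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=2)
    return config
```

For example, `set_train_data("examples/Config_g2_bs32_1p_d6.json", "path/to/extracted.tfrecords")`, where the tfrecords path is a placeholder for the actual extracted file name.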

To build the dataset from your own data, follow these steps:

1. To obtain watertight meshes, we first preprocess each mesh following the preprocessing steps of Occupancy Networks.

2. Step 1 yields a watertight version of each model. We then use the OpenVDB library to compute the SDF values and gradients used for training. For details, please see here.
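Each training sample pairs a query point with its signed distance to the watertight surface and the SDF gradient. For a true SDF, this gradient has unit length and matches the outward surface normal on the surface. In the pipeline these values come from OpenVDB. Purely for illustration, the sketch below substitutes an analytic sphere and a central-difference gradient. These choices and the helper names `sphere_sdf` and `central_gradient` are our own, not the repository's.

```python
import math
from typing import Callable, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sphere_sdf(p: Sequence[float], center: Sequence[float] = (0.0, 0.0, 0.0), radius: float = 0.5) -> float:
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return math.dist(p, center) - radius


def central_gradient(sdf: Callable[[Sequence[float]], float], p: Sequence[float], h: float = 1e-4) -> Vec3:
    """Approximate grad(sdf)(p) with central differences of step h along each axis."""
    g = []
    for k in range(3):
        hi = list(p)
        hi[k] += h
        lo = list(p)
        lo[k] -= h
        g.append((sdf(hi) - sdf(lo)) / (2.0 * h))
    return (g[0], g[1], g[2])
```

At the surface point (0.3, 0.4, 0.0) of the default sphere, the gradient is close to the unit normal (0.6, 0.8, 0.0).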

Update (2021-09-30): The script for generating training tfrecords has been released; for details, please refer to Readme.md.

We provide pretrained models that can be used for inference:

```
# first, download the pretrained models
cd Pretrained && python download_models.py

# then, use either of the pretrained models for inference
cd .. && python DeepMLS_Generation.py Pretrained/Config_d7_1p_pretrained.json --test
```

The input for inference is defined here.

You can replace it with other point cloud files in `examples` or with your own data.

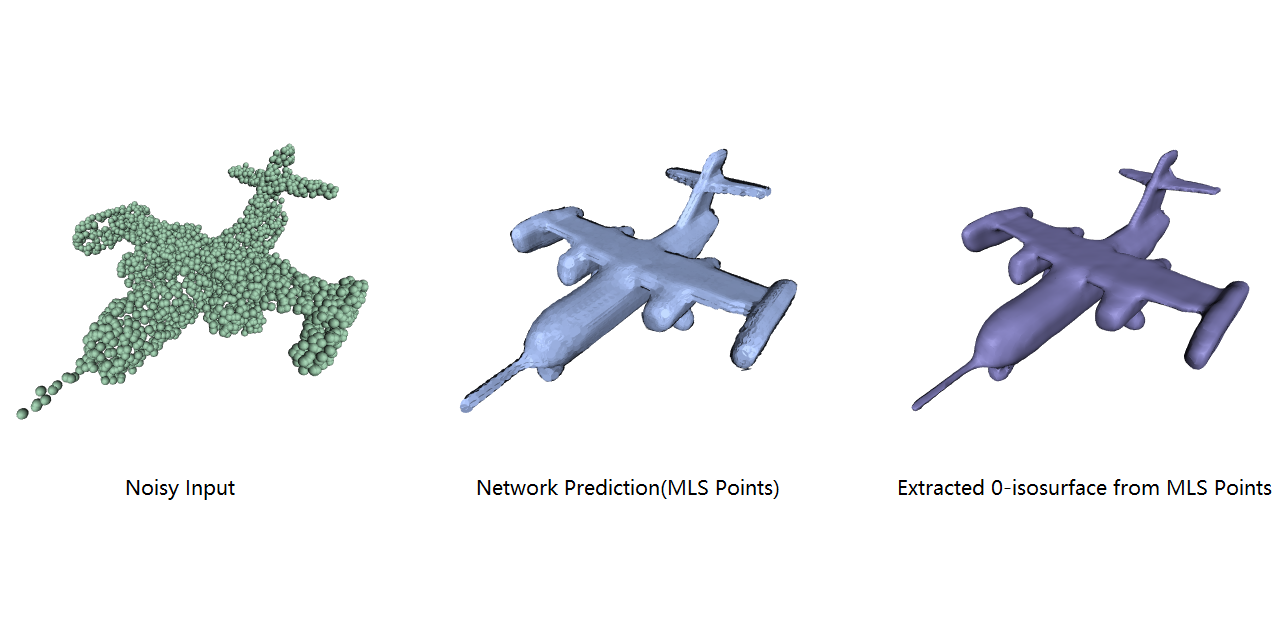

After inference, we have the network-predicted MLS points. The next step is to extract the surface:

```
python mls_marching_cubes.py --i examples/d0fa70e45dee680fa45b742ddc5add59.ply.xyz --o examples/d0fa70e45dee680fa45b742ddc5add59_mc.obj --scale
```

Our code supports both single- and multi-GPU training; for details, please refer to the config JSON file. For example, training can be launched with:

```
python DeepMLS_Generation.py examples/Config_g2_bs32_1p_d6.json
```

For evaluating results, ConvONet provides a helpful evaluation script. Please see here.
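For orientation, two metrics commonly reported with that script are Chamfer-L1 and F-score. The sketch below computes both with a brute-force nearest-neighbor search, following the definitions as we understand them from the Occupancy Networks / ConvONet evaluation code. The threshold `tau`, the point sampling and the helper names are assumptions. For reported numbers, use the official script.

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]


def nearest_distances(src: List[Point], dst: List[Point]) -> List[float]:
    """For each point in src, the Euclidean distance to its nearest point in dst (O(N*M))."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def chamfer_l1_and_fscore(pred: List[Point], gt: List[Point], tau: float = 0.01) -> Tuple[float, float]:
    """Chamfer-L1 and F-score between points sampled from a prediction and the ground truth.

    accuracy     = mean distance pred -> gt
    completeness = mean distance gt -> pred
    Chamfer-L1   = 0.5 * (accuracy + completeness)
    F-score      = harmonic mean of precision (pred within tau of gt)
                   and recall (gt within tau of pred)
    """
    d_pred = nearest_distances(pred, gt)
    d_gt = nearest_distances(gt, pred)
    accuracy = sum(d_pred) / len(d_pred)
    completeness = sum(d_gt) / len(d_gt)
    chamfer = 0.5 * (accuracy + completeness)
    precision = sum(d < tau for d in d_pred) / len(d_pred)
    recall = sum(d < tau for d in d_gt) / len(d_gt)
    fscore = 0.0 if precision + recall == 0 else 2 * precision * recall / (precision + recall)
    return chamfer, fscore
```

The brute-force search is only practical for small samples. A spatial index such as a KD-tree is the usual choice at evaluation scale.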