Pcc-tuning: Breaking the Contrastive Learning Ceiling in Semantic Textual Similarity

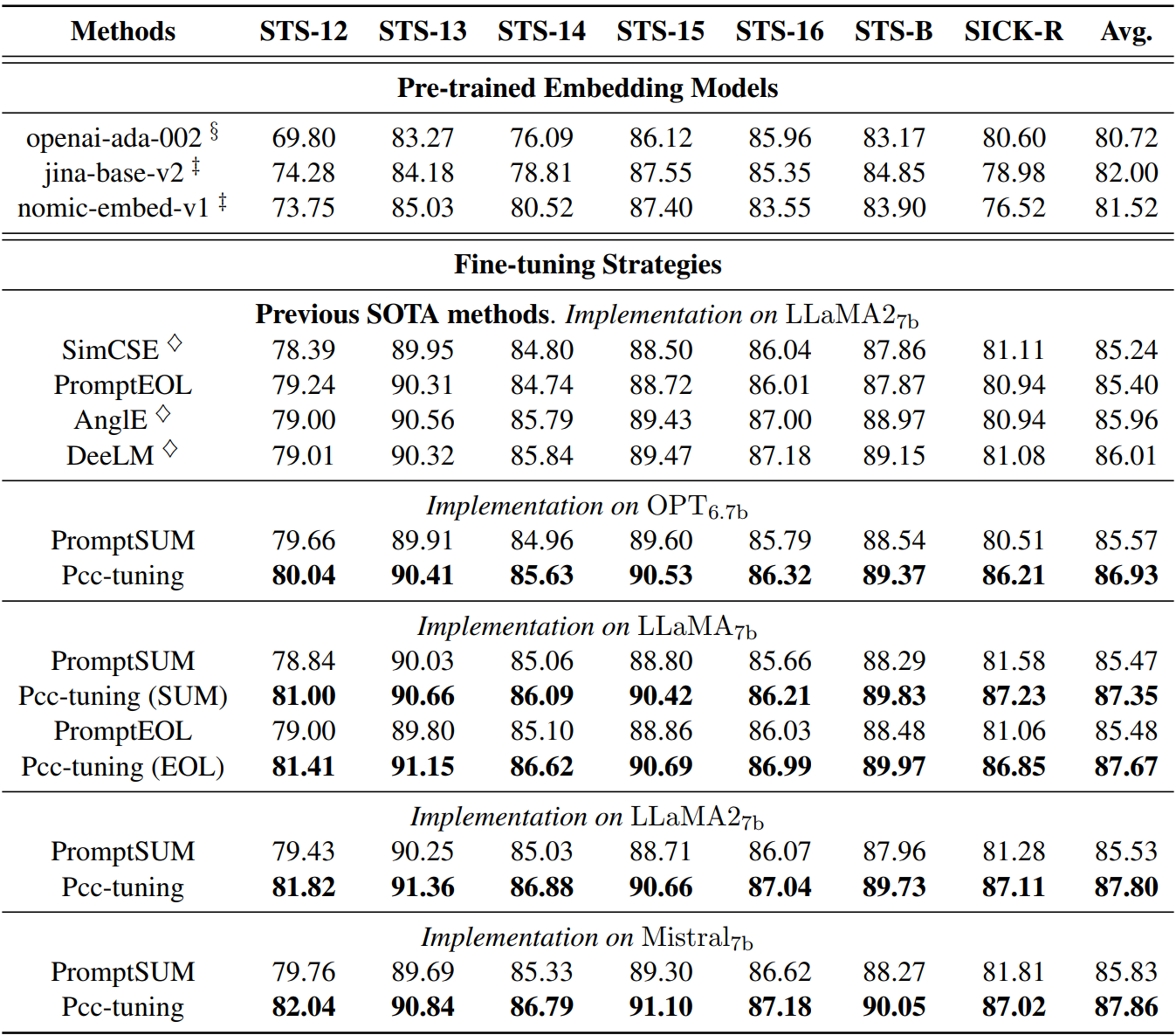

Our paper is the first to propose and substantiate a theoretical performance upper bound for contrastive learning methods. Pcc-tuning is also the first method to achieve Spearman’s correlation scores above 87 on standard STS tasks.
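For readers unfamiliar with the metric, the following is a minimal sketch of how a Spearman score on an STS-style task can be computed: cosine similarities between sentence embeddings are ranked against gold similarity labels. The toy vectors and gold scores are invented for illustration, and the helper names (`cosine`, `average_ranks`, `spearman`) are hypothetical rather than part of this repository. STS results are conventionally reported as the correlation multiplied by 100.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy embedding pairs and gold similarity labels (illustrative only).
pairs = [
    ([1.0, 0.0], [1.0, 0.1]),
    ([1.0, 0.0], [0.5, 0.5]),
    ([1.0, 0.0], [0.0, 1.0]),
    ([1.0, 0.0], [-1.0, 0.2]),
]
gold = [4.8, 3.0, 1.2, 0.1]

predicted = [cosine(u, v) for u, v in pairs]
score = spearman(predicted, gold) * 100
print(f"Spearman x 100: {score:.2f}")
```

Because Spearman's correlation depends only on rank order, any model whose similarities order the pairs the same way as the gold labels reaches the maximum score, regardless of the absolute similarity values.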

This paper has been accepted to the EMNLP 2024 main conference.

- Stage one: nli_for_simcse.csv

- Stage two: merged-SICK-STS-B-train.jsonl

- Link: https://drive.google.com/drive/folders/1M6zXUQ-XCe7bgYD6th6T-5mCInpnUwbV?usp=sharing
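After downloading, it can help to look at the first record of each file before training. The sketch below makes no assumption about column or field names; it only assumes the two files sit in the current working directory, which may not be where the training scripts expect them. The helpers `peek_csv` and `peek_jsonl` are hypothetical and not part of this repository.

```python
import csv
import json
from pathlib import Path


def peek_csv(path):
    """Return the first row of a CSV file (usually the header), or None if empty."""
    with open(path, newline="", encoding="utf-8") as f:
        return next(csv.reader(f), None)


def peek_jsonl(path):
    """Return the first non-empty line of a JSONL file parsed as JSON, or None."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            if line.strip():
                return json.loads(line)
    return None


if __name__ == "__main__":
    # File names are taken from the list above; their location is an assumption.
    files = [
        ("nli_for_simcse.csv", peek_csv),
        ("merged-SICK-STS-B-train.jsonl", peek_jsonl),
    ]
    for name, peek in files:
        path = Path(name)
        if path.exists():
            print(name, "->", peek(path))
        else:
            print(name, "not found in", Path.cwd())
```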

- Python Version: 3.9.18

- Install Dependencies

      cd code
      pip install -r requirements.txt

- Download SentEval

      cd SentEval/data/downstream/
      bash download_dataset.sh

- Stage One

      cd code
      nohup torchrun --nproc_per_node=4 train.py > nohup.out &  # 4090 * 4

- Stage Two

      cd code
      nohup torchrun --nproc_per_node=4 tune.py > nohup.out &  # 4090 * 4
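Both stages launch four worker processes per node (`--nproc_per_node=4`), so a quick pre-flight check of how many GPUs PyTorch can see may save a failed background run. This is a minimal sketch, not part of the repository: `REQUIRED_GPUS`, `visible_gpu_count`, and `enough_gpus` are hypothetical names, and the check assumes one GPU per worker process.

```python
REQUIRED_GPUS = 4  # assumption: one GPU per process, matching --nproc_per_node=4


def visible_gpu_count() -> int:
    """Return the number of CUDA devices PyTorch can see (0 if PyTorch is not installed)."""
    try:
        import torch
    except ImportError:
        return 0
    return torch.cuda.device_count()


def enough_gpus(available: int, required: int = REQUIRED_GPUS) -> bool:
    """True when at least `required` GPUs are available."""
    return available >= required


if __name__ == "__main__":
    count = visible_gpu_count()
    if enough_gpus(count):
        print(f"{count} GPU(s) visible: OK for --nproc_per_node={REQUIRED_GPUS}")
    else:
        print(f"Only {count} GPU(s) visible; the commands above expect {REQUIRED_GPUS}")
```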

- Our code is based on PromptEOL

- Github: STS-Regression

  Conference: 🌟 EMNLP 2024, Main

- Github: CoT-BERT

  Paper: CoT-BERT: Enhancing Unsupervised Sentence Representation through Chain-of-Thought

  Conference: 🌟 ICANN 2024, Oral

- Github: PretCoTandKE

  Paper: Simple Techniques for Enhancing Sentence Embeddings in Generative Language Models

  Conference: 🌟 ICIC 2024, Oral