- 2024-08-28: We released a smaller version of Spider 2.0 (~ 25% of the full dataset) containing 190 examples to give users early access. The full dataset and the paper will be available in two weeks. As this is a preliminary release, there may be errors. Your feedback would be invaluable in refining the dataset. Stay tuned!

In 2018, we introduced Spider 1.0, SParC, and CoSQL as part of the Yale Semantic Parsing and Text-to-SQL Challenge Series, attracting over 300 submissions from leading research labs worldwide.

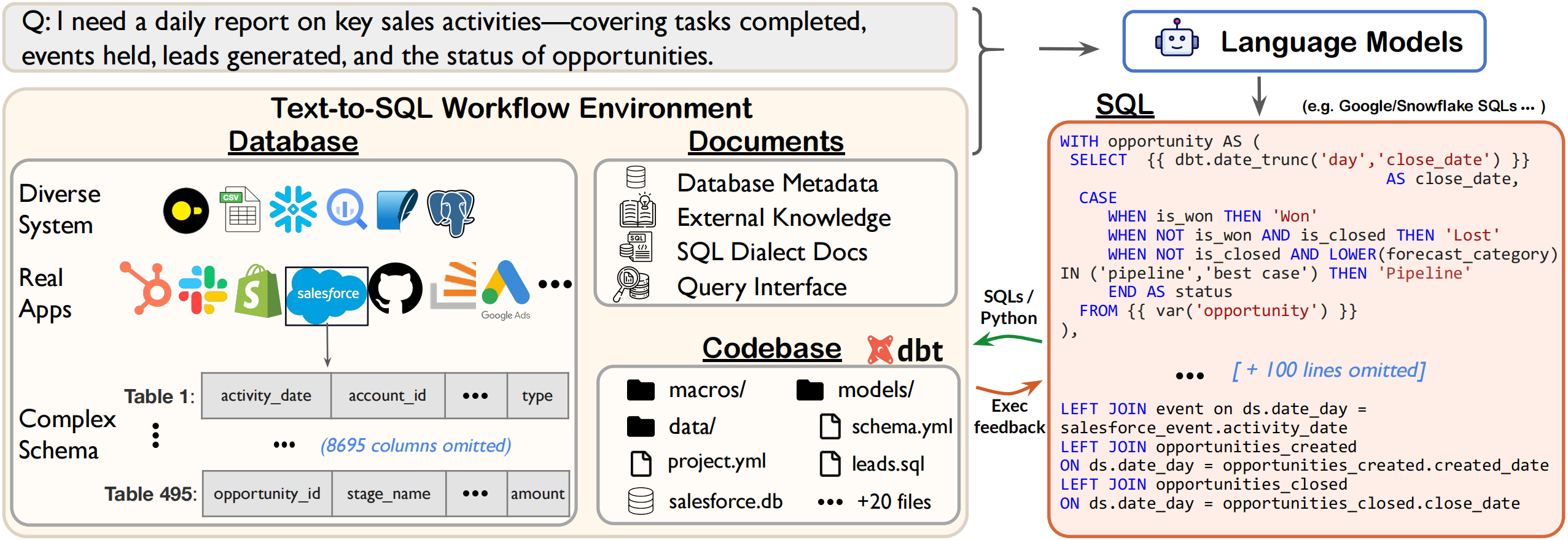

Now, in the era of Large Language Models (LLMs), we present Spider 2.0 to advance code generation, particularly text-to-SQL capabilities.

This new benchmark offers a more realistic and challenging test of LLMs' performance on complex enterprise-level text-to-SQL workflows, involving complex data environments (e.g., >3000 columns), multiple SQL dialects (e.g., BigQuery, Snowflake), and diverse operations (e.g., transformation, analytics).

Notably, as shown below, even the most advanced LLMs, including GPT-4, solve only 6.0% of Spider 2.0 tasks, compared to 86.6% on Spider 1.0 and 57.4% on BIRD, highlighting the significant challenges posed by Spider 2.0.

| Spider 1.0 dev | Spider 1.0 test | BIRD test | Spider 2.0 | |

|---|---|---|---|---|

| DailSQL + GPT-4 | 82.4 | 86.6 | 57.4 | 9.4 (on a subset of 319 examples) |

| CodeS-7B | 85.4 | - | 59.3 | 2.2 (on a subset of 319 examples) |

For Spider 2.0, all evaluation examples are aggregated in file spider2.jsonl, where each data point contains the following field:

{

"instance_id": "3a348be1-aed2-44fb-8185-c66c9d14a6ef",

"instruction": "Please tell me the number of sessions for each website traffic channel in December 2020.",

"type": "Bigquery"

}For each instance, we also provide a separate folder ./spider2/examples/{instruction_id} as its Execution Context to simulate the agentic setting. Each folder may have the following files:

README.md: detailed requirements of theinstructionfield for the current example withinstance_id;*_credential.json: credential file connecting to realistic enterprise-level databases, e.g., BigQuery. Can be replaced with your OWN;result.csv: CSV file to store the execution results;- other instance-specific materials which assist in finishing the current task:

- 🏗️ partial project, e.g.,

dbt_project/. - 📝 reference documentation:

ga4_dimensions_and_metrics.md,retention_rate.md, etc. - 🔍 query interface: We have predefined how to access the diverse database systems.

- 🎞️ query history or samples, e.g.,

QUERY_HISTORY/,BASIC_SQLS/, etc.

- 🏗️ partial project, e.g.,

The agent has to interact with complex SQL workflows, process extremely long contexts, perform intricate reasoning, and generate multiple SQL queries with diverse operations, often exceeding 100 lines across multiple turns.

For Spider 2.0, we proposed an agent framework Spider-Agent based on Docker environment.

- Install Docker. Follow the instructions in the Docker setup guide to install Docker on your machine.

- Install conda environment.

git clone https://github.com/xlang-ai/Spider2.git

cd methods/spider-agent

# Optional: Create a Conda environment for Spider 2.0

# conda create -n spider2 python=3.11

# conda activate spider2

# Install required dependencies

pip install -r requirements.txt

-

Configure credential: follow this instruction to configure BigQuery for running the SQL queries.

-

Run agent

export OPENAI_API_KEY=your_openai_api_key

python run.py --model gpt-4o --suffix test1

To align with research interests in traditional Text2SQL settings, we also release Spider 2.0-Lite. This set is more self-contained, with well-prepared database metadata and documentation, making it a text-in, text-out task that supports faster development and evaluation.

You can also access the Spider 2.0-Lite by huggingface dataset.🤗

from datasets import load_dataset

ds = load_dataset("xlangai/spider2-lite")

Each file in spider2-lite.json contains the following fields:

instance_id: the unique example iddb: the database id to which this question is addressedquestion: the natural language questionexternal_knowledge: the filenames of external knowledge, documentation, and information required to answer this question are stored in documents

We proposed baselines based on the widely used text2sql methods: Dail-SQL and CodeS, with evaluation results reported 🧪.

Set up the environment and dependencies:

conda create -n DAIL-SQL python=3.8

cd spider2-lite/baselines/dailsql

pip install -r requirements.txt

python nltk_downloader.py

pip install https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-3.5.0/en_core_web_sm-3.5.0-py3-none-any.whlSimply run 😆:

bash run.sh

For a detailed guideline of running Dail-SQL, please refer to Installation.