In this case study, you will prepare the Ames Housing dataset, provided as a CSV file, so that it is suitable for a machine learning (ML) algorithm. You will first explore the data and then apply feature transformations for the house price prediction problem. Finally, you will train linear regression, ridge regression, and lasso regression models to predict house prices.

- 2.1 Load data set

- 2.2 Exploratory Data Analysis (EDA)

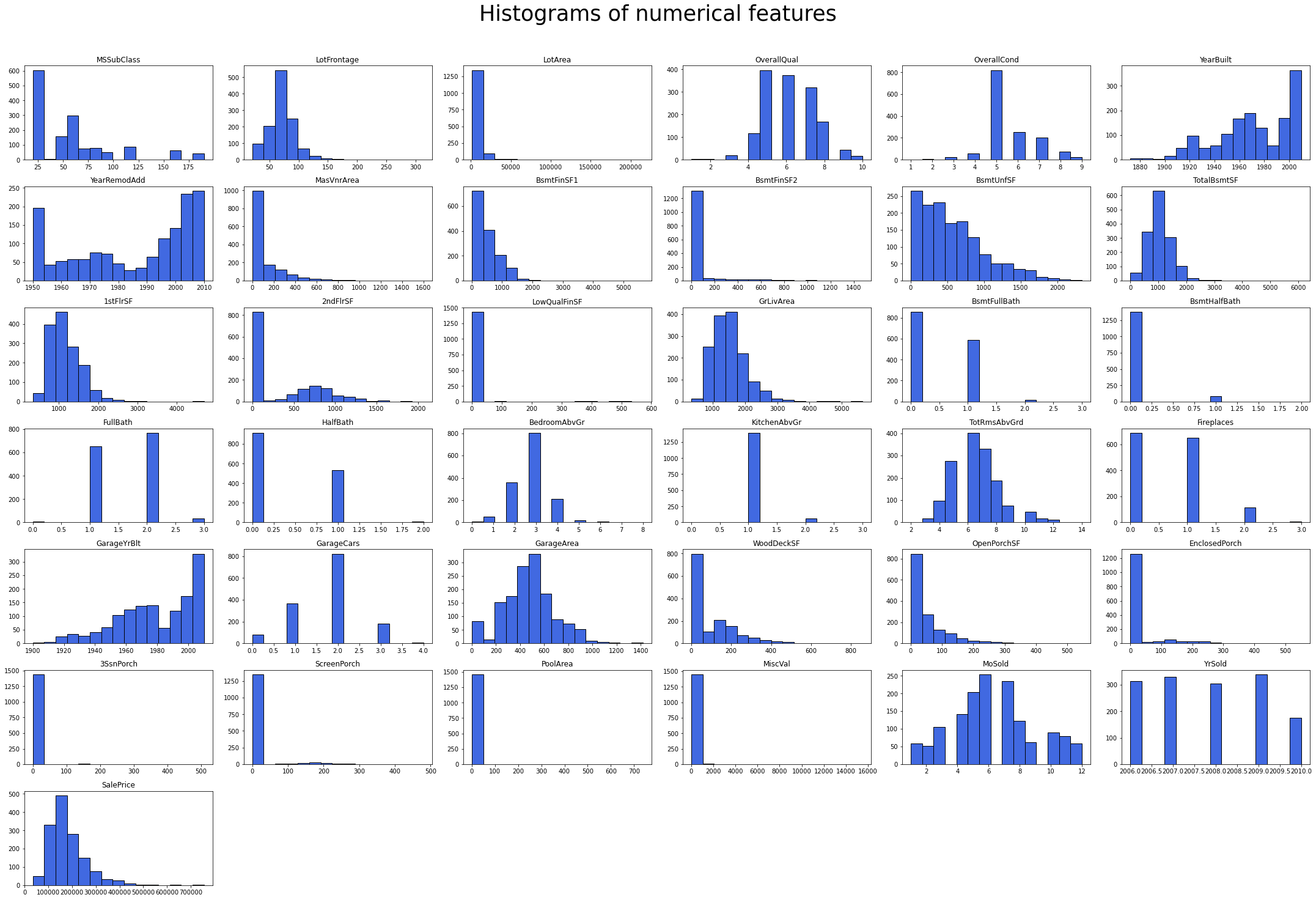

- Histograms

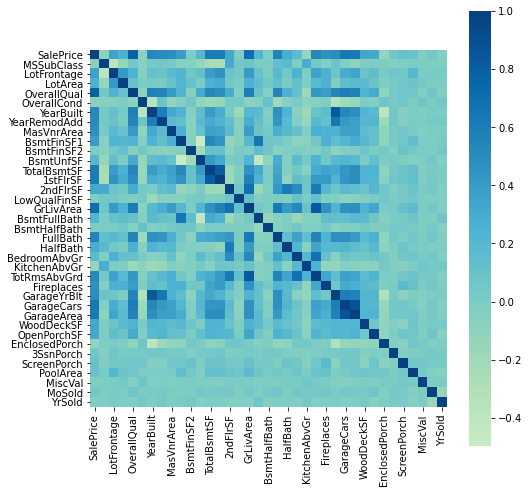

- Heatmap

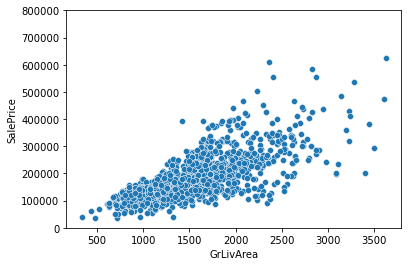

- Scatterplots

- Scatter matrix

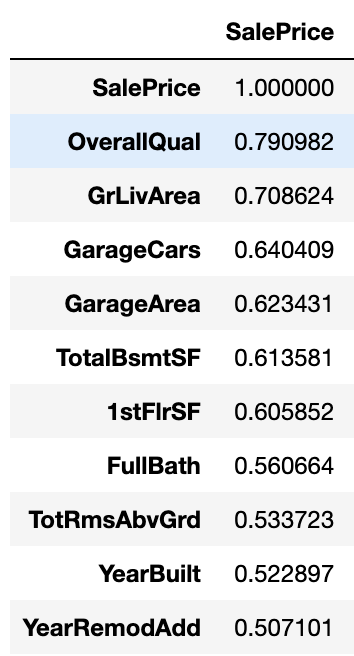

- Correlation between other features and 'SalePrice'

The target 'SalePrice' variable is highly correlated with features such as OverallQual, GrLivArea, GarageCars, GarageArea and TotalBsmtSF among others.
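A ranking like the one above can be obtained by correlating every numeric feature with the target. The following is a minimal sketch, not the case study's own code: it uses a small synthetic frame as a stand-in for the Ames data (the values, the `MoSold` column, and the variable names are assumptions made for illustration).

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the Ames data (assumption); the real frame
# would be loaded from the provided CSV file instead.
rng = np.random.default_rng(0)
n = 200
overall_qual = rng.integers(1, 11, size=n)
gr_liv_area = rng.normal(1500, 400, size=n)
df = pd.DataFrame({
    "OverallQual": overall_qual,
    "GrLivArea": gr_liv_area,
    "MoSold": rng.integers(1, 13, size=n),  # generated independently of the price
    "SalePrice": 20000 * overall_qual + 60 * gr_liv_area
                 + rng.normal(0, 10000, size=n),
})

# Pearson correlation of each numeric feature with the target, strongest first.
corr_with_target = (
    df.select_dtypes(include="number")
    .corr()["SalePrice"]
    .drop("SalePrice")
    .sort_values(ascending=False)
)
print(corr_with_target)
```

On the real dataset, the top of this ranking would be the place to look for features such as OverallQual and GrLivArea.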

- 2.3 Process dataset for ML

Steps:

- Handle missing values
  - Fill nulls in 'LotFrontage' with the median value computed after grouping by 'Neighborhood'
  - Fill nulls in 'GarageYrBlt' and 'MasVnrArea' with 0
- Apply a log transform to the target 'SalePrice'
- One-hot encode the categorical features

Split the dataset into a training set (X_train, y_train) and a test set (X_test, y_test).
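The processing steps above can be sketched with pandas and scikit-learn. This is an illustrative sketch on a six-row toy frame, not the original notebook: the toy values, the use of `np.log` (rather than, say, `np.log1p`), and the `test_size` and `random_state` arguments are all assumptions.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy frame with the columns named in the steps (values are made up).
df = pd.DataFrame({
    "Neighborhood": ["NAmes", "NAmes", "NAmes", "CollgCr", "CollgCr", "CollgCr"],
    "LotFrontage": [60.0, np.nan, 80.0, 70.0, 90.0, np.nan],
    "GarageYrBlt": [1960.0, np.nan, 1975.0, 2003.0, np.nan, 2001.0],
    "MasVnrArea": [0.0, 120.0, np.nan, 196.0, np.nan, 0.0],
    "SalePrice": [140000, 155000, 172000, 208500, 223500, 250000],
})

# Fill missing LotFrontage with the median of the row's own neighborhood.
df["LotFrontage"] = df.groupby("Neighborhood")["LotFrontage"].transform(
    lambda s: s.fillna(s.median())
)

# Fill missing GarageYrBlt and MasVnrArea with 0.
df[["GarageYrBlt", "MasVnrArea"]] = df[["GarageYrBlt", "MasVnrArea"]].fillna(0)

# Log-transform the target (np.log is an assumption).
y = np.log(df["SalePrice"])

# One-hot encode the categorical columns of the feature matrix.
X = pd.get_dummies(df.drop(columns="SalePrice"))

# Hold out part of the data for testing (split ratio and seed are assumptions).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=42
)
```

Because the target is log-transformed, any error metric computed on `y` is measured in log-price units, not in dollars.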

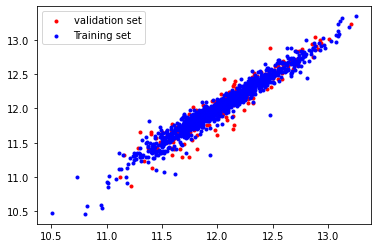

Linear regression: R^2 score on training set: 0.94609, MSE on training set: 0.00808

Linear regression: R^2 score on test set: 0.89136, MSE on test set: 0.01472

Ridge regression (alpha=0.05): R^2 score on training set: 0.94598, R^2 score on test set: 0.89410

Lasso regression (alpha=0.0001): R^2 score on training set: 0.94169, R^2 score on test set: 0.90843
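Scores of this kind can be produced by fitting the three scikit-learn estimators on the same split and evaluating each on both sets. The sketch below is hedged: it runs on synthetic data from `make_regression`, so its numbers will not match those reported here. Only the alpha values are taken from the text; the split parameters and `max_iter` are assumptions.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, LinearRegression, Ridge
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Synthetic regression problem standing in for the processed housing data.
X, y = make_regression(
    n_samples=300, n_features=20, n_informative=5, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

models = {
    "Linear regression": LinearRegression(),
    "Ridge regression (alpha=0.05)": Ridge(alpha=0.05),
    "Lasso regression (alpha=0.0001)": Lasso(alpha=0.0001, max_iter=10000),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    pred_train = model.predict(X_train)
    pred_test = model.predict(X_test)
    results[name] = {
        "r2_train": r2_score(y_train, pred_train),
        "r2_test": r2_score(y_test, pred_test),
        "mse_train": mean_squared_error(y_train, pred_train),
        "mse_test": mean_squared_error(y_test, pred_test),
    }
    print(f"{name}: R^2 train={results[name]['r2_train']:.5f}, "
          f"R^2 test={results[name]['r2_test']:.5f}")
```

A gap between the training and test R^2 scores that narrows once regularization is added, as in the results above, suggests the unregularized model is overfitting somewhat.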

6.1 In practice, ridge regression is usually the first choice between these two regularized models.

6.2 However, if you have a large number of features and expect only a few of them to be important, lasso might be a better choice.
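The reason lasso suits the "few important features" case is that its L1 penalty can drive coefficients exactly to zero, while ridge's L2 penalty only shrinks them. The following is a small sketch on synthetic data where only 5 of 50 features carry signal; the data and the alpha values are assumptions chosen for illustration, not the case study's settings.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# 50 features, of which only 5 actually influence the target.
X, y = make_regression(
    n_samples=200, n_features=50, n_informative=5, noise=5.0, random_state=0
)

ridge = Ridge(alpha=1.0).fit(X, y)
lasso = Lasso(alpha=1.0).fit(X, y)

# Count coefficients that are exactly zero under each penalty.
n_zero_ridge = int(np.sum(ridge.coef_ == 0))
n_zero_lasso = int(np.sum(lasso.coef_ == 0))
print(f"ridge: {n_zero_ridge} zero coefficients of {ridge.coef_.size}")
print(f"lasso: {n_zero_lasso} zero coefficients of {lasso.coef_.size}")
```

The features that lasso keeps with non-zero coefficients form an implicit feature selection, which also makes the fitted model easier to interpret.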

| R^2 score | Linear Regression | Ridge Regression | Lasso Regression |
|---|---|---|---|
| training set | 0.94609 | 0.94598 | 0.94169 |
| test set | 0.89136 | 0.89410 | 0.90843 |