Works for deep model intellectual property (IP) protection.

Survey ^

-

Machine Learning IP Protection: Major players in the semiconductor industry provide mechanisms on device to protect the IP at rest and during execution from being copied, altered, reverse engineered, and abused by attackers. Draws on protection measures from the hardware domain (static and dynamic) | BibTex: cammarota2018machine | Cammarota et al, Proceedings of the International Conference on Computer-Aided Design (ICCAD) 2018

-

A Survey on Model Watermarking Neural Networks: This document at hand provides the first extensive literature review on ML model watermarking schemes and attacks against them. | BibTex: boenisch2020survey | Franziska Boenisch, 2020.9

-

DNN Intellectual Property Protection: Taxonomy, Methods, Attack Resistance, and Evaluations: This paper attempts to provide a review of the existing DNN IP protection works and also an outlook. | BibTex: xue2020dnn | Xue et al, GLSVLSI '21: Proceedings of the 2021 on Great Lakes Symposium on VLSI 2020.11 TAI

-

A survey of deep neural network watermarking techniques | BibTex: li2021survey | Li et al, 2021.3

-

Protecting artificial intelligence IPs: a survey of watermarking and fingerprinting for machine learning: The majority of previous works are focused on watermarking, while more advanced methods such as fingerprinting and attestation are promising but not yet explored in depth; provide a table to show the resilience of existing watermarking methods against attacks | BibTex: regazzoni2021protecting | Regazzoni et al, CAAI Transactions on Intelligence Technology 2021

-

Watermarking at the service of intellectual property rights of ML models? | BibTex: kapusta2020watermarking | Kapusta et al, In Actes de la conférence CAID 2020

-

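Research Progress of Neural Networks Watermarking Technology (神经网络水印技术研究进展): first analyzes watermarking and its basic requirements and introduces the techniques related to neural network watermarking; then compares deep neural network watermarking techniques, with a detailed analysis of white-box and black-box watermarks; next compares neural network watermark attacks, classifying them by attack target into robustness attacks, stealthiness attacks, and security attacks; finally discusses future directions and challenges. | BibTex: yingjun2021research | Zhang et al, Journal of Computer Research and Development 2021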

-

20 Years of research on intellectual property protection | BibTex: potkonjak201720 | Potkonjak et al, IEEE International Symposium on Circuits and Systems (ISCAS). 2017

-

DNN Watermarking: Four Challenges and a Funeral | BibTex: barni2021four | IH&MMSec '21

-

SoK: How Robust is Deep Neural Network Image Classification Watermarking?: | Lukas, et al, S&P2022 | [Toolbox]

-

Regulating Ownership Verification for Deep Neural Networks: Scenarios, Protocols, and Prospects: we study the deep learning model intellectual property protection in three scenarios: the ownership proof, the federated learning, and the intellectual property transfer | Li, et al, * IJCAI 2021 Workshop on Toward IPR on Deep Learning as Services*

-

Selection Guidelines for Backdoor-based Model Watermarking (bachelor's thesis) | lederer2021selection | Lederer, 2021

-

Survey on the Technological Aspects of Digital Rights Management | William Ku, Chi-Hung Chi | International Conference on Information Security,2004

-

Survey on watermarking methods in the artificial intelligence domain and beyond: using AI to design watermarking algorithms

Preliminary ^

-

[model modifications]: Fine-tuning (adi2018turning) | Transfer Learning (kolesnikov2020big) | Model Compression

-

[model extraction]: Some references in the related work of Stealing Links from Graph Neural Networks

refer to Sensitive-Sample Fingerprinting of Deep Neural Networks

- complex cloud environment: All your clouds are belong to us: security analysis of cloud management interfaces

- poisoning attack & backdoor attack: refer to poison ink

Access Control ^

Encryption Scheme ^

-

MLCapsule: Guarded Offline Deployment of Machine Learning as a Service: deployment mechanism based on Isolated Execution Environment (IEE), we couple the secure offline deployment with defenses against advanced attacks on machine learning models such as model stealing, reverse engineering, and membership inference. | BibTex: hanzlik2018mlcapsule | Hanzlik et al, In Proceedings of ACM Conference (Conference’17). ACM 2019

-

Slalom: Fast, Verifiable and Private Execution of Neural Networks in Trusted Hardware: It partitions DNN computations into nonlinear and linear operations. These two parts are then assigned to the TEE and the untrusted environment for execution, respectively | Tramer, Florian and Boneh, Dan | tramer2018slalom | 2018.6

-

DeepAttest: An End-to-End Attestation Framework for Deep Neural Networks: the first on-device DNN attestation method that certifies the legitimacy of the DNN program mapped to the device; device-specific fingerprint | BibTex: chen2019deepattest | Chen et al, ACM/IEEE 46th Annual International Symposium on Computer Architecture (ISCA) 2019

(Homomorphic encryption (HE) does not support operations such as comparison and maximization, so it cannot be applied to deep learning directly. Solution: approximate those operations or leave them in plaintext.)
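The approximation route mentioned above can be illustrated with a polynomial stand-in for ReLU, since a polynomial needs only additions and multiplications. This is a minimal sketch, not the method of any listed paper; the input range [-1, 1], the degree 2, and the name `fit_poly_relu` are assumptions chosen for illustration.

```python
import numpy as np

def fit_poly_relu(degree=2, lo=-1.0, hi=1.0, n_points=1001):
    """Least-squares polynomial stand-in for ReLU on [lo, hi] (illustrative)."""
    x = np.linspace(lo, hi, n_points)
    coeffs = np.polyfit(x, np.maximum(x, 0.0), degree)
    return np.poly1d(coeffs)

# The fitted polynomial uses only additions and multiplications,
# which are the operations an HE scheme can evaluate on ciphertexts.
poly_relu = fit_poly_relu()
grid = np.linspace(-1.0, 1.0, 201)
max_err = float(np.max(np.abs(poly_relu(grid) - np.maximum(grid, 0.0))))
print(f"max |poly - ReLU| on [-1, 1]: {max_err:.3f}")
```

The fit is only meaningful inside the chosen range, so inputs would have to be scaled or bounded before such a replacement is used.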

[data, privacy concern, privacy-preserving]

-

Machine Learning Classification over Encrypted Data: privacy-preserving classifiers | BibTex: bost2015machine | Bost et al, NDSS 2015

-

CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy | BibTex: dowlin2016cryptonets | Dowlin et al, ICML 2016

-

Deep Learning as a Service Based on Encrypted Data: we combine deep learning with homomorphic encryption algorithm and design a deep learning network model based on secure multi-party computation (MPC); the user never obtains the model, the cloud only receives the user's encrypted data, and testing runs on the encrypted test set | BibTex: hei2020deep | Hei et al, International Conference on Networking and Network Applications (NaNA) 2020

[model weights]

- Security for Distributed Deep Neural Networks: Towards Data Confidentiality & Intellectual Property Protection: Making use of Fully Homomorphic Encryption (FHE), our approach enables the protection of Distributed Neural Networks, while processing encrypted data. | BibTex: gomez2019security | Gomez et al, 2019.7

Key Scheme ^

- Protect Your Deep Neural Networks from Piracy: using the key to enable correct image transformation of triggers; encrypts the triggers | BibTex: chen2018protect | Chen et al, IEEE International Workshop on Information Forensics and Security (WIFS) 2018

(AprilPyone -- access control)

- A Protection Method of Trained CNN Model Using Feature Maps Transformed With Secret Key From Unauthorized Access: up-to-date version, fairly complete, covers the two commented-out papers | AprilPyone et al, 2021.9

(AprilPyone -- semantic segmentation models)

- Access Control Using Spatially Invariant Permutation of Feature Maps for Semantic Segmentation Models: spatially invariant permutation with correct key | ito2021access, AprilPyone et al, 2021.9

(AprilPyone -- adversarial robustness)

-

Encryption inspired adversarial defense for visual classification | BibTex: maung2020encryption | AprilPyone et al, In 2020 IEEE International Conference on Image Processing (ICIP)

-

Block-wise Image Transformation with Secret Key for Adversarially Robust Defense: propose a novel defensive transformation that enables us to maintain a high classification accuracy under the use of both clean images and adversarial examples for adversarially robust defense. The proposed transformation is a block-wise preprocessing technique with a secret key to input images | BibTex: aprilpyone2021block | AprilPyone et al, IEEE Transactions on Information Forensics and Security (TIFS) 2021

(AprilPyone -- piracy)

-

Piracy-Resistant DNN Watermarking by Block-Wise Image Transformation with Secret Key: uses a secret key to verify ownership instead of trigger sets; similar to Xinpeng Zhang's protocol, successive transformations generate the trigger sets | BibTex: AprilPyone2021privacy | AprilPyone et al, 2021.4 | IH&MMSec'21 version

-

Non-Transferable Learning: A New Approach for Model Verification and Authorization: the proposed idea is applicable to both ownership verification (target-specified cases) and usage authorization (source-only NTL); takes the opposite approach: performance drops as soon as a perturbation is added, exploiting fragility (or, put differently, extremely strong transferability); exclusive | BibTex: wang2021nontransferable | Wang et al, NeurIPS 2021 submission [Mark]: for robust black-box watermarking | ICLR'22 | blog

[prevent unauthorized training]

-

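A Novel Data Encryption Method Inspired by Adversarial Attacks: an encoder generates adversarial noise that shifts the data distribution, and a decoder pulls it back; the data stays encrypted and is decrypted at use time; an attacker cannot learn the relationship between the encrypted data and the corresponding outputs; inference stage | fernando2021novel, Fernando et al, 2021.9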

-

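Protect the Intellectual Property of Dataset against Unauthorized Use: adds feature-level adversarial noise to all data, then uses reversible steganography for encryption and decryption; the reversible steganography hides the clean image inside the adversarial example via LSB, so an attacker can only obtain the adversarial-example dataset for training | xue2021protect, Xue et al, 2021.9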

similar idea for data privacy protection -- Unlearnable Examples: Making Personal Data Unexploitable | BibTex: huang2021unlearnable | Huang et al, ICLR 2021

Learning to Confuse: Generating Training Time Adversarial Data with Auto-Encoder: modifying training data with bounded perturbation, hoping to manipulate the behavior (both targeted or non-targeted) of any corresponding trained classifier during test time when facing clean samples; could be used for watermarking | Code | BibTex: feng2019learning | Feng et al, NeurIPS 2019

[model architecture]

-

Rethinking Deep Neural Network Ownership Verification: Embedding Passports to Defeat Ambiguity Attacks | Code | BibTex: fan2019rethinking | Extension | Fan et al, NeurIPS 2019, 2019.9

-

Hardware-Assisted Intellectual Property Protection of Deep Learning Models: ensures that only an authorized end-user who possesses a trustworthy hardware device (with the secret key embedded on-chip) is able to run intended DL applications using the published model | BibTex: chakraborty2020hardware | Chakraborty et al, 57th ACM/IEEE Design Automation Conference (DAC) 2020

[model weights]

-

Deep-Lock: Secure Authorization for Deep Neural Networks: utilizes S-Boxes with good security properties to encrypt each parameter of a trained DNN model with secret keys generated from a master key via a key scheduling algorithm; same threat model as [chakraborty2020hardware] | [update](NN-Lock: A Lightweight Authorization to Prevent IP Threats of Deep Learning Models): authorization is not a one-time check, every input must carry the authentication | BibTex: alam2020deep; alam2022nn | Alam et al, 2020.8

-

Enabling Secure in-Memory Neural Network Computing by Sparse Fast Gradient Encryption: encrypts as few weights as possible to make the model malfunction; adversarial noise is added to the weights and simply subtracted from them at decryption; run-time encryption scheduling (layer-by-layer) to resist confidentiality attack | BibTex: cai2019enabling | Cai et al, ICCAD 2019

-

AdvParams: An Active DNN Intellectual Property Protection Technique via Adversarial Perturbation Based Parameter Encryption: uses JSMA to find the positions to encrypt; more accurate, smaller perturbation? | BibTex: xue2021advparams | Xue et al, 2021.5

-

Chaotic Weights: A Novel Approach to Protect Intellectual Property of Deep Neural Networks: exchanging the weight positions to obtain a satisfying encryption effect, instead of using the conventional idea of encrypting the weight values; CV, NLP tasks | BibTex: lin2020chaotic | Lin et al, IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 2020

-

On the Importance of Encrypting Deep Features: shuffle bits on tensor, direct way | Code | ni2021importance | Ni et al, 2021.8
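The position-exchange idea noted for Chaotic Weights above (scramble where the weights sit rather than change their values) can be sketched with a key-seeded permutation. This is only an illustration under stated assumptions: NumPy's seeded generator stands in for the chaotic map, it is not cryptographically secure, and `permute_weights` / `restore_weights` are hypothetical helper names.

```python
import numpy as np

def permute_weights(weights, key):
    """Scramble weight positions with a permutation derived from an integer key."""
    perm = np.random.default_rng(key).permutation(weights.size)
    return weights.ravel()[perm].reshape(weights.shape)

def restore_weights(scrambled, key):
    """Invert permute_weights when the same key is supplied."""
    perm = np.random.default_rng(key).permutation(scrambled.size)
    flat = np.empty(scrambled.size, dtype=scrambled.dtype)
    flat[perm] = scrambled.ravel()  # put each value back at its original index
    return flat.reshape(scrambled.shape)

w = np.arange(12, dtype=np.float64).reshape(3, 4)
locked = permute_weights(w, key=1234)
unlocked = restore_weights(locked, key=1234)
```

The values themselves are untouched, so the weight histogram of the locked model is identical to the original; only a holder of the key can put the weights back in place.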

[Encrypted Weights -- Hierarchical Service]

- Probabilistic Selective Encryption of Convolutional Neural Networks for Hierarchical Services: Probabilistic Selection Strategy (PSS), its optimization approach is worth borrowing; Distribution Preserving Random Mask (DPRM) | Code | BibTex: tian2021probabilistic | Tian et al, CVPR2021

[Encrypted Architecture]

- DeepObfuscation: Securing the Structure of Convolutional Neural Networks via Knowledge Distillation: Our obfuscation approach is very effective to protect the critical structure of a deep learning model from being exposed to attackers; limitation: weights may be more important than the architecture; against transfer learning & incremental learning | BibTex: xu2018deepobfuscation | Xu et al, 2018.6

-

Active DNN IP Protection: A Novel User Fingerprint Management and DNN Authorization Control Technique: using trigger sets as copyright management | BibTex: xue2020active | Xue et al, Security and Privacy in Computing and Communications (TrustCom) 2020

-

ActiveGuard: An Active DNN IP Protection Technique via Adversarial Examples: different compared with [xue2020active]: adversarial example based | update | BibTex: xue2021activeguard | Xue et al, 2021.3

-

Hierarchical Authorization of Convolutional Neural Networks for Multi-User: we refer to differential privacy and use the Laplace mechanism to perturb the output of the model to varying degrees; there is no encryption/decryption step, the model is released directly | BibTex: luo2021hierarchical | Luo et al, IEEE Signal Processing Letters 2021
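The output-perturbation idea in the entry above can be sketched as Laplace noise whose scale depends on the user's authorization level. This is a minimal sketch, not the paper's scheme: the tier names, the noise scales, and the clip-and-renormalize step are assumptions made for illustration.

```python
import numpy as np

# Hypothetical authorization tiers and Laplace scales (larger scale = noisier output).
TIER_SCALES = {"full": 0.0, "standard": 0.05, "trial": 0.5}

def perturbed_output(probs, tier, rng):
    """Return the model's probability vector with tier-dependent Laplace noise."""
    scale = TIER_SCALES[tier]
    if scale == 0.0:
        return probs.copy()
    noisy = probs + rng.laplace(loc=0.0, scale=scale, size=probs.shape)
    noisy = np.clip(noisy, 1e-12, None)  # keep entries positive
    return noisy / noisy.sum()           # renormalize to a distribution

rng = np.random.default_rng(0)
probs = np.array([0.7, 0.2, 0.1])
outputs = {tier: perturbed_output(probs, tier, rng) for tier in TIER_SCALES}
```

Because the noise is applied at the output, every user receives the same released model and only the quality of the returned predictions differs by tier.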

Model Retrieval ^

-

Grounding Representation Similarity with Statistical Testing: orthogonal procrustes for representing similarity | ding2021grounding | Ding et al, NeurIPS 2021

-

Similarity of Neural Network Representations Revisited: can measure the feature representations learned by different model architectures; is it then the dataset or the architecture that shapes them? analysis study | Kornblith, Simon and Norouzi, Mohammad and Lee, Honglak and Hinton, Geoffrey | kornblith2019similarity | ICML'19

-

ModelDiff: Testing-Based DNN Similarity Comparison for Model Reuse Detection: Specifically, the behavioral pattern of a model is represented as a decision distance vector (DDV), in which each element is the distance between the model’s reactions to a pair of inputs; similar to twin triggers | BibTex: li2021modeldiff | Li et al, In Proceedings of the 30th ACM SIGSOFT International Symposium on Software Testing and Analysis (ISSTA ’21)
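The decision distance vector described in the ModelDiff entry can be sketched as one distance per input pair, followed by a comparison of two models' vectors. This is a toy sketch under assumptions: cosine distance on output probabilities, cosine similarity between DDVs, and small random linear classifiers standing in for real models.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def ddv(model, inputs_a, inputs_b):
    """One cosine distance per input pair (a_i, b_i), measured on model outputs."""
    out_a, out_b = model(inputs_a), model(inputs_b)
    cos = np.sum(out_a * out_b, axis=1) / (
        np.linalg.norm(out_a, axis=1) * np.linalg.norm(out_b, axis=1))
    return 1.0 - cos

def ddv_similarity(ddv_x, ddv_y):
    """Cosine similarity between two models' decision distance vectors."""
    return float(ddv_x @ ddv_y / (np.linalg.norm(ddv_x) * np.linalg.norm(ddv_y)))

rng = np.random.default_rng(0)
W_victim = rng.normal(size=(8, 4))
W_reused = W_victim + 0.01 * rng.normal(size=(8, 4))  # stands in for a fine-tuned copy
W_other = rng.normal(size=(8, 4))                      # independently initialized model

def as_model(W):
    return lambda x: softmax(x @ W)

inputs_a = rng.normal(size=(32, 8))
inputs_b = inputs_a + rng.normal(size=(32, 8))         # paired test inputs
d_victim = ddv(as_model(W_victim), inputs_a, inputs_b)
d_reused = ddv(as_model(W_reused), inputs_a, inputs_b)
d_other = ddv(as_model(W_other), inputs_a, inputs_b)
print(ddv_similarity(d_victim, d_reused), ddv_similarity(d_victim, d_other))
```

Only model outputs are needed, so the comparison works in a black-box setting and across different architectures.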

-

Deep Neural Network Retrieval: reducing the computational cost of MLaaS providers. | zhong2021deep | Zhong et al,

-

Copy, Right? A Testing Framework for Copyright Protection of Deep Learning Models: multi-level, such as property-level, neuron-level and layer-level | Chen, Jialuo and Wang, Jingyi and Peng, Tinglan and Sun, Youcheng and Cheng, Peng and Ji, Shouling and Ma, Xingjun and Li, Bo and Song, Dawn | chen2021copy | S&P2022

DNN Watermarking ^

-

Machine Learning Models that Remember Too Much:redundancy: embedding secret information into network parameters | BibTex: song2017machine | Song et al, Proceedings of the 2017 ACM SIGSAC Conference on computer and communications security 2017

-

Understanding deep learning requires rethinking generalization:overfitting: The capability of neural networks to “memorize” random noise | BibTex: zhang2016understanding | Zhang et al, 2016.11

White-box DNN Watermarking ^

-

Embedding Watermarks into Deep Neural Networks: the first model watermarking work | Code | BibTex: uchida2017embedding | Uchida et al, ICMR 2017.1

-

Digital Watermarking for Deep Neural Networks:Extension of [1] | BibTex: nagai2018digital | Nagai et al, 2018.2

Improvement ^

Watermark Carriers ^

-

DeepSigns: An End-to-End Watermarking Framework for Protecting the Ownership of Deep Neural Networks: pdf distribution of activation maps as cover; the activation of an intermediate layer is continuous-valued | code | BibTex: rouhani2019deepsigns | Rouhani et al, ASPLOS 2019

-

Don’t Forget To Sign The Gradients! : imposing a statistical bias on the expected gradients of the cost function with respect to the model’s input. introduce some adaptive watermark attacks Pros: The watermark key set for GradSigns is constructed from samples of training data without any modification or relabeling, which renders this attack (Namba) futile against our method | code | BibTex: aramoon2021don | Aramoon et al, Proceedings of Machine Learning and Systems 2021

-

When NAS Meets Watermarking: Ownership Verification of DNN Models via Cache Side Channels: adopts a conventional NAS method with mk to produce the watermarked architecture and a verification key vk; the owner collects the inference execution trace (by side-channel), and identifies any potential watermark based on vk | BibTex: lou2021when | Lou et al, 2021.2

-

Structural Watermarking to Deep Neural Networks via Network Channel Pruning: structural watermarking scheme that utilizes channel pruning to embed the watermark into the host DNN architecture instead of crafting the DNN parameters; bijective algorithm | BibTex: zhao2021structural | Zhao et al, 2021.7

-

You are caught stealing my winning lottery ticket! Making a lottery ticket claim its ownership: make the model as sparse as possible and hide the signature in the sparse mask | BibTex: chen2021you | Chen et al, NeurIPS 2021.

Loss Constraints | Verification Approach | Training Strategies ^

[Stealthiness]

-

Attacks on digital watermarks for deep neural networks: the weights variance (or standard deviation) increases noticeably and systematically during the watermark embedding algorithm by Uchida et al; using L2 regularization to achieve stealthiness; w tend to mean=0, var=1 | BibTex: wang2019attacks | Wang et al, ICASSP 2019

-

RIGA: Covert and Robust White-Box Watermarking of Deep Neural Networks: improvement of [1] in stealthiness, constrains the weights distribution with adversarial training; white-box watermark that does not impact accuracy; Cons: cannot protect against model stealing and distillation attacks, since model stealing and distillation are black-box attacks and the black-box interface is unmodified by the white-box watermark. However, white-box watermarks still have important applications when the model needs to be highly accurate, or model stealing attacks are not feasible due to rate limitation or available computational resources. | code | BibTex: wang2019riga | Wang et al, WWW 2021

-

Adam and the ants: On the influence of the optimization algorithm on the detectability of dnn watermarks:improvement of [1] in stealthiness, adoption of the Adam optimiser introduces a dramatic variation on the histogram distribution of the weights after watermarking, constrain Adam optimiser is run on the projected weights using the projected gradients | code | BibTex: cortinas2020adam | Cortiñas-Lorenzo et al, Entropy 2020

[Capacity]

-

RIGA: Covert and Robust White-Box Watermarking of Deep Neural Networks: improvement of [1] in stealthiness, constrains the weights distribution with adversarial training; white-box watermark that does not impact accuracy; Cons: cannot protect against model stealing and distillation attacks, since model stealing and distillation are black-box attacks and the black-box interface is unmodified by the white-box watermark. However, white-box watermarks still have important applications when the model needs to be highly accurate, or model stealing attacks are not feasible due to rate limitation or available computational resources. | code | BibTex: wang2019riga | Wang et al, WWW 2021

-

A Feature-Map-Based Large-Payload DNN Watermarking Algorithm: similar to DeepSigns, feature map as cover | Li, Yue and Abady, Lydia and Wang, Hongxia and Barni, Mauro | li2021feature | International Workshop on Digital Watermarking, 2021

[Fidelity]

-

Spread-Transform Dither Modulation Watermarking of Deep Neural Network: changing the activation method of [1], which increases the payload (capacity), coupling the spread spectrum and dither modulation | BibTex: li2020spread | Li et al, 2020.12

-

Watermarking in Deep Neural Networks via Error Back-propagation:using an independent network (weights selected from the main network) to embed and extract watermark; provide some suggestions for watermarking; introduce model isomorphism attack | BibTex: wang2020watermarking | Wang et al, Electronic Imaging 2020.4

[Robustness]

-

Delving in the loss landscape to embed robust watermarks into neural networks:using partial weights to embed watermark information and keep it untrainable, optimize the non-chosen weights; denoise training strategies; robust to fine-tune and model parameter quantization | BibTex: tartaglione2020delving | Tartaglione et al, ICPR 2020

-

DeepWatermark: Embedding Watermark into DNN Model: using dither modulation in FC layers to fine-tune the pre-trained model; the amount of changes in weights can be measured (energy perspective) | BibTex: kuribayashi2020deepwatermark | Kuribayashi et al, Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC) 2020 (only overwriting attack) | IH&MMSec 21 WS White-Box Watermarking Scheme for Fully-Connected Layers in Fine-Tuning Model

-

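TATTOOED: A Robust Deep Neural Network Watermarking Scheme based on Spread-Spectrum Channel Coding: the code is added directly to the weights; after the change, one pass over the data measures accuracy and the hyperparameters are then tuned to find the optimum; robust to refit; the different properties are well defined | Pagnotta, Giulio and Hitaj, Dorjan and Hitaj, Briland and Perez-Cruz, Fernando and Mancini, Luigi V | pagnotta2022tattooed | 2022.2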
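Adding a spread-spectrum code directly to the weights, as noted for TATTOOED above, can be sketched as follows. This is a simplified illustration rather than the paper's exact scheme: the ±1 codes drawn from a key-seeded generator, the gain `gamma`, and the sign-of-correlation decoder are assumptions, and the function names are hypothetical.

```python
import numpy as np

def spreading_codes(n_bits, n_weights, key):
    """One pseudo-random +/-1 code per payload bit, derived from an integer key."""
    rng = np.random.default_rng(key)
    return rng.choice([-1.0, 1.0], size=(n_bits, n_weights))

def embed(weights, bits, key, gamma=0.01):
    """Add gamma * sum_i (+/-1 for bit i) * code_i to the flattened weights."""
    codes = spreading_codes(len(bits), weights.size, key)
    signs = 2.0 * np.asarray(bits, dtype=np.float64) - 1.0
    return weights + gamma * (signs @ codes).reshape(weights.shape)

def extract(weights, n_bits, key):
    """Blind decoding: correlate the weights with each code and take the sign."""
    codes = spreading_codes(n_bits, weights.size, key)
    return (codes @ weights.ravel() > 0).astype(int)

rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.05, size=(100, 100))  # stand-in for a trained layer
payload = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]
marked = embed(weights, payload, key=42)
recovered = extract(marked, len(payload), key=42)
```

The per-weight change is small while the correlation with each code grows with the number of weights, which is why a tiny `gamma` can still be decoded; in practice `gamma` would be tuned against the accuracy drop, as the entry describes.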

-

Immunization of Pruning Attack in DNN Watermarking Using Constant Weight Code: solve the vulnerability against pruning, viewpoint of communication | BibTex: kuribayashi2021immunization, kuribayashi et al, 2021.7

-

Fostering The Robustness Of White-box Deep Neural Network Watermarks By Neuron Alignment: using trigger to remember the order of neurons, against the neuron permutation attack [lukas,sok] | li2021fostering | 2021.12

[security]

-

Watermarking Neural Network with Compensation Mechanism: using spread spectrum (capability) and a noise sequence for security; the compensation mechanism means fine-tuning the weights that do not carry the watermark; measure changes with norm (energy perspective); most of the methods used were already covered under robustness above, so this work need not be mentioned again here | BibTex: feng2020watermarking | Feng et al, International Conference on Knowledge Science, Engineering and Management 2020 [Fidelity] | [Compensation Mechanism]

-

Rethinking Deep Neural Network Ownership Verification: Embedding Passports to Defeat Ambiguity Attacks | Code | BibTex: fan2019rethinking | Extension | Fan et al, NeurIPS 2019, 2019.9

-

Passport-aware Normalization for Deep Model Protection: Improvement of [1] | Code | BibTex: zhang2020passport | Zhang et al, NeurIPS 2020, 2020.9

-

Watermarking Deep Neural Networks with Greedy Residuals: less is more; feasible to the deep model without normalization layer | BibTex: liu2021watermarking | Liu et al, ICML 2021

-

Collusion Resistant Watermarking for Deep Learning Models Protection: optional to cite | ICACT'22 | Tokyo

Approaches Based on Multi-task Learning ^

-

Secure Watermark for Deep Neural Networks with Multi-task Learning: The proposed scheme explicitly meets various security requirements by using corresponding regularizers; With a decentralized consensus protocol, the entire framework is secure against all possible attacks; We are looking forward to using cryptological protocols such as zero-knowledge proof to improve the ownership verification process so it is possible to use one secret key for multiple notarizations. White-box watermarks are hidden in different places and do not interfere with each other; is it fine even if one is erased? | BibTex: li2021secure | Li et al, 2021.3

-

HufuNet: Embedding the Left Piece as Watermark and Keeping the Right Piece for Ownership Verification in Deep Neural Networks: Hufu (虎符), left piece for embedding watermark, right piece as local secret; introduce some attacks: model pruning, model fine-tuning, kernels cutoff/supplement and crafting adversarial samples, structure adjustment or parameter adjustment; Table 12 shows that the number of backdoors influences the performance; cosine similarity is robust even if weights or structures are adjusted, can restore the original structures or parameters; satisfies Kerckhoffs's principle | Code | BibTex: lv2021hufunet | Lv et al, 2021.3

-

TrojanNet: Embedding Hidden Trojan Horse Models in Neural Networks: We show that this opaqueness provides an opportunity for adversaries to embed unintended functionalities into the network in the form of Trojan horses; Our method utilizes excess model capacity to simultaneously learn a public and secret task in a single network | Code | NeurIPS2021 submission | BibTex: guo2020trojannet | Guo et al, 2020.2

Black-box DNN Watermarking ^

-

Turning Your Weakness Into a Strength: Watermarking Deep Neural Networks by Backdooring: the first backdoor-based, abstract image; supplementary material: From Private to Public Verifiability, Zero-Knowledge Arguments. | Code | BibTex: adi2018turning | Adi et al, 27th {USENIX} Security Symposium 2018

-

Protecting Intellectual Property of Deep Neural Networks with Watermarking:Three backdoor-based watermark schemes | BibTex: zhang2018protecting | Zhang et al, Asia Conference on Computer and Communications Security 2018

-

KeyNet: An Asymmetric Key-Style Framework for Watermarking Deep Learning Models: append a private model after pristine network, the additive model for verification; describe drawbacks of two types of triggers; gives principled explanations for different attacks: fine-tuning, pruning, overwriting; includes laundering experiments | BibTex: jebreel2021keynet | Jebreel et al, *Applied Sciences* 2021

-

Protecting Intellectual Property of Deep Neural Networks with Watermarking:Three backdoor-based watermark schemes: specific test string, some pattern of noise | BibTex: zhang2018protecting | Zhang et al, Asia Conference on Computer and Communications Security 2018

-

Watermarking Deep Neural Networks for Embedded Systems: One clear drawback of Adi et al.'s scheme is the difficulty of associating abstract images with the author’s identity. Their answer is to use a cryptographic commitment scheme, incurring a lot of overhead to the proof of authorship; using message mark as the watermark information; unlike cloud-based MLaaS that usually charge users based on the number of queries made, there is no cost associated with querying embedded systems; owner's signature | BibTex: guo2018watermarking | Guo et al, IEEE/ACM International Conference on Computer-Aided Design (ICCAD) 2018

-

Evolutionary Trigger Set Generation for DNN Black-Box Watermarking: proposed an evolutionary algorithm-based method to generate and optimize the trigger pattern of the backdoor-based watermark to reduce the false alarm rate. | Code | BibTex: guo2019evolutionary | Guo et al, 2019.6

-

Deep Serial Number: Computational Watermarking for DNN Intellectual Property Protection: we introduce the first attempt to embed a serial number into DNNs, DSN is implemented in the knowledge distillation framework, During the distillation process, each customer DNN is augmented with a unique serial number; gradient reversal layer (GRL) [ganin2015unsupervised] | BibTex: tang2020deep | Tang et al, 2020.11

-

How to prove your model belongs to you: a blind-watermark based framework to protect intellectual property of DNN:combine some ordinary data samples with an exclusive ‘logo’ and train the model to predict them into a specific label, embedding logo into the trigger image | BibTex: li2019prove | Li et al, Proceedings of the 35th Annual Computer Security Applications Conference 2019

-

Protecting the intellectual property of deep neural networks with watermarking: The frequency domain approach: explain the failure to forgery attack of zhang-noise method. | BibTex: li2021protecting | Li et al, 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom) 2021

-

Adversarial frontier stitching for remote neural network watermarking:propose a novel zero-bit watermarking algorithm that makes use of adversarial model examples, slightly adjusts the decision boundary of the model so that a specific set of queries can verify the watermark information. | Code | BibTex: merrer2020adversarial | Merrer et al, Neural Computing and Applications 2020 2017.11 | Repo by Merrer: awesome-audit-algorithms: A curated list of audit algorithms for getting insights from black-box algorithms.

-

BlackMarks: Blackbox Multibit Watermarking for Deep Neural Networks: The first end-to-end multi-bit watermarking framework; Given the owner’s watermark signature (a binary string), a set of key image and label pairs are designed using targeted adversarial attacks; provide evaluation method | BibTex: chen2019blackmarks | Chen et al, 2019.4

[watermark for adv]

-

A Watermarking-Based Framework for Protecting Deep Image Classifiers Against Adversarial Attacks: watermark is robust to adversarial noise | BibTex: sun2021watermarking | Sun et al, CVPR W 2021

-

Watermarking-based Defense against Adversarial Attacks on Deep Neural Networks: we propose a new defense mechanism that creates a knowledge gap between attackers and defenders by imposing a designed watermarking system into standard deep neural networks; introduce randomization | BibTex: liwatermarking | Li et al, 2021.4

-

Robust Watermarking of Neural Network with Exponential Weighting: increase the weight value (trained on clean data) exponentially by fine-tuning on combined data set; introduce query modification attack (detect and AE) | BibTex: namba2019robust | Namba et al, Proceedings of the 2019 ACM Asia Conference on Computer and Communications Security (AsiaCCS) 2019

-

DeepTrigger: A Watermarking Scheme of Deep Learning Models Based on Chaotic Automatic Data Annotation:fraudulent ownership claim attacks, chaotic automatic data annotation; Anti-counterfeiting | BibTex: zhang2020deeptrigger | Zhang et al, * IEEE Access 8* 2020

-

Protecting IP of Deep Neural Networks with Watermarking: A New Label Helps: adding a new label will not twist the original decision boundary but can help the model learn the features of key samples better; investigate the relationship between model accuracy, perturbation strength, and key samples’ length.; reports more robust than zhang's method in pruning and | BibTex: zhong2020protecting | Zhong et al; Pacific-Asia Conference on Knowledge Discovery and Data Mining 2020

-

Protecting the Intellectual Properties of Deep Neural Networks with an Additional Class and Steganographic Images: use a set of watermark key samples (from another distribution) to embed an additional class into the DNN; adopt the least significant bit (LSB) image steganography to embed users’ fingerprints for authentication and management of fingerprints; code is available in the references | BibTex: sun2021protecting | Sun et al, 2021.4
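The LSB image steganography mentioned in the entry above can be sketched in a few lines: each fingerprint bit replaces the least significant bit of one pixel. This is a generic illustration, not the paper's implementation; the row-major pixel order and the names `lsb_embed` / `lsb_extract` are assumptions.

```python
import numpy as np

def lsb_embed(image, bits):
    """Write bits into the least significant bit of the first len(bits) pixels."""
    bits = np.asarray(bits, dtype=np.uint8)
    flat = image.ravel().copy()
    if bits.size > flat.size:
        raise ValueError("fingerprint is longer than the number of pixels")
    flat[:bits.size] = (flat[:bits.size] & 0xFE) | bits  # clear LSB, then set it
    return flat.reshape(image.shape)

def lsb_extract(image, n_bits):
    """Read the first n_bits least significant bits back out."""
    return image.ravel()[:n_bits] & 1

image = np.arange(16, dtype=np.uint8).reshape(4, 4)  # toy 4x4 grayscale image
fingerprint = [1, 0, 1, 1, 0, 0, 1, 0]
stego = lsb_embed(image, fingerprint)
recovered = lsb_extract(stego, len(fingerprint))
```

Each pixel changes by at most one intensity level, which is why the fingerprint is visually imperceptible; the flip side is that plain LSB embedding does not survive lossy re-encoding of the image.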

-

Robust Watermarking for Deep Neural Networks via Bi-level Optimization: inner loop phase optimizes the example-level problem to generate robust exemplars, while the outer loop phase proposes a masked adaptive optimization to achieve the robustness of the projected DNN models | yang2021robust, Yang et al, ICCV 2021

-

‘‘Identity Bracelets’’ for Deep Neural Networks: using MNIST (unrelated to original dataset) as trigger set; exploit the discarded capacity in the intermediate distribution of DL models’ output to embed the WM information; SN is a vector that contains n decimal units where n is the number of neurons in the output layer; with trigger sets of the same scale, analyzes the respective drawbacks of unrelated and related triggers; mentions dark knowledge?; extension of zhang; explains why evasion attacks are not considered | BibTex: xu2020identity | Initial Version: A novel method for identifying the deep neural network model with the Serial Number | Xu et al, IEEE Access 2020.8

-

Visual Decoding of Hidden Watermark in Trained Deep Neural Network: The proposed method has a remarkable feature for watermark detection process, which can decode the embedded pattern cumulatively and visually. Focuses on the extraction side: labels are visualized as a 2-D image, which strengthens the association | BibTex: sakazawa2019visual | Sakazawa et al, *IEEE Conference on Multimedia Information Processing and Retrieval (MIPR)* 2019

[post-processing]

- Robust and Verifiable Information Embedding Attacks to Deep Neural Networks via Error-Correcting Codes: annotates the triggers with error-correcting codes; analyzes the differences from existing information embedding attacks and from model watermarking; what can be recovered is not only labels but also training data and properties, similar to an inference attack | Jia, Jinyuan and Wang, Binghui and Gong, Neil Zhenqiang | BibTex: jia2020robust | Jia et al, 2020.10

[removal attacks]

-

Re-markable: Stealing watermarked neural networks through synthesis: using synthesized data (iid) to retrain the target model | Chattopadhyay, Nandish and Viroy, Chua Sheng Yang and Chattopadhyay, Anupam | chattopadhyay2020re

-

ROWBACK: RObust Watermarking for neural networks using BACKdoors: using adv in every layer | Chattopadhyay, Nandish and Chattopadhyay, Anupam | chattopadhyay2021rowback | ICMLA 2022

[pre-processing]

- Persistent Watermark For Image Classification Neural Networks By Penetrating The Autoencoder: enhance the robustness against AE pre-processing | li2021persistent | Li et al, ICIP 2021

[model extraction] tramer2016stealing

-

DAWN: Dynamic Adversarial Watermarking of Neural Networks: dynamically changing the responses for a small subset of queries (e.g., <0.5%) from API clients | BibTex: szyller2019dawn | Szyller et al, 2019.6

-

Cosine Model Watermarking Against Ensemble Distillation: [to do]

-

Entangled Watermarks as a Defense against Model Extraction: forcing the model to learn features which are jointly used to analyse both the normal and the triggers; using soft nearest neighbor loss (SNNL) to measure entanglement over labeled data; the trigger location is determined by the gradient; gives a fairly thorough analysis of several adaptive attacks and is worth further reading; the outlier detection part is a useful reference | Code | Jia, Hengrui and Choquette-Choo, Christopher A and Chandrasekaran, Varun and Papernot, Nicolas | BibTex: jia2020entangled | Jia et al, 30th USENIX 2020

-

Was my Model Stolen? Feature Sharing for Robust and Transferable Watermarks: the notion of mutual information and the T-test definition are worth borrowing; the watermark location refers to which layers can be fine-tuned; P7 take home; feature extractor is prone to use a part of neurons to identify watermark samples if we directly add watermark samples into the training set. Watermark data may be either in-distribution or out-of-distribution with respect to the original data; is Jia OOD? An improvement over entangled watermarks | Tang, Ruixiang and Jin, Hongye and Wigington, Curtis and Du, Mengnan and Jain, Rajiv and Hu, Xia | tang2021my | ICLR2020 submission

-

Effectiveness of Distillation Attack and Countermeasure on DNN watermarking: Distilling attack; countermeasure: embedding the watermark into NN in an indirect way rather than directly overfitting the model on watermark, specifically, let the target model learn the general patterns of the trigger not regarding it as noise. evaluate both embedding and trigger watermarking | Distillation: yang2019effectiveness; NIPS 2014 Deep Learning Workshop | BibTex: yang2019effectiveness | Yang et al, 2019.6

-

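Secure neural network watermarking protocol against forging attack: noise-like trigger; introduces a one-way hash function so that the trigger-set samples used to prove ownership must be formed one by one through successive hashing, and their labels are also assigned according to the hash values of the samples; offers a different take on overwriting: the paper targets forging attacks in the black-box setting; in the white-box setting, if two watermarks coexist, it suffices to present a model carrying a single watermark, so simply adding another watermark does not constitute a threat; idea: consider model watermarking from the angle of poor transferability, where triggers seen during training are recognized but those used at verification are not | BibTex: zhu2020secure | Zhu et al, EURASIP Journal on Image and Video Processing 2020.1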

-

Piracy Resistant Watermarks for Deep Neural Networks: out-of-bound values; null embedding (land into sub-area/local minimum); wonder filter | Video | BibTex: li2019piracy | Li et al, 2019.10 | Initial version: Persistent and Unforgeable Watermarks for Deep Neural Networks | BibTex: li2019persistent | Li et al, 2019.10

-

Preventing Watermark Forging Attacks in a MLaaS Environment: | BibTex: sofiane2021preventing | Lounici et al. SECRYPT 2021, 18th International Conference on Security and Cryptography

-

A Protocol for Secure Verification of Watermarks Embedded into Machine Learning Models: choose normal inputs and watermarked inputs randomly; the whole verification process is finally formulated as a problem of Private Set Intersection (PSI), and an adaptive protocol is also introduced accordingly | BibTex: Kapusta2021aprotocol | Kapusta et al, IH&MMSec 21

- Certified Watermarks for Neural Networks: Using the randomized smoothing technique proposed in Chiang et al., we show that our watermark is guaranteed to be unremovable unless the model parameters are changed by more than a certain ℓ2 threshold | BibTex: chiang2020watermarks | Bansal et al, 2018.2

- DeepHardMark: Towards Watermarking Neural Network Hardware: injected model, trigger, target functional blocks together to trigger the special behavior; adds an extra hardware constraint | AAAI2022 under-review | [to do]
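
The one-way hash chain noted in the zhu2020secure entry above (each trigger sample is derived from the previous one by hashing, and its label follows from the sample's hash value) can be sketched roughly as follows. This is only an illustration under stated assumptions: SHA-256 stands in for the one-way function, raw bytes stand in for trigger images, and `build_trigger_chain` and `verify_trigger_chain` are hypothetical helper names, not the paper's API.

```python
import hashlib


def build_trigger_chain(seed: bytes, length: int, num_classes: int):
    """Derive `length` (sample, label) pairs from a secret seed by repeated hashing.

    Assumption: a 32-byte digest stands in for a noise-like trigger image.
    """
    chain = []
    state = seed
    for _ in range(length):
        # Each sample is the hash of the previous one, so samples cannot be
        # chosen freely by someone who wants to forge a trigger set.
        state = hashlib.sha256(state).digest()
        # The label is fixed by the hash value of the sample itself.
        label = int.from_bytes(hashlib.sha256(state).digest()[:4], "big") % num_classes
        chain.append((state, label))
    return chain


def verify_trigger_chain(seed: bytes, chain, num_classes: int) -> bool:
    """Check that every sample and label in `chain` follows from `seed`."""
    return chain == build_trigger_chain(seed, len(chain), num_classes)
```

A verifier who is given the seed can recompute the whole chain, while an adversary who picks samples that a stolen model happens to misclassify would still need them to form a valid chain with matching labels.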

Attempts with Related Prediction ^

[image processing]

-

Watermarking Neural Networks with Watermarked Images: Image Processing, exclude surrogate model attack | BibTex: wu2020watermarking | Wu et al, TCSVT 2020

-

Model Watermarking for Image Processing Networks: Image Processing | BibTex: zhang2020model | Zhang et al, AAAI 2020.2

-

Deep Model Intellectual Property Protection via Deep Watermarking: Image Processing | code | BibTex: zhang2021deep | Zhang et al, TPAMI 2021.3

-

Exploring Structure Consistency for Deep Model Watermarking: improvement against model extraction attack with pre-processing | zhang2021exploring | Zhang et al, 2021.8

[text]

-

Watermarking the outputs of structured prediction with an application in statistical machine translation: proposed a method to watermark the outputs of machine learning models, especially machine translation, to be distinguished from the human-generated productions. | BibTex: venugopal2011watermarking | Venugopal et al, Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing 2011

-

Adversarial Watermarking Transformer: Towards Tracing Text Provenance with Data Hiding: towards marking and tracing the provenance of machine-generated text ; While the main purpose of model watermarking is to prove ownership and protect against model stealing or extraction, our language watermarking scheme is designed to trace provenance and to prevent misuse. Thus, it should be consistently present in the output, not only a response to a trigger set. | BibTex: abdelnabi2020adversarial | Abdelnabi et al, 2020.9

-

Tracing Text Provenance via Context-Aware Lexical Substitution | Yang et al, AAAI'22

-

Protecting Intellectual Property of Language Generation APIs with Lexical Watermark: model extraction attack | he2021protecting | He et al, AAAI'22

Attempts with Clean Prediction ^

- Defending against Model Stealing via Verifying Embedded External Features: We embed the external features by poisoning a few training samples via style transfer; train a meta-classifier, based on the gradient of predictions; white-box; against some model steal attack | BibTex: zhu2021defending | Zhu et al, ICML 2021 workshop on A Blessing in Disguise: The Prospects and Perils of Adversarial Machine Learning, AAAI'22 [Yiming]

Applications ^

-

Model Watermarking for Image Processing Networks: Image Processing | BibTex: zhang2020model | Zhang et al, AAAI 2020.2

-

Deep Model Intellectual Property Protection via Deep Watermarking: Image Processing | code | BibTex: zhang2021deep | Zhang et al, TPAMI 2021.3

-

Watermarking Neural Networks with Watermarked Images: Image Processing, similar to [1] but exclude surrogate model attack | BibTex: wu2020watermarking | Wu et al, TCSVT 2020

-

Watermarking Deep Neural Networks in Image Processing: Image Processing, using the unrelated trigger pair, target label replaced by target image; inspired by Adi and deepsigns | BibTex: quan2020watermarking | Quan et al, TNNLS 2020

-

Protecting Deep Cerebrospinal Fluid Cell Image Processing Models with Backdoor and Semi-Distillation: pattern of predicted bounding box as watermark | Li, Fang–Qi, Shi–Lin Wang, and Zhen–Hai Wang. | li2021protecting1 | DICTA 2021

-

Protecting Intellectual Property of Generative Adversarial Networks from Ambiguity Attack: using trigger noise to generate trigger pattern on the original image; using passport to implement white-box verification | Ong, Ding Sheng and Chan, Chee Seng and Ng, Kam Woh and Fan, Lixin and Yang, Qiang | ong2021protecting, Ong et al, CVPR 2021

-

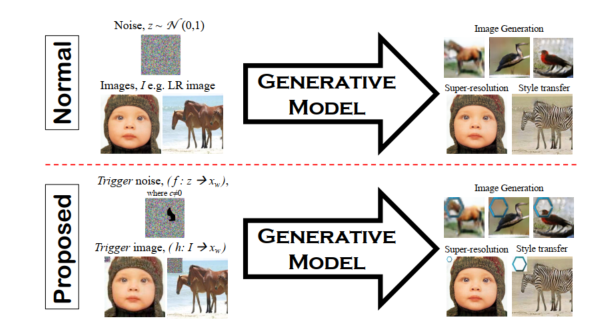

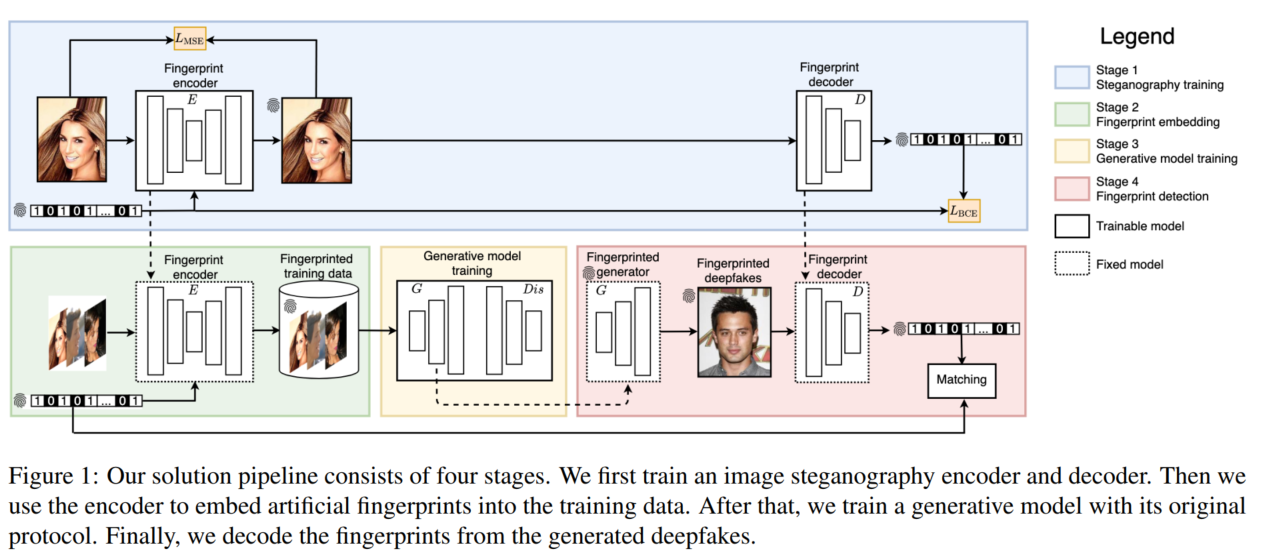

Artificial Fingerprinting for Generative Models: Rooting Deepfake Attribution in Training Data: We first embed artificial fingerprints into training data, then validate a surprising discovery on the transferability of such fingerprints from training data to generative models, which in turn appears in the generated deepfakes; proactive method for deepfake detection; leverage [4]; cannot scale up to a large number of fingerprints | [Empirical Study] [ICC'21 oral] | BibTex: yu2020artificial | Yu et al, 2020.7

-

An Empirical Study of GAN Watermarking | thakkarempirical | Thakkar et al, 2020.Fall

-

Entangled Watermarks as a Defense against Model Extraction :forcing the model to learn features which are jointly used to analyse both the normal and the triggers (related square); using soft nearest neighbor loss (SNNL) to measure entanglement over labeled data | Code | BibTex: jia2020entangled | Jia et al, 30th USENIX 2020

-

SpecMark: A Spectral Watermarking Framework for IP Protection of Speech Recognition Systems: Automatic Speech Recognition (ASR) | [BibTex]: chen2020specmark | Chen et al, Interspeech 2020

-

Speech Pattern based Black-box Model Watermarking for Automatic Speech Recognition | BibTex: chen2021speech | Chen et al, 2021.10

-

Watermarking of Deep Recurrent Neural Network Using Adversarial Examples to Protect Intellectual Property: speech-to-text RNN model, based on adv example | Rathi, Pulkit and Bhadauria, Saumya and Rathi, Sugandha | rathi2021watermarking | Applied Artificial Intelligence, 2021

-

Protecting the Intellectual Property of Speaker Recognition Model by Black-Box Watermarking in the Frequency Domain: adding a trigger signal in the frequency domain; a new label is assigned | Yumin Wang and Hanzhou Wu | [to do]

NLP [link]

-

Watermarking Neural Language Models based on Backdooring: sentiment analysis (sentence-input classification task) | Fu et al, 2020.12

-

Robust Black-box Watermarking for Deep Neural Network using Inverse Document Frequency: modified text as trigger; divided into the following three categories: Watermarking the training data, network's parameters, model's output; Dataset: IMDB users' reviews sentiment analysis, HamSpam spam detection | BibTex: yadollahi2021robust | Yadollahi et al, 2021.3

-

Yes We can: Watermarking Machine Learning Models beyond Classification: attack can be referenced; watermark forging? | lounici2021yes | Lounici et al, 2021 IEEE 34th Computer Security Foundations Symposium (CSF) | machine translation

-

Protecting Your NLG Models with Semantic and Robust Watermarks: re | xiang2021protecting | Xiang et al, ICLR 2022 withdraw New Arxiv

-

Watermarking the outputs of structured prediction with an application in statistical machine translation: proposed a method to watermark the outputs of machine learning models, especially machine translation, to be distinguished from the human-generated productions. | BibTex: venugopal2011watermarking | Venugopal et al, Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing 2011

-

Adversarial Watermarking Transformer: Towards Tracing Text Provenance with Data Hiding: its definition of attacks differs from that of model watermarking; towards marking and tracing the provenance of machine-generated text; our language watermarking scheme is designed to trace provenance and to prevent misuse. Thus, it should be consistently present in the output, not only a response to a trigger set. Transformer | BibTex: abdelnabi2020adversarial | Abdelnabi et al, 2020.9 S&P21

-

Tracing Text Provenance via Context-Aware Lexical Substitution | Yang et al, AAAI'22

-

Protecting Intellectual Property of Language Generation APIs with Lexical Watermark: model extraction attack; machine translation, document summarization, image captioning | BibTex: he2021protecting | He et al, AAAI'22

-

Protect, Show, Attend and Tell: Empower Image Captioning Model with Ownership Protection: Image Caption | BibTex: lim2020protect | Lim et al, 2020.8 (surrogate model attack) | Pattern Recognition 2021.8

-

Passport-aware Normalization for Deep Model Protection: Improvement of [1] | Code | BibTex: zhang2020passport | Zhang et al, NeurIPS 2020, 2020.9

-

Watermarking Graph Neural Networks by Random Graphs: Graph Neural Networks (GNN); focus on node classification task | BibTex: zhao2020watermarking | Zhao et al, Interspeech 2020

-

Watermarking Graph Neural Networks based on Backdoor Attacks: both graph and node classification tasks; backdoor-style; considering suspicious models with different architecture | BibTex: xu2021watermarking | Xu et al, 2021.10

- WAFFLE: Watermarking in Federated Learning: WAFFLE leverages capabilities of the aggregator to embed a backdoor-based watermark by re-training the global model with the watermark during each aggregation round. Considers evasion attacks by a detector and watermark forging attacks; clients might be malicious, are not involved in watermarking, and have no access to the watermark set. | BibTex: atli2020waffle | Atli et al, 2020.8

-

FedIPR: Ownership Verification for Federated Deep Neural Network Models: claim legitimate intellectual property rights (IPR) of FedDNN models, in case that models are illegally copied, re-distributed or misused. | fan2021fedipr, Fan et al, FTL-IJCAI 2021

-

Towards Practical Watermark for Deep Neural Networks in Federated Learning: we demonstrate a watermarking protocol for protecting deep neural networks in the setting of FL. [Merkle-Sign: Watermarking Framework for Deep Neural Networks in Federated Learning] | BibTex: li2021towards | Li et al, 2021.5

- Secure Federated Learning Model Verification: A Client-side Backdoor Triggered Watermarking Scheme: Secure FL framework is developed to address data leakage issue when central node is not fully trustable; backdoor in encrypted way | Liu, Xiyao and Shao, Shuo and Yang, Yue and Wu, Kangming and Yang, Wenyuan and Fang, Hui | liu2021secure | SMC'21

-

Sequential Triggers for Watermarking of Deep Reinforcement Learning Policies: experimental evaluation of watermarking a DQN policy trained in the Cartpole environment | BibTex: behzadan2019sequential | Behzadan et al, 2019.6

-

Yes We can: Watermarking Machine Learning Models beyond Classification | lounici2021yes, Lounici et al, 2021 IEEE 34th Computer Security Foundations Symposium (CSF)

-

Temporal Watermarks for Deep Reinforcement Learning Models: damage-free (related) states | BibTex: chen2021temporal | Chen et al, International Conference on Autonomous Agents and Multiagent Systems 2021

- Protecting Your Pretrained Transformer: Data and Model Co-designed Ownership Verification: CVPR'22 under review [to do]

-

StolenEncoder: Stealing Pre-trained Encoders: ImageNet encoder, CLIP encoder, and Clarifai’s General Embedding encoder | Yupei Liu, Jinyuan Jia, Hongbin Liu, Neil Zhenqiang Gong | liu2022stolenencoder | 2022.1

-

Can’t Steal? Cont-Steal! Contrastive Stealing Attacks Against Image Encoders | Sha, Zeyang and He, Xinlei and Yu, Ning and Backes, Michael and Zhang, Yang | sha2022can | 2022.1

-

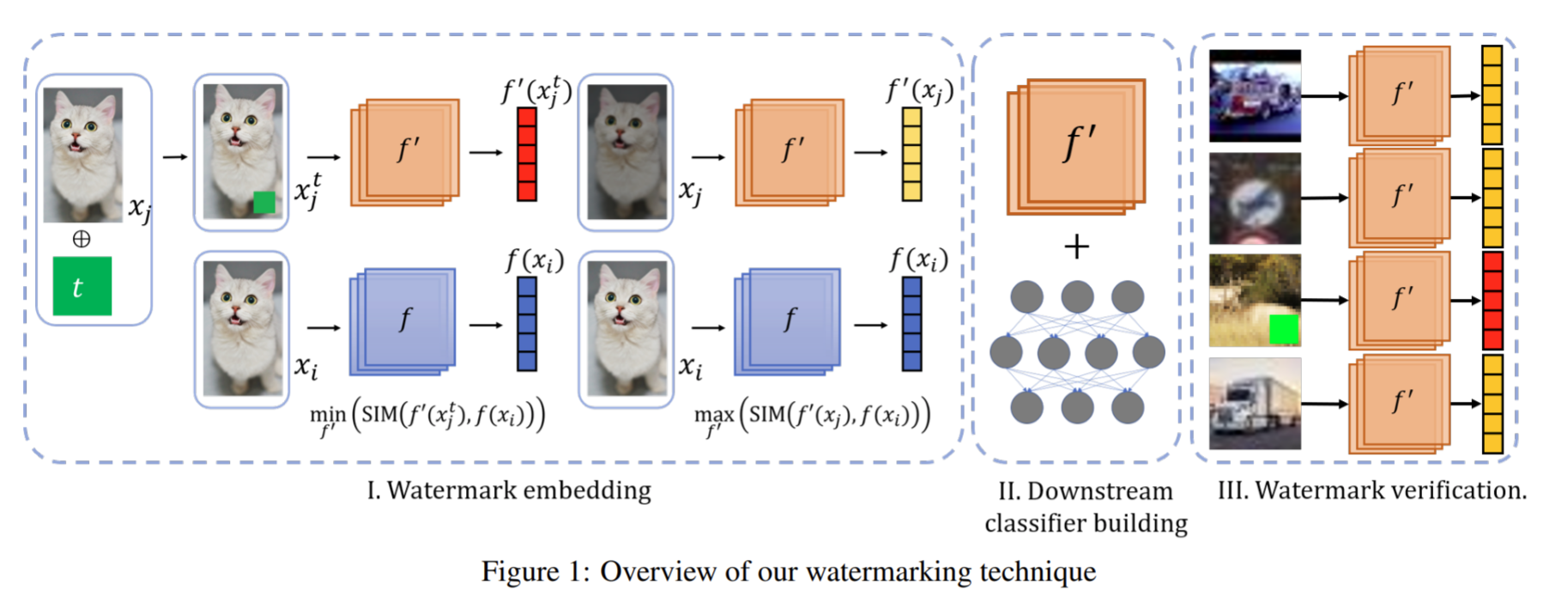

Watermarking Pre-trained Encoders in Contrastive Learning: Selling pre-trained encoders; introduce a task-agnostic loss function to effectively embed into the encoder a backdoor as the watermark. | Wu, Yutong and Qiu, Han and Zhang, Tianwei and Qiu, Meikang | wu2022watermarking | 2022.1

-

SSLGuard: A Watermarking Scheme for Self-supervised Learning Pre-trained Encoders: uses an optimized verification dataset and a decoder to extract copyright information from the stolen encoder; a surrogate model is involved during the watermark embedding stage. | Cong, Tianshuo and He, Xinlei and Zhang, Yang | cong2022sslguard | 2022.1

-

Radioactive data tracing through training: craft a class-specific additive mark in the latent space before the classification layer | sablayrolles2020radioactive | Sablayrolles et al, ICML 2020

-

On the Effectiveness of Dataset Watermarking in Adversarial Settings: an analysis of radioactive data [to do]

-

Open-sourced Dataset Protection via Backdoor Watermarking: use a hypothesis test guided method for dataset verification based on the posterior probability generated by the suspicious third-party model of the benign samples and their correspondingly watermarked samples | BibTex: li2020open | Li et al, NeurIPS Workshop on Dataset Curation and Security 2020 [Yiming]

-

Anti-Neuron Watermarking: Protecting Personal Data Against Unauthorized Neural Model Training: utilize linear color transformation as shift of the private dataset. | zou2021anti | Zou et al, 2021.9

-

Dataset Watermarking: introduces fingerprinting, dataset inference, and dataset watermarking; Aalto, master's thesis | Hoang, Minh and others | hoang2021dataset | 2021.8 empirical

-

Data Protection in Big Data Analysis: covers some data encryption and privacy protection; Ph.D. thesis | Masoumeh Shafieinejad, 2021.8
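
The hypothesis-test-guided verification described in the li2020open entry above compares a suspicious model's posterior probabilities on benign samples with those on the corresponding watermarked samples. A minimal sketch of that comparison is given below, assuming a paired one-sided t-test on the target-class probability; the function names and the caller-supplied `critical_value` are illustrative assumptions, not the paper's exact procedure.

```python
import math
import statistics


def paired_t_statistic(benign_probs, watermarked_probs):
    """t-statistic for the mean increase of the target-class probability.

    Each position holds the suspicious model's target-class posterior for a
    benign sample and for its watermarked counterpart.
    """
    if len(benign_probs) != len(watermarked_probs) or len(benign_probs) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    diffs = [w - b for b, w in zip(benign_probs, watermarked_probs)]
    mean = statistics.mean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))
    if std_err == 0:
        # Degenerate case: all differences are identical.
        return math.copysign(math.inf, mean) if mean != 0 else 0.0
    return mean / std_err


def dataset_was_used(benign_probs, watermarked_probs, critical_value):
    """Reject H0 (the watermark has no effect) if the statistic is large enough."""
    return paired_t_statistic(benign_probs, watermarked_probs) > critical_value
```

The critical value would be taken from a Student's t table for the chosen significance level and `len(benign_probs) - 1` degrees of freedom; a model trained on the watermarked dataset is expected to push the statistic well above it.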

Identification Tracing ^

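Clarify the difference between fingerprints and fingerprinting: the former resemble camera noise, i.e., a device fingerprint; the latter is a fingerprint assigned for user tracing, i.e., a serial number.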

Fingerprints ^

[adversarial example]

-

AFA: Adversarial fingerprinting authentication for deep neural networks: Use the adversarial examples as the model’s fingerprint; also mimic the logits vector of the target sample 𝑥𝑡; ghost model [li2020learning] (directly modify) substitute reference models | BibTex: zhao2020afa | Zhao et al, Computer Communications 2020

-

Fingerprinting Deep Neural Networks - A DeepFool Approach: In this paper, we utilize the geometry characteristics inherited in the DeepFool algorithm to extract data points near the classification boundary; execution time independent of dataset | Wang, Si and Chang, Chip-Hong | wang2021fingerprinting | Wang et al, IEEE International Symposium on Circuits and Systems (ISCAS) 2021

-

Deep neural network fingerprinting by conferrable adversarial examples: conferrable adversarial examples that exclusively transfer with a target label from a source model to its surrogates, using reference model | BibTex: lukas2019deep | Lukas et al, ICLR 2021

-

Characteristic Examples: High-Robustness, Low-Transferability Fingerprinting of Neural Networks: we use random initialization instead of true data and therefore our method is data-free; using high frequency to constrain the transferability | Wang, Siyue and Wang, Xiao and Chen, Pin-Yu and Zhao, Pu and Lin, Xue | wang2021characteristic | IJCAI2021

-

Fingerprinting Deep Neural Networks Globally via Universal Adversarial Perturbations: UAPs, contrastive learning | Peng, Zirui and Li, Shaofeng and Chen, Guoxing and Zhang, Cheng and Zhu, Haojin and Xue, Minhui | 2022.2 [to do]

[boundary example]

-

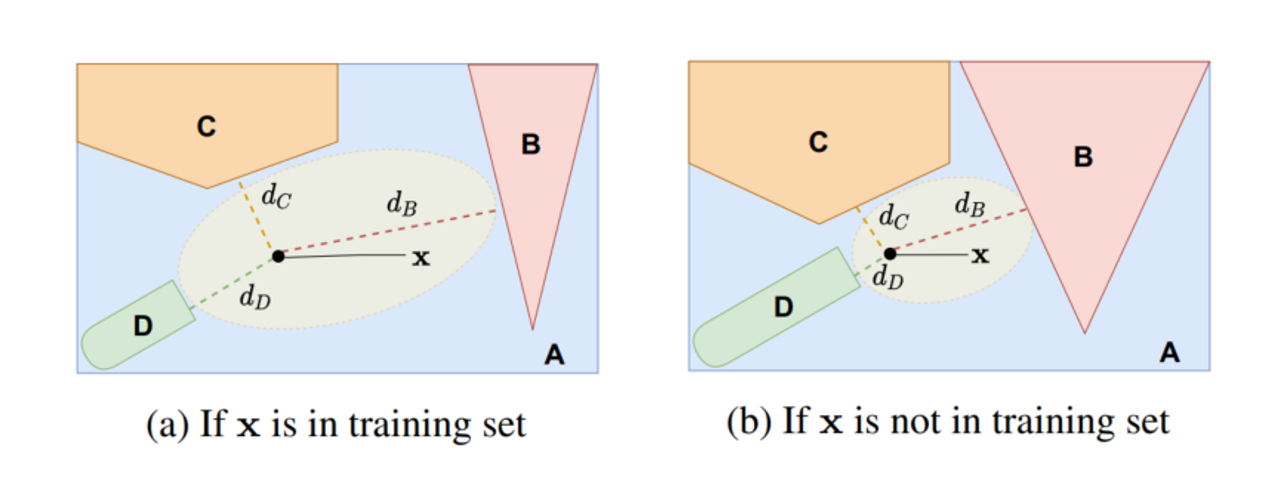

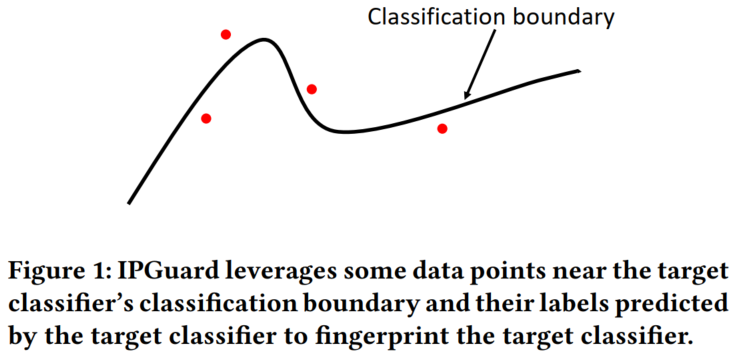

IPGuard: Protecting Intellectual Property of Deep Neural Networks via Fingerprinting the Classification Boundary: data points near the classification boundary of the model owner’s classifier (either on or far away); finds points that are close to both predictions; only identify verification in exp | BibTex: cao2019ipguard | Cao, Xiaoyu and Jia, Jinyuan and Gong, Neil Zhenqiang | AsiaCCS 2021

-

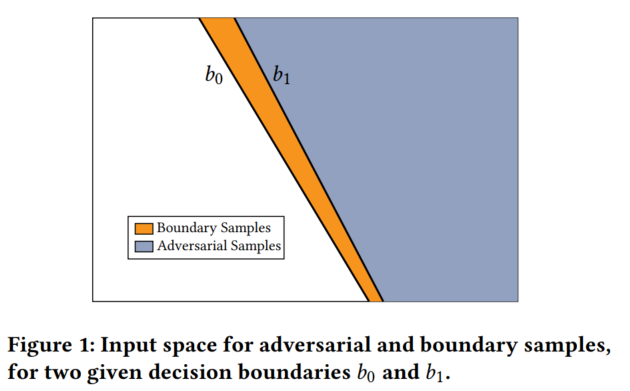

Forensicability of Deep Neural Network Inference Pipelines: identification of the execution environment (software & hardware) used to produce deep neural network predictions. Finally, we introduce boundary samples that amplify the numerical deviations in order to distinguish machines by their predicted label only. | BibTex: schlogl2021forensicability | Schlogl et al, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2021

-

iNNformant: Boundary Samples as Telltale Watermarks: Improvement of [schlogl2021forensicability]; This is relevant if, in the above example, the model owner wants to probe the inference pipeline inconspicuously in order to avoid that the licensee can process obvious boundary samples in a different pipeline (the legitimate one) than the bulk of organic samples. We propose to generate transparent boundary samples as perturbations of natural input samples and measure the distortion by the peak signal-to-noise ratio (PSNR). | BibTex: schlogl2021innformant | Schlogl et al, IH&MMSEC '21 2021

-

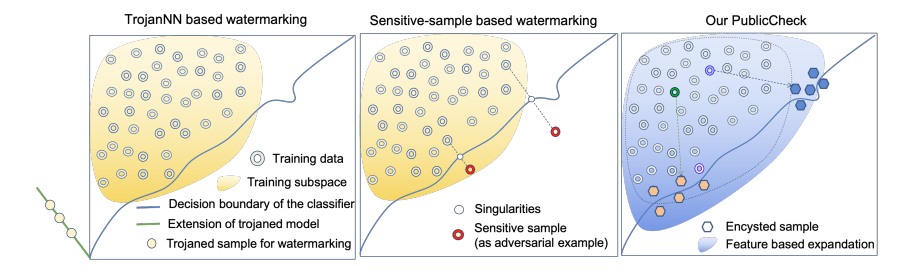

Integrity Fingerprinting of DNN with Double Black-box Design and Verification: h captures the decision boundary by generating a limited number of encysted sample fingerprints, which are a set of naturally transformed and augmented inputs enclosed around the model’s decision boundary in order to capture the inherent fingerprints of the model [to do]
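
The iNNformant entry above measures the distortion of a boundary sample relative to its natural source image by the peak signal-to-noise ratio (PSNR). As a reminder of what that metric computes, here is a small sketch in plain Python; the flat pixel lists and the default `max_value=255.0` (8-bit images) are assumptions made for illustration.

```python
import math


def psnr(original, perturbed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if not original or len(original) != len(perturbed):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between the natural sample and its perturbed version.
    mse = sum((a - b) ** 2 for a, b in zip(original, perturbed)) / len(original)
    if mse == 0:
        return math.inf  # identical images: no distortion at all
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the boundary sample is closer to the natural input, which is what makes it harder for a licensee to single it out.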

[more application]

-

A Novel Verifiable Fingerprinting Scheme for Generative Adversarial Networks: appends a classifier after the generated image and uses adversarial examples, so that the generated adversarial examples are less perceptible | BibTex: li2021novel | Li et al, 2021.6

-

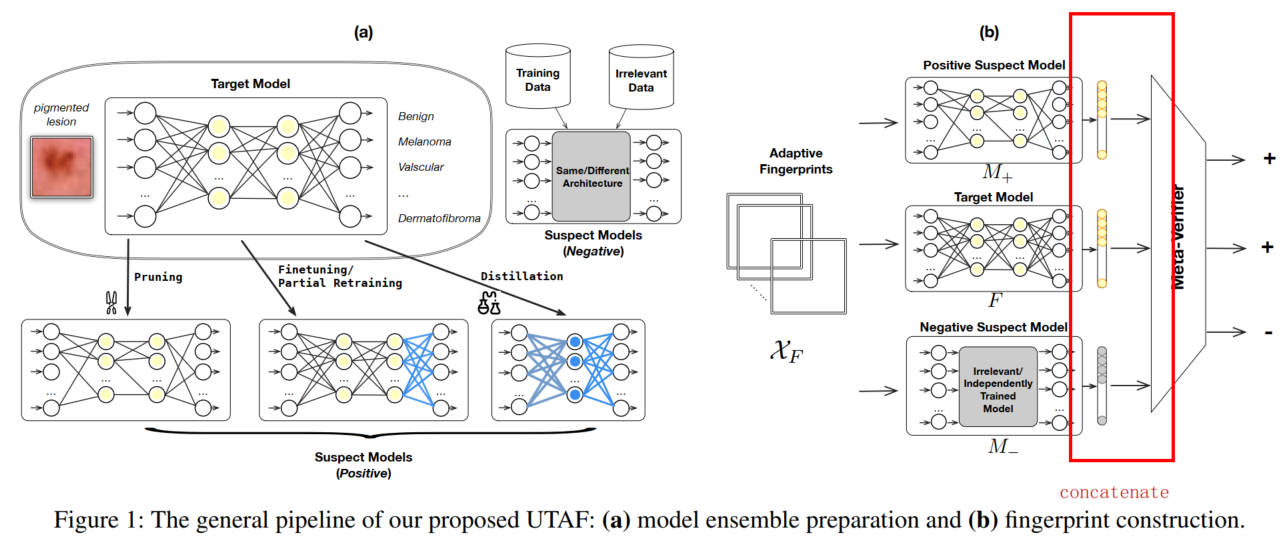

UTAF: A Universal Approach to Task-Agnostic Model Fingerprinting | Pan, Xudong and Zhang, Mi and Yan, Yifan | pan2022utaf | 2022.1

-

TAFA: A Task-Agnostic Fingerprinting Algorithm for Neural Networks: on a variety of downstream tasks including classification, regression and generative modeling, with no assumption on training data access. [rolnick2020reverse] | Pan, Xudong and Zhang, Mi and Lu, Yifan and Yang, Min | pan2021tafa | European Symposium on Research in Computer Security 2021 (rank B)

[interesting]

- Boundary Defense Against Black-box Adversarial Attacks: Our method detects the boundary samples as those with low classification confidence and adds white Gaussian noise to their logits.
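
The Boundary Defense entry above describes detecting boundary samples by low classification confidence and adding white Gaussian noise to their logits. A rough sketch of that rule follows; the softmax-based confidence, the `threshold` and `sigma` defaults, and the function names are assumptions chosen for illustration, not values from the paper.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def boundary_defense(logits, threshold=0.6, sigma=1.0, rng=random):
    """Perturb logits only for low-confidence (boundary) samples.

    `threshold` and `sigma` are hypothetical settings; `rng` can be a seeded
    random.Random instance for reproducibility.
    """
    if max(softmax(logits)) >= threshold:
        return list(logits)  # confident prediction: answer is left untouched
    # Low confidence: add zero-mean white Gaussian noise to every logit.
    return [z + rng.gauss(0.0, sigma) for z in logits]
```

Confident queries are answered unchanged, so clean accuracy is largely preserved, while queries near the decision boundary (the ones a black-box attacker relies on) receive noisy answers.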

Inference ^

[outputs]

-

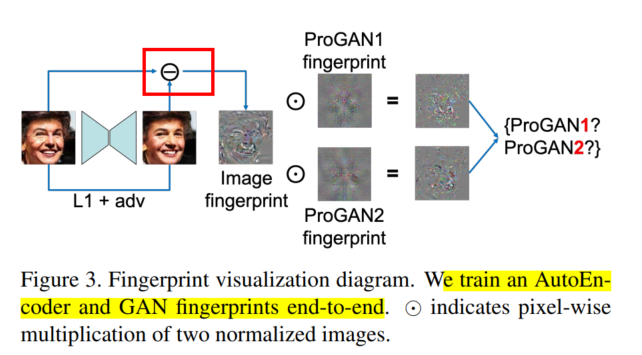

Do gans leave artificial fingerprints?: visualize GAN fingerprints motivated by PRNU, extract noise residual (unrelated to the image semantics) and show their application to GAN source identification | BibTex: marra2019gans | Marra et al, IEEE Conference on Multimedia Information Processing and Retrieval (MIPR) 2019

-

Leveraging frequency analysis for deep fake image recognition: DCT domain, these artifacts are consistent across different neural network architectures, data sets, and resolutions (hard to distinguish models with the same architecture?) | frank2020leveraging | Frank et al | ICML'20

-

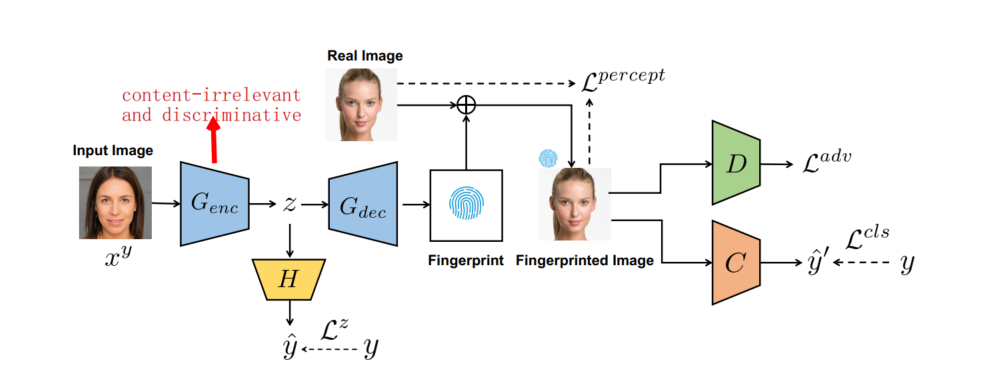

Attributing Fake Images to GANs: Learning and Analyzing GAN Fingerprints: We replace their hand-crafted fingerprint (of [1]) formulation with a learning-based one, decoupling model fingerprint from image fingerprint, and show superior performances in a variety of experimental conditions. | Supplementary Material | Code | Ref Code | [BibTex]: yu2019attributing | Homepage | Yu et al, ICCV 2019

-

Learning to Disentangle GAN Fingerprint for Fake Image Attribution: the extracted features could include many content-relevant components and generalize poorly on unseen images with different content | BibTex: c | Yang et al, 2021.6

-

Your Model Trains on My Data? Protecting Intellectual Property of Training Data via Membership Fingerprint Authentication: uses MIA as the fingerprint [to do]

[pair outputs]

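- Teacher Model Fingerprinting Attacks Against Transfer Learning: latent backdoor; generates a sample whose output resembles that of the probing sample; if the model responds similarly to both samples of the pair, this shows that the original feature extractor was used; similar in spirit to my own idea of paired triggers | Chen, Yufei and Shen, Chao and Wang, Cong and Zhang, Yang | chen2021teacher, Chen et al, 2021.6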

[inference time]

- Fingerprinting Multi-exit Deep Neural Network Models Via Inference Time: we propose a novel approach to fingerprint multi-exit models via inference time rather than inference predictions. | Dong, Tian and Qiu, Han and Zhang, Tianwei and Li, Jiwei and Li, Hewu and Lu, Jialiang | dong2021fingerprinting | 2021.10

Training ^

-

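Proof-of-Learning: Definitions and Practice: proves the integrity of the training process; requirements: verification must cost less than training, and training must cost less than forging; uses the randomness of gradient updates under a specific initialization, together with the high cost of inverting them, as the information for interactive verification. Can be used for model copyright and model integrity authentication (in distributed training, to determine whether a client model is trustworthy) | Code | BibTex: jia2021proof | Jia et al, 42nd S&P 2021.3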

-

“Adversarial Examples” for Proof-of-Learning: we show that PoL is vulnerable to “adversarial examples”! | Zhang, Rui and Liu, Jian and Ding, Yuan and Wu, Qingbiao and Ren, Kui | zhang2021adversarial | 2021.8

-

Towards Smart Contracts for Verifying DNN Model Generation Process with the Blockchain: we propose a smart contract that is based on the dispute resolution protocol for verifying DNN model generation process. | BibTex: seike2021towards | Seike et al, IEEE 6th International Conference on Big Data Analytics (ICBDA) 2021

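Clarify the difference between fingerprints and fingerprinting: the former resemble camera noise, i.e., a device fingerprint; the latter is a fingerprint assigned for user tracing, i.e., a serial number.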

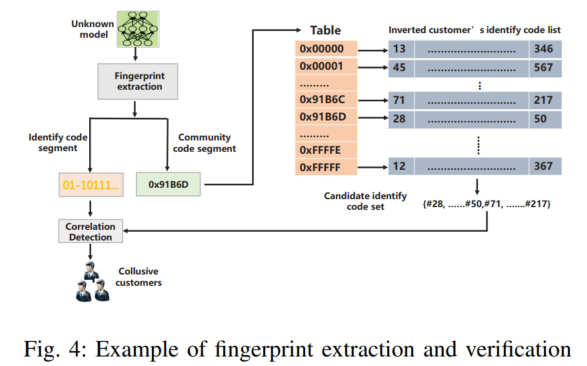

DNN Fingerprinting ^

-

DeepMarks: A Secure Fingerprinting Framework for Digital Rights Management of Deep Learning Models: focusing on the watermark bit, which uses Anti Collusion Codes (ACC), e.g., Balanced Incomplete Block Design (BIBD); The main difference between watermarking and fingerprinting is that the WM remains the same for all copies of the IP while the FP is unique for each copy. As such, FPs address the ambiguity of WMs and enable tracking of IP misuse conducted by a specific user. | BibTex: chen2019deepmarks | Chen et al, ICMR 2019

-

A Deep Learning Framework Supporting Model Ownership Protection and Traitor Tracing: [Collusion-resistant fingerprinting for multimedia] | BibTex: xu2020deep | Xu et al, 2020 IEEE 26th International Conference on Parallel and Distributed Systems (ICPADS)

-

Mitigating Adversarial Attacks by Distributing Different Copies to Different Users: induce different sets of adversarial samples in different copies in a more controllable manner; aims to prevent adversarial attacks crafted directly on identical distributed copies; can also be used for attack tracing; based on attractors | BibTex: zhang2021mitigating | Zhang et al, 2021.11.30

-

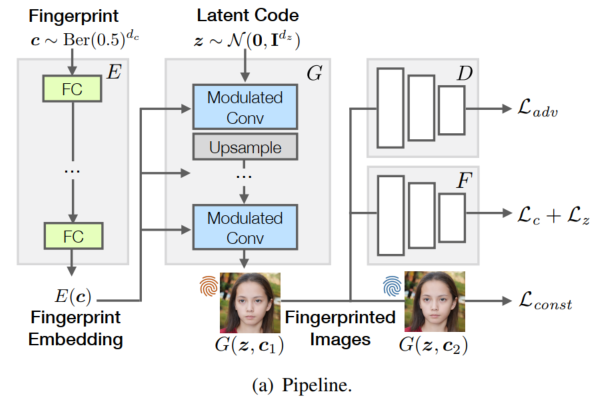

Responsible Disclosure of Generative Models Using Scalable Fingerprinting: makes both fingerprints and watermarks usable; after training one generic fingerprinting model, we can instantiate a large number of generators ad hoc with different fingerprints; conditional GAN, FP code as condition | BibTex: yu2020responsible | Yu et al, 2020.12

-

Decentralized Attribution of Generative Models: To remedy the non-scalability of [Yu'ICCV 19]; Each binary classifier is parameterized by a user-specific key and distinguishes its associated model distribution from the authentic data distribution. We develop sufficient conditions of the keys that guarantee an attributability lower bound. | Code | BibTex: kim2020decentralized | Kim, Changhoon and Ren, Yi and Yang, Yezhou | ICLR 2021

-

Attributable Watermarking Of Speech Generative Models: model attribution, i.e., the classification of synthetic contents by their source models via watermarks embedded in the contents; same as GAN attribution for images; could model attribution be split into its own section? generative model attribution? [to do]

[as access control]

-

Active DNN IP Protection: A Novel User Fingerprint Management and DNN Authorization Control Technique: using trigger sets as copyright management | BibTex: xue2020active | Xue et al, Security and Privacy in Computing and Communications (TrustCom) 2020

-

ActiveGuard: An Active DNN IP Protection Technique via Adversarial Examples: different compared with [xue2020active]: adversarial example based; considers the problem of allocating users’ fingerprints | update | BibTex: xue2021activeguard | Xue et al, 2021.3

Integrity verification ^

The user may want to be sure of the provenance of the model in some security applications or scenarios.

[sensitive sample]

-

Verideep: Verifying integrity of deep neural networks through sensitive-sample fingerprinting: initial version of [he2019sensitive] | BibTex: he2018verideep | He et al, 2018.8

-

Sensitive-Sample Fingerprinting of Deep Neural Networks: we define Sensitive-Sample fingerprints, which are a small set of human unnoticeable transformed inputs that make the model outputs sensitive to the model’s parameters. | BibTex: he2019sensitive | He et al, CVPR 2019

-

Verification of integrity of deployed deep learning models using Bayesian optimization: handle both small and large weights perturbation | Kuttichira, Deepthi Praveenlal and Gupta, Sunil and Nguyen, Dang and Rana, Santu and Venkatesh, Svetha | kuttichira2022verification | Knowledge-Based Systems, 2022

-

Sensitive Samples Revisited: Detecting Neural Network Attacks Using Constraint Solvers: He's method needs to assume convexity; this work solves He's formulation with a new optimization approach; the motivating narrative is worth borrowing, e.g., to be trusted | Docena, Amel Nestor and Wahl, Thomas and Pearce, Trevor and Fei, Yunsi | docena2021sensitive | 2021.9

-

TamperNN: Efficient Tampering Detection of Deployed Neural Nets: In the remote interaction setup we consider, the proposed strategy is to identify markers of the model input space that are likely to change class if the model is attacked, allowing a user to detect a possible tampering. | BibTex: merrer2019tampernn | Merrer et al, IEEE 30th International Symposium on Software Reliability Engineering (ISSRE) 2019

-

Fragile Neural Network Watermarking with Trigger Image Set: The watermarked model is sensitive to malicious fine tuning and will produce unstable classification results of the trigger images. | zhu2021fragile, Zhu et al, International Conference on Knowledge Science, Engineering and Management 2021 (KSEM)

[Fragility]

-

MimosaNet: An Unrobust Neural Network Preventing Model Stealing: In this paper, we propose a method for creating an equivalent version of an already trained fully connected deep neural network that can prevent network stealing: namely, it produces the same responses and classification accuracy, but it is extremely sensitive to weight changes; focus on three consecutive FC layers | BibTex: szentannai2019mimosanet | Szentannai et al, 2019.7

-

DeepiSign: Invisible Fragile Watermark to Protect the Integrity and Authenticity of CNN: convert to DCT domain, choose the high frequency to adopt LSB for information hiding, To verify the integrity and authenticity of the model | BibTex: abuadbba2021deepisign | Abuadbba et al, SAC 2021

-

NeuNAC: A Novel Fragile Watermarking Algorithm for Integrity Protection of Neural Networks: white-box | BibTex: botta2021neunac | Botta et al, Information Sciences (2021)

[Reversible]

-

Reversible Watermarking in Deep Convolutional Neural Networks for Integrity Authentication: chose the least important weights as the cover, can reverse the original model performance, can authenticate the integrity | Reversible data hiding | BibTex: guan2020reversible | Guan et al, ACM MM 2020

[others]

- SafetyNets: verifiable execution of deep neural networks on an untrusted cloud: Specifically, SafetyNets develops and implements a specialized interactive proof (IP) protocol for verifiable execution of a class of deep neural networks, | BibTex: ghodsi2017safetynets | Ghodsi et al, Proceedings of the 31st International Conference on Neural Information Processing Systems. 2017 [to do]

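- Minimal Modifications of Deep Neural Networks using Verification: from Adi's team; borrows an idea from model maintenance: when a model has a flaw and must be patched but re-training is not an option, how can an already trained model be modified?; field: model verification, model repairing ...; proposes a criterion for how costly it is to remove a watermark, i.e., to measure the resistance of model watermarking | Code | BibTex: goldberger2020minimal | Goldberger et al, LPAR 2020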

| A Survey on Model Watermarking Neural Networks | | |
|---|---|---|
| Requirement | Explanation | Motivation |
| Fidelity | Prediction quality of the model on its original task should not be degraded significantly | Ensures the model's performance on the original task |
| Robustness | Watermark should be robust against removal attacks | Prevents attacker from removing the watermark to avoid copyright claims of the original owner |
| Reliability | Exhibit minimal false negative rate | Allows legitimate users to identify their intellectual property with a high probability |
| Integrity | Exhibit minimal false alarm rate | Avoids erroneously accusing honest parties with similar models of theft |
| Capacity | Allow for inclusion of large amounts of information | Enables inclusion of potentially long watermarks, e.g., a signature of the legitimate model owner |
| Secrecy | Presence of the watermark should be secret, watermark should be undetectable | Prevents watermark detection by an unauthorized party |
| Efficiency | Process of including and verifying a watermark to ML model should be fast | Does not add large overhead |
| Unforgeability | Watermark should be unforgeable | No adversary can add additional watermarks to a model, or claim ownership of existing watermark from different party |
| Authentication | Provide strong link between owner and watermark that can be verified | Proves legitimate owner's identity |
| Generality | Watermarking algorithm should be independent of the dataset and the ML algorithms used | Allows for broad use |

| DNN Intellectual Property Protection: Taxonomy, Methods, Attack Resistance, and Evaluations | |
|---|---|
| Fidelity | The function and performance of the model cannot be affected by embedding the watermark. |
| Robustness | The watermarking method should be able to resist model modification, such as compression, pruning, fine-tuning, or watermark overwriting. |
| Functionality | It can support ownership verification, can use watermark to uniquely identify the model, and clearly associate the model with the identity of the IP owner. |
| Capacity | The amount of information that the watermarking method can embed. |
| Efficiency | The watermark embedding and extraction processes should be fast with negligible computational and communication overhead. |
| Reliability | The watermarking method should generate the least false negatives (FN) and false positives (FP); the relevant key can be used to effectively detect the watermarked model. |
| Generality | The watermarking method/the DNN authentication framework can be applicable to white-box and black-box scenarios, various data sets and architectures, various computing platforms. |
| Uniqueness | The watermark/fingerprint should be unique to the target classifier. Further, each user's identity (fingerprint) should also be unique. |
| Indistinguishability | The attacker cannot distinguish the wrong prediction from the correct model prediction. |
| Scalability | The watermark verification technique should be able to verify DNNs of different sizes. |

| A survey of deep neural network watermarking techniques | |
|---|---|
| Evaluations | Description |
| Robustness | The embedded watermark should resist different kinds of processing. |
| Security | The watermark should be secure against intentional attacks from an unauthorized party. |
| Fidelity | The watermark embedding should not significantly affect the accuracy of the target DNN architectures. |
| Capacity | A multi-bit watermarking scheme should allow to embed as much information as possible into the host target DNN. |
| Integrity | The bit error rate should be zero (or negligible) for multibit watermarking and the false alarm and missed detection probabilities should be small for the zero-bit case. |
| Generality | The watermarking methodology can be applied to various DNN architectures and datasets. |
| Efficiency | The computational overhead of watermark embedding and extraction processes should be negligible. |
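The Integrity and Reliability rows above are stated in terms of bit error rate and false alarm probability. As a rough illustration only, the sketch below computes both for a multi-bit watermark. The function names and the example bit strings are hypothetical, and the false alarm estimate assumes that each bit of an unrelated model matches independently with probability 0.5, which none of the surveys above prescribes.

```python
from math import comb

def bit_error_rate(embedded, extracted):
    """Fraction of watermark bits that differ after extraction."""
    if len(embedded) != len(extracted):
        raise ValueError("bit strings must have equal length")
    return sum(a != b for a, b in zip(embedded, extracted)) / len(embedded)

def false_alarm_probability(n_bits, min_matches, p_match=0.5):
    """Chance that an unrelated model matches at least `min_matches` bits.

    Assumption: bits match independently with probability `p_match`,
    so the number of matches follows a binomial distribution.
    """
    return sum(
        comb(n_bits, k) * p_match**k * (1 - p_match) ** (n_bits - k)
        for k in range(min_matches, n_bits + 1)
    )

# Hypothetical 8-bit watermark with one bit flipped by an attack.
embedded = [1, 0, 1, 1, 0, 0, 1, 0]
extracted = [1, 0, 1, 0, 0, 0, 1, 0]
ber = bit_error_rate(embedded, extracted)

# Accepting ownership when at least 7 of 8 bits match.
false_alarm = false_alarm_probability(n_bits=8, min_matches=7)
print(ber, false_alarm)
```

With only 8 bits the false alarm probability stays in the percent range, which is one reason the Capacity and Integrity requirements interact: longer watermarks allow a stricter acceptance threshold.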

-

Practical Evaluation of Neural Network Watermarking Approaches: To do

-

SoK: How Robust is Deep Neural Network Image Classification Watermarking? | Lukas et al, S&P 2022 | [Toolbox]

-

Copy, Right? A Testing Framework for Copyright Protection of Deep Learning Models | Jialong Chen et al, S&P2022

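- Digital Watermarking Technology and Applications (数字水印技术及应用), Sun Shenghe, 2004: Section 1.7.1, evaluation issues

- Digital Watermarking Technology and Its Applications (数字水印技术及其应用), Lou Oujun, 2018: Section 2.3, performance evaluation of digital watermarking systems

- Digital Watermarking Technology and Its Applications (数字水印技术及其应用), Jiang Tianfa, 2015: Section 1.6, performance evaluation methods for digital watermarking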

- Digital Rights Management The Problem of Expanding Ownership Rights

-

Forgotten siblings: Unifying attacks on machine learning and digital watermarking: The two research communities have worked in parallel so far, unnoticeably developing similar attack and defense strategies. This paper is a first effort to bring these communities together. To this end, we present a unified notation of blackbox attacks against machine learning and watermarking. | Cited by: [Protecting artificial intelligence IPs: a survey of watermarking and fingerprinting for machine learning] | BibTex: quiring2018forgotten | Quiring et al, IEEE European Symposium on Security and Privacy (EuroS&P) 2018

-

Evaluating the Robustness of Trigger Set-Based Watermarks Embedded in Deep Neural Networks: evaluates fine-tuning, model stealing, parameter pruning, and evasion

- Embedding Watermarks into Deep Neural Networks: the first model watermarking work | Code | BibTex: uchida2017embedding | Uchida et al, ICMR 2017.1

- DeepSigns: An End-to-End Watermarking Framework for Protecting the Ownership of Deep Neural Networks: uses the activation maps as the cover | Code | BibTex: rouhani2019deepsigns | Rouhani et al, ASPLOS 2019

- Embedding Watermarks into Deep Neural Networks: the first model watermarking work | Code | Pruning: han2015deep; ICLR 2016 | BibTex: uchida2017embedding | Uchida et al, ICMR 2017.1

-

IPGuard: Protecting Intellectual Property of Deep Neural Networks via Fingerprinting the Classification Boundary: Based on this observation, IPGuard extracts some data points near the classification boundary of the model owner’s classifier and uses them to fingerprint the classifier | BibTex: cao2019ipguard | Cao et al, AsiaCCS 2021

-

Robust Watermarking of Neural Network with Exponential Weighting: uses original training data with wrong labels as triggers; increases the weight values exponentially so that model modification cannot change the prediction behavior of samples (including key samples); introduces the query modification attack, namely pre-processing applied to queries | BibTex: namba2019robust | Namba et al, Proceedings of the 2019 ACM Asia Conference on Computer and Communications Security (AsiaCCS) 2019
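The exponential weighting idea in the entry above can be sketched as follows. This is a minimal illustration under an assumed form of the transform, in which each weight in a layer is scaled by exp(|w| * T) and normalised by the largest such factor in that layer; the function name, the temperature value `T = 2.0`, and the toy layer are hypothetical, and the exact formulation should be checked against the paper.

```python
import math

def exponential_weighting(weights, temperature=2.0):
    """Scale each weight by exp(|w| * T), normalised by the layer maximum.

    Assumed form of the transform; `temperature` (T) is an illustrative value.
    """
    peak = max(abs(w) for w in weights)
    # exp((|w| - peak) * T) equals exp(|w| * T) / max_j exp(|w_j| * T),
    # written this way to avoid overflow for large weights.
    return [math.exp((abs(w) - peak) * temperature) * w for w in weights]

# Toy layer: one large weight and two smaller ones.
layer = [1.0, -0.5, 0.1]
weighted = exponential_weighting(layer)
for before, after in zip(layer, weighted):
    print(f"{before:+.2f} -> {after:+.4f}")
```

Under this form the largest-magnitude weight is left unchanged while small weights are pushed towards zero, so predictions depend mostly on large weights, which pruning and light fine-tuning tend to leave in place.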

(like robust defense)

-

Effectiveness of Distillation Attack and Countermeasure on DNN watermarking: distilling the model's knowledge into a smaller model trained from scratch destroys all the watermarks, because the new model has a fresh architecture and training process; countermeasure: embed the watermark into the NN indirectly rather than by directly overfitting the model on the watermark, specifically, let the target model learn the general patterns of the trigger instead of treating it as noise; evaluates both embedding and trigger watermarking | Distillation: yang2019effectiveness; NIPS 2014 Deep Learning Workshop | BibTex: yang2019effectiveness | Yang et al, 2019.6

-

Attacks on digital watermarks for deep neural networks: the weight variance (or weight standard deviation) increases noticeably and systematically during the watermark embedding algorithm of Uchida et al; uses L2 regularization to achieve stealthiness; w tends to mean=0, var=1 | BibTex: wang2019attacks | Wang et al, ICASSP 2019

-

On the Robustness of the Backdoor-based Watermarking in Deep Neural Networks: white-box: just surrogate model attack with limited data; black-box: L2 regularization to prevent over-fitting to backdoor noise and compensate with fine-tuning; property inference attack: detect whether the backdoor-based watermark is embedded in the model | BibTex: shafieinejad2019robustness | Shafieinejad et al, 2019.6

-

Leveraging unlabeled data for watermark removal of deep neural networks:carefully-designed fine-tuning method; Leveraging auxiliary unlabeled data significantly decreases the amount of labeled training data needed for effective watermark removal, even if the unlabeled data samples are not drawn from the same distribution as the benign data for model evaluation | BibTex: chen2019leveraging | Chen et al, ICML workshop on Security and Privacy of Machine Learning 2019

-

REFIT: A Unified Watermark Removal Framework For Deep Learning Systems With Limited Data: an adaptation of the elastic weight consolidation (EWC) algorithm, which was originally proposed for mitigating the catastrophic forgetting phenomenon; unlabeled data augmentation (AU), where we leverage auxiliary unlabeled data from other sources | Code | BibTex: chen2019refit | Chen et al, ASIA CCS 2021

-

Removing Backdoor-Based Watermarks in Neural Networks with Limited Data: we benchmark the robustness of watermarking; propose "WILD" (data augmentation and alignment of feature distribution) with limited access to training data | BibTex: liu2020removing | Liu et al, ICASSP 2019

-

The Hidden Vulnerability of Watermarking for Deep Neural Networks: First, we propose a novel preprocessing function, which embeds imperceptible patterns and performs spatial-level transformations over the input. Then, conduct fine-tuning strategy using unlabelled and out-of-distribution samples. | BibTex: guo2020hidden | Guo et al, 2020.9 | PST is analogous to Backdoor attack in the physical world | BibTex: li2021backdoor | Li et al, ICLR 2021 Workshop on Robust and Reliable Machine Learning in the Real World

-

Neural network laundering: Removing black-box backdoor watermarks from deep neural networks: proposes a 'laundering' algorithm that aims to remove watermarks embedded by black-box methods ([adi, zhang]) using low-level manipulation of the neural network based on the relative activation of neurons. | Aiken et al, Computers & Security 2021

-

Re-markable: Stealing Watermarked Neural Networks Through Synthesis:using DCGAN to synthesize own training data, and using transfer learning to execute removal; analyze the failure of evasion attack, e.g., Hitaj ; introduce the MLaaS | BibTex: chattopadhyay2020re | Chattopadhyay et al, International Conference on Security, Privacy, and Applied Cryptography Engineering 2020

-

Neural cleanse: Identifying and mitigating backdoor attacks in neural networks: reverse the backdoor| BibTex: wang2019neural | Wang et al, IEEE Symposium on Security and Privacy (SP) 2019

-

Fine-pruning: Defending against backdooring attacks on deep neural networks | Fine-tuning: girshick2014rich; CVPR 2014 | BibTex: liu2018fine | Liu et al, International Symposium on Research in Attacks, Intrusions, and Defenses 2018

-

SPECTRE: Defending Against Backdoor Attacks Using Robust Statistics: We propose a novel defense algorithm using robust covariance estimation to amplify the spectral signature of corrupted data. | BibTex:hayase2021spectre | Hayase et al, 2021.4

-

Detect and remove watermark in deep neural networks via generative adversarial networks: backdoor-based DNN watermarks are vulnerable to the proposed GAN-based watermark removal attack; similar to Neural Cleanse, but replaces the optimization-based method with a GAN | BibTex: wang2021detect | Wang et al, 2021.6 | To do

-

Fine-tuning Is Not Enough: A Simple yet Effective Watermark Removal Attack for DNN Models | BibTex: guofine | IJCAI 2021

-

Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks | BibTex: li2021neural | Li et al, ICLR 2021

- DeepMarks: A Secure Fingerprinting Framework for Digital Rights Management of Deep Learning Models: focuses on the watermark bits, which use Anti-Collusion Codes (ACC), e.g., Balanced Incomplete Block Design (BIBD) | BibTex: chen2019deepmarks | Chen et al, ICMR 2019

Query-modification (like detection-based defense)

-

Evasion Attacks Against Watermarking Techniques found in MLaaS Systems:ensemble prediction based on voting-mechanism | BibTex: hitaj2019evasion | Hitaj et al, Sixth International Conference on Software Defined Systems (SDS) 2019 | Initial Version: Have You Stolen My Model? Evasion Attacks Against Deep Neural Network Watermarking Techniques

-

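An Evasion Algorithm to Fool Fingerprint Detector for Deep Neural Networks: the core of this evasion algorithm is a fingerprint-sample detector, Fingerprint-GAN. Following the principle of generative adversarial networks (GAN), it learns the feature representation and distribution of normal samples in the latent space; based on the difference between the latent representations of fingerprint samples and normal samples, it detects fingerprint samples and returns to the target model owner a label that differs from the prediction, so that the owner's fingerprint matching fails. | BibTex: yaguan2021evasion | Qian et al, Journal of Computer Research and Development 2021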

-

Persistent Watermark For Image Classification Neural Networks By Penetrating The Autoencoder: enhances the robustness against AE pre-processing | BibTex: li2021persistent | Li et al, ICIP 2021
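The voting-based evasion noted for Hitaj et al in this list can be pictured with a toy majority vote. This is only a sketch: the three "models" are hypothetical stand-in functions, the trigger input and labels are invented, and it assumes that at most a minority of the ensemble members carry the same watermark.

```python
from collections import Counter

def ensemble_predict(models, x):
    """Return the label chosen by most models for input x."""
    votes = Counter(model(x) for model in models)
    label, _count = votes.most_common(1)[0]
    return label

TRIGGER = "trigger-image"  # hypothetical watermark key sample

def watermarked(x):
    # Stand-in for a stolen model that still answers the owner's trigger.
    return "wm-label" if x == TRIGGER else "cat"

def clean_a(x):
    # Stand-in for an independently obtained model without that watermark.
    return "cat"

def clean_b(x):
    return "cat"

ensemble = [watermarked, clean_a, clean_b]
print(watermarked(TRIGGER))                 # the single model reveals the watermark
print(ensemble_predict(ensemble, TRIGGER))  # the vote suppresses it
```

The owner's verification query sees only the voted label, so the watermark response of one member is outvoted as long as the other members disagree with it.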

energy perspective

forgery attack; protocol attack; invisible attack

- Combatting ambiguity attacks via selective detection of embedded watermarks: common attack in media watermarking | BibTex: sencar2007combatting | IEEE Transactions on Information Forensics and Security (TIFS) 2007

Surrogate model attacks should be distinguished from inference attacks

-

Stealing machine learning models via prediction apis: protecting against an adversary with physical access to the host device of the policy is often impractical or disproportionately costly | BibTex: tramer2016stealing | Tramer et al, 25th USENIX Security Symposium 2016

-

Knockoff nets: Stealing functionality of black-box models | BibTex: orekondy2019knockoff | CVPR 2019

-

Stealing Deep Reinforcement Learning Models for Fun and Profit: first model extraction attack against Deep Reinforcement Learning (DRL), which enables an external adversary to precisely recover a black-box DRL model only from its interaction with the environment | Bibtex: chen2020stealing | Chen et al, 2020.6

-

Good Artists Copy, Great Artists Steal: Model Extraction Attacks Against Image Translation Generative Adversarial Networks: we show the first model extraction attack against real-world generative adversarial network (GAN) image translation models | BibTex: szyller2021good | Szyller et al, 2021.4

-

High Accuracy and High Fidelity Extraction of Neural Networks: distinguishes between two types of model extraction, fidelity extraction and accuracy extraction | BibTex: jagielski2020high | Jagielski et al, 29th USENIX Security Symposium 2020

-

Model Extraction Warning in MLaaS Paradigm | BibTex: kesarwani2018model | Kesarwani et al, Proceedings of the 34th Annual Computer Security Applications Conference (ACSAC) 2018

-

Stealing neural networks via timing side channels: Here, an adversary can extract the Neural Network parameters, infer the regularization hyperparameter, identify if a data point was part of the training data, and generate effective transferable adversarial examples to evade classifiers; this paper is exploiting the timing side channels to infer the depth of the network; using reinforcement learning to reduce the search space | BibTex: duddu2018stealing | Duddu et al, 2018.12
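The distinction between accuracy extraction and fidelity extraction listed above (Jagielski et al) comes down to what the stolen model is compared against. The sketch below is a minimal illustration with invented predictions: accuracy is measured against ground-truth labels, fidelity against the victim model's outputs. The helper name `agreement` and all data are hypothetical.

```python
def agreement(predictions, reference):
    """Fraction of positions where two label sequences agree."""
    if len(predictions) != len(reference):
        raise ValueError("sequences must have equal length")
    return sum(p == r for p, r in zip(predictions, reference)) / len(reference)

# Hypothetical labels and predictions on five test inputs.
true_labels = [0, 1, 1, 0, 1]
victim_preds = [0, 1, 0, 0, 1]  # the victim itself makes one mistake
stolen_preds = [0, 1, 0, 0, 0]  # the copy repeats that mistake and adds one

accuracy = agreement(stolen_preds, true_labels)   # agreement with ground truth
fidelity = agreement(stolen_preds, victim_preds)  # agreement with the victim
print(accuracy, fidelity)
```

A copy can score higher on fidelity than on accuracy because it reproduces the victim's errors, which is the behaviour that matters when the extracted model is later used to craft transferable attacks or to evade ownership checks.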

[Countermeasures]

model watermarking methods