Code and model for AAAI 2024 (Oral): UMIE: Unified Multimodal Information Extraction with Instruction Tuning

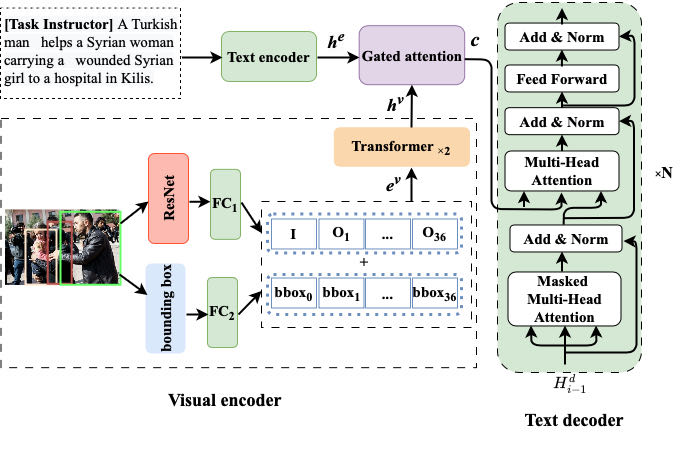

The overall architecture of our hierarchical modality fusion network.


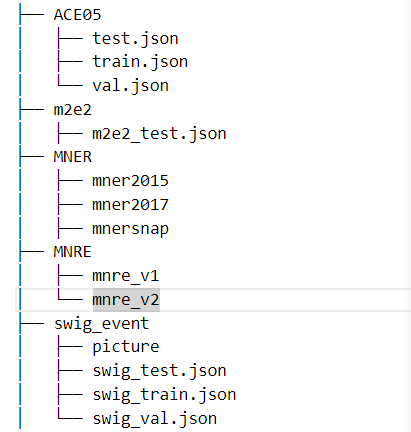

Twitter2015 & Twitter2017

The text data follows the CoNLL format. You can download the Twitter2015 data via this link and the Twitter2017 data via this link. Please place them in `data/NNER`.
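For readers new to the CoNLL layout, the sketch below groups such a file into sentences and turns BIO tags into (type, text) spans. It is a minimal illustration, not the repository's loader. It assumes one token per line with the token in the first column and its BIO tag (e.g. `B-PER`, `I-LOC`) in the last, and blank lines between sentences; `read_conll` and `bio_to_spans` are hypothetical names.

```python
from typing import Iterable, List, Tuple

Token = Tuple[str, str]  # (word, BIO tag)


def read_conll(lines: Iterable[str]) -> List[List[Token]]:
    """Group CoNLL-style lines into sentences; blank lines end a sentence."""
    sentences: List[List[Token]] = []
    current: List[Token] = []
    for line in lines:
        cols = line.split()
        if not cols:  # blank line: sentence boundary
            if current:
                sentences.append(current)
                current = []
            continue
        if len(cols) < 2:  # assumption: skip lines without a tag column
            continue
        current.append((cols[0], cols[-1]))  # first column = token, last = tag
    if current:  # the file may not end with a blank line
        sentences.append(current)
    return sentences


def bio_to_spans(sentence: List[Token]) -> List[Tuple[str, str]]:
    """Convert BIO tags to (entity_type, entity_text) pairs."""
    words = [w for w, _ in sentence]
    spans: List[Tuple[str, str]] = []
    start, etype = None, None
    # A trailing "O" sentinel closes an entity that runs to the end.
    for i, (_, tag) in enumerate(sentence + [("", "O")]):
        continues = tag.startswith("I-") and tag[2:] == etype
        if not continues:
            if start is not None:
                spans.append((etype, " ".join(words[start:i])))
            start, etype = None, None
            if tag.startswith(("B-", "I-")):  # a stray I- also opens a span
                start, etype = i, tag[2:]
    return spans
```

Treating a stray `I-` tag as the start of a new entity is a common lenient choice; a stricter reader could reject it instead.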

MNRE

The MNRE dataset comes from MEGA; many thanks.

MEE


The MEE dataset comes from MEE; many thanks.


SWiG (Visual Event Extraction Data)

We downloaded the situation recognition data from SWiG. Please find the preprocessed data in M2E2 and GSR.


ACE05

We preprocessed ACE following OneIE. An example of the data format is given in `sample.json`. Due to licensing restrictions, the ACE 2005 dataset is only accessible to holders of the LDC2006T06 license.

Vision

To extract visual object images, we first use the NLTK parser to extract noun phrases from the text and then apply the visual grounding toolkit to detect objects. The detailed steps are as follows:

- Use the NLTK parser (or spaCy, TextBlob) to extract noun phrases from the text.

- Apply the visual grounding toolkit to detect objects. Taking the Twitter2015 dataset as an example, the extracted objects are stored in `twitter2015_images.h5`. The object images follow the naming format `imgname_pred.png`, where `imgname` is the name of the raw image corresponding to the object and `num` is the number of the object predicted by the toolkit.
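The noun-phrase step can be illustrated without downloading any tagger models. The following is a pure-Python stand-in that mimics the chunk grammar `NP: {<DT>?<JJ.*>*<NN.*>+}` one might give to NLTK's `RegexpParser`; it is not the authors' pipeline. It assumes the tokens are already POS-tagged with Penn Treebank tags (in practice, for example, by `nltk.pos_tag`), and `noun_phrases` is a hypothetical name.

```python
from typing import List, Tuple


def noun_phrases(tagged: List[Tuple[str, str]]) -> List[str]:
    """Greedy left-to-right chunking of DT? JJ* NN+ over (word, tag) pairs."""
    phrases: List[str] = []
    i, n = 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DT":  # optional determiner
            j += 1
        while j < n and tagged[j][1].startswith("JJ"):  # any adjectives
            j += 1
        k = j
        while k < n and tagged[k][1].startswith("NN"):  # one or more nouns
            k += 1
        if k > j:  # at least one noun: emit the chunk and jump past it
            phrases.append(" ".join(w for w, _ in tagged[i:k]))
            i = k
        else:  # no chunk starts here; retry from the next token
            i += 1
    return phrases


# Tags are hand-assigned for this example.
tagged = [("Smoke", "NN"), ("rises", "VBZ"), ("over", "IN"), ("the", "DT"),
          ("Syrian", "JJ"), ("city", "NN"), ("of", "IN"), ("Kobani", "NNP")]
print(noun_phrases(tagged))  # ['Smoke', 'the Syrian city', 'Kobani']
```

Each resulting phrase would then serve as a text query for the visual grounding toolkit.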

The detected objects and the dictionary of the correspondence between the raw images and the objects are available in our data links.
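If that correspondence dictionary ever has to be rebuilt, for example after running detection on new images, a hypothetical helper can group object crops by the raw image they came from. This sketch assumes only that each object file name is the raw image name followed by `_pred` and an optional suffix (such as the object number); the exact naming and the structure of the released dictionary may differ, so adapt the split to the actual files.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_objects_by_image(object_files: Iterable[str]) -> Dict[str, List[str]]:
    """Map each raw image name to the object crops detected in it.

    Assumption: an object file is named "<raw image name>_pred<suffix>",
    where <suffix> may be empty or carry the object number.
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for name in object_files:
        # Split on the LAST "_pred" so a raw name that itself contains
        # "_pred" is kept intact.
        raw_name, sep, _suffix = name.rpartition("_pred")
        if not sep:  # no "_pred" marker: not an object crop, skip it
            continue
        groups[raw_name].append(name)
    return {raw: sorted(objs) for raw, objs in groups.items()}
```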

Text

We preprocessed the text data following UIE.
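In the example records that follow the data generation command, the `label` field linearizes each structure into one string: `<spot>` separates fields, and a comma pairs a type or role with its span. A hypothetical reader for labels holding a single structure could look like this. The task names (`entity`, `relation`, `trigger`, `argument`) and the treatment of `O1`-style arguments as keys of the record that hold a bounding box are assumptions inferred from those examples, not the repository's code.

```python
from typing import Any, Dict, List, Optional

SPOT = "<spot>"  # field separator seen in the example labels


def _type_and_span(field: str) -> Dict[str, str]:
    # "person, Kyrie" -> type before the first comma, span after it
    type_, span = field.split(",", 1)
    return {"type": type_.strip(), "span": span.strip()}


def parse_label(task: str, label: str,
                record: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Parse one linearized label string (assumes one structure per label)."""
    fields = [f.strip() for f in label.split(SPOT)]
    if task in ("entity", "trigger"):
        return _type_and_span(fields[0])
    if task == "relation":
        head, relation, tail = fields  # "head <spot> relation <spot> tail"
        return {"head": head, "relation": relation, "tail": tail}
    if task == "argument":
        event_type, *role_fields = fields
        arguments: List[Dict[str, Any]] = []
        for field in role_fields:
            role, arg = (s.strip() for s in field.split(",", 1))
            # An argument such as "O1" may name a visual object whose box
            # is stored under the same key in the record (None otherwise).
            box = (record or {}).get(arg)
            arguments.append({"role": role, "argument": arg, "box": box})
        return {"event_type": event_type, "arguments": arguments}
    raise ValueError(f"unknown task: {task!r}")
```

Passing the whole JSON record lets object placeholders such as `O1` be resolved to their box coordinates without a separate lookup table.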

Run the data generation script:

`bash data_processing/run_data_generation.bash`

Examples:

Entity

{
  "text": "@USER Kyrie plays with @USER HTTPURL",
  "label": "person, Kyrie",
  "image_id": "149.jpg"
}

Relation

{
  "text": "Do Ryan Reynolds and Blake Lively ever take a bad photo ? 😍",
  "label": "Ryan Reynolds <spot> couple <spot> Blake Lively",
  "image_id": "O_1311.jpg"
}

Event Trigger

{
  "text": "Smoke rises over the Syrian city of Kobani , following a US led coalition airstrike, seen from outside Suruc",
  "label": "attack, airstrike",
  "image_id": "VOA_EN_NW_2015.10.21.3017239_4.jpg"
}

Event Argument

{
  "text": "Smoke rises over the Syrian city of Kobani , following a US led coalition airstrike, seen from outside Suruc",
  "label": "attack <spot> attacker, coalition <spot> Target, O1",
  "O1": [1, 190, 508, 353],
  "image_id": "VOA_EN_NW_2015.10.21.3017239_4.jpg"
}

To run the code, you need to install the requirements:

`pip install -r requirements.txt`

Training:

`bash -x scripts/full_finetuning.sh -p 1 --task ie_multitask --model flan-t5 --ports 26754 --epoch 30 --lr 1e-4`

Evaluation:

`bash -x scripts/test_eval.sh -p 1 --task ie_multitask --model flan-t5 --ports 26768`

Please kindly cite our paper if you find any data or models in this repository helpful:

@inproceedings{Sun2024UMIE,
  title={UMIE: Unified Multimodal Information Extraction with Instruction Tuning},
  author={Lin Sun and Kai Zhang and Qingyuan Li and Renze Lou},
  year={2024},
  booktitle={Proceedings of AAAI}
}