LyDROO

Lyapunov-guided Deep Reinforcement Learning for Stable Online Computation Offloading in Mobile-Edge Computing Networks

PyTorch code to reproduce LyDROO algorithm [1], which is an online computation offloading algorithm to maximize the network data processing capability subject to the long-term data queue stability and average power constraints. It applies Lyapunov optimization to decouple the multi-stage stochastic MINLP into deterministic per-frame MINLP subproblems and solves each subproblem via DROO algorithm. It includes:

-

memory.py: the codes of Deep Reinforcement Learning based on fully connected neural networks (DNN) implemented on PyTorch.

-

memoryTF2conv.py: the codes of Deep Reinforcement Learning based on convolutional neural networks (CNN) implemented on Tenforflow 2.

-

ResourceAllocation: Algorithms for resource allocation.

-

LyDROO.py: run this file for LyDROO with DNN implemented on PyTorch, namely LyDROO (DNN).

-

LyDROOwithTF2conv.py: run this file for LyDROO with CNN implemented on Tenforflow 2, namely LyDROO (CNN).

Cite this work

- Suzhi Bi, Liang Huang, and Ying-jun Angela Zhang, ``Lyapunov-guided Deep Reinforcement Learning for Stable Online Computation Offloading in Mobile-Edge Computing Networks'', IEEE Transactions on Wireless Communications, vol. 20, no. 11, pp. 7519-7537, November 2021.

@ARTICLE{bi2021LyDROO,

author={Bi, Suzhi and Huang, Liang and Wang, Hui and Zhang, Ying-Jun Angela},

journal={IEEE Transactions on Wireless Communications},

title={Lyapunov-guided Deep Reinforcement Learning for Stable Online Computation Offloading in Mobile-Edge Computing Networks},

year={2021},

volume={20},

number={11},

pages={7519-7537},

doi={10.1109/TWC.2021.3085319}

}

About authors

-

Suzhi BI, bsz AT szu.edu.cn

-

Liang HUANG, lianghuang AT zjut.edu.cn

-

Ying Jun (Angela) Zhang, yjzhang AT ie.cuhk.edu.hk

How the code works

- For LyDROO (DNN), run the file, LyDROO.py. Please make sure that PyTorch has been successfully installed before execution.

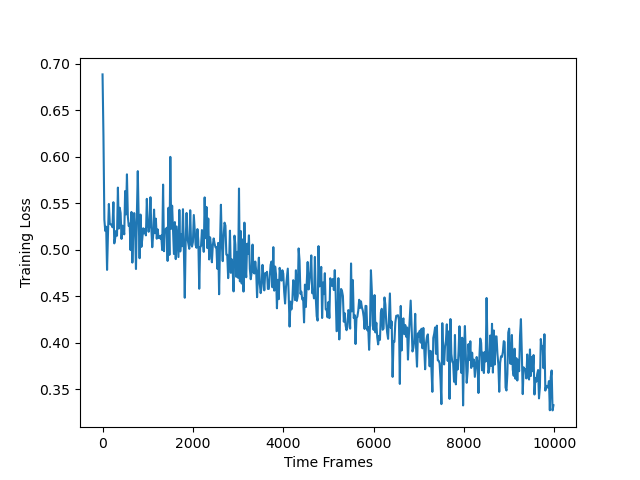

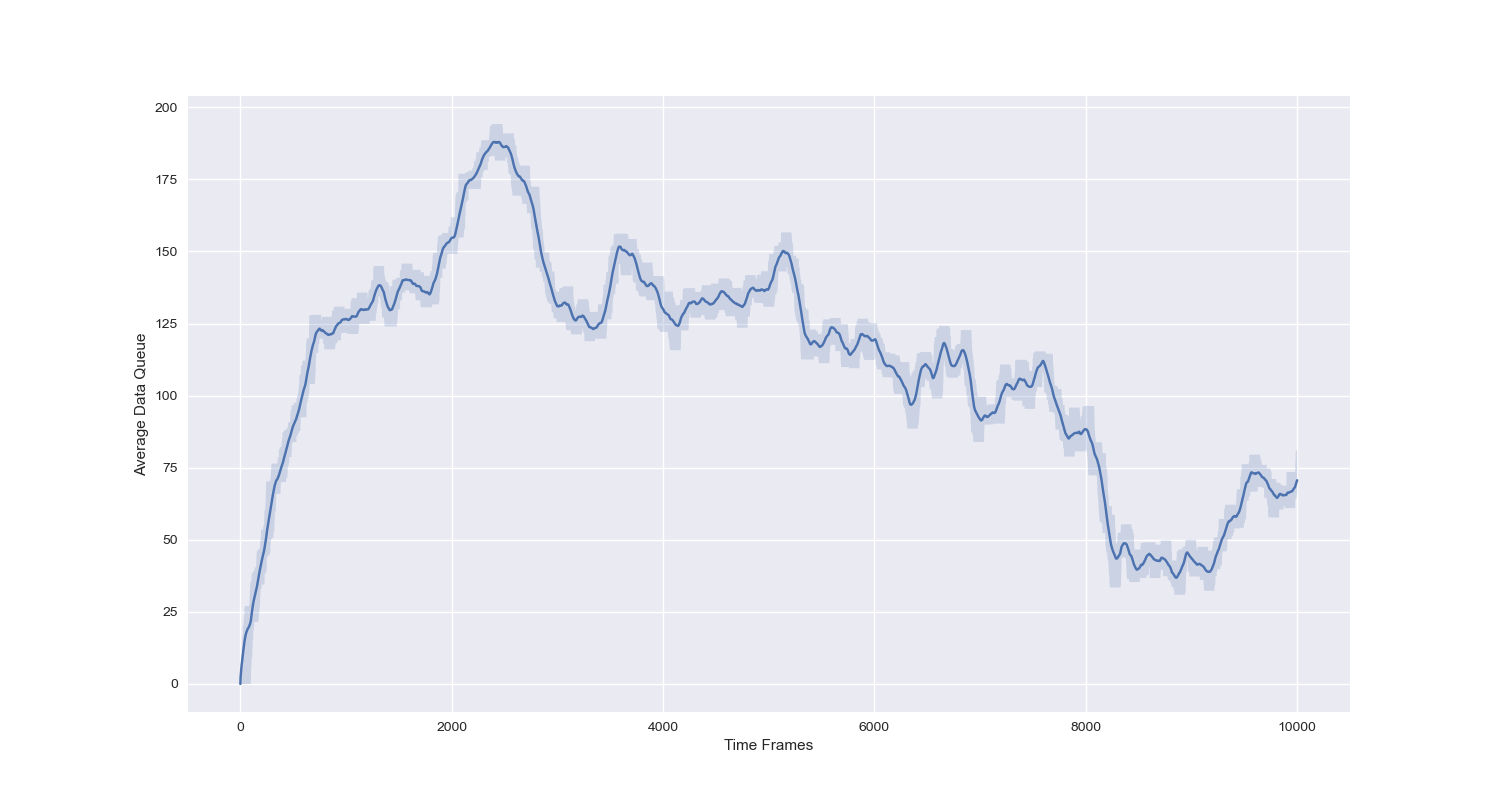

| Training Loss | Average Data Queue |

|---|---|

|

|

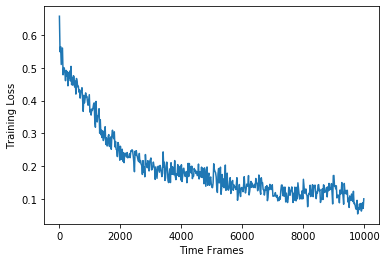

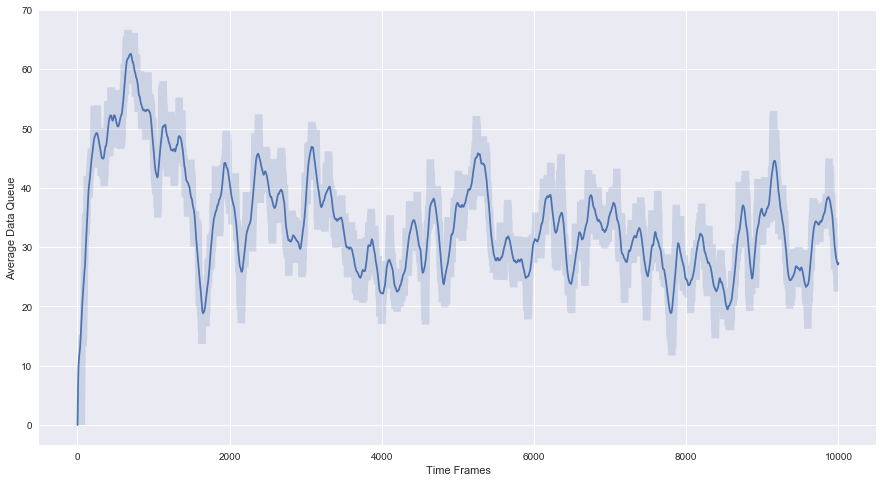

- For LyDROO (CNN), run the file, LyDROOwithTF2conv.py. Please make sure that Tensorflow 2 has been successfully installed before execution.

| Training Loss | Average Data Queue |

|---|---|

|

|

- Remarks:

- You may arrive at similar but different results since both wireless channels and data arrivals are randomly generated on lines 130-139 of LyDROO.py or LyDROOwithTF2conv.py.

- LyDROO (DNN) requires more training frames before convegence, e.g., increase the time frame variable 'n' from 10000 to 20000 on line 65 of LyDROO.py.

- In general, LyDROO (CNN) converges much faster than LyDROO (DNN).