This repository contains the accompanying code for "Deep Virtual Markers for Articulated 3D Shapes" (ICCV'21).

- 2021-08-16: The first version of Deep Virtual Markers is published.

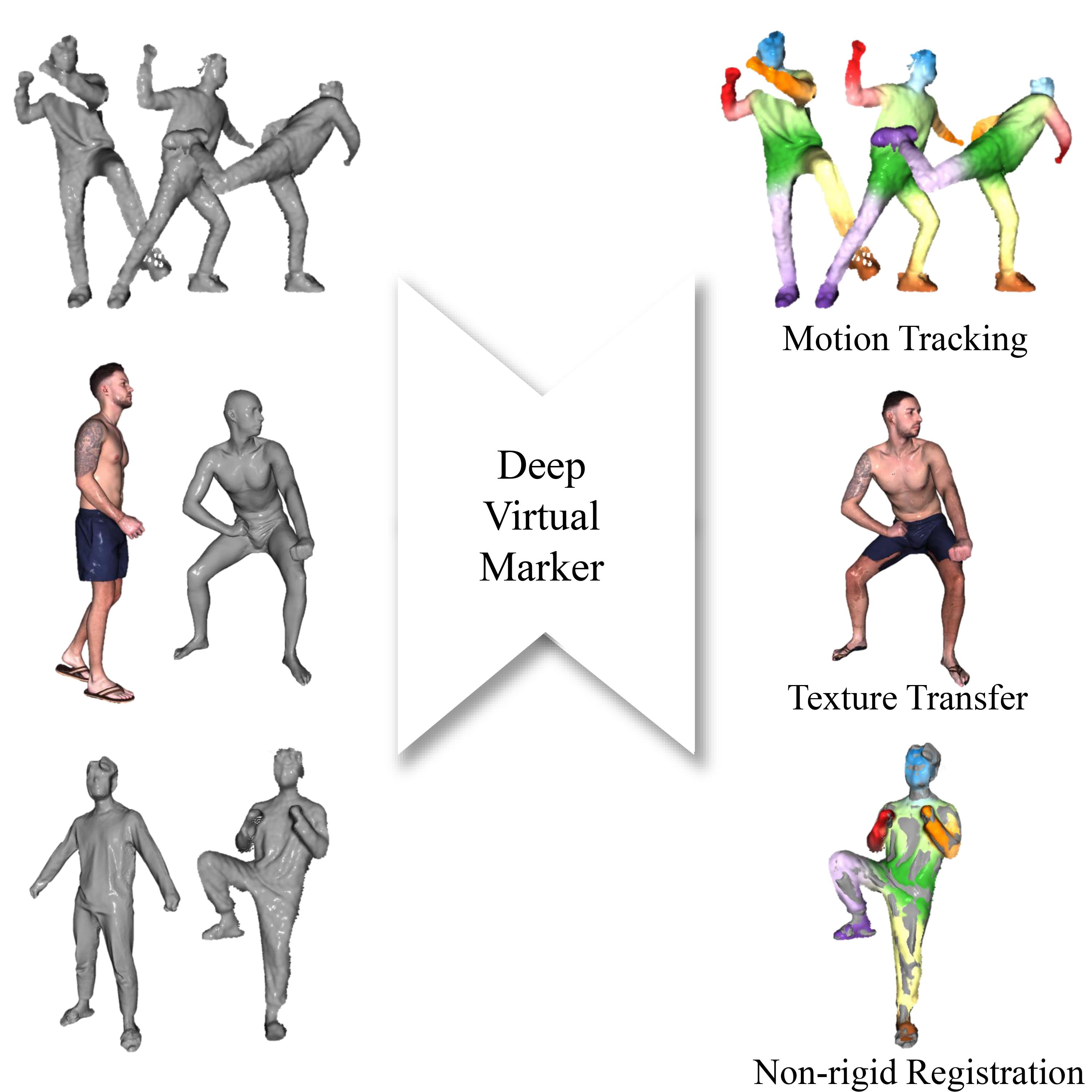

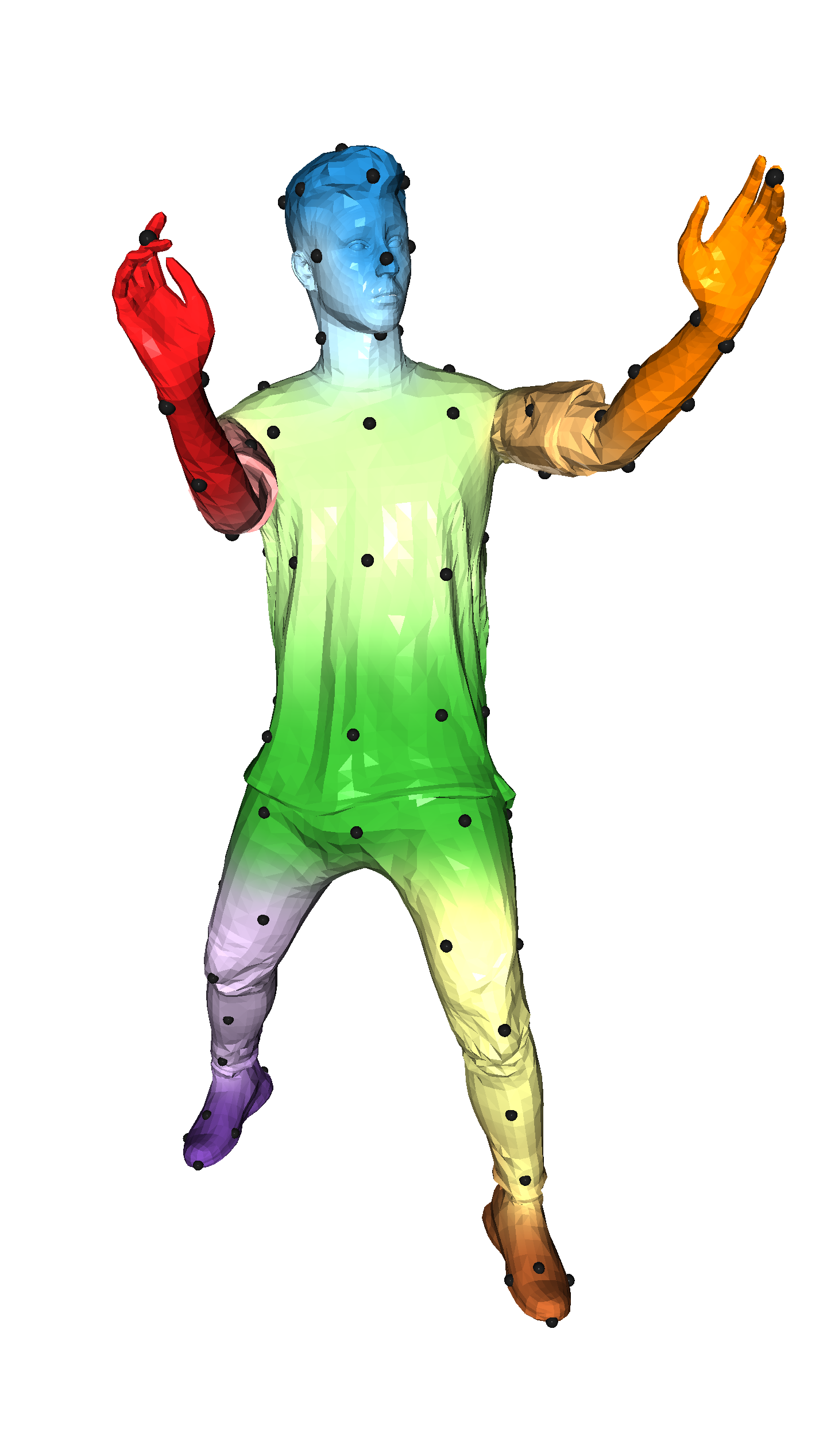

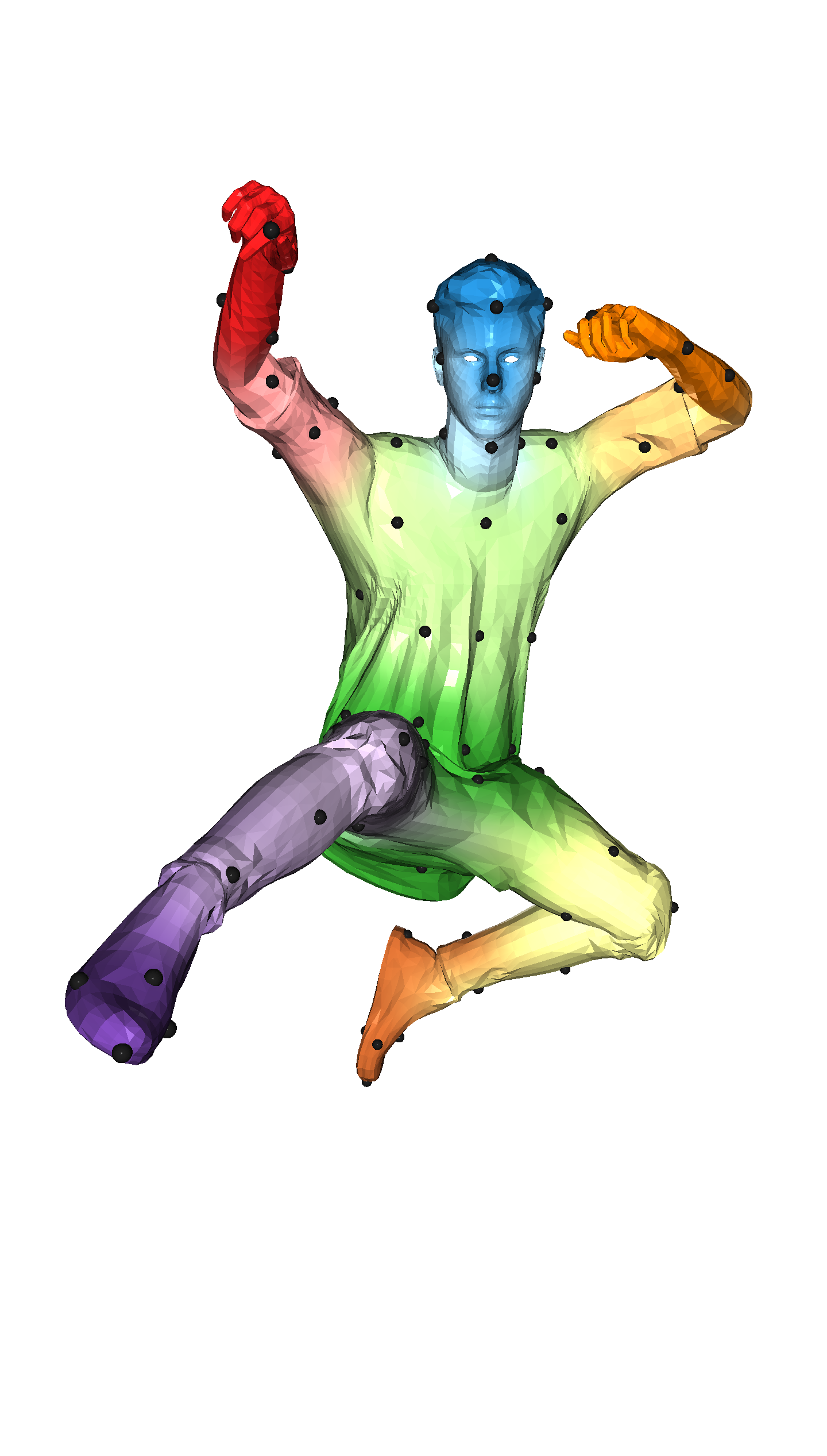

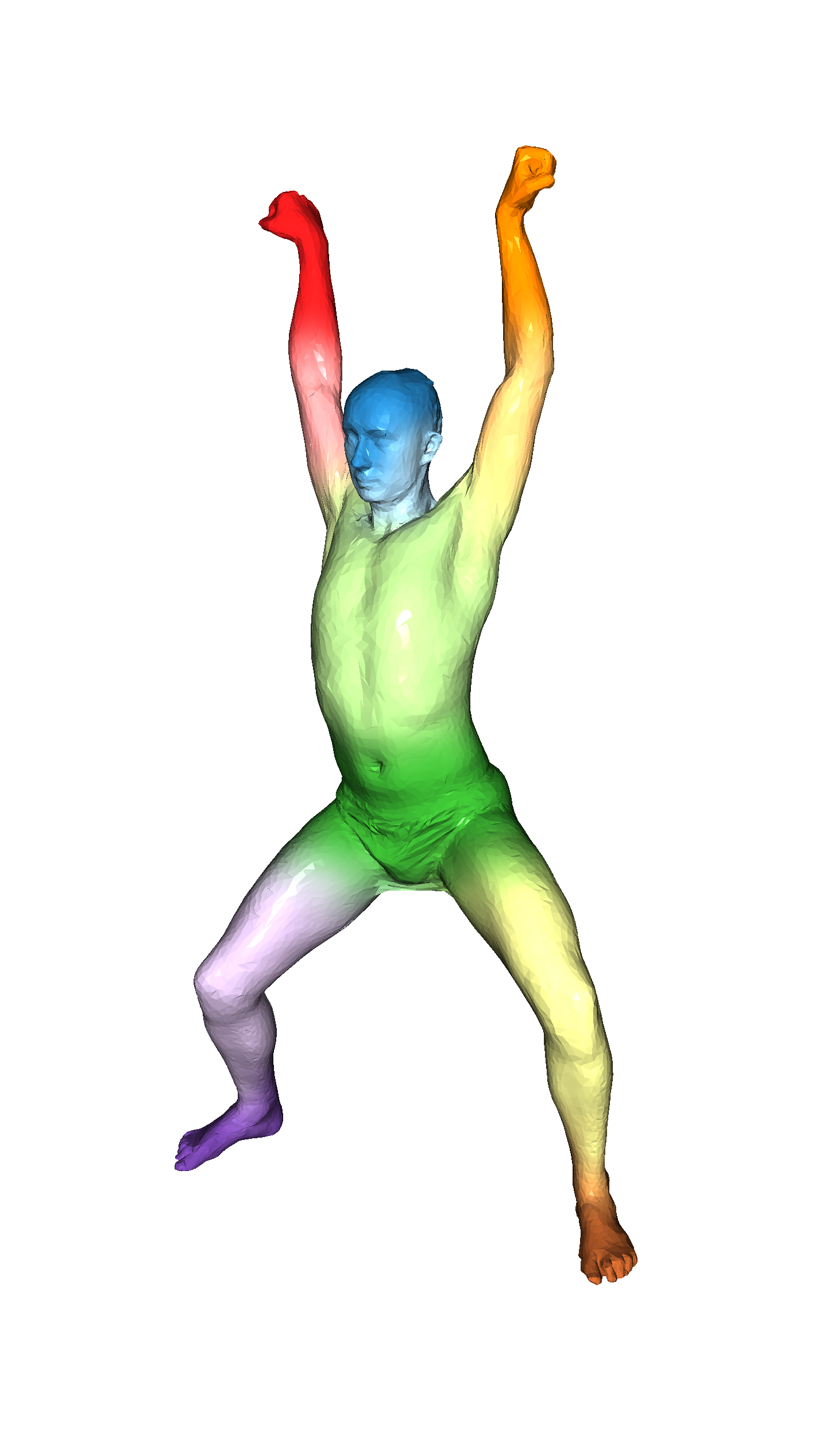

We propose deep virtual markers, a framework for estimating dense and accurate positional information for various types of 3D data. The framework maps the 3D points of articulated models, such as humans, to virtual marker labels. To realize it, we adopt a sparse convolutional neural network that classifies the 3D points of an articulated model into virtual marker labels. We propose using soft labels, derived from geodesic distance, so that the classifier learns rich and dense interclass relationships. To measure the localization accuracy of the virtual markers, we evaluate on the FAUST challenge, where our results outperform the state of the art. We also observe outstanding performance in generalizability tests, on unseen data, and across different 3D data types (meshes and depth maps). We show additional applications of the estimated virtual markers, such as non-rigid registration, texture transfer, and real-time dense marker prediction from depth maps.
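The soft-label idea can be illustrated with a minimal sketch. It assumes the geodesic distances from each point to each marker are already computed, and it uses a Gaussian kernel with a hypothetical bandwidth `sigma` to turn distances into label distributions. The kernel, the bandwidth value, and the function names are assumptions for illustration; the exact formulation used in the paper is not stated in this README.

```python
import numpy as np


def soft_labels_from_geodesic(dist, sigma=0.1):
    """Turn geodesic distances into soft labels (illustrative, not the paper's formula).

    dist: (N, K) float array of geodesic distances from N points to K markers.
    Returns an (N, K) array whose rows are probability distributions.
    """
    # Gaussian kernel (an assumption): closer markers get larger weights.
    logits = -(dist ** 2) / (2.0 * sigma ** 2)
    # Subtract the row maximum for numerical stability before exponentiating.
    logits = logits - logits.max(axis=1, keepdims=True)
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


def soft_cross_entropy(logits, targets):
    """Mean cross-entropy between predicted logits and soft target distributions."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(targets * log_probs).sum(axis=1).mean())


# Two points, three markers; distances are made-up example values.
example_dist = np.array([[0.0, 0.2, 0.9],
                         [0.5, 0.1, 0.4]])
example_labels = soft_labels_from_geodesic(example_dist, sigma=0.2)
```

Unlike one-hot labels, each row spreads probability over nearby markers, so a prediction that lands on a geodesically close marker is penalized less than one that lands far away.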

- Ubuntu 18.04 or higher

- CUDA 10.2 or higher

- PyTorch 1.6 or higher

- Python 3.8 or higher

- GCC 6 or higher

- We recommend using docker

docker pull min00001/cuglmink
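For a setup without Docker, the Python, PyTorch, and CUDA requirements listed above can be checked with a short script. This is a sketch rather than part of the repository: the helper names are hypothetical, and it does not check the Ubuntu or GCC versions.

```python
import platform
import re


def parse_version(text):
    """Return the leading numeric components of a version string as a tuple."""
    # "1.6.0+cu102" -> (1, 6, 0); anything after the numeric prefix is ignored.
    match = re.match(r"\d+(?:\.\d+)*", text)
    return tuple(int(part) for part in match.group(0).split(".")) if match else ()


def meets(found, minimum):
    """True if version string `found` is at least `minimum`."""
    return parse_version(found) >= parse_version(minimum)


def check_environment():
    """Compare the local environment against the minimum versions in this README."""
    report = {"python": meets(platform.python_version(), "3.8")}
    try:
        import torch
    except ImportError:
        # Without PyTorch, neither the PyTorch nor the CUDA requirement can be met.
        report["pytorch"] = False
        report["cuda"] = False
        return report
    report["pytorch"] = meets(torch.__version__, "1.6")
    cuda = torch.version.cuda  # None for CPU-only PyTorch builds
    report["cuda"] = cuda is not None and meets(cuda, "10.2")
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing or too old'}")
```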

Get the sample data and pre-trained weights from here

docker pull min00001/cuglmink

./run_dvm_test.sh

This software is being made available under the terms in the LICENSE file.

Any exemption to these terms requires a license from the Pohang University of Science and Technology.

@inproceedings{kim2021deep,

title={Deep Virtual Markers for Articulated 3D Shapes},

author={Hyomin Kim and Jungeon Kim and Jaewon Kam and Jaesik Park and Seungyong Lee},

booktitle={ICCV},

year={2021}

}

NOTE: Our implementation is based on the "4D-SpatioTemporal ConvNets" repository.