PyTorch implementation and pretrained models for DINO. For details, see Emerging Properties in Self-Supervised Vision Transformers.

[blogpost] [arXiv]

You can choose to download only the weights of the pretrained backbone used for downstream tasks, or the full checkpoint which contains backbone and projection head weights for both student and teacher networks. We also provide the training and evaluation logs.

| arch | params | k-nn | linear | download | | | | |
|---|---|---|---|---|---|---|---|---|
| DeiT-S/16 | 21M | 74.5% | 77.0% | backbone only | full checkpoint | args | logs | eval logs |
| DeiT-S/8 | 21M | 78.3% | 79.7% | backbone only | full checkpoint | args | logs | eval logs |
| ViT-B/16 | 85M | 76.1% | 78.2% | backbone only | full checkpoint | args | logs | eval logs |
| ViT-B/8 | 85M | 77.4% | 80.1% | backbone only | full checkpoint | args | logs | eval logs |
| ResNet-50 | 23M | 67.5% | 75.3% | backbone only | full checkpoint | args | logs | eval logs |
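If you download a full checkpoint but only need the backbone, the weights have to be separated from the projection head. The following is a minimal sketch of that step, not the repository's loader. It assumes the checkpoint is a dictionary with `student` and `teacher` entries, that parameter names may carry a `module.` prefix from distributed training, and that backbone parameters are stored under a `backbone.` prefix. The function name `extract_backbone_weights` and the toy key names are hypothetical.

```python
def extract_backbone_weights(checkpoint, checkpoint_key="teacher"):
    """Return only the backbone entries of a full checkpoint dictionary."""
    # Select the student or teacher sub-dictionary when it is present.
    state = checkpoint.get(checkpoint_key, checkpoint)
    backbone = {}
    for name, value in state.items():
        # Assumption: distributed training may add a "module." prefix.
        if name.startswith("module."):
            name = name[len("module."):]
        # Assumption: backbone parameters are stored under "backbone.";
        # everything else (e.g. the projection head) is dropped.
        if name.startswith("backbone."):
            backbone[name[len("backbone."):]] = value
    return backbone


# Toy stand-in for torch.load("/path/to/checkpoint.pth", map_location="cpu").
toy_checkpoint = {
    "student": {"module.backbone.cls_token": 1, "module.head.last_layer.weight": 2},
    "teacher": {"backbone.cls_token": 3, "head.last_layer.weight": 4},
}
teacher_backbone = extract_backbone_weights(toy_checkpoint, "teacher")
student_backbone = extract_backbone_weights(toy_checkpoint, "student")
```

With a real checkpoint, the returned dictionary could then be passed to the model's `load_state_dict`, provided the key layout matches the assumptions above.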

The pretrained models are available on PyTorch Hub.

import torch

deits16 = torch.hub.load('facebookresearch/dino', 'dino_deits16')

deits8 = torch.hub.load('facebookresearch/dino', 'dino_deits8')

vitb16 = torch.hub.load('facebookresearch/dino', 'dino_vitb16')

vitb8 = torch.hub.load('facebookresearch/dino', 'dino_vitb8')

resnet50 = torch.hub.load('facebookresearch/dino', 'dino_resnet50')

Please install PyTorch and download the ImageNet dataset. This codebase has been developed with Python version 3.6, PyTorch version 1.7.1, CUDA 11.0 and torchvision 0.8.2. The exact arguments to reproduce the models presented in our paper can be found in the args column of the pretrained models section. For a glimpse at the full documentation of DINO training please run:

python main_dino.py --help

Run DINO with DeiT-small network on a single node with 8 GPUs for 100 epochs with the following command. Training time is 1.75 days and the resulting checkpoint should reach ~69.3% on k-NN eval and ~73.8% on linear eval. We will shortly provide training and linear evaluation logs for this run to help reproducibility.

python -m torch.distributed.launch --nproc_per_node=8 main_dino.py --arch deit_small --data_path /path/to/imagenet/train --output_dir /path/to/saving_dir

We use Slurm and submitit (pip install submitit). To train on 2 nodes with 8 GPUs each (total 16 GPUs):

python run_with_submitit.py --nodes 2 --ngpus 8 --arch deit_small --data_path /path/to/imagenet/train --output_dir /path/to/saving_dir

DINO with ViT-base network.

python run_with_submitit.py --nodes 2 --ngpus 8 --use_volta32 --arch vit_base --data_path /path/to/imagenet/train --output_dir /path/to/saving_dir

You can improve the performance of the vanilla run by:

- training for more epochs: `--epochs 300`,
- increasing the teacher temperature: `--teacher_temp 0.07 --warmup_teacher_temp_epochs 30`,
- removing last layer normalization (only safe with `--arch deit_small`): `--norm_last_layer false`.

Full command.

python run_with_submitit.py --arch deit_small --epochs 300 --teacher_temp 0.07 --warmup_teacher_temp_epochs 30 --norm_last_layer false --data_path /path/to/imagenet/train --output_dir /path/to/saving_dir

The resulting pretrained model should reach ~73.4% on k-NN eval and ~76.1% on linear eval. Training time is 2.6 days with 16 GPUs. We will shortly provide training and linear evaluation logs for this run to help reproducibility.
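To make the effect of `--teacher_temp 0.07 --warmup_teacher_temp_epochs 30` concrete, here is a small sketch of a per-epoch teacher temperature schedule with a linear warmup followed by a constant value. It is an illustration under stated assumptions, not the code in `main_dino.py`: the starting temperature of 0.04 is assumed, and the function name `teacher_temp_schedule` is hypothetical.

```python
def teacher_temp_schedule(teacher_temp, warmup_epochs, total_epochs, warmup_start=0.04):
    """Per-epoch teacher temperature: linear warmup, then constant."""
    schedule = []
    for epoch in range(total_epochs):
        if epoch < warmup_epochs and warmup_epochs > 1:
            # Linear interpolation from warmup_start (assumed value) to teacher_temp.
            frac = epoch / (warmup_epochs - 1)
            schedule.append(warmup_start + frac * (teacher_temp - warmup_start))
        else:
            # After the warmup the temperature stays at its final value.
            schedule.append(teacher_temp)
    return schedule


temps = teacher_temp_schedule(teacher_temp=0.07, warmup_epochs=30, total_epochs=300)
```

Under these assumptions the temperature rises over the first 30 epochs and then stays at 0.07 for the remaining 270.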

This code also works for training DINO on convolutional networks such as ResNet-50. We highly recommend adapting some optimization arguments in this case. For example, here is a command to train DINO on ResNet-50 on a single node with 8 GPUs for 100 epochs:

python -m torch.distributed.launch --nproc_per_node=8 main_dino.py --arch resnet50 --optimizer sgd --weight_decay 1e-4 --weight_decay_end 1e-4 --global_crops_scale 0.14 1 --local_crops_scale 0.05 0.14 --data_path /path/to/imagenet/train --output_dir /path/to/saving_dir

To evaluate a simple k-NN classifier with a single GPU on a pre-trained model, run:

python -m torch.distributed.launch --nproc_per_node=1 eval_knn.py --data_path /path/to/imagenet

If you do not specify --pretrained_weights, the DINO reference weights are used by default. To evaluate checkpoints from a run of your own instead, you can run for example:

python -m torch.distributed.launch --nproc_per_node=1 eval_knn.py --pretrained_weights /path/to/checkpoint.pth --checkpoint_key teacher --data_path /path/to/imagenet
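For readers unfamiliar with k-NN evaluation on frozen features, the sketch below shows one common variant: a similarity-weighted vote among the k nearest training features. It is a toy, pure-Python illustration and not the logic of `eval_knn.py`. The cosine similarity, the exponential weighting, the defaults `k=20` and `temperature=0.07`, and the names `l2_normalize` and `knn_predict` are all assumptions made for the example.

```python
import math
from collections import defaultdict


def l2_normalize(vec):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def knn_predict(query, train_feats, train_labels, k=20, temperature=0.07):
    """Predict a label by a similarity-weighted vote of the k nearest features."""
    q = l2_normalize(query)
    sims = []
    for feat, label in zip(train_feats, train_labels):
        f = l2_normalize(feat)
        # Cosine similarity between the query and one training feature.
        sims.append((sum(a * b for a, b in zip(q, f)), label))
    sims.sort(key=lambda pair: pair[0], reverse=True)
    votes = defaultdict(float)
    for sim, label in sims[:k]:
        # Closer neighbours get exponentially larger weights (assumed weighting).
        votes[label] += math.exp(sim / temperature)
    return max(votes, key=votes.get)


# Toy 2-D "features" standing in for frozen backbone outputs.
train_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
train_labels = ["cat", "cat", "dog", "dog"]
prediction = knn_predict([0.8, 0.2], train_feats, train_labels, k=3)
```

No classifier weights are trained here, which is why k-NN accuracy is a convenient check of feature quality.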

To train a supervised linear classifier on frozen weights on a single node with 8 GPUs, run:

python -m torch.distributed.launch --nproc_per_node=8 eval_linear.py --data_path /path/to/imagenet

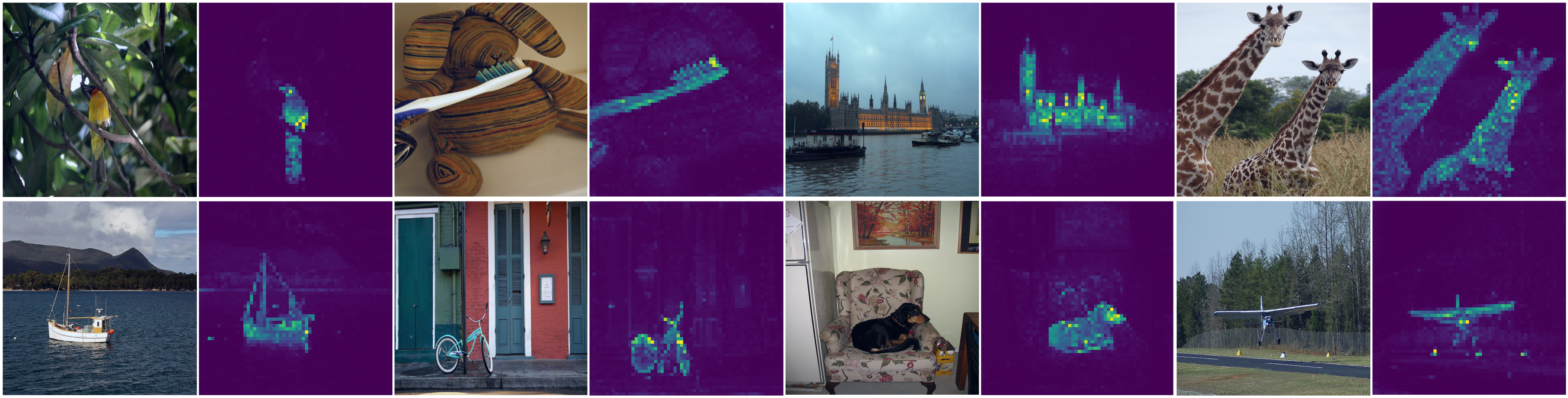

You can look at the self-attention of the [CLS] token on the different heads of the last layer by running:

python visualize_attention.py
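As a rough illustration of what such a visualization involves, the sketch below turns the [CLS] row of a last-layer attention tensor into one 2-D map per head. It is not the code of `visualize_attention.py`. It assumes the attention is given as nested lists shaped `[heads][tokens][tokens]` with the [CLS] token at index 0 followed by the patch tokens in row-major order, and the function name `cls_attention_maps` is hypothetical. For a 224x224 input and 16x16 patches, the grid would be 14x14.

```python
def cls_attention_maps(attention, grid_h, grid_w):
    """Reshape the [CLS]-to-patch attention of each head into a 2-D grid."""
    maps = []
    for head in attention:
        # Row 0 is the [CLS] query; drop column 0 ([CLS] attending to itself).
        cls_to_patches = head[0][1:]
        if len(cls_to_patches) != grid_h * grid_w:
            raise ValueError("number of patch tokens does not match the grid size")
        # Assumption: patch tokens are ordered row by row.
        rows = [cls_to_patches[r * grid_w:(r + 1) * grid_w] for r in range(grid_h)]
        maps.append(rows)
    return maps


# Toy input: 1 head, a 2x2 patch grid, hence 5 tokens including [CLS].
toy_attention = [[[0.2, 0.1, 0.3, 0.15, 0.25]] + [[0.2] * 5 for _ in range(4)]]
maps = cls_attention_maps(toy_attention, grid_h=2, grid_w=2)
```

Each resulting grid can be upsampled to the image resolution and overlaid on the input to see where a given head attends.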

See the LICENSE file for more details.

If you find this repository useful, please consider giving a star ⭐ and citation 🦖:

@article{caron2021emerging,
  title={Emerging Properties in Self-Supervised Vision Transformers},
  author={Caron, Mathilde and Touvron, Hugo and Misra, Ishan and J\'egou, Herv\'e and Mairal, Julien and Bojanowski, Piotr and Joulin, Armand},
  journal={arXiv preprint arXiv:2104.14294},
  year={2021}
}