Real-time Photorealistic Dynamic Scene Representation and Rendering with 4D Gaussian Splatting

Zeyu Yang, Hongye Yang, Zijie Pan, Li Zhang

Fudan University

ICLR 2024

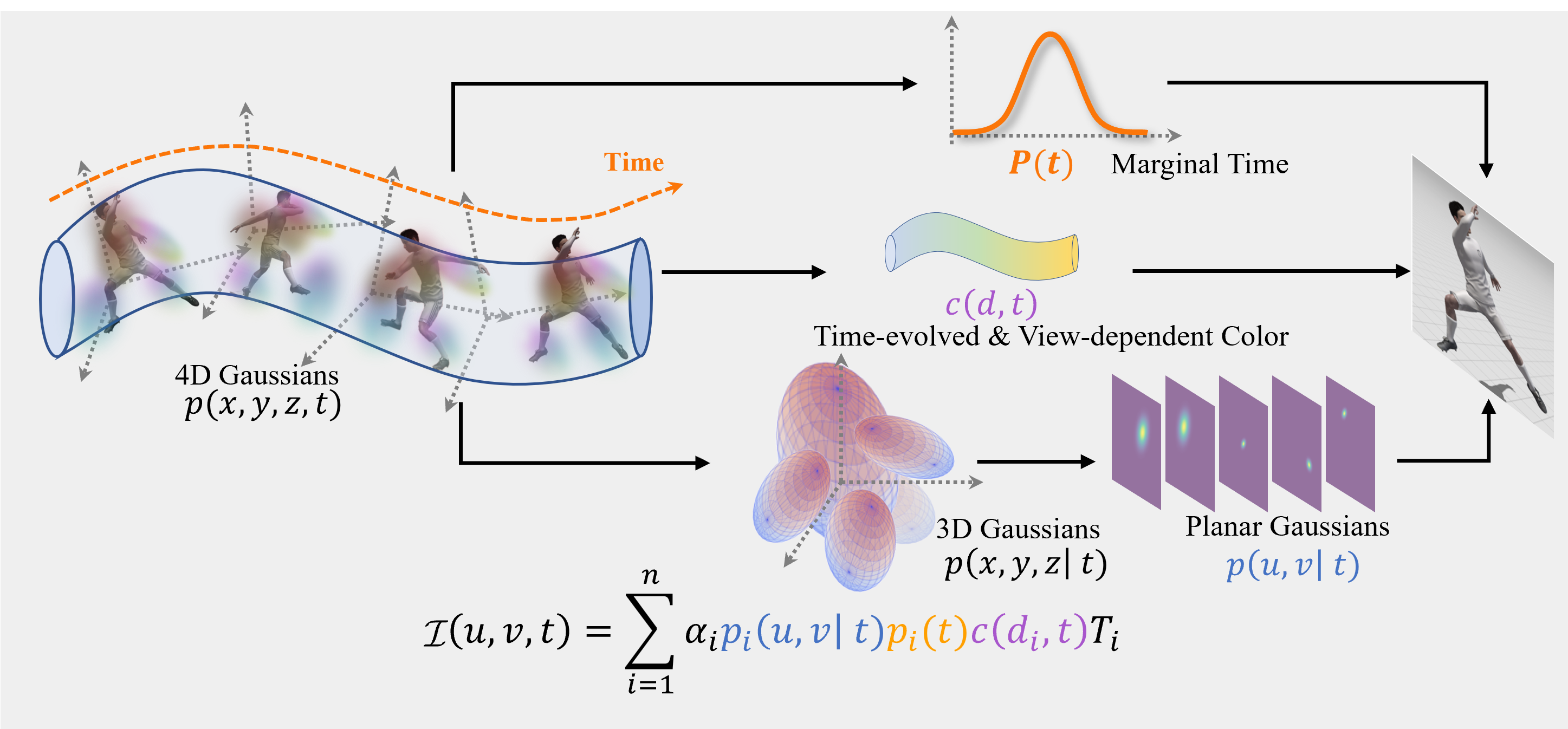

This repository is the official implementation of "Real-time Photorealistic Dynamic Scene Representation and Rendering with 4D Gaussian Splatting". In this paper, we propose coherent integrated modeling of the space and time dimensions for dynamic scenes by formulating unbiased 4D Gaussian primitives along with a dedicated rendering pipeline.
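To make the idea of a 4D Gaussian primitive concrete, the sketch below slices a single Gaussian over (x, y, z, t) at a query time, using the standard conditioning identities for multivariate Gaussians. It is an illustrative pure-Python sketch, not the repository's rendering code; the function name `condition_on_time`, the example values, and the choice of an unnormalized temporal weight are assumptions made for illustration.

```python
import math


def condition_on_time(mean, cov, t):
    """Slice a 4D Gaussian over (x, y, z, t) at time t.

    mean: four floats (mu_x, mu_y, mu_z, mu_t).
    cov:  symmetric 4x4 nested list with cov[3][3] > 0.
    Returns the conditional 3D mean, the conditional 3D covariance,
    and an unnormalized temporal weight exp(-0.5 * (t - mu_t)^2 / var_t).
    """
    var_t = cov[3][3]
    dt = t - mean[3]
    cross = [cov[i][3] for i in range(3)]  # space-time covariance column

    # Conditional mean: mu_xyz + Sigma_xyz,t / Sigma_t,t * (t - mu_t)
    mean_3d = [mean[i] + cross[i] / var_t * dt for i in range(3)]
    # Conditional covariance: Sigma_xyz - Sigma_xyz,t * Sigma_t,xyz / Sigma_t,t
    cov_3d = [
        [cov[i][j] - cross[i] * cross[j] / var_t for j in range(3)]
        for i in range(3)
    ]
    weight = math.exp(-0.5 * dt * dt / var_t)
    return mean_3d, cov_3d, weight


# Hypothetical example: a Gaussian whose x position is correlated with time.
mean = [0.0, 0.0, 0.0, 0.5]
cov = [
    [1.0, 0.0, 0.0, 0.5],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.5, 0.0, 0.0, 1.0],
]
mean_3d, cov_3d, weight = condition_on_time(mean, cov, t=1.5)
print(mean_3d, cov_3d[0][0], weight)
```

In this example the conditional mean shifts along x as the query time moves away from the temporal mean, and the temporal weight decays, so a primitive contributes mainly near its own time.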

The hardware and software requirements are the same as those of 3D Gaussian Splatting, on which this code is built. To set up the environment, run the following commands:

git clone https://github.com/fudan-zvg/4d-gaussian-splatting

cd 4d-gaussian-splatting

conda env create --file environment.yml

conda activate 4dgs

DyNeRF dataset:

Download the Neural 3D Video dataset and extract each scene to data/N3V. After that, preprocess the raw video by executing:

python scripts/n3v2blender.py data/N3V/$scene_name

DNeRF dataset:

The dataset can be downloaded from Google Drive or Dropbox. Then unzip each scene into data/dnerf.
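Before training, it can help to confirm that both dataset roots are laid out as described and to preprocess every Neural 3D Video scene in one pass. The following is a minimal sketch, assuming it is run from the repository root; the helper names `list_scenes`, `preprocess_commands`, and `preprocess_all` are hypothetical and not part of the repository.

```python
import subprocess
import sys
from pathlib import Path


def list_scenes(root):
    """Return the sorted names of scene directories directly under root."""
    root = Path(root)
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.iterdir() if p.is_dir())


def preprocess_commands(n3v_root="data/N3V", script="scripts/n3v2blender.py"):
    """Build one preprocessing command per Neural 3D Video scene."""
    return [
        [sys.executable, script, str(Path(n3v_root) / scene)]
        for scene in list_scenes(n3v_root)
    ]


def preprocess_all(n3v_root="data/N3V"):
    """Run the preprocessing script on each scene, stopping on the first failure."""
    for cmd in preprocess_commands(n3v_root):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

Calling `list_scenes("data/dnerf")` shows which DNeRF scenes were unzipped, and `preprocess_all()` applies the preprocessing command to each scene under data/N3V in turn.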

After the installation and data preparation, you can train the model by running:

python train.py --config $config_path
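To train on several scenes back to back, the command can be wrapped in a small launcher. This is a sketch under stated assumptions: it assumes the configuration files are YAML files collected in a single directory of your choosing, and the helper names `training_commands` and `train_all` are hypothetical. Only the `--config` flag shown above is used.

```python
import subprocess
import sys
from pathlib import Path


def training_commands(config_dir, pattern="*.yaml"):
    """Build one `train.py --config <path>` command per matching config file."""
    configs = sorted(Path(config_dir).glob(pattern))
    return [[sys.executable, "train.py", "--config", str(cfg)] for cfg in configs]


def train_all(config_dir):
    """Train on each config in sequence, stopping on the first failure."""
    for cmd in training_commands(config_dir):
        print("Launching:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

Running the jobs sequentially keeps a single GPU from being oversubscribed; `check=True` stops the loop as soon as one run fails so the error is not buried under later output.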


@inproceedings{yang2023gs4d,
  title={Real-time Photorealistic Dynamic Scene Representation and Rendering with 4D Gaussian Splatting},
  author={Yang, Zeyu and Yang, Hongye and Pan, Zijie and Zhang, Li},
  booktitle={International Conference on Learning Representations (ICLR)},
  year={2024}
}