This repository contains the code for PointCMT, introduced in the following paper:

Xu Yan*, Heshen Zhan*, Chaoda Zheng, Jiantao Gao, Ruimao Zhang, Shuguang Cui, Zhen Li*, "Let Images Give You More: Point Cloud Cross-Modal Training for Shape Analysis", NeurIPS 2022 (Spotlight) 😃 [arxiv].

If you find our work useful in your research, please consider citing:

@InProceedings{yan2022let,

title={Let Images Give You More: Point Cloud Cross-Modal Training for Shape Analysis},

author={Xu Yan and Heshen Zhan and Chaoda Zheng and Jiantao Gao and Ruimao Zhang and Shuguang Cui and Zhen Li},

year={2022},

booktitle={NeurIPS}

}

@inproceedings{yan20222dpass,

title={2dpass: 2d priors assisted semantic segmentation on lidar point clouds},

author={Yan, Xu and Gao, Jiantao and Zheng, Chaoda and Zheng, Chao and Zhang, Ruimao and Cui, Shuguang and Li, Zhen},

booktitle={European Conference on Computer Vision},

pages={677--695},

year={2022},

organization={Springer}

}

Our other work on cross-modal semantic segmentation (ECCV 2022) is released HERE.

2022/12/19: The code for ModelNet40 is released 🚀!

2022/11/16: Our paper was selected as a spotlight at NeurIPS 2022!

The latest code is tested with CUDA 10.1, PyTorch 1.4.0 and Python 3.7.5. Please use the same environment as ours!
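Once the environment is set up, a quick check along the following lines could confirm that the installed versions match the ones listed above. This is only a sketch: `EXPECTED`, `version_mismatches` and `detect_versions` are hypothetical helpers and are not part of this repository.

```python
import sys

# Versions quoted in this README; the helpers below are illustrative only.
EXPECTED = {"python": "3.7.5", "torch": "1.4.0", "cuda": "10.1"}


def version_mismatches(found, expected=EXPECTED):
    """Return {name: (found, expected)} for every entry that differs.

    Only as many leading components as `expected` lists are compared, and a
    local build tag such as "+cu101" is ignored.
    """
    mismatches = {}
    for name, want in expected.items():
        have = found.get(name)
        parts = want.split(".")
        have_parts = (have or "").split("+")[0].split(".")[:len(parts)]
        if have_parts != parts:
            mismatches[name] = (have, want)
    return mismatches


def detect_versions():
    """Collect installed versions; torch entries are skipped if torch is missing."""
    found = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    try:
        import torch  # only importable once the environment is installed
        found["torch"] = torch.__version__
        found["cuda"] = torch.version.cuda  # None on CPU-only builds
    except ImportError:
        pass
    return found
```

Calling `version_mismatches(detect_versions())` returns an empty dictionary when everything matches, and otherwise lists each differing component together with the expected value.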

conda install pytorch==1.4.0 cudatoolkit=10.1 -c pytorch

pip install -r requirements.txt

Compile the library through:

cd emdloss

python setup.py install

cd ../pointnet2/

pip install -e . && cd ..

- Download the aligned point cloud data of ModelNet40 HERE and save it in dataset/ModelNet40/modelnet40_normal_resampled/.
- Download the multi-view dataset HERE and save it in dataset/ModelNet40/ModelNet40_mv_20view/.
- Process the multi-view images and point clouds; the processed data will be saved in dataset/ModelNet40/data/.

cd data

python modelnet40_processor.py

You can also download the processed data directly from HERE.
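Before moving on to training, a small check such as the following could confirm that the directory layout described above is in place. The directory names come from the steps above; the helper `missing_dataset_dirs` is hypothetical and is not shipped with this repository.

```python
from pathlib import Path

# Sub-directories named in the data preparation steps of this README.
EXPECTED_DIRS = [
    "modelnet40_normal_resampled",
    "ModelNet40_mv_20view",
    "data",
]


def missing_dataset_dirs(root="dataset/ModelNet40"):
    """Return the expected sub-directories that do not exist under `root`."""
    root = Path(root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
```

An empty list means all three directories exist. The check does not inspect their contents.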

Train and evaluate the MVCNN model through

# training

python main_mvcnn_modelnet.py --exp_name mvcnn_default

# evaluation

python main_mvcnn_modelnet.py --exp_name mvcnn_default --eval --model_path [CHECKPOINT_PATH]

Note that a large batch size is necessary for MVCNN! If you use a smaller batch size due to memory limitations, you need to increase --accumulation_step during training. After training, the accuracy of MVCNN should be around 97%.
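To illustrate why raising the accumulation step can stand in for a larger batch, the following generic sketch compares the gradient of one large batch with the averaged gradients of several equal micro-batches. It uses a toy one-parameter model in plain Python. How this repository's training loop applies --accumulation_step is not shown in this README, so the function names and the toy data are assumptions.

```python
def mse_grad(w, batch):
    """Gradient of the mean squared error of y = w * x with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, batch, accumulation_step):
    """Sum the gradients of `accumulation_step` equal micro-batches.

    Each micro-batch gradient is scaled by 1 / accumulation_step, so the
    sum matches the gradient of the full batch.
    """
    size = len(batch) // accumulation_step
    assert size * accumulation_step == len(batch), "batch must split evenly"
    total = 0.0
    for i in range(accumulation_step):
        micro = batch[i * size:(i + 1) * size]
        total += mse_grad(w, micro) / accumulation_step
    return total


# Toy (x, y) pairs, made up for this illustration.
data = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5), (4.0, 8.0)]
full = mse_grad(0.5, data)
accum = accumulated_grad(0.5, data, accumulation_step=2)
```

Here `full` and `accum` agree up to floating-point error. In a real network the match is only approximate when layers such as batch normalization compute statistics per micro-batch.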

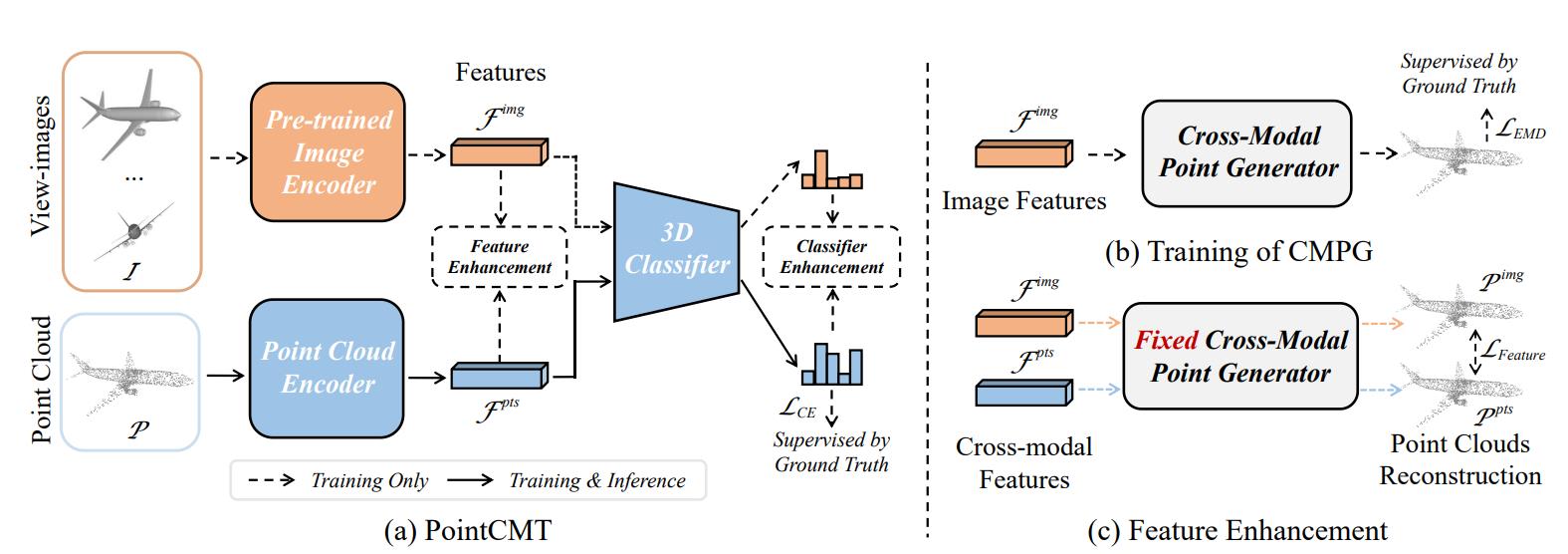

Train the cross-modal point generator (CMPG) through

python train_cmpg.py --exp_name cmpg_default --teacher_path [MVCNN_CHECKPOINT]

Before conducting cross-modal training, we first generate the MVCNN features offline to speed up training:

python offline_feature_generation.py --model_path [MVCNN_CHECKPOINT]

The features will be saved in dataset/ModelNet40/data/.
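The idea behind generating features offline can be sketched as follows: the teacher is run once per sample, its outputs are written to disk, and later training runs read the stored features instead of calling the teacher again. The function names, the pickle format and the `teacher` callable are all assumptions made for illustration; the actual script may store its features differently.

```python
import pickle


def cache_teacher_features(samples, teacher, cache_path):
    """Run `teacher` once per (sample_id, views) pair and store the outputs."""
    features = {sample_id: teacher(views) for sample_id, views in samples}
    with open(cache_path, "wb") as f:
        pickle.dump(features, f)
    return features


def load_teacher_features(cache_path):
    """Load the stored features; the teacher is not called here."""
    with open(cache_path, "rb") as f:
        return pickle.load(f)
```

With such a cache, the cost of the teacher's forward pass is paid once rather than in every training epoch.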

After that, we train PointNet++ with PointCMT through

python train_pointcmt.py --exp_name pointnet2_pointcmt --cmpg_checkpoint [CMPG_CHECKPOINT]

You can also train the vanilla model with the --no_pointcmt option.
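The four ModelNet40 training stages above depend on each other, so it may help to see them in order. The sketch below only assembles the commands quoted in this README. `pointcmt_commands` is a hypothetical helper, and the checkpoint paths are supplied by the caller because their actual locations are not specified here.

```python
def pointcmt_commands(mvcnn_ckpt, cmpg_ckpt):
    """Return the ModelNet40 training commands from this README, in order."""
    return [
        # 1. Train the multi-view teacher (MVCNN).
        ["python", "main_mvcnn_modelnet.py", "--exp_name", "mvcnn_default"],
        # 2. Train the cross-modal point generator with the MVCNN teacher.
        ["python", "train_cmpg.py", "--exp_name", "cmpg_default",
         "--teacher_path", mvcnn_ckpt],
        # 3. Generate the MVCNN features offline.
        ["python", "offline_feature_generation.py", "--model_path", mvcnn_ckpt],
        # 4. Train PointNet++ with PointCMT.
        ["python", "train_pointcmt.py", "--exp_name", "pointnet2_pointcmt",
         "--cmpg_checkpoint", cmpg_ckpt],
    ]
```

Each entry is an argument list that can be passed to `subprocess.run(cmd, check=True)` once the checkpoint from the preceding stage exists.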

We conduct a voting test on the trained model:

python voting_test.py --checkpoint [POINTNET2_CHECKPOINT]

You can also use our pre-trained models from pretrained/modelnet40/, including MVCNN, CMPG and PointNet++. More models and code for ScanObjectNN will be released very soon!
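For readers unfamiliar with the voting test mentioned above, a common form of it in point cloud classification averages the predictions over several randomly augmented copies of the same input. The sketch below assumes random isotropic scaling, ten votes and a 0.8 to 1.2 scale range. These choices, along with the names `vote` and `softmax`, are assumptions and may differ from what voting_test.py actually does.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def vote(points, model, num_votes=10, scale_range=(0.8, 1.2), seed=0):
    """Average class probabilities over randomly scaled copies of `points`.

    `model` is any callable mapping a list of (x, y, z) tuples to logits.
    Returns the predicted class index and the averaged probabilities.
    """
    rng = random.Random(seed)
    summed = None
    for _ in range(num_votes):
        s = rng.uniform(*scale_range)
        scaled = [(x * s, y * s, z * s) for x, y, z in points]
        probs = softmax(model(scaled))
        summed = probs if summed is None else [a + b for a, b in zip(summed, probs)]
    averaged = [v / num_votes for v in summed]
    label = max(range(len(averaged)), key=averaged.__getitem__)
    return label, averaged
```

Averaging probabilities rather than taking a single prediction makes the result less sensitive to any one augmentation.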

| Model | Accuracy |
|---|---|
| MVCNN | 97.0% |
| PointNet++ | 94.6% |

The code is built on PointNet++, SimpleView and EMD (Earth Mover's Distance).

This repository is released under the MIT License (see the LICENSE file for details).