- 2024-05-16: Our paper has been accepted to the ACL 2024 Main Conference! 🎉

- 2024-02-16: Submission version.

- 2024-01-20: Ongoing work.

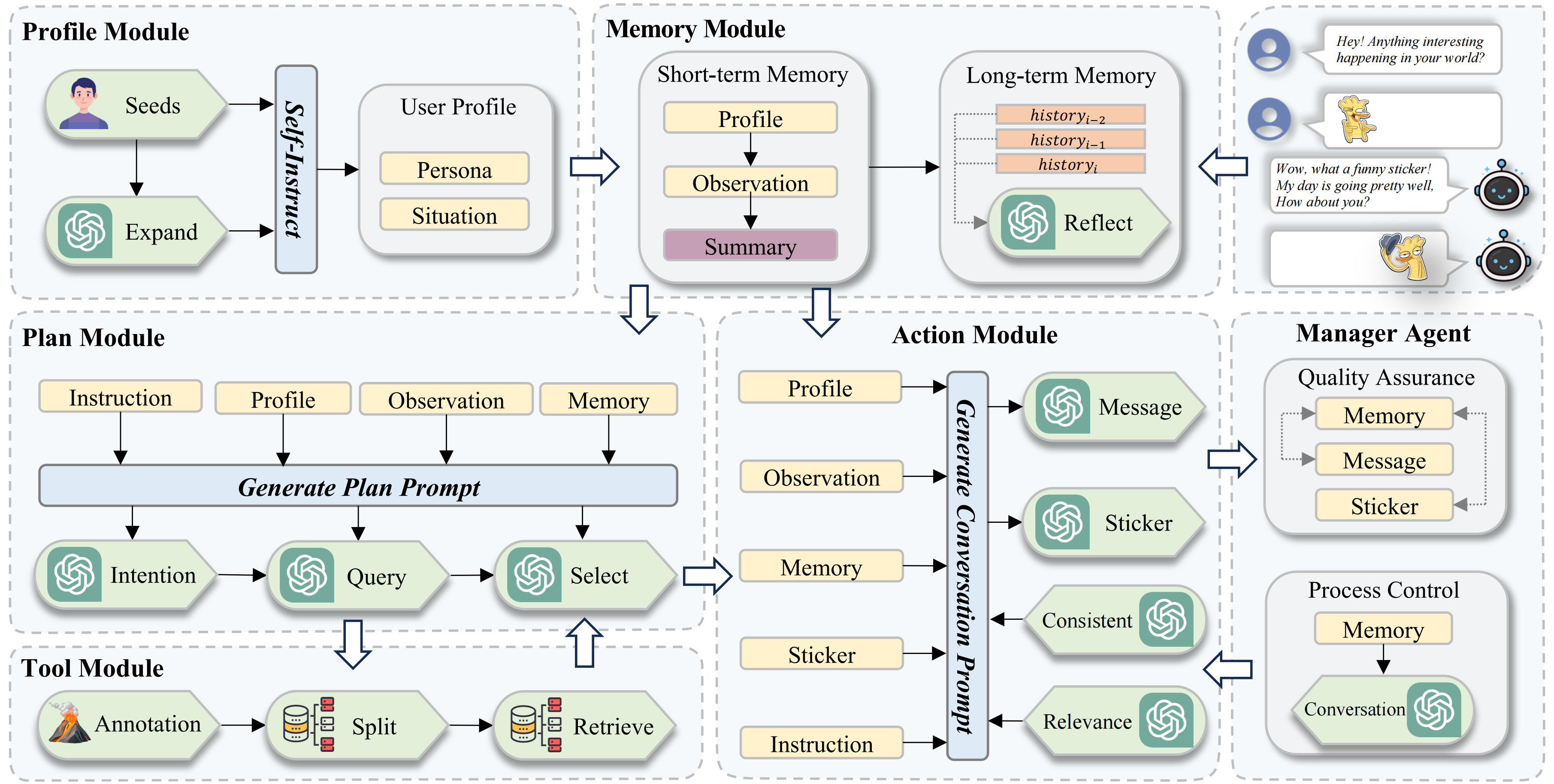

Overview of Agent4SC.

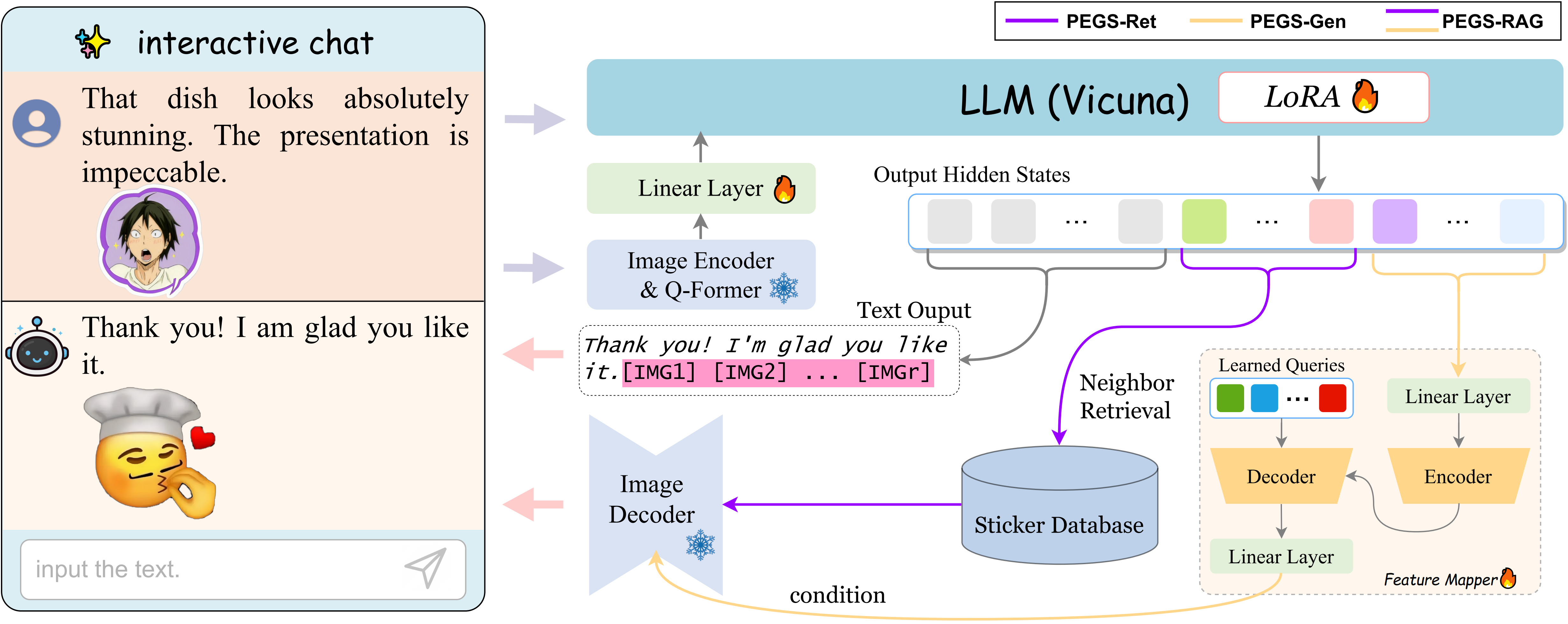

Architecture of the PEGS framework.

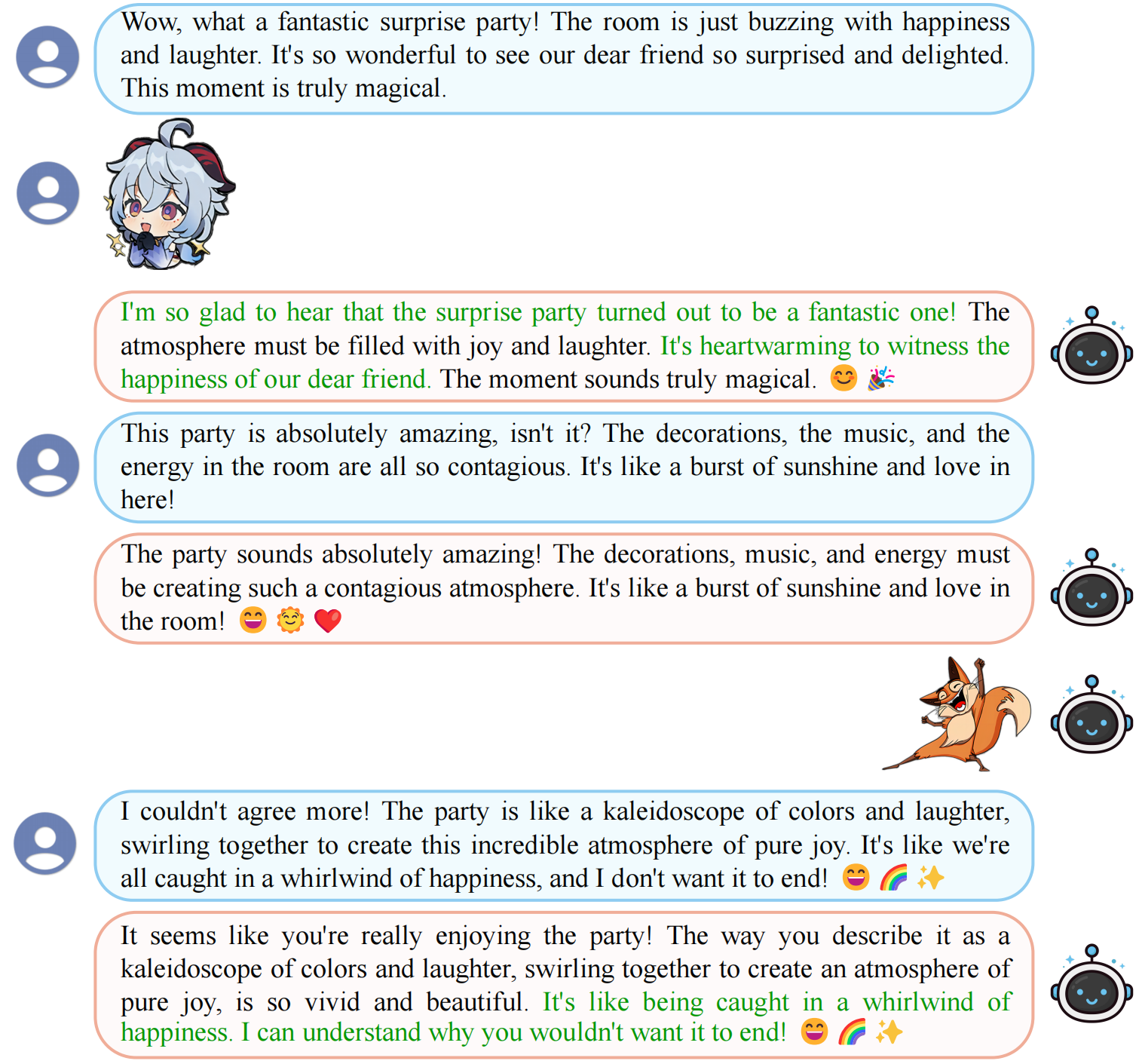

An example of multimodal conversation in our StickerConv dataset.

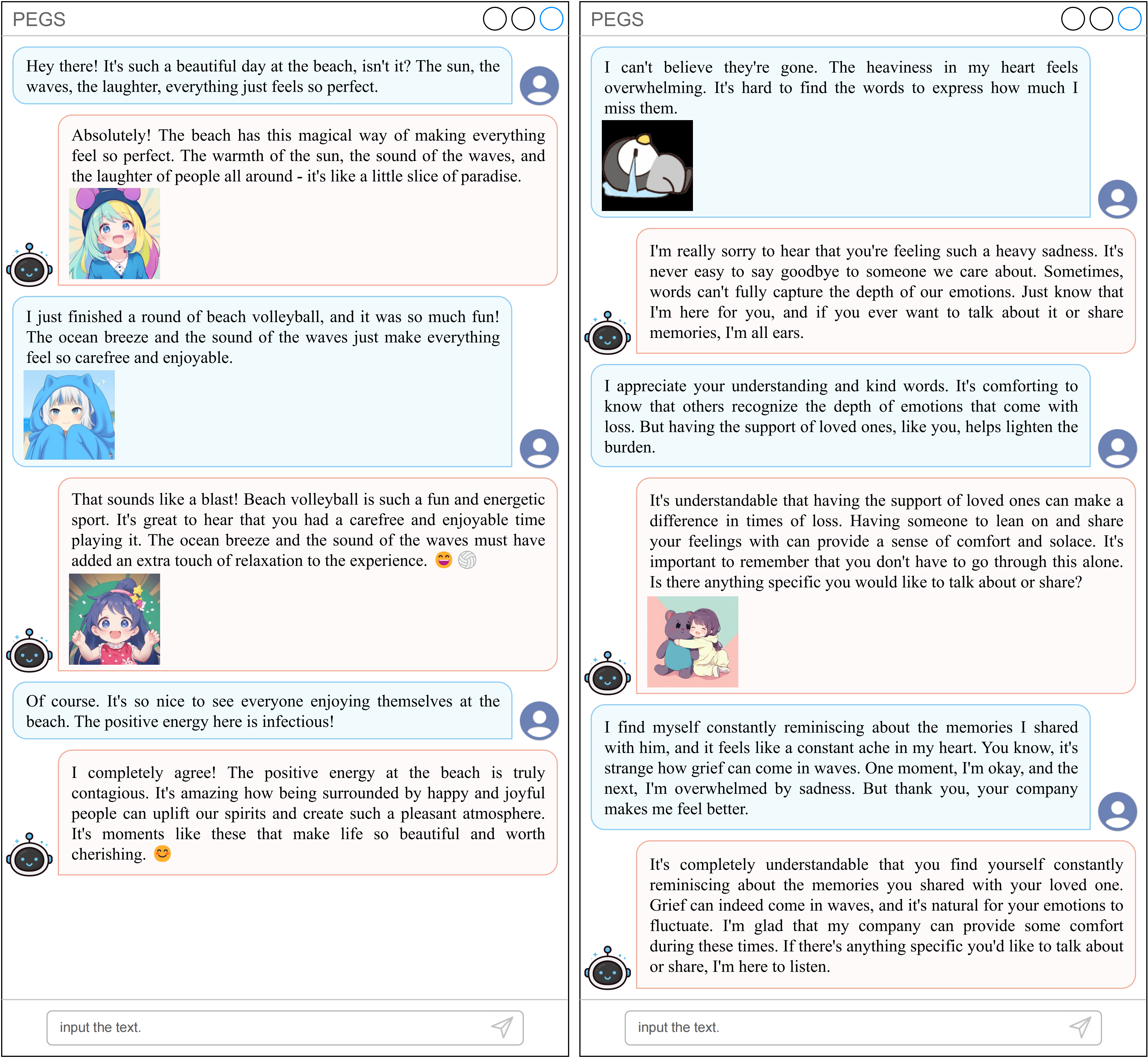

Example conversations between users and PEGS. Users can chat using multimodal content (text and stickers) and receive multimodal empathetic responses. Left: a conversation characterized by positive emotion (happiness). Right: a conversation characterized by negative emotion (sadness).

You can download the StickerConv dataset directly from the Hugging Face Hub. However, the sticker data is not included due to licensing restrictions. To obtain it, please contact the original SER30K authors via their GitHub repository.

We also provide a separate version of the data formatted specifically for use with large language models (LLMs). Please download the version that suits your needs: formatted dataset download link.

For assistance, contact: 2210737@stu.neu.edu.cn

SER30K: A Large-Scale Dataset for Sticker Emotion Recognition