- [2024/07/01] 🔥 We release the test cases in the assets/images directory.

- [2024/06/21] 🔥 We release the inpainting feature to enable outfit changing. Experimental Feature.

- [2024/06/13] 🔥 We release the Gradio_demo of IMAGDressing-v1.

- [2024/05/28] 🔥 We release the inference code of SD1.5 that is compatible with IP-Adapter and ControlNet.

- [2024/05/08] 🔥 We launch the project page of IMAGDressing-v1.

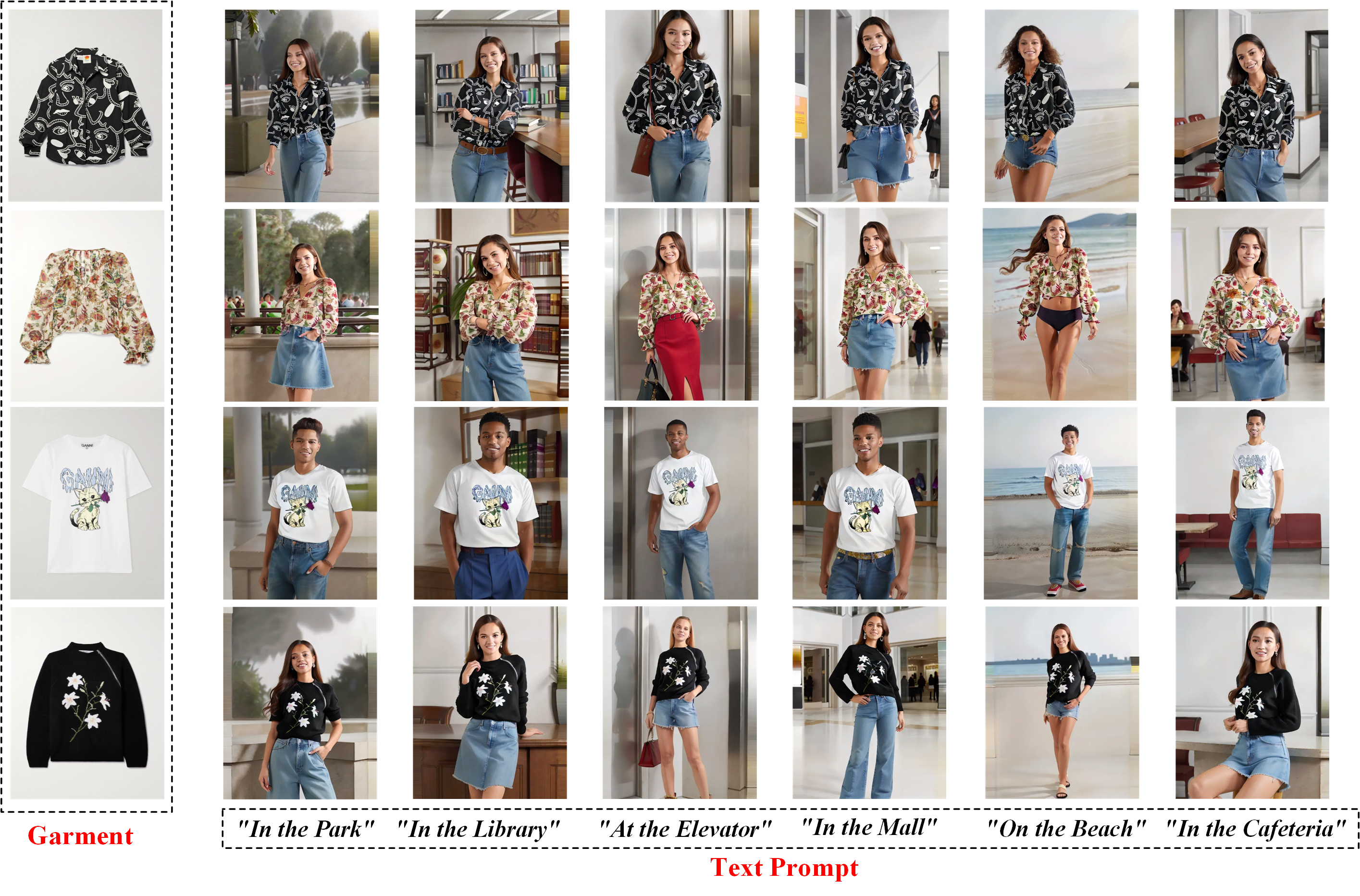

- Simple Architecture: IMAGDressing-v1 produces lifelike garments and enables easy user-driven scene editing.

- Flexible Plugin Compatibility: IMAGDressing-v1 integrates with extension plugins such as IP-Adapter, ControlNet, T2I-Adapter, and AnimateDiff.

- Rapid Customization: Customization takes seconds and requires no additional LoRA training.

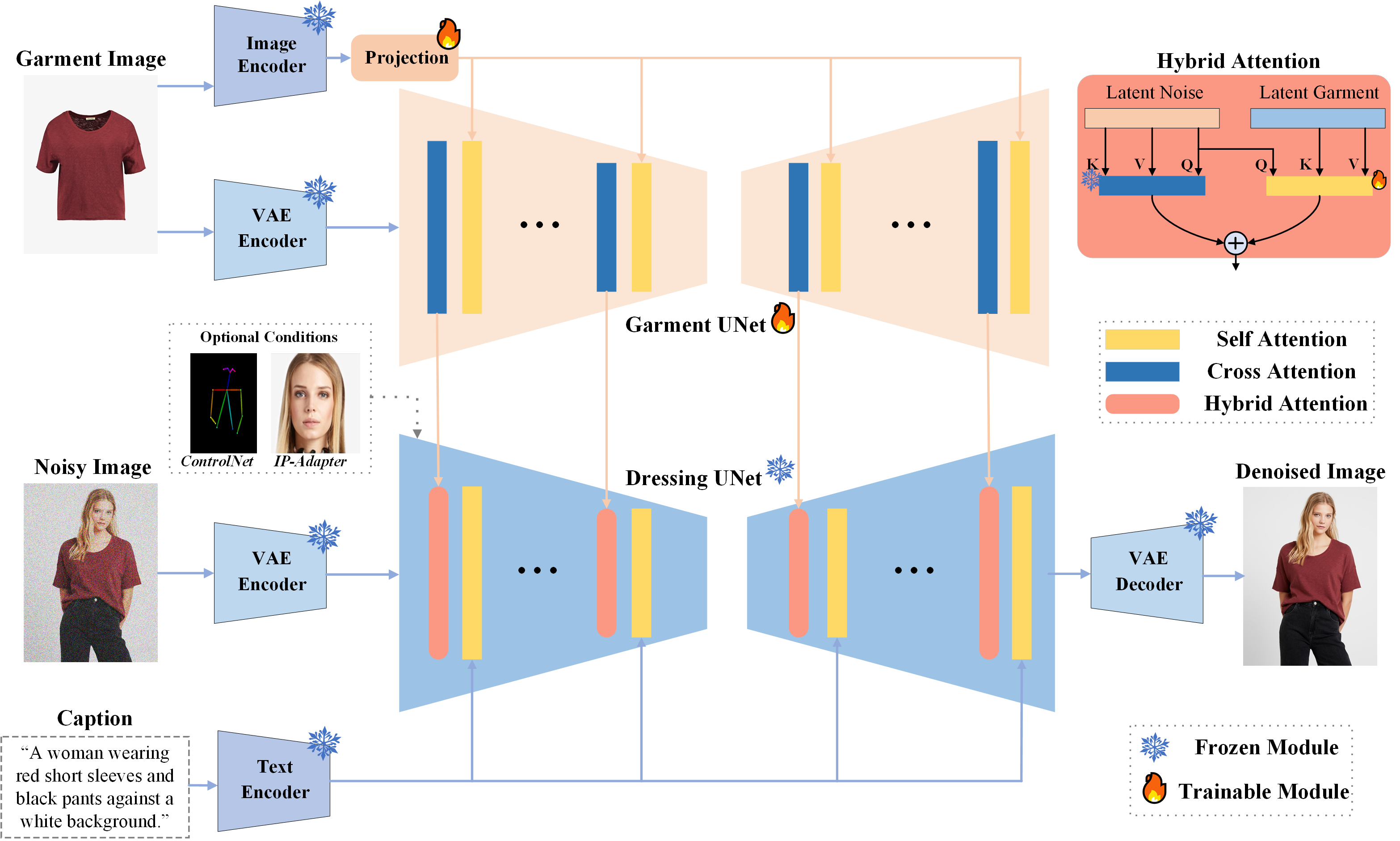

To address the need for flexible and controllable customizations in virtual try-on systems, we propose IMAGDressing-v1. Specifically, we introduce a garment UNet that captures semantic features from CLIP and texture features from VAE. Our hybrid attention module includes a frozen self-attention and a trainable cross-attention, integrating these features into a frozen denoising UNet to ensure user-controlled editing. We will release a comprehensive dataset, IGv1, with over 200,000 pairs of clothing and dressed images, and establish a standard data assembly pipeline. Furthermore, IMAGDressing-v1 can be combined with extensions like ControlNet, IP-Adapter, T2I-Adapter, and AnimateDiff to enhance diversity and controllability.
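To make the hybrid attention idea concrete, here is a minimal PyTorch sketch. It is not the released implementation: the class name `HybridAttentionSketch`, the tensor sizes, the `garment_scale` factor, and the choice to add the two attention outputs are all assumptions made for illustration. It only shows the pattern described above, in which a frozen self-attention branch is paired with a trainable cross-attention branch that reads garment features.

```python
import torch
from torch import nn


class HybridAttentionSketch(nn.Module):
    """Illustrative only: frozen self-attention plus trainable cross-attention."""

    def __init__(self, dim: int = 64, num_heads: int = 4, garment_scale: float = 1.0):
        super().__init__()
        # Stands in for the self-attention of the frozen denoising UNet.
        self.self_attn = nn.MultiheadAttention(dim, num_heads, batch_first=True)
        # Trainable branch that attends to garment features.
        self.cross_attn = nn.MultiheadAttention(dim, num_heads, batch_first=True)
        # Assumption: a scalar weight on the garment branch.
        self.garment_scale = garment_scale
        # Freeze the self-attention branch so only cross-attention is trained.
        for param in self.self_attn.parameters():
            param.requires_grad_(False)

    def forward(self, hidden: torch.Tensor, garment: torch.Tensor) -> torch.Tensor:
        # hidden:  (batch, tokens, dim) latent tokens from the denoising UNet
        # garment: (batch, garment_tokens, dim) features from the garment UNet
        self_out, _ = self.self_attn(hidden, hidden, hidden, need_weights=False)
        cross_out, _ = self.cross_attn(hidden, garment, garment, need_weights=False)
        # Assumption: the two branches are combined by a weighted sum.
        return self_out + self.garment_scale * cross_out


torch.manual_seed(0)
block = HybridAttentionSketch()
hidden = torch.randn(2, 16, 64)
garment = torch.randn(2, 24, 64)
out = block(hidden, garment)
trainable = sorted(name for name, p in block.named_parameters() if p.requires_grad)
```

In this sketch only the parameters under `cross_attn` remain trainable, and the output keeps the shape of `hidden`, so the block could replace a plain self-attention layer without changing the surrounding tensor shapes.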

- Python >= 3.8 (Anaconda or Miniconda recommended)

- PyTorch >= 2.0.0

- CUDA 11.8
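Once an environment exists, the version requirements listed above can be checked with a small script. This is a sketch rather than part of the repository: the helper names `parse_version`, `meets_minimum`, and `check_environment` are hypothetical, and the version parsing only reads leading numeric components (it is not a full PEP 440 parser).

```python
import re
import sys


def parse_version(text: str) -> tuple:
    """Turn '2.1.0+cu118' into (2, 1, 0); stops at the first non-numeric piece."""
    core = text.split("+", 1)[0]  # drop local build tags such as '+cu118'
    parts = []
    for piece in core.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(found: str, minimum: str) -> bool:
    """Return True when `found` is at least `minimum`."""
    return parse_version(found) >= parse_version(minimum)


def check_environment() -> dict:
    """Report whether Python and PyTorch satisfy the stated minimums."""
    python_version = "%d.%d.%d" % sys.version_info[:3]
    report = {"python": meets_minimum(python_version, "3.8")}
    try:
        import torch
    except ImportError:
        report["torch"] = False
        report["cuda"] = None
    else:
        report["torch"] = meets_minimum(torch.__version__, "2.0.0")
        # CUDA version PyTorch was built with; None for CPU-only builds.
        report["cuda"] = torch.version.cuda
    return report


if __name__ == "__main__":
    print(check_environment())
```

The `cuda` entry is reported as-is rather than compared, so you can confirm by eye that it matches the 11.8 build listed above.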

conda create --name IMAGDressing python=3.8.10

conda activate IMAGDressing

pip install -U pip

# Install requirements

pip install -r requirements.txt

You can download our models from Hugging Face or Baidu Cloud (百度云). The other component models are available from their original repositories:

- stabilityai/sd-vae-ft-mse

- SG161222/Realistic_Vision_V4.0_noVAE

- h94/IP-Adapter-FaceID

- lllyasviel/control_v11p_sd15_openpose
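If you prefer to fetch the component models ahead of time, the following sketch uses `snapshot_download` from the `huggingface_hub` package. Several things here are assumptions: that `huggingface_hub` is installed, that the repository IDs above resolve on the Hugging Face Hub, and that a local folder named `pretrained` is a suitable target (that folder name and the helper functions are hypothetical and not taken from this repository). The inference scripts may resolve these models on their own, in which case this step is unnecessary.

```python
from pathlib import Path

# Component repositories listed above.
COMPONENT_REPOS = [
    "stabilityai/sd-vae-ft-mse",
    "SG161222/Realistic_Vision_V4.0_noVAE",
    "h94/IP-Adapter-FaceID",
    "lllyasviel/control_v11p_sd15_openpose",
]


def local_dir_for(repo_id: str, root: str = "pretrained") -> Path:
    """Map 'owner/name' to '<root>/name' (hypothetical folder layout)."""
    return Path(root) / repo_id.split("/", 1)[1]


def download_components(root: str = "pretrained") -> list:
    """Download every component repository into its own folder under `root`."""
    # Imported lazily so the helpers above work without huggingface_hub.
    from huggingface_hub import snapshot_download

    paths = []
    for repo_id in COMPONENT_REPOS:
        target = local_dir_for(repo_id, root)
        snapshot_download(repo_id=repo_id, local_dir=str(target))
        paths.append(target)
    return paths
```

Calling `download_components()` downloads all four repositories, which can take a while and a fair amount of disk space.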

python inference_IMAGdressing.py --cloth_path [your cloth path]

python inference_IMAGdressing_controlnetpose.py --cloth_path [your cloth path] --pose_path [your posture path]

python inference_IMAGdressing_ipa_controlnetpose.py --cloth_path [your cloth path] --face_path [your face path] --pose_path [your posture path]

Please download the humanparsing and openpose model files from IDM-VTON-Huggingface to the ckpt folder first.

python inference_IMAGdressing_controlnetinpainting.py --cloth_path [your cloth path] --model_path [your model path]

Join us on this exciting journey to transform virtual try-on systems. Star ⭐️ our repository to stay updated with the latest advancements, and contribute to making IMAGDressing the leading solution for virtual clothing generation.
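For batch runs, the four inference commands documented earlier can be assembled programmatically. The sketch below picks the script from the inputs you supply and returns an argument list suitable for `subprocess.run`. The function `build_inference_command` is a hypothetical helper, not part of the repository; it uses only the script names and flags shown in this README, and the file names in the usage note are placeholders.

```python
from typing import List, Optional


def build_inference_command(
    cloth_path: str,
    pose_path: Optional[str] = None,
    face_path: Optional[str] = None,
    model_path: Optional[str] = None,
    python: str = "python",
) -> List[str]:
    """Choose the inference script that matches the supplied inputs."""
    if model_path is not None:
        # This README documents only --cloth_path and --model_path for inpainting.
        if pose_path is not None or face_path is not None:
            raise ValueError("inpainting is documented with cloth and model paths only")
        script = "inference_IMAGdressing_controlnetinpainting.py"
        extra = ["--model_path", model_path]
    elif face_path is not None:
        # The face-conditioned script is documented together with a pose image.
        if pose_path is None:
            raise ValueError("face_path is documented only together with pose_path")
        script = "inference_IMAGdressing_ipa_controlnetpose.py"
        extra = ["--face_path", face_path, "--pose_path", pose_path]
    elif pose_path is not None:
        script = "inference_IMAGdressing_controlnetpose.py"
        extra = ["--pose_path", pose_path]
    else:
        script = "inference_IMAGdressing.py"
        extra = []
    return [python, script, "--cloth_path", cloth_path] + extra
```

For example, `subprocess.run(build_inference_command("cloth.jpg", pose_path="pose.jpg"), check=True)` would launch the pose-conditioned script from the repository root.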

We would like to thank the contributors to the IDM-VTON, MagicClothing, IP-Adapter, ControlNet, T2I-Adapter, and AnimateDiff repositories for their open research and exploration.

The IMAGDressing code is available for both academic and commercial use. However, the models available for manual and automatic download from IMAGDressing are intended solely for non-commercial research purposes. Similarly, our released checkpoints are restricted to research use only. Users are free to create images using this tool, but they must adhere to local laws and use it responsibly. The developers disclaim any liability for potential misuse by users.

If you find IMAGDressing-v1 useful for your research and applications, please cite using this BibTeX:

@article{shen2024IMAGDressing-v1,

title={IMAGDressing-v1: Customizable Virtual Dressing},

author={Shen, Fei and Jiang, Xin and He, Xin and Ye, Hu and Wang, Cong and Du, Xiaoyu and Tang, Jinghui},

journal={Coming Soon},

year={2024}

}

- Gradio demo

- Inference code

- Model weights (512-sized version)

- Inpainting support

- Model weights (higher-resolution version)

- Paper

- Training code

- Video Dressing

- Others, such as user-requested features

If you have any questions, please feel free to contact me at shenfei140721@126.com.