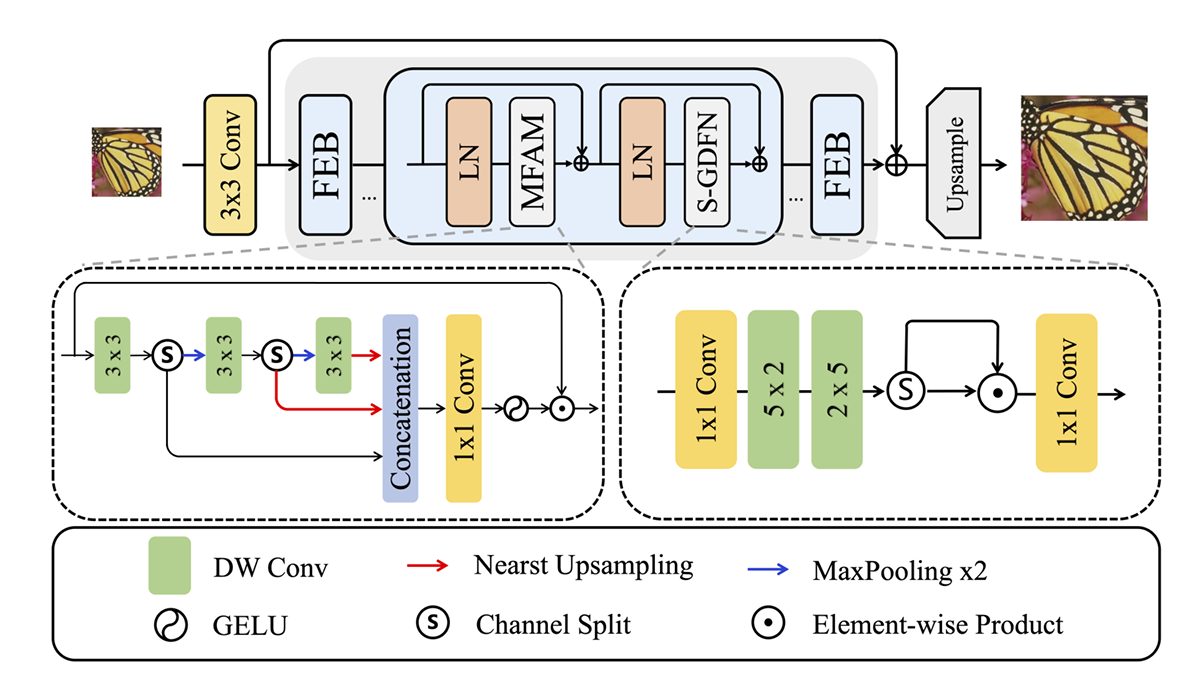

An overview of the proposed MFA model

- Run the [run.sh](./run.sh) script:

  ```bash
  CUDA_VISIBLE_DEVICES=0 python test_demo.py --data_dir [path to your data dir] --save_dir [path to your save dir] --model_id 16
  ```

- Be sure to change the directories `--data_dir` and `--save_dir`.
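You may prefer to launch the evaluation from Python, for example to loop over several data directories. In that case, the command in `run.sh` can be reproduced roughly as follows. This is a minimal sketch, not part of the repository. `build_test_command` and `run_test` are hypothetical helper names, and the sketch assumes `test_demo.py` is in the current working directory. It uses only the flags shown in the command above.

```python
import os
import subprocess
import sys
from typing import Dict, List, Tuple


def build_test_command(data_dir: str, save_dir: str, gpu: str = "0",
                       model_id: int = 16) -> Tuple[List[str], Dict[str, str]]:
    """Hypothetical helper: mirror the run.sh command as (argv, env)."""
    argv = [
        sys.executable, "test_demo.py",  # same interpreter as the caller
        "--data_dir", data_dir,
        "--save_dir", save_dir,
        "--model_id", str(model_id),
    ]
    # Copy the current environment and expose only the chosen GPU,
    # which is what the CUDA_VISIBLE_DEVICES=0 prefix does in run.sh.
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = gpu
    return argv, env


def run_test(data_dir: str, save_dir: str, gpu: str = "0",
             model_id: int = 16) -> None:
    """Hypothetical helper: run test_demo.py, raising if it exits non-zero."""
    argv, env = build_test_command(data_dir, save_dir, gpu, model_id)
    subprocess.run(argv, env=env, check=True)
```

For example, `run_test("path/to/your/data_dir", "path/to/your/save_dir")` would run the same evaluation as `run.sh`. The sketch uses `sys.executable` rather than a bare `python` so that the child process uses the same interpreter and virtual environment as the caller. With `check=True`, a failing run raises `subprocess.CalledProcessError` instead of being silently ignored.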

To compute the number of parameters, FLOPs, and activations of the model, run:

from utils.model_summary import get_model_flops, get_model_activation

from models.team16_MFA import MFA

model = MFA(dim=33, n_blocks=8, ffn_scale=1.8, upscaling_factor=4)

input_dim = (3, 256, 256) # set the input dimension

activations, num_conv = get_model_activation(model, input_dim)

activations = activations / 10 ** 6

print("{:>16s} : {:<.4f} [M]".format("#Activations", activations))

print("{:>16s} : {:<d}".format("#Conv2d", num_conv))

flops = get_model_flops(model, input_dim, False)

flops = flops / 10 ** 9

print("{:>16s} : {:<.4f} [G]".format("FLOPs", flops))

num_parameters = sum(map(lambda x: x.numel(), model.parameters()))

num_parameters = num_parameters / 10 ** 6

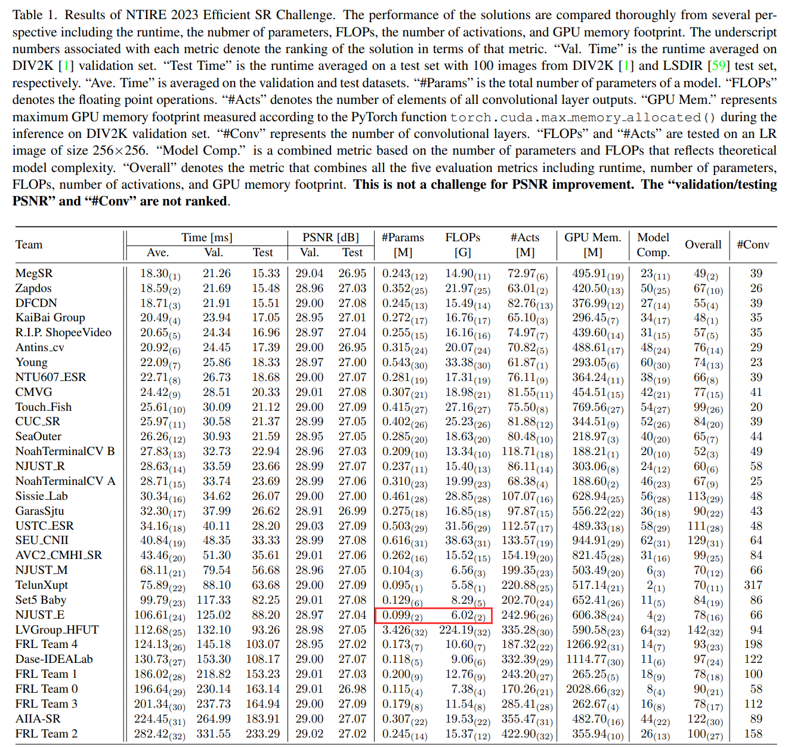

print("{:>16s} : {:<.4f} [M]".format("#Params", num_parameters))Our team (NJUST_E) placed 2nd in the Parameters and FLOPs sub-track of the NTIRE 2023 ESR Challenge.
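For plain convolutions, you can check the three numbers printed by the `get_model_activation` / `get_model_flops` snippet by hand. The code below is a rough, pure-Python illustration and not the repository's `utils.model_summary`. It rests on two assumptions. First, it counts multiply-accumulates (MACs) per convolution, which is the convention many super-resolution FLOP counters follow; whether `get_model_flops` counts exactly this way is an assumption. Second, it treats "activations" as the number of elements in every Conv2d output. `Conv2dSpec` and `summarize` are hypothetical names, and the two-layer network is a toy example, not MFA.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Conv2dSpec:
    """Hypothetical description of one square-kernel Conv2d layer."""
    in_ch: int
    out_ch: int
    kernel: int
    stride: int = 1
    padding: int = 0
    groups: int = 1
    bias: bool = True


def summarize(layers: Iterable[Conv2dSpec],
              input_dim: Tuple[int, int, int]) -> Tuple[int, int, int]:
    """Return (params, macs, activations) for a plain chain of convolutions."""
    c, h, w = input_dim
    params = macs = activations = 0
    for spec in layers:
        if spec.in_ch != c:
            raise ValueError(f"expected {c} input channels, got {spec.in_ch}")
        # Standard convolution output-size formula (dilation = 1).
        h = (h + 2 * spec.padding - spec.kernel) // spec.stride + 1
        w = (w + 2 * spec.padding - spec.kernel) // spec.stride + 1
        # Each output element needs (in_ch / groups) * k * k multiply-accumulates.
        per_output = (spec.in_ch // spec.groups) * spec.kernel ** 2
        params += spec.out_ch * per_output + (spec.out_ch if spec.bias else 0)
        macs += spec.out_ch * h * w * per_output
        activations += spec.out_ch * h * w  # elements in this conv's output
        c = spec.out_ch
    return params, macs, activations


# Toy two-layer network (not MFA) on the same (3, 256, 256) input as above.
toy = [Conv2dSpec(3, 33, 3, padding=1), Conv2dSpec(33, 48, 3, padding=1)]
params, macs, acts = summarize(toy, (3, 256, 256))
print("{:>16s} : {:<.4f} [M]".format("#Activations", acts / 10 ** 6))
print("{:>16s} : {:<.4f} [G]".format("FLOPs", macs / 10 ** 9))
print("{:>16s} : {:<.4f} [M]".format("#Params", params / 10 ** 6))
```

The sketch ignores every non-convolution operation, so it will not reproduce the numbers reported for MFA. It only shows how each reported quantity scales with channels, kernel size, and input resolution. For example, doubling `H` and `W` of `input_dim` roughly quadruples FLOPs and activations but leaves the parameter count unchanged.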