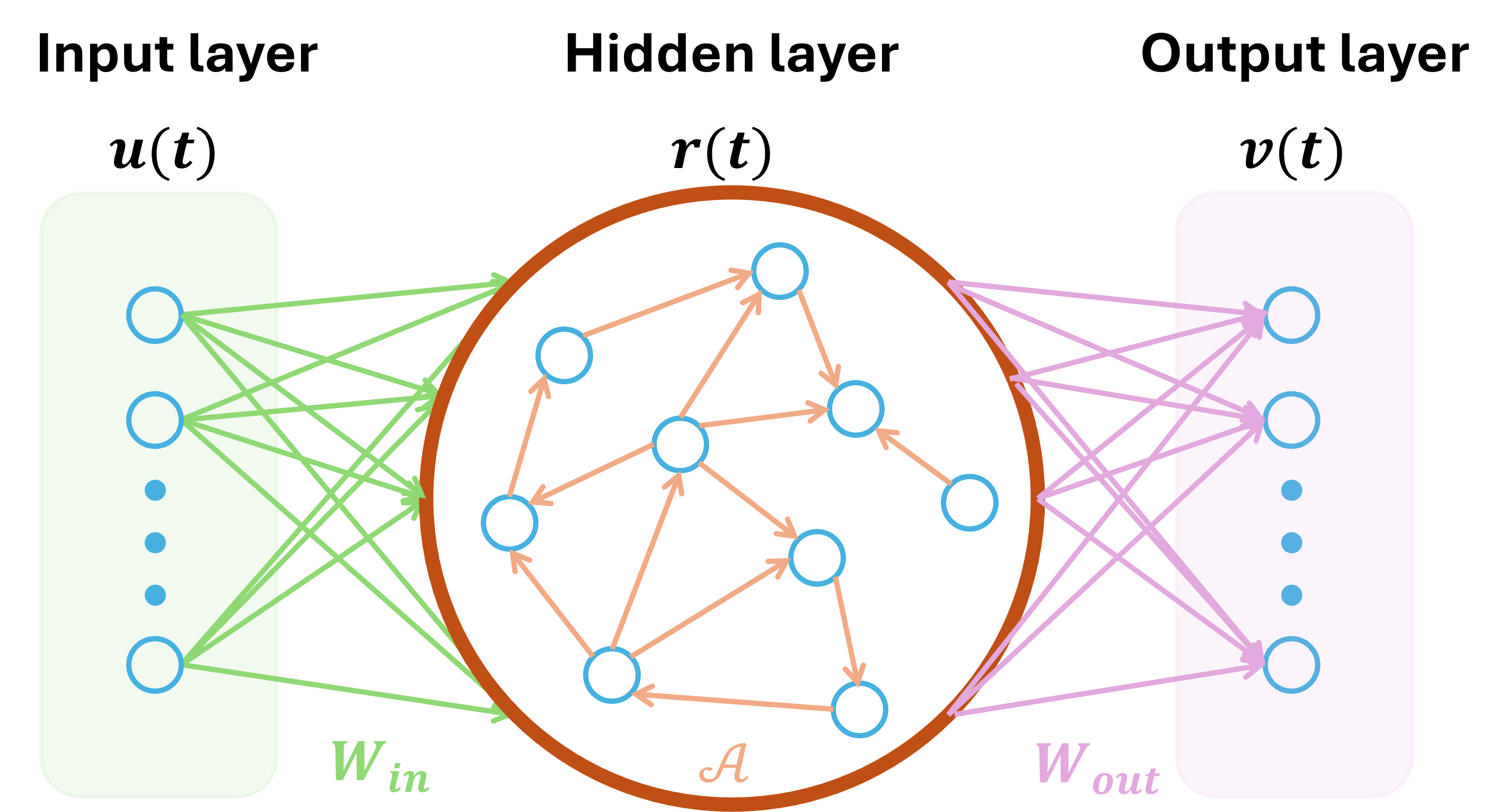

Reservoir computing (RC) is a type of recurrent neural networks (RNNs). A reservoir computer is composed of three layers: an input layer, a hidden recurrent layer, and an output layer. The distinguishing feature of RC is that only the readout weights (

Now suppose we have the training data: input

We calculate, update, and concatenate the vector

where

Once we finish the training as previously described, we can continue to get the input in the testing phase and update the reservoir state

There are various hyperparameters to be optimized (I will introduce the hyperparameters optimization later), for example, the spectral radius

Reservoir computing is a powerful tool that can be used to solve a wide variety of problems, such as time series prediction, trajectories tracking control, inverse modeling, speech and signal processing, and more. For a deeper insights, you are invited to explore my homepage. Additionally, the field is witnessing growing interest in building reservoir computing systems through real-world experiments (physical reservoirs) and in understanding its closer alignment with neural science, enhancing our knowledge of both brain functioning and machine learning.

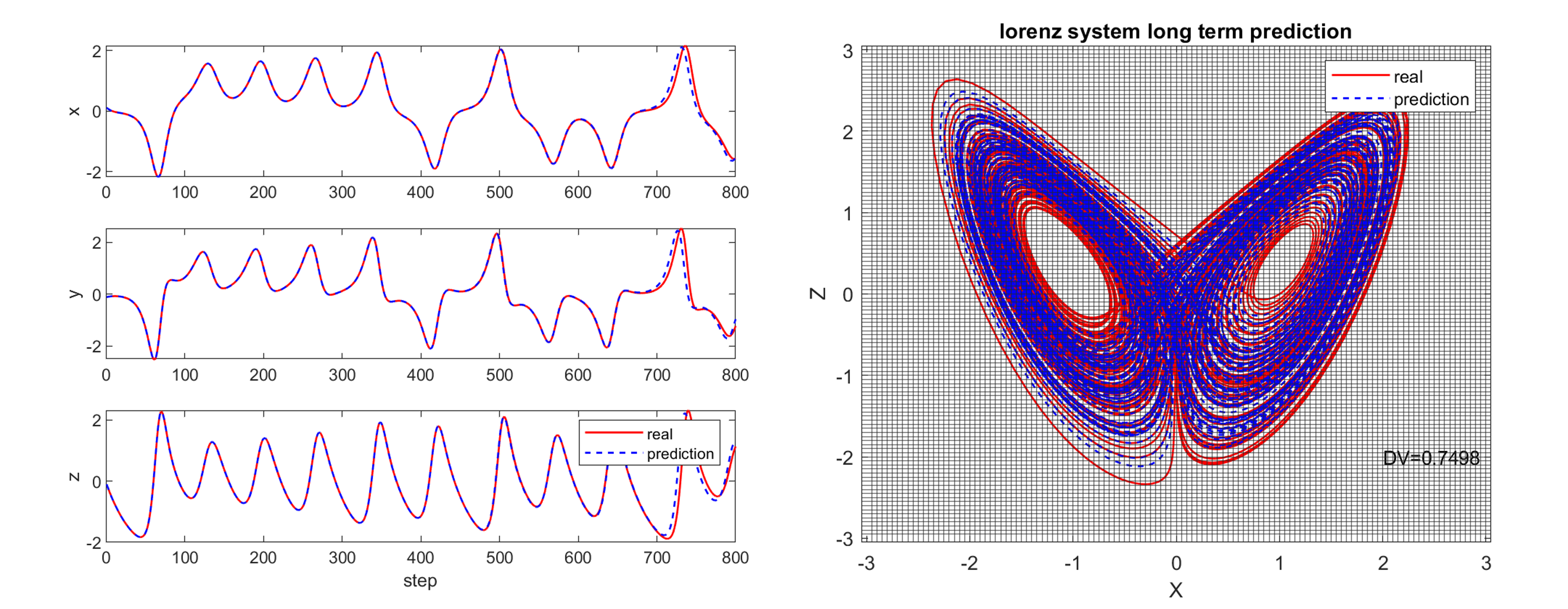

We take two examples: the Lorenz and Mackey-Glass systems to show the short- and long-term prediction performance of reservoir computing.

The classic Lorenz chaotic system is a simplified model for atmospheric convection, which can be discribed by the three dimensional nonlinear differential equations:

where 'reservoir.m' (or 'reservoir.py') to train the reservoir computer and make predictions, we can obtain the following results:

As can be seen in the figure above, the reservoir computer gave us satisfactory performance on both short-term and long-term prediction. Note that the Lorenz system prediction is a relatively simple task so we choose a small network, and choose hyperparameters randomly.

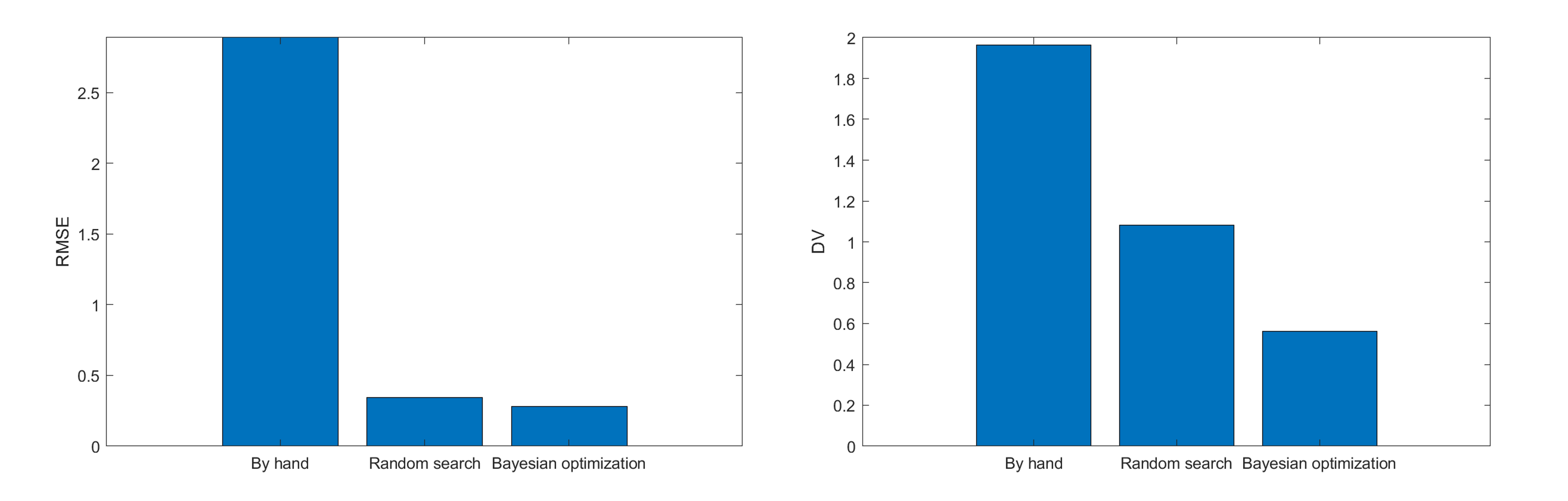

We use RMSE (root-mean-square error) and DV (deviation value) to evaluate the performance of short- and long-term prediction, respectively. We define the DV calculation (see our work at the end) as follows: we place a uniform grid in a 2-d subspace with cell size

where

The Mackey-Glass system is an infinite dimensional system described by a delayed differential equation:

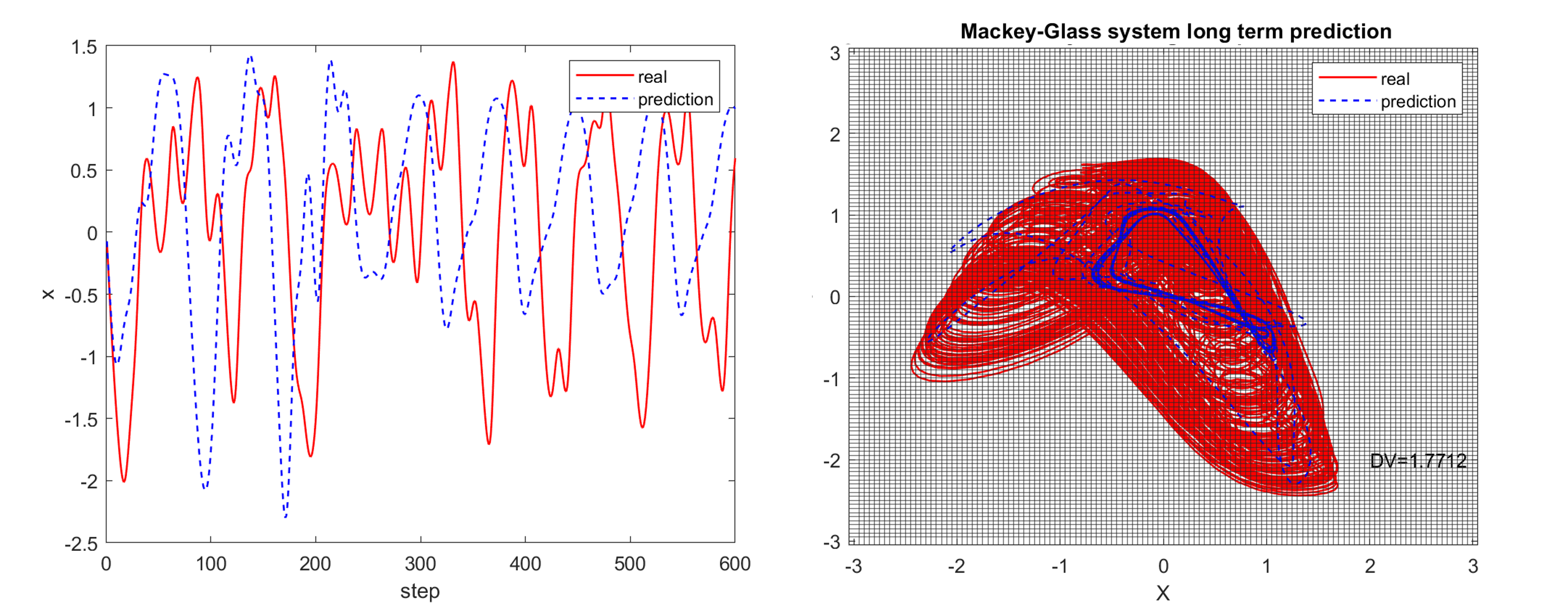

where 'reservoir_mg.m' (or 'reservoir_mg.py') to train the reservoir computer and make predictions for the Mackey-Glass system:

Seems the reservoir is not capable of predicting complex tasks like Mackey-Glass system ... But, please wait!

As I mentioned before, we chose the hyperparameters by hand. However, reservoir computing is extremenly sensitive to its hyperparameters. In other words, for simple tasks like the prediction of Lorenz system, the role of hyperparameters may not be obvious, but once the tasks are more complex, the hyperparameters are crucial to the performance. Next I will introduce hyperparameters optimization and show the performance of our reservoir computing.

Hyperparameters optimization, also known as hyperparameter tuning, is a vital step in machine learning. Unlike model parameters, which are learned during training, hyperparameters are external configurations and are set prior to the training process and can significantly impact the performance of the model.

We typically separate our dataset into training, validation, and testing sets. We prepare various candidates of hyperparameters sets, train the reservoir computer and collect the performance on the validation dataset with each hyperparameters set. Then, we select a set of hyperparameters that yield the best performance on the validation dataset. We anticipate that these optimized hyperparameters will also perform well on the unseen testing set.

There are a numer of optimization strategies, such as grid search, random search, Bayesian optimization, evolutionary algorithms, and so on. Here we introduce two of them: Random search and Bayesian optimization. (I will not go into the details of the algorithms, but will focus on the effects of using these algorithms.)

Random search is a method where values are selected randomly within the search space. Suppose we need to optimize six hyperparameters, each with numerous possible values. Testing every combination (known as grid search) would result in high computation time. Random search selects a combination of random values for each hyperparameter from its respective pool evey time step and test the performance, offering a more efficient alternative to grid search.

Execute 'opt_random_search_mg.m' to perform a random search for optimizing hyperparameters in predicting Mackey-Glass system. We record the hyperparameter set that yields the lowest RMSE on the validation dataset. Using these optimal hyperparameters, the reservoir computer produced the following results:

As depicted in the figure, the reservoir computer successfully learned the short-term behavior of the Mackey-Glass system but failed to accurately reconstruct its long-term attractor. In theory, iterating for a sufficient long time could yield a set of optimal hyperparameters. However, here I suggest employing a more efficient algorithm: Bayesian optimization.

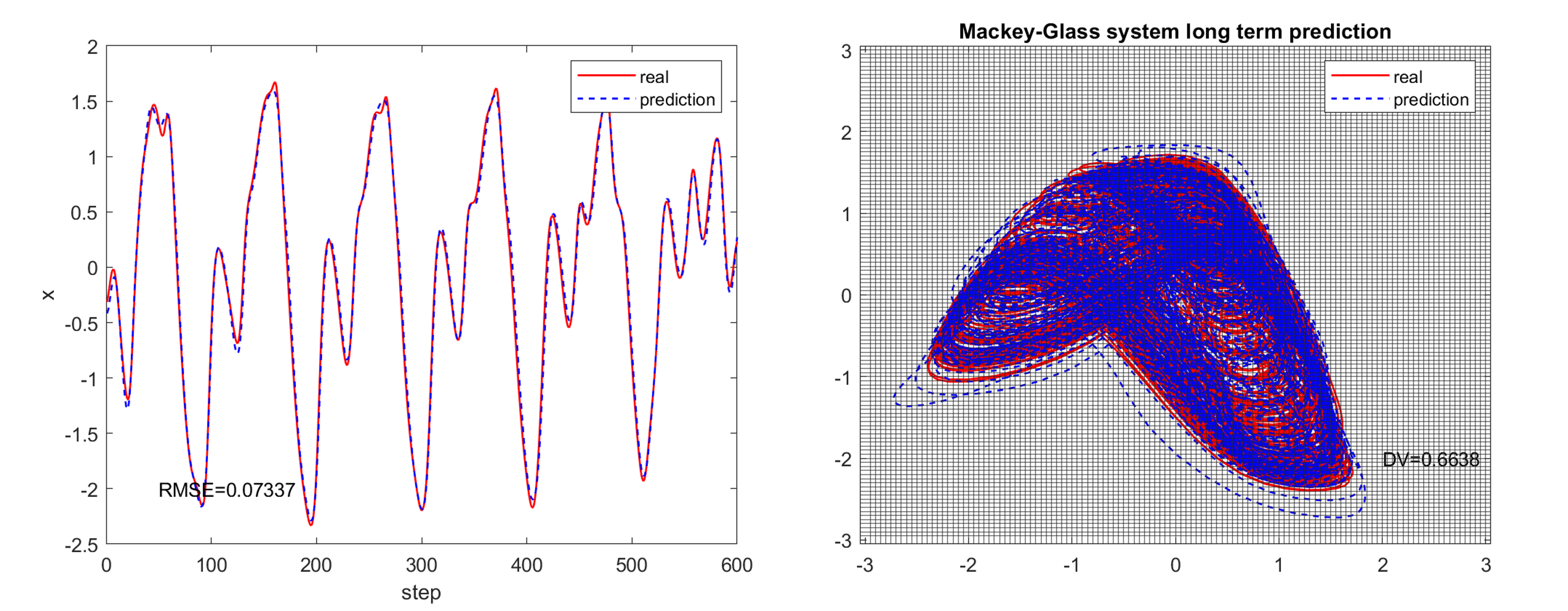

Bayesian optimization is an efficient method for optimizing complex, costly-to-evaluate functions. It uses a probabilistic model to guide the search for optimal parameters, balancing exploration and exploitation. For Python, I use Bayesian optimization (see package bayesian-optimization) to determine the optimal hyperparameters. Meanwhile, for MATLAB codes, I employ a similar yet distinct method: Surrogate optimization (see package surrogateopt), which also yields optimal hyperparameters. Run 'opt_mg.m' or 'opt_mg.py' to optimize hyperparameters in predictring Mackey-Glass system. Using the optimaized hyperparmeters, the reservoir computer produced the following results:

As depicted in the figure, the reservoir computer perfectly predicted the short-term behavior of the Mackey-Glass system with

In order to understand more intuitively these hyperparameters and their impace, I show the hyperparameters and the corresponding machine learning performance below:

| Hyperparameters | By hand | Random Search | Bayesian Optimization |

|---|---|---|---|

| 0.5 | 2.26 | 0.97 | |

| 0.5 | 0.35 | 1.05 | |

| 0.5 | 0.44 | 0.11 | |

| 0.5 | 0.20 | 0.93 | |

Run 'comparison.m' to compare the average values of RMSE (short-term prediction performance) and DV (long-term prediction performance) of 16 iterations for different hyperparameters sets:

In conclusion, reservoir computing is a powerful tool that can be applied to a variety of complex tasks. For simpler tasks, such as predicting the Lorenz system, a small reservoir network with randomly selected hyperparameters suffices. However, as tasks become more complex, optimizing hyperparameters becomes crucial for effective reservoir computing. Various optimization algorithms can be employed for this purpose, including random search and Bayesian optimization.

- The results were generated using MATLAB. A Python version is aslo available which includes only the reservoir computing prediction and Bayesian optimization.

- Some applications:

- You can find the parameter aware reservoir computing for collapse prediction of the food-chain, voltage, and spatial-temporal Kuramoto-Sivashinsky systems on my friend's Github page.

- You can find the nonlinear tracking control achieved by reservoir comptuing with various chaotic and periodic trajectories examples on my Github page .

- You can find the parameters extraction/tracking by reservoir computing on my Github page.

- Some tips:

- Increasing the size of the reservoir network can significantly enhance performance. For efficient hyperparameter optimization, you might start with a relatively small network (to save time), and then use a larger network combined with the optimized hyperparameters.

- During hyperparameter optimization, consider setting a shorter prediction length initially and using a broader range of hyperparameters. After the initial optimization, in a second round, you can opt for a longer prediction length and narrow down the hyperparameters, focusing around the values obtained in the first round.

- The reservoir state

$r$ should be continuously maintained during both training and testing, and the final reservior state from training is the starting point for testing, to ensure seamless prediction. - Uderstanding the meanings of hyperparameters is beneficial. For instance, the value of

$\rho$ determines the memory ability of the reservoir, which explains predictions for complex systems like the Mackey-Glass system typically require a large$\rho$ . In addition, introducing noise to the data for relatively complex tasks can significantly enhance the performance, which can be understood as stochastic resonance (see our work below).

If you have any questions or any suggestions, please feel free to contact me.

@article{PhysRevResearch.5.033127,

title = {Emergence of a resonance in machine learning},

author = {Zhai, Zheng-Meng and Kong, Ling-Wei and Lai, Ying-Cheng},

journal = {Phys. Rev. Res.},

volume = {5},

issue = {3},

pages = {033127},

numpages = {12},

year = {2023},

month = {Aug},

publisher = {American Physical Society},

doi = {10.1103/PhysRevResearch.5.033127},

url = {https://link.aps.org/doi/10.1103/PhysRevResearch.5.033127}

}