Introduction

KAmalEngine (KAE) aims to provide a lightweight algorithm package for Knowledge Amalgamation and Model Transferability Estimation.

Features:

- Algorithms for knowledge amalgamation and distillation

- Deep model transferability estimation based on attribution maps

- Predefined callbacks & metrics for evaluation and visualization

- Easy-to-use tools for multi-task training, e.g. synchronized transformation
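
To illustrate what "synchronized transformation" means in multi-task training, here is a minimal sketch in plain Python. It is not KAE's API: the function names (`hflip`, `vflip`, `sync_random_flip`) and the list-of-rows data layout are assumptions made for this example. The idea is that the random decisions are drawn once and then applied to the image and to every label map, so that pixels and labels stay aligned.

```python
import random


def hflip(grid):
    """Flip a 2D grid (a list of rows) left to right."""
    return [row[::-1] for row in grid]


def vflip(grid):
    """Flip a 2D grid (a list of rows) top to bottom."""
    return grid[::-1]


def sync_random_flip(inputs, p=0.5, rng=random):
    """Apply the same random flips to every grid in `inputs`.

    Hypothetical helper for illustration only: the flip decisions are
    sampled once, before the loop, so all inputs share them.
    """
    do_h = rng.random() < p
    do_v = rng.random() < p
    outputs = []
    for grid in inputs:
        if do_h:
            grid = hflip(grid)
        if do_v:
            grid = vflip(grid)
        outputs.append(grid)
    return outputs


# Toy 2x3 "image" and its segmentation mask (made-up values).
image = [[1, 2, 3], [4, 5, 6]]
seg_mask = [[0, 0, 1], [1, 1, 0]]

aug_image, aug_mask = sync_random_flip([image, seg_mask], rng=random.Random(0))
print(aug_image)
print(aug_mask)
```

Sampling the decisions inside the loop instead would flip the image and the mask independently, which silently corrupts dense labels such as segmentation or depth maps.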

Quick Start

Please follow the instructions in QuickStart.md for the basic usage of KAE. More examples are available in the examples directory, covering knowledge amalgamation, model slimming and transferability estimation.

Algorithms

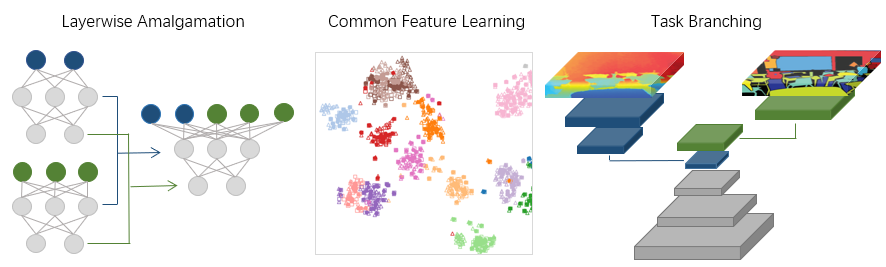

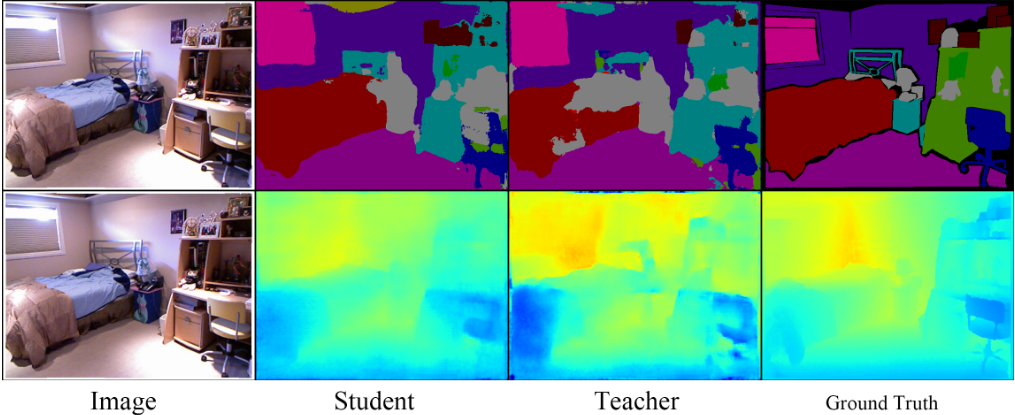

1. Task Branching

Student Becoming the Master: Knowledge Amalgamation for Joint Scene Parsing, Depth Estimation, and More (CVPR 2019)

@inproceedings{ye2019student,

title={Student Becoming the Master: Knowledge Amalgamation for Joint Scene Parsing, Depth Estimation, and More},

author={Ye, Jingwen and Ji, Yixin and Wang, Xinchao and Ou, Kairi and Tao, Dapeng and Song, Mingli},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

pages={2829--2838},

year={2019}

}

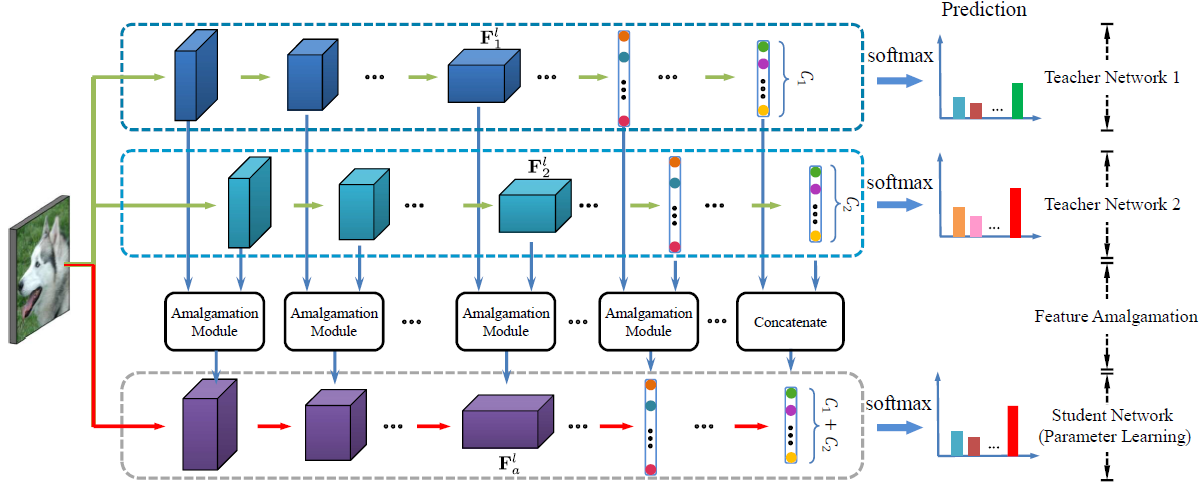

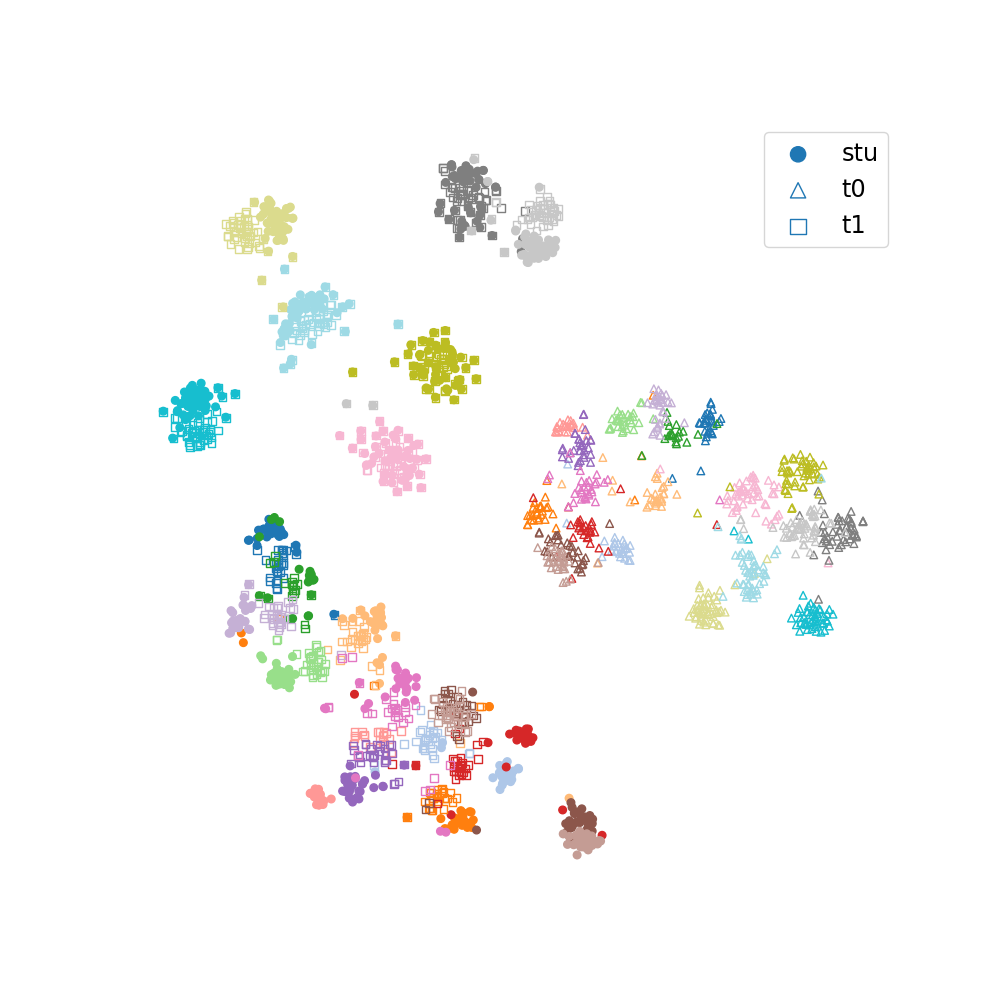

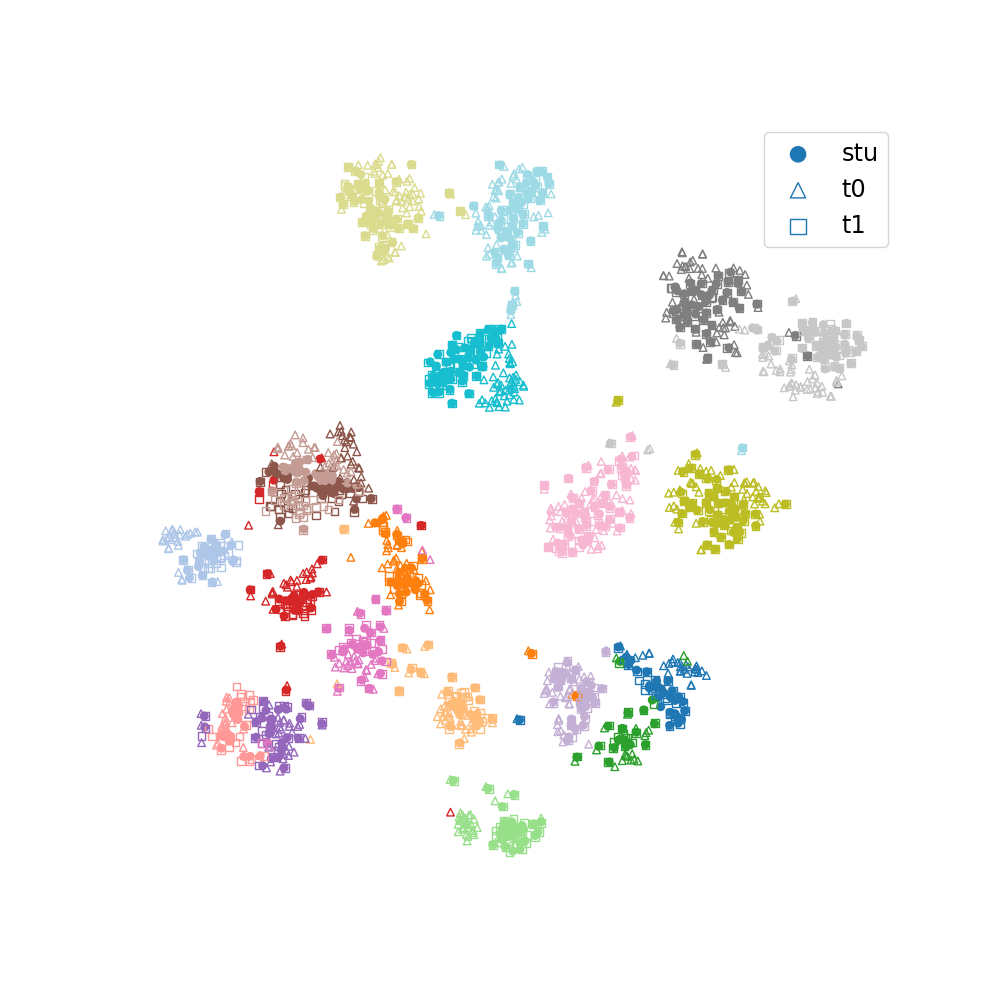

2. Common Feature Learning

Knowledge Amalgamation from Heterogeneous Networks by Common Feature Learning (IJCAI 2019)

Figures: Feature Space, Common Space

@inproceedings{luo2019knowledge,

title={Knowledge Amalgamation from Heterogeneous Networks by Common Feature Learning},

author={Luo, Sihui and Wang, Xinchao and Fang, Gongfan and Hu, Yao and Tao, Dapeng and Song, Mingli},

booktitle={Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI)},

year={2019}

}

3. Layerwise Amalgamation

Amalgamating Knowledge towards Comprehensive Classification (AAAI 2019)

@inproceedings{shen2019amalgamating,

author={Shen, Chengchao and Wang, Xinchao and Song, Jie and Sun, Li and Song, Mingli},

title={Amalgamating Knowledge towards Comprehensive Classification},

booktitle={AAAI Conference on Artificial Intelligence (AAAI)},

pages={3068--3075},

year={2019}

}

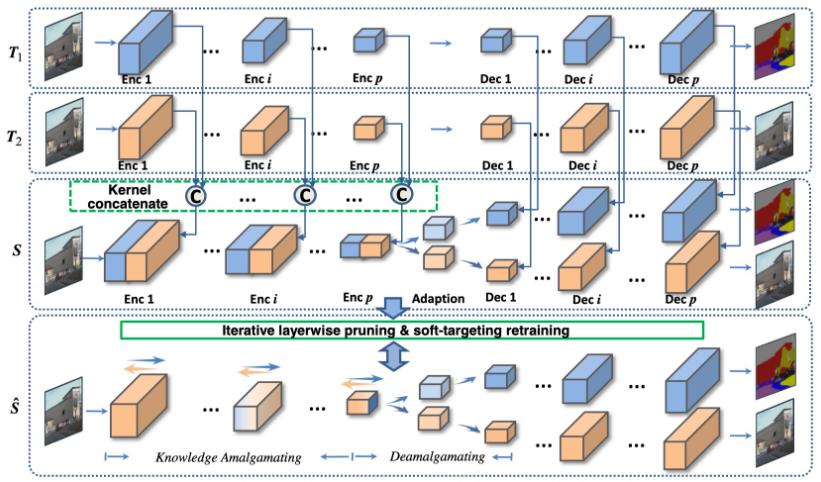

4. Recombination

Build a new multi-task model by combining and pruning weight matrices from teachers trained on distinct tasks.

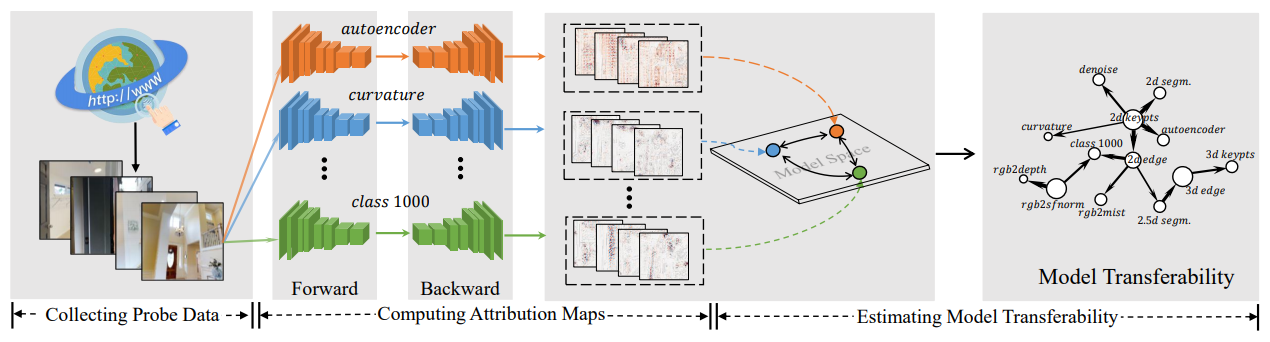
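
The combine-and-prune idea can be sketched as follows. This is a simplified illustration under stated assumptions, not KAE's recombination algorithm: it assumes the teachers' layers share the same input dimension, treats each matrix row as one output unit, and uses L1-magnitude pruning as a stand-in criterion. The names `l1_norm` and `combine_and_prune` are hypothetical.

```python
def l1_norm(row):
    """Sum of absolute weights of one output unit."""
    return sum(abs(w) for w in row)


def combine_and_prune(teacher_weights, keep):
    """Stack the rows of all teacher matrices, then keep the `keep`
    rows with the largest L1 norm (original row order is preserved).

    Assumption for this sketch: magnitude is the pruning criterion;
    the actual criterion used by a given method may differ.
    """
    stacked = [row for weights in teacher_weights for row in weights]
    ranked = sorted(range(len(stacked)),
                    key=lambda i: l1_norm(stacked[i]),
                    reverse=True)
    kept = sorted(ranked[:keep])
    return [stacked[i] for i in kept], kept


# Toy layers from two teachers, each with 2 output units and 2 inputs.
teacher_a = [[0.9, -0.8], [0.01, 0.02]]
teacher_b = [[0.5, 0.5], [0.0, -0.03]]

student_layer, kept_rows = combine_and_prune([teacher_a, teacher_b], keep=2)
print(kept_rows)      # indices into the stacked matrix
print(student_layer)  # the surviving rows
```

In this toy case the student layer keeps one strong unit from each teacher and drops the two near-zero units.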

5. Deep Model Transferability from Attribution Maps

@inproceedings{song2019deep,

title={Deep model transferability from attribution maps},

author={Song, Jie and Chen, Yixin and Wang, Xinchao and Shen, Chengchao and Song, Mingli},

booktitle={Advances in Neural Information Processing Systems},

pages={6182--6192},

year={2019}

}

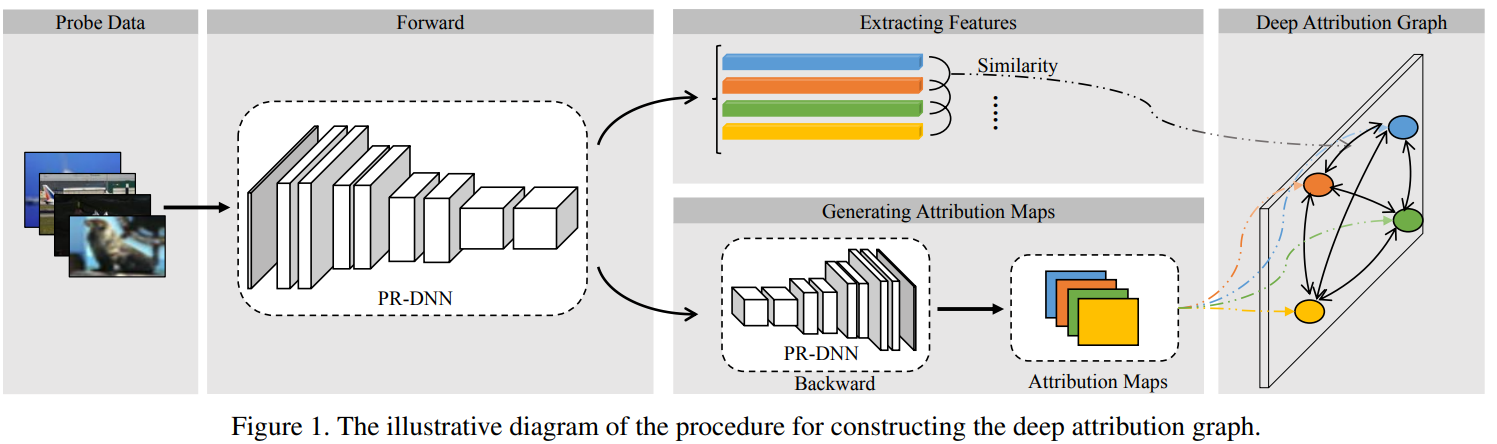

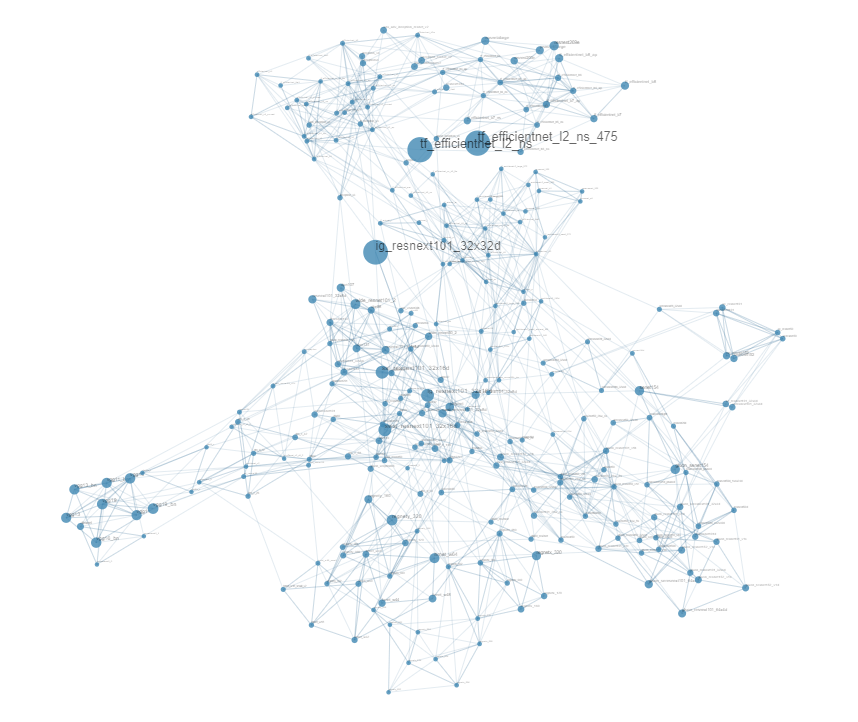

6. DEPARA: Deep Attribution Graph for Deep Knowledge Transferability

@inproceedings{song2020depara,

title={DEPARA: Deep Attribution Graph for Deep Knowledge Transferability},

author={Song, Jie and Chen, Yixin and Ye, Jingwen and Wang, Xinchao and Shen, Chengchao and Mao, Feng and Song, Mingli},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={3922--3930},

year={2020}

}

Transferability Graph

This is an example of deep model transferability estimated on 300 classification models. See examples/transferability for more details.
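
As a rough sketch of how such a graph could be assembled, the snippet below compares models by the attribution maps they produce on a shared set of probe inputs and stores a pairwise distance for every ordered pair. It is an illustration under assumptions, not the code in examples/transferability: the mean cosine distance, the toy attribution values, and the names `cosine_distance`, `model_distance`, `transferability_graph` and `closest_model` are all chosen for this example.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity of two flattened attribution maps."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 1.0  # convention for this sketch: empty map is maximally distant
    return 1.0 - dot / (norm_u * norm_v)


def model_distance(maps_a, maps_b):
    """Average cosine distance over the shared probe inputs."""
    pairs = list(zip(maps_a, maps_b))
    return sum(cosine_distance(a, b) for a, b in pairs) / len(pairs)


def transferability_graph(attributions):
    """Map every ordered model pair (source, target) to a distance."""
    names = sorted(attributions)
    return {(s, t): model_distance(attributions[s], attributions[t])
            for s in names for t in names if s != t}


def closest_model(graph, target):
    """Return the source model with the smallest distance to `target`."""
    candidates = [(dist, s) for (s, t), dist in graph.items() if t == target]
    return min(candidates)[1]


# Made-up attribution maps: 3 models, 2 probe inputs, 3 features each.
attributions = {
    "model_a": [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    "model_b": [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0]],
    "model_c": [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]],
}

graph = transferability_graph(attributions)
print(closest_model(graph, "model_a"))
```

A smaller distance is read here as a hint that knowledge may transfer more easily between the two models; with real networks the attribution maps would come from an attribution method applied to each model on the same probe data.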

Authors

This project is developed by the VIPA Lab from Zhejiang University and Zhejiang Lab.