Zhongjie Yu¹, Lin Chen², Zhongwei Cheng², Jiebo Luo³

¹University of Wisconsin-Madison, ²Futurewei, ³University of Rochester

Full paper: Link

Zhongjie Yu's email: zhongjie0721@gmail.com

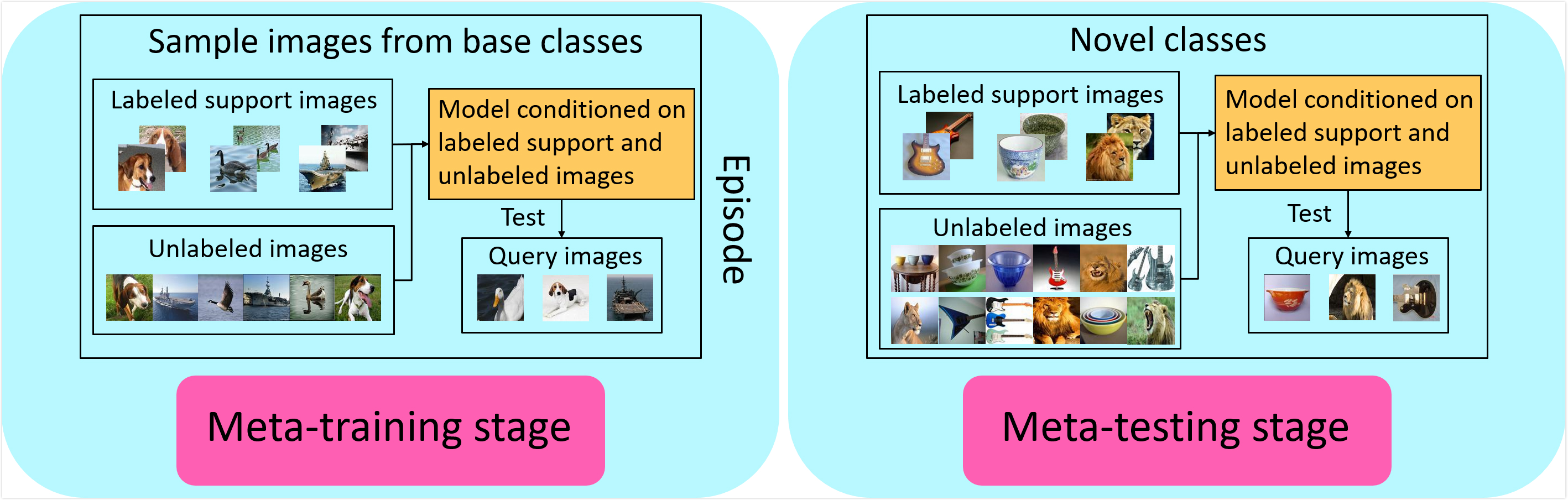

The successful application of deep learning to many visual recognition tasks relies heavily on the availability of a large amount of labeled data, which is usually expensive to obtain. The few-shot learning problem has attracted increasing attention from researchers seeking to build a robust model from only a few labeled samples. Most existing works tackle this problem under the meta-learning framework by mimicking the few-shot learning task with an episodic training strategy. In this paper, we propose a new transfer-learning framework for semi-supervised few-shot learning to fully utilize the auxiliary information from labeled base-class data and unlabeled novel-class data. The framework consists of three components: 1) pre-training a feature extractor on base-class data; 2) using the feature extractor to initialize the classifier weights for the novel classes; and 3) further updating the model with a semi-supervised learning method. Under the proposed framework, we develop a novel method for semi-supervised few-shot learning called TransMatch by instantiating the three components with Imprinting and MixMatch. Extensive experiments on two popular benchmark datasets for few-shot learning, CUB-200-2011 and miniImageNet, demonstrate that our proposed method can effectively utilize the auxiliary information from labeled base-class data and unlabeled novel-class data to significantly improve the accuracy of few-shot learning tasks.

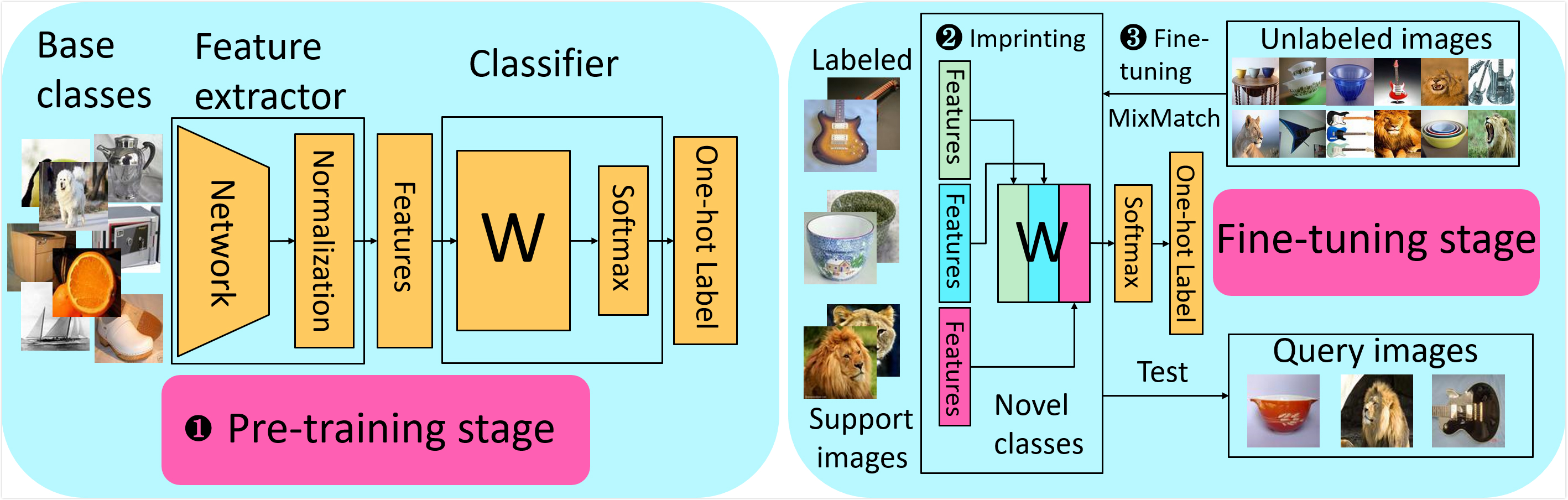

Pre-train feature extractor: We pre-train a base network on all examples in the base classes. Unlike conventional meta-learning-based semi-supervised few-shot learning methods, this helps the classifier fully absorb the information from the base classes.

Classifier weight imprinting: We use the feature extractor to extract feature embeddings for the few labeled samples of the novel classes. We then use these feature embeddings to imprint the weights of the novel classifier, which gives the classifier a better initialization.
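As a rough illustration of the imprinting step, the sketch below assumes the common formulation of weight imprinting: each novel-class weight is the re-normalized mean of the L2-normalized embeddings of that class's labeled samples, and classification uses scaled cosine similarity. The function names and the `scale` value are hypothetical, and plain Python lists stand in for the feature extractor's outputs.

```python
import math
from collections import defaultdict


def l2_normalize(vec):
    """Scale a vector to unit L2 norm (an all-zero vector is returned as-is)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def imprint_weights(embeddings, labels):
    """Return {class_label: unit-norm weight vector} from labeled embeddings.

    Assumed formulation: average the normalized embeddings of each class,
    then normalize the average again.
    """
    by_class = defaultdict(list)
    for emb, label in zip(embeddings, labels):
        by_class[label].append(l2_normalize(emb))

    weights = {}
    for label, embs in by_class.items():
        mean = [sum(col) / len(embs) for col in zip(*embs)]
        weights[label] = l2_normalize(mean)
    return weights


def cosine_logits(embedding, weights, scale=10.0):
    """Scaled cosine similarity between one embedding and every class weight.

    `scale` is a hypothetical temperature-like factor, not a value from the paper.
    """
    emb = l2_normalize(embedding)
    return {
        label: scale * sum(a * b for a, b in zip(emb, w))
        for label, w in weights.items()
    }


# Toy 2-D embeddings for a 2-way, few-shot support set.
support = [[2.0, 0.0], [0.0, 3.0], [4.0, 0.0]]
support_labels = ["a", "b", "a"]
novel_weights = imprint_weights(support, support_labels)
query_logits = cosine_logits([5.0, 0.0], novel_weights)
```

Because the imprinted weights are computed directly from the labeled embeddings, the novel classifier starts from a sensible decision rule before any fine-tuning takes place.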

Semi-supervised fine-tuning by MixMatch: We adopt the holistic semi-supervised method MixMatch to fine-tune the novel classifier on the few labeled samples and many unlabeled samples. Any stronger semi-supervised method could be substituted directly to fine-tune the novel classifier.
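Two of the building blocks of MixMatch, label guessing with sharpening and MixUp, can be sketched in a few lines. This is a minimal illustration rather than the training code: the function names are hypothetical, plain lists stand in for images and model predictions, and the defaults (`temperature=0.5`, `alpha=0.75`) are the values reported for MixMatch, which may differ from the settings used in our experiments.

```python
import random


def sharpen(probs, temperature=0.5):
    """Lower the entropy of a distribution by raising it to the power 1/T."""
    powered = [p ** (1.0 / temperature) for p in probs]
    total = sum(powered)
    return [p / total for p in powered]


def guess_label(predictions, temperature=0.5):
    """Guess a soft label for one unlabeled sample.

    `predictions` holds the model's class distributions for several
    augmented views of the same sample; they are averaged, then sharpened.
    """
    k = len(predictions)
    mean = [sum(col) / k for col in zip(*predictions)]
    return sharpen(mean, temperature)


def mixup(x1, y1, x2, y2, alpha=0.75, rng=random):
    """Mix two (input, soft label) pairs, keeping the result closer to the first."""
    lam = rng.betavariate(alpha, alpha)
    lam = max(lam, 1.0 - lam)  # MixMatch variant: the first pair dominates
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y


# Two augmented views of one unlabeled sample in a 2-way task.
soft_label = guess_label([[0.5, 0.5], [0.7, 0.3]])

# Mix a labeled sample (one-hot label) with the unlabeled one (guessed label).
mixed_x, mixed_y = mixup([1.0, 1.0], [1.0, 0.0], [0.0, 0.0], soft_label)
```

In the full method, the mixed labeled and unlabeled batches feed a supervised cross-entropy term and an unsupervised consistency term, respectively; those losses are omitted here for brevity.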

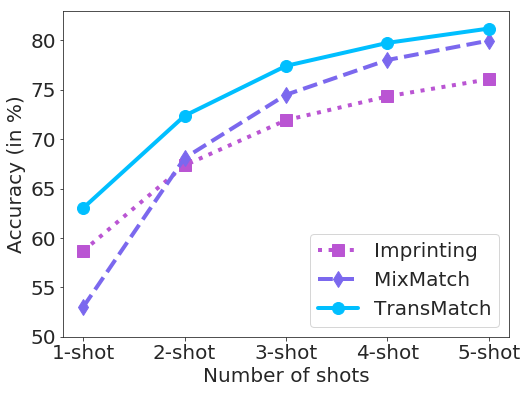

Comparison of Imprinting, MixMatch, and our TransMatch for 5-way classification with different numbers of shots on miniImageNet

Comparison of Pseudo-Label and MixMatch as the semi-supervised fine-tuning method on miniImageNet

Accuracy (in %) of MixMatch and TransMatch with different numbers of distractor classes on miniImageNet

Please cite our paper if it is helpful to your work:

@inproceedings{yu2020transmatch,

title={TransMatch: A Transfer-Learning Scheme for Semi-Supervised Few-Shot Learning},

author={Yu, Zhongjie and Chen, Lin and Cheng, Zhongwei and Luo, Jiebo},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={12856--12864},

year={2020}

}