
This is a PaddlePaddle model zoo enabled by Imagination NCSDK, and is part of the Hardware Ecosystem Co-Creation Program. Targeting devices that embed Imagination computing IPs (such as GPU and NNA), PaddlePaddle inference models are compiled into deployment packages using the Imagination Neural Compute SDK (NCSDK). This repository includes the deployment models and the test code for these models.

We start with models from PaddleClas, such as EfficientNet. A separate tutorial covers deployment and testing of models from PaddleSeg.

So far, only the Unisoc ROC1 has been targeted.

HW: Unisoc ROC1

SW: NCSDK 2.8, NNA DDK 3.4, GPU DDK 1.17

The evaluation code is written in Python and depends on the following packages:

- pillow

- PyYAML

- numpy==1.19.5

- scipy==1.5.4

- scikit-learn==0.24.2
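Before running the evaluation it can help to confirm that these packages, and the pinned versions, are present. The following is a minimal sketch, assuming Python 3.8 or later (for `importlib.metadata`); the `check_requirements` helper is hypothetical and not part of this repository.

```python
"""Check that the evaluation dependencies are installed (a sketch)."""
from importlib import metadata

# Pins copied from the list above; None means any version is accepted.
REQUIREMENTS = {
    "pillow": None,
    "PyYAML": None,
    "numpy": "1.19.5",
    "scipy": "1.5.4",
    "scikit-learn": "0.24.2",
}


def check_requirements(requirements=REQUIREMENTS):
    """Return a list of problems; an empty list means everything matches."""
    problems = []
    for name, pinned in requirements.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name}: not installed")
            continue
        if pinned is not None and installed != pinned:
            problems.append(f"{name}: found {installed}, expected {pinned}")
    return problems


if __name__ == "__main__":
    for line in check_requirements() or ["all requirements satisfied"]:
        print(line)
```

Distribution-name matching (for example `scikit-learn` versus `scikit_learn`) is more lenient on newer Python versions, so a "not installed" report on an older interpreter is worth double-checking with `pip list`.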

Deployment models and the validation set can be downloaded from the following server:

sftp server: transfer.imgtec.com

http client: https://transfer.imgtec.com

user_name: imgchinalab_public

password: public
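The packages can be fetched with any SFTP client or through the HTTP client. As one option, here is a minimal sketch using the third-party `paramiko` library (an assumption: it is not among this project's dependencies) with the public credentials above. The helper names `split_sftp_url` and `download` are hypothetical, and port 22 is assumed.

```python
"""Download a deployment package from the SFTP server (a sketch using paramiko)."""
import os
import posixpath
from urllib.parse import urlparse

# Public credentials published in this README.
USER = "imgchinalab_public"
PASSWORD = "public"


def split_sftp_url(url):
    """Split 'sftp://host/remote/path' into (host, remote_path, file_name)."""
    parsed = urlparse(url)
    if parsed.scheme != "sftp":
        raise ValueError(f"not an sftp URL: {url}")
    return parsed.hostname, parsed.path, posixpath.basename(parsed.path)


def download(url, local_dir="."):
    """Fetch one package over SFTP and return the local file path."""
    import paramiko  # third-party (pip install paramiko); imported lazily

    host, remote_path, file_name = split_sftp_url(url)
    transport = paramiko.Transport((host, 22))  # assumes the default SSH port
    try:
        # No host key is passed, so paramiko performs no host key verification.
        transport.connect(username=USER, password=PASSWORD)
        sftp = paramiko.SFTPClient.from_transport(transport)
        local_path = os.path.join(local_dir, file_name)
        sftp.get(remote_path, local_path)
        return local_path
    finally:
        transport.close()
```

Pass any `sftp://` address from the Download Address column of the results table to `download`; the URL parsing is kept separate so it can be checked without network access.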

The test framework supports two modes: inference and evaluation. It can run either the non-quantized PaddlePaddle model or the quantized PowerVR deployment models, so that the impact of quantization can be evaluated. It also supports distributed evaluation over gRPC.

Host: A powerful workstation with PaddlePaddle installed, used to compile models.

Target: A resource-constrained device equipped with Imagination compute IPs.

Inference runs on a single batch and consists of preprocessing, NN inference, and postprocessing. The NN that runs on the Imagination compute IPs is fixed at the compilation stage.

Evaluation runs on multiple batches from the evaluation dataset and calculates performance metrics from the combined inference results.
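To illustrate how per-batch results combine into dataset-level metrics such as the top-1 and top-5 accuracies reported below, here is a minimal NumPy sketch. The `TopKAccuracy` class is hypothetical; the framework's own metric code may be organized differently.

```python
import numpy as np


class TopKAccuracy:
    """Accumulate top-k accuracy over many inference batches."""

    def __init__(self, ks=(1, 5)):
        self.ks = ks
        self.correct = {k: 0 for k in ks}
        self.total = 0

    def update(self, logits, labels):
        """logits: (batch, num_classes) scores; labels: (batch,) class ids."""
        logits = np.asarray(logits)
        labels = np.asarray(labels)
        # Class indices sorted by descending score for each sample.
        ranked = np.argsort(-logits, axis=1)
        for k in self.ks:
            # A sample counts as a hit if its label is among the k best classes.
            hits = (ranked[:, :k] == labels[:, None]).any(axis=1)
            self.correct[k] += int(hits.sum())
        self.total += labels.shape[0]

    def result(self):
        """Fraction of correct samples for each k, over all batches seen."""
        return {f"top-{k}": self.correct[k] / self.total for k in self.ks}
```

Counting hits rather than averaging per-batch accuracies keeps the result exact when the last batch is smaller than the others.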

The PaddlePaddle engine and the PowerVR compute runtime can be regarded as different inference backends that share the same preprocessing and postprocessing. The PaddlePaddle engine runs non-quantized models, whereas the PowerVR runtime runs quantized models.
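The backend split can be pictured as a common interface with interchangeable implementations. Below is a minimal sketch under stated assumptions: the `Backend`, `DummyBackend`, `preprocess`, `postprocess`, and `run_inference` names are hypothetical, and the preprocessing is simplified (real ImageNet pipelines also resize and normalize with mean/std).

```python
from abc import ABC, abstractmethod

import numpy as np


class Backend(ABC):
    """Hypothetical interface: a backend only runs the NN itself."""

    @abstractmethod
    def infer(self, batch: np.ndarray) -> np.ndarray:
        ...


class DummyBackend(Backend):
    """Stand-in for a Paddle or PowerVR backend: returns all-zero logits."""

    def __init__(self, num_classes=1000):
        self.num_classes = num_classes

    def infer(self, batch):
        return np.zeros((batch.shape[0], self.num_classes), dtype=np.float32)


def preprocess(images):
    """Shared preprocessing: HWC uint8 images -> NCHW float32 in [0, 1]."""
    batch = np.stack(images).astype(np.float32) / 255.0
    return batch.transpose(0, 3, 1, 2)


def postprocess(logits):
    """Shared postprocessing: predicted class id per image."""
    return np.argmax(logits, axis=1)


def run_inference(backend, images):
    # Pre- and postprocessing stay identical; only the backend is swapped.
    return postprocess(backend.infer(preprocess(images)))
```

Because only `infer` differs, any accuracy gap between the Paddle and PowerVR runs can be attributed to the model and its quantization rather than to the surrounding pipeline.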

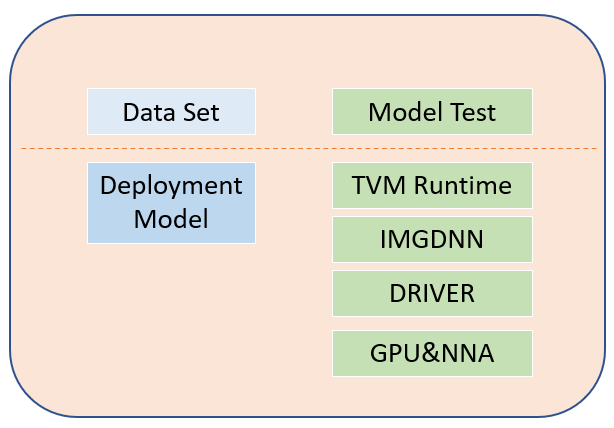

For PowerVR models, it is straight forward to run all stack on target device(Fig.1). However, it's not a good option here because of reasons below:

- Test code is written in Python as it is popular and powerful in AI/ML, however, it is not natively support in some platform, such as Android.

- Target devices are generally resource constrained.

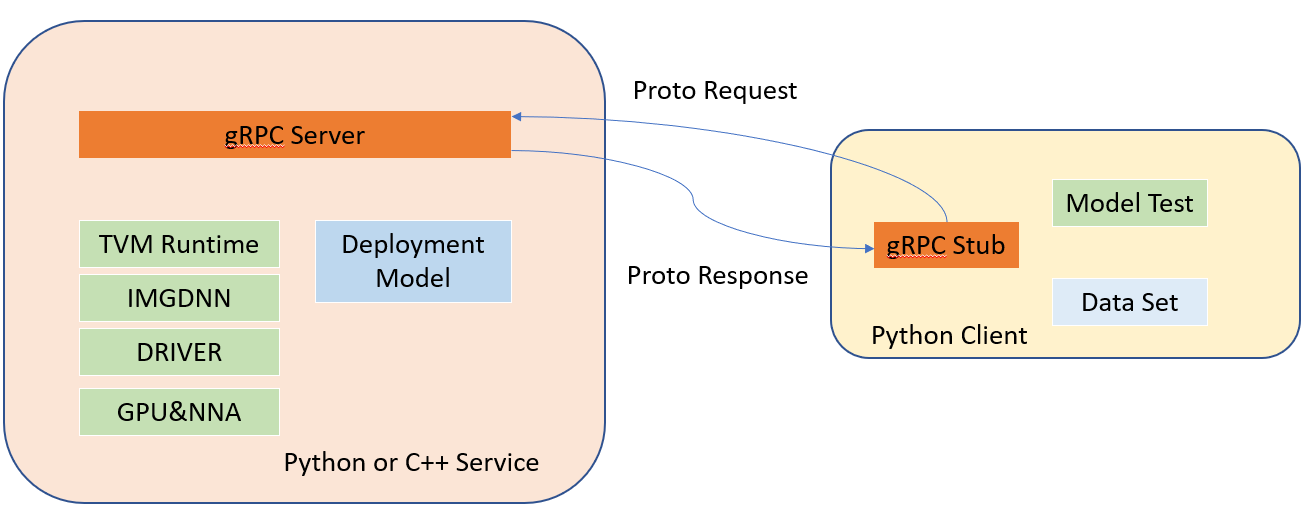

So we set up a distributed test system(Fig.2).Only NN inference runs on target device with deployment model. Dataloader, preprocess, postprocess, performance metric calcuation run on Host. We recommend run inference/evaluation through distributed system.

Fig.1 Standalone system
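In the distributed setup only the preprocessed batch and the network output cross the network. The actual message format is defined by the gRPC service in `python/engine/backend/pvr_grpc/`; the sketch below is a hypothetical stand-in that shows one self-describing tensor encoding (NumPy's `.npy` format, which carries dtype and shape) and a host-side round trip through a placeholder `send` callable.

```python
import io

import numpy as np


def tensor_to_bytes(array):
    """Serialize a tensor, including dtype and shape, into a byte string."""
    buffer = io.BytesIO()
    np.save(buffer, np.ascontiguousarray(array), allow_pickle=False)
    return buffer.getvalue()


def bytes_to_tensor(payload):
    """Inverse of tensor_to_bytes."""
    return np.load(io.BytesIO(payload), allow_pickle=False)


def remote_infer(send, batch):
    """Host side of one round trip.

    `send` is a placeholder for whatever ships bytes to the target and returns
    the reply bytes (for example a gRPC stub call); it is hypothetical here.
    """
    return bytes_to_tensor(send(tensor_to_bytes(batch)))
```

For a quick local check, a fake "target" can be a function that decodes the payload, computes something, and encodes the result again.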

Set up the development host and the deployment target device according to the NCSDK documentation. Make sure the basic IMGDNN tests pass and the tutorial example can be deployed successfully.

- Install PaddlePaddle

- Compile PaddlePaddle model into PowerVR deployment packages.

- Clone this repository.

- Place the evaluation dataset in the `EvalDatasetPath` directory.

- Copy `python/engine/backend/pvr_grpc/*` to the `GRPCServerPath` directory on the target device.

- Copy the PowerVR deployment package to `DeploymentModelPath`.

- Set the `base_name` field in `$GRPCServerPath/pvr_service_config.yml`, for example:

  | field | description | values |
  |---|---|---|
  | base_name | path to vm file | `$DeploymentModelPath/EfficientNetB0-AX2185-ncsdk-2_8_deploy.ro` |

- Launch the gRPC server on the target device:

  ```bash
  python PVRInferServer.py
  ```
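Since PyYAML is already a dependency, the `base_name` edit can also be scripted. A minimal sketch, assuming `base_name` is a top-level key of `pvr_service_config.yml` (as the table suggests); `update_base_name` and `set_base_name` are hypothetical helpers. Note that rewriting the file this way drops any YAML comments.

```python
import yaml


def update_base_name(yaml_text, vm_file):
    """Return the YAML text with the top-level base_name set to vm_file."""
    config = yaml.safe_load(yaml_text) or {}
    config["base_name"] = vm_file  # assumes base_name is a top-level key
    return yaml.safe_dump(config, default_flow_style=False)


def set_base_name(config_path, vm_file):
    """Rewrite pvr_service_config.yml in place (comments are not preserved)."""
    with open(config_path, "r", encoding="utf-8") as f:
        text = f.read()
    with open(config_path, "w", encoding="utf-8") as f:
        f.write(update_base_name(text, vm_file))
```

Run it on the target before launching the server, passing the path of the deployment package copied to `DeploymentModelPath`.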

Create a YAML config file. Some of its fields are described below; also refer to `configs/image_classification/EfficientNetB0.yaml`.

| field | description | values |
|---|---|---|
| Global.mode | test mode | `evaluation`, `inference` |
| Dataloader.dataset.name | dataset class name to be instantiated | `ImageNetDataset` |
| Dataloader.dataset.image_root | root directory of the ImageNet dataset images | string |
| Dataloader.dataset.label_path | path to the class_id label file | string |
| Dataloader.sampler.batch_size | batch size of the input | integer |
| Infer.infer_imgs | path to a test image or directory | string |
| Infer.batch_size | batch size of inference | integer |
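The dotted field names map onto nested YAML sections. The sketch below shows that correspondence with a hypothetical minimal config (the nesting is read off the dotted names; all paths are placeholders, and the real `EfficientNetB0.yaml` contains more fields) plus a small hypothetical `get_field` lookup helper.

```python
import yaml

# Hypothetical minimal config; paths are placeholders.
EXAMPLE = """
Global:
  mode: evaluation
Dataloader:
  dataset:
    name: ImageNetDataset
    image_root: path/to/imagenet/val
    label_path: path/to/imagenet/val_list.txt
  sampler:
    batch_size: 4
Infer:
  infer_imgs: path/to/images
  batch_size: 1
"""


def get_field(config, dotted_key):
    """Look up a dotted key such as 'Dataloader.sampler.batch_size'."""
    node = config
    for part in dotted_key.split("."):
        node = node[part]
    return node


config = yaml.safe_load(EXAMPLE)
```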

To run inference with the Paddle backend, set the fields below:

| field | description | values |
|---|---|---|
| Model.backend | backend used for inference | `paddle` |
| Model.Paddle.path | path to the Paddle model | string |
| Model.Paddle.base_name | base name of the model files | string |
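For reference, this is roughly what running a non-quantized model through the Paddle Inference Python API looks like. It is a sketch, not the repository's Paddle backend: it assumes Paddle 2.x and that `base_name` expands to `<base_name>.pdmodel` and `<base_name>.pdiparams` inside `path`; `paddle_model_files` and `paddle_infer` are hypothetical helpers.

```python
import os

import numpy as np


def paddle_model_files(path, base_name):
    """Assumed Paddle 2.x layout: <base_name>.pdmodel and <base_name>.pdiparams."""
    return (os.path.join(path, base_name + ".pdmodel"),
            os.path.join(path, base_name + ".pdiparams"))


def paddle_infer(path, base_name, batch):
    """Run one NCHW float32 batch through the non-quantized Paddle model."""
    from paddle.inference import Config, create_predictor  # requires paddlepaddle

    model_file, params_file = paddle_model_files(path, base_name)
    predictor = create_predictor(Config(model_file, params_file))
    # Feed the first (and, for these classifiers, only) input.
    input_handle = predictor.get_input_handle(predictor.get_input_names()[0])
    input_handle.reshape(list(batch.shape))
    input_handle.copy_from_cpu(np.ascontiguousarray(batch, dtype=np.float32))
    predictor.run()
    # Read back the first output, e.g. class logits.
    output_handle = predictor.get_output_handle(predictor.get_output_names()[0])
    return output_handle.copy_to_cpu()
```

Creating the predictor is the expensive step, so an evaluation loop would normally build it once and reuse it for every batch.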

To run all of the test code on the target device (standalone system), set the fields below:

| field | description | values |
|---|---|---|
| Model.backend | backend used for inference | `powervr` |
| Model.PowerVR.base_name | path to vm file | string |
| Model.PowerVR.input_name | network input name | string |
| Model.PowerVR.output_shape | shape of the output tensor | list |

To run the test code on the host and inference on the target device (distributed system), set the fields below:

| field | description | values |
|---|---|---|
| Model.backend | backend used for inference | `powervr_grpc` |
| Model.PowerVR_gRPC.pvr_server | IP of the gRPC server | IP address |
| Model.PowerVR.input_name | network input name | string |
| Model.PowerVR.output_shape | shape of the output tensor | list |
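The three tables differ only in which `Model.*` fields each backend needs. A minimal sketch of a pre-flight check built from exactly those tables; `REQUIRED_FIELDS` and `missing_fields` are hypothetical and not part of the test framework.

```python
# Fields listed in the tables above for each value of Model.backend.
REQUIRED_FIELDS = {
    "paddle": ["Model.Paddle.path", "Model.Paddle.base_name"],
    "powervr": ["Model.PowerVR.base_name", "Model.PowerVR.input_name",
                "Model.PowerVR.output_shape"],
    "powervr_grpc": ["Model.PowerVR_gRPC.pvr_server", "Model.PowerVR.input_name",
                     "Model.PowerVR.output_shape"],
}


def missing_fields(config):
    """Return the dotted keys required by Model.backend that are absent."""
    backend = config["Model"]["backend"]
    missing = []
    for dotted in REQUIRED_FIELDS[backend]:
        node = config
        for part in dotted.split("."):
            if not isinstance(node, dict) or part not in node:
                missing.append(dotted)
                break
            node = node[part]
    return missing
```

Note that the `powervr_grpc` backend still reads `input_name` and `output_shape` from the `Model.PowerVR` section, which the check reflects.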

Run the test with:

```bash
python tools/test_egret.py -c ./configs/image_classification/EfficientNetB0.yaml
```

Some fields can be overridden on the command line. For example, to override the batch size:

```bash
python tools/test_egret.py -c ./configs/image_classification/EfficientNetB0.yaml \
    -o DataLoader.Eval.sampler.batch_size=1
```
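How `tools/test_egret.py` parses `-o` is not shown here. The following hypothetical `apply_override` helper sketches one common approach: split the key on dots, walk (or create) the nested sections, and parse the value as YAML so that `1` becomes an integer and `[1, 1000]` a list.

```python
import yaml


def apply_override(config, override):
    """Apply one 'A.B.C=value' override to a nested config dict in place."""
    dotted, sep, raw_value = override.partition("=")
    if not sep:
        raise ValueError(f"expected KEY=VALUE, got {override!r}")
    *parents, leaf = dotted.split(".")
    node = config
    for part in parents:
        node = node.setdefault(part, {})  # create missing sections on the way
    # YAML parsing turns '1' into int 1 and '[1, 1000]' into a list.
    node[leaf] = yaml.safe_load(raw_value)
    return config
```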

| Model | Top-1 (%) | Top-5 (%) | Time (ms), bs=1 | Time (ms), bs=4 | Download Address |
|---|---|---|---|---|---|
| ResNet50 (d16-w16-b16) | 75.4 | 93.1 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/ResNet50-AX2185-d16w16b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| ResNet50 (non-quant) | 75.4 | 93.4 | null | null | link |
| ResNet50 (d8-w8-b16) | 74.6 | 93.2 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/ResNet50-AX2185-d8w8b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| HRNet_W48_C_ssld (d16-w16-b16) | 81.9 | 97.0 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/HRNet_W48_C_ssld-AX2185-d16w16b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| HRNet_W48_C_ssld (non-quant) | 82.2 | 96.9 | null | null | link |
| HRNet_W48_C_ssld (d8-w8-b16) | 81.2 | 96.6 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/HRNet_W48_C_ssld-AX2185-d8w8b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| VGG16 (d16-w16-b16) | 70.9 | 90.0 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/VGG16-AX2185-d16w16b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| VGG16 (non-quant) | 71.1 | 90.0 | null | null | link |
| VGG16 (d8-w8-b16) | 70.3 | 89.6 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/VGG16-AX2185-d8w8b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| EfficientNetB0 (d16-w16-b16) | 75.4 | 93.2 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/EfficientNetB0-AX2185-d16w16b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| EfficientNetB0 (non-quant) | 75.9 | 93.7 | null | null | link |
| MobileNetV3_large_x1_0 (d16-w16-b16) | 75.5 | 93.6 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/MobileNetV3-AX2185-d16w16b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| MobileNetV3_large_x1_0 (non-quant) | 75.4 | 93.2 | null | null | link |
| DarkNet53 (d16-w16-b16) | 76.8 | 93.4 | null | null | sftp://transfer.imgtec.com/paddle_models/paddle_classification/DarkNet53-AX2185-d16w16b16-ncsdk_2_8-aarch64_linux_gnu.zip |
| DarkNet53 (non-quant) | 76.6 | 93.4 | null | null | link |
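The table is easiest to read as the top-1 change of each quantized variant relative to its non-quantized model. The short sketch below does that arithmetic on the numbers copied from the table: the d16-w16-b16 variants stay within 0.5 points of the non-quantized models, while the d8-w8-b16 variants lose 0.8 to 1.0 points. `TOP1` and `quantization_delta` are illustrative names only.

```python
# Top-1 accuracy (%) copied from the table above.
TOP1 = {
    "ResNet50": {"non-quant": 75.4, "d16-w16-b16": 75.4, "d8-w8-b16": 74.6},
    "HRNet_W48_C_ssld": {"non-quant": 82.2, "d16-w16-b16": 81.9, "d8-w8-b16": 81.2},
    "VGG16": {"non-quant": 71.1, "d16-w16-b16": 70.9, "d8-w8-b16": 70.3},
    "EfficientNetB0": {"non-quant": 75.9, "d16-w16-b16": 75.4},
    "MobileNetV3_large_x1_0": {"non-quant": 75.4, "d16-w16-b16": 75.5},
    "DarkNet53": {"non-quant": 76.6, "d16-w16-b16": 76.8},
}


def quantization_delta(table):
    """Top-1 change (points) of each quantized variant vs. the non-quantized model."""
    deltas = {}
    for model, results in table.items():
        baseline = results["non-quant"]
        for variant, top1 in results.items():
            if variant != "non-quant":
                # Round to one decimal, matching the table's precision.
                deltas[(model, variant)] = round(top1 - baseline, 1)
    return deltas
```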

Contributions are very welcome, and we would really appreciate your feedback!

You are welcome to contribute to the PaddlePaddle Model Zoo based on Imagination NCSDK. In order for us to accept your contributions, we need explicit confirmation from you that you are able and willing to provide them under these terms; the mechanism we use for this is called a Developer's Certificate of Origin (DCO).

To participate under these terms, all that you must do is include a line like the following as the last line of the commit message for each commit in your contribution:

```
Signed-off-by: Random J. Developer <random@developer.example.org>
```

The simplest way to accomplish this is to add `-s` or `--signoff` to your `git commit` command.

You must use your real name (sorry, no pseudonyms, and no anonymous contributions).
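If you want to verify a commit message before pushing, a minimal sketch of a sign-off check is below. It assumes the trailer must be the last non-empty line, as described above, and matches the header case-insensitively; `has_signoff` is a hypothetical helper, not a tool this project provides.

```python
import re

# Trailer written by `git commit -s`: "Signed-off-by: Name <email>".
SIGNOFF = re.compile(r"^Signed-off-by: .+ <[^<>@\s]+@[^<>\s]+>$", re.IGNORECASE)


def has_signoff(commit_message):
    """True if the last non-empty line of the message is a sign-off trailer."""
    lines = [line.strip() for line in commit_message.strip().splitlines()]
    return bool(lines) and bool(SIGNOFF.match(lines[-1]))
```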