- Authors: Shoubin Yu, Jaemin Cho, Prateek Yadav, Mohit Bansal

- Paper: arXiv

- Online Demo: Try our Gradio demo on Hugging Face

# data & data preprocessing

./sevila_data

# pretrained checkpoints

./sevila_checkpoints

# SeViLA code

./lavis/

# running scripts for SeViLA localizer/answerer training/inference

./run_scripts
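As a quick sanity check before training or inference, the layout listed above can be verified with a few lines of Python. This is a minimal sketch, not part of the SeViLA code base: the helper name `missing_dirs` is hypothetical, and it assumes you run it from the repository root.

```python
from pathlib import Path

# Directory names taken from the layout listed in this README.
EXPECTED_DIRS = ("sevila_data", "sevila_checkpoints", "lavis", "run_scripts")


def missing_dirs(repo_root="."):
    """Return the expected directories that are absent under repo_root.

    Hypothetical helper for illustration; not part of the SeViLA repository.
    """
    root = Path(repo_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs(".")
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Repository layout looks complete.")
```

An empty result means all four directories are in place; any names returned still need to be created or downloaded.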

- (Optional) Creating conda environment

conda create -n sevila python=3.8

conda activate sevila

- Build from source

pip install -e .

We pre-train the SeViLA localizer on QVHighlights and host the checkpoints on Hugging Face. Download the checkpoints and put them under ./sevila_checkpoints. The checkpoints (814.55M) contain the pre-trained localizer and the zero-shot answerer.
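After downloading, it can help to confirm that the checkpoint files actually landed in the expected directory and have a plausible size. The sketch below is an illustration only: the helper name `list_checkpoints` is hypothetical, it makes no assumption about checkpoint file names or extensions, and it simply lists whatever files are found.

```python
from pathlib import Path


def list_checkpoints(ckpt_dir="sevila_checkpoints"):
    """Return (relative file name, size in MB) pairs for files under ckpt_dir.

    Hypothetical helper for illustration; returns an empty list if the
    directory does not exist.
    """
    root = Path(ckpt_dir)
    if not root.is_dir():
        return []
    return sorted(
        (str(p.relative_to(root)), p.stat().st_size / 1e6)
        for p in root.rglob("*")
        if p.is_file()
    )


if __name__ == "__main__":
    found = list_checkpoints()
    if not found:
        print("No checkpoint files found under sevila_checkpoints.")
    for name, size_mb in found:
        print(f"{name}: {size_mb:.2f} MB")
```

A file that is far smaller than expected usually points to an interrupted download.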

We also provide a Gradio-based UI for testing SeViLA locally. Running the demo locally requires about 12GB of memory.

- Installing Gradio:

pip install gradio==3.30.0

- Running the following command in a terminal will launch the demo:

python app.py

We test our model on:

Please download the original QA data and preprocess it via our scripts.

We provide SeViLA training and inference script examples as follows.

Please refer to the dataset page to customize your data path.
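The four example scripts in this section (pre-training, refinement, fine-tuning, inference) can also be driven from Python. The sketch below is one possible wrapper, not something shipped with the repository: the function name `run_stages` and the `dry_run` flag are hypothetical, and running the stages back to back in listing order is an assumption. Each script may still need its data and checkpoint paths edited first.

```python
import subprocess

# Script paths as listed in this README; the order follows the listing.
STAGE_SCRIPTS = [
    "run_scripts/sevila/pre-train/pretrain_qvh.sh",
    "run_scripts/sevila/refinement/nextqa_sr.sh",
    "run_scripts/sevila/finetune/nextqa_ft.sh",
    "run_scripts/sevila/inference/nextqa_infer.sh",
]


def run_stages(scripts=STAGE_SCRIPTS, dry_run=True):
    """Run each script with `sh`, stopping at the first failure.

    Hypothetical helper for illustration. With dry_run=True the commands
    are only printed. Returns the list of commands that were built.
    """
    commands = [["sh", script] for script in scripts]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Print the commands without executing them.
    run_stages(dry_run=True)
```

Calling `run_stages(dry_run=False)` from the repository root would execute the scripts one after another; pass a shorter list to run a single stage.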

sh run_scripts/sevila/pre-train/pretrain_qvh.sh

sh run_scripts/sevila/refinement/nextqa_sr.sh

sh run_scripts/sevila/finetune/nextqa_ft.sh

sh run_scripts/sevila/inference/nextqa_infer.sh

We thank the developers of LAVIS, BLIP-2, CLIP, and All-in-One for their public code release.

Please cite our paper if you use our models in your works:

@article{yu2023self,

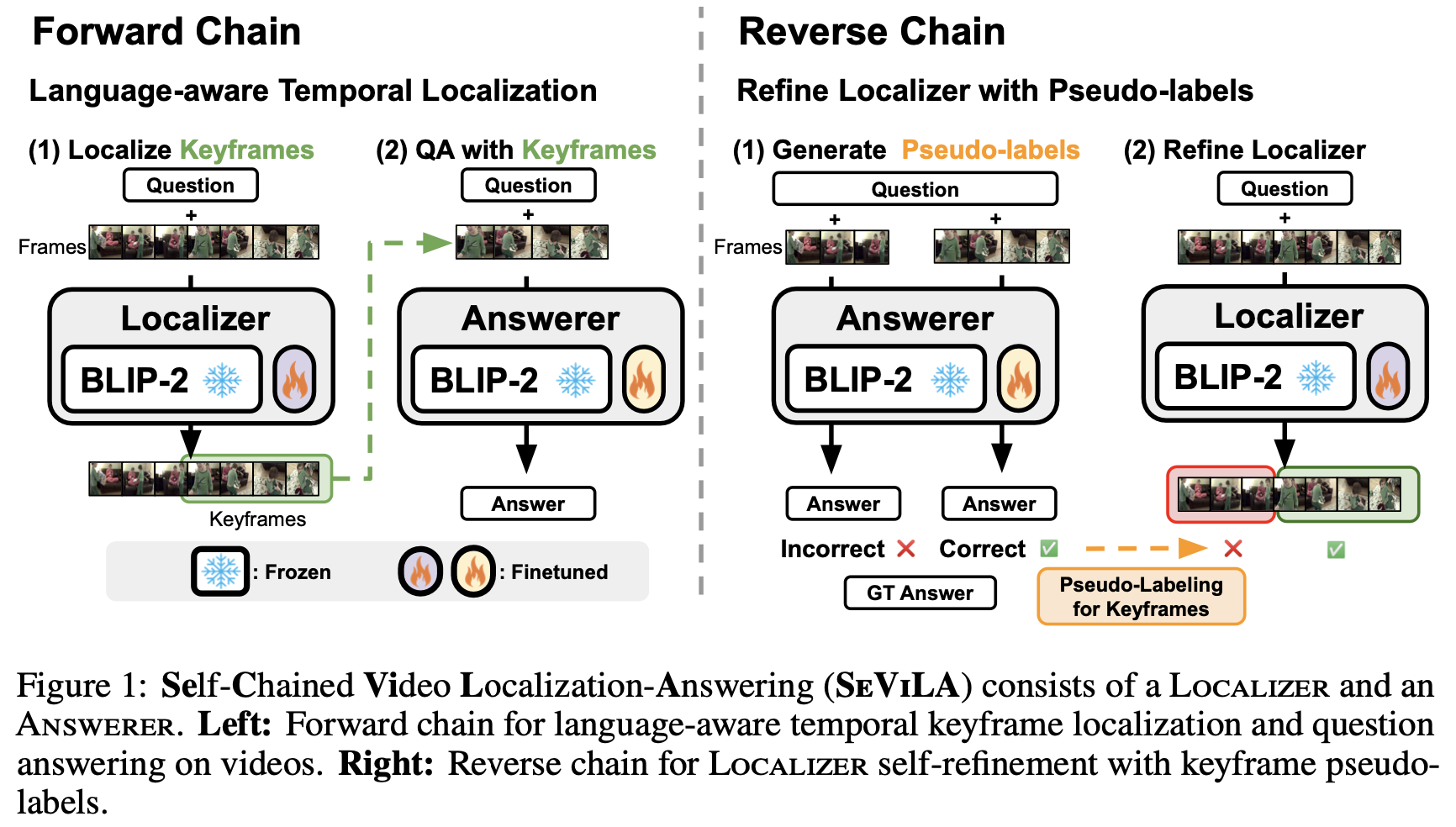

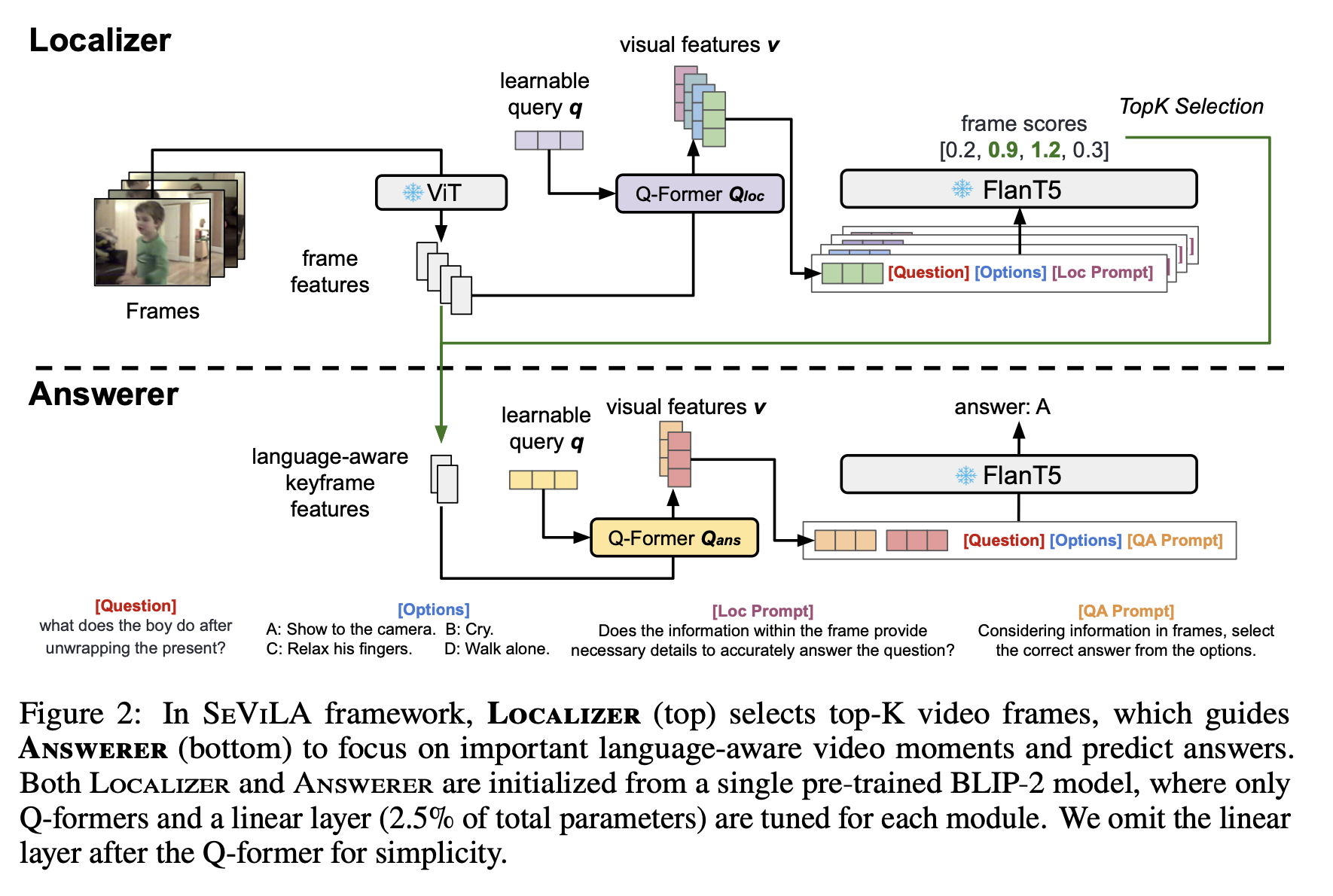

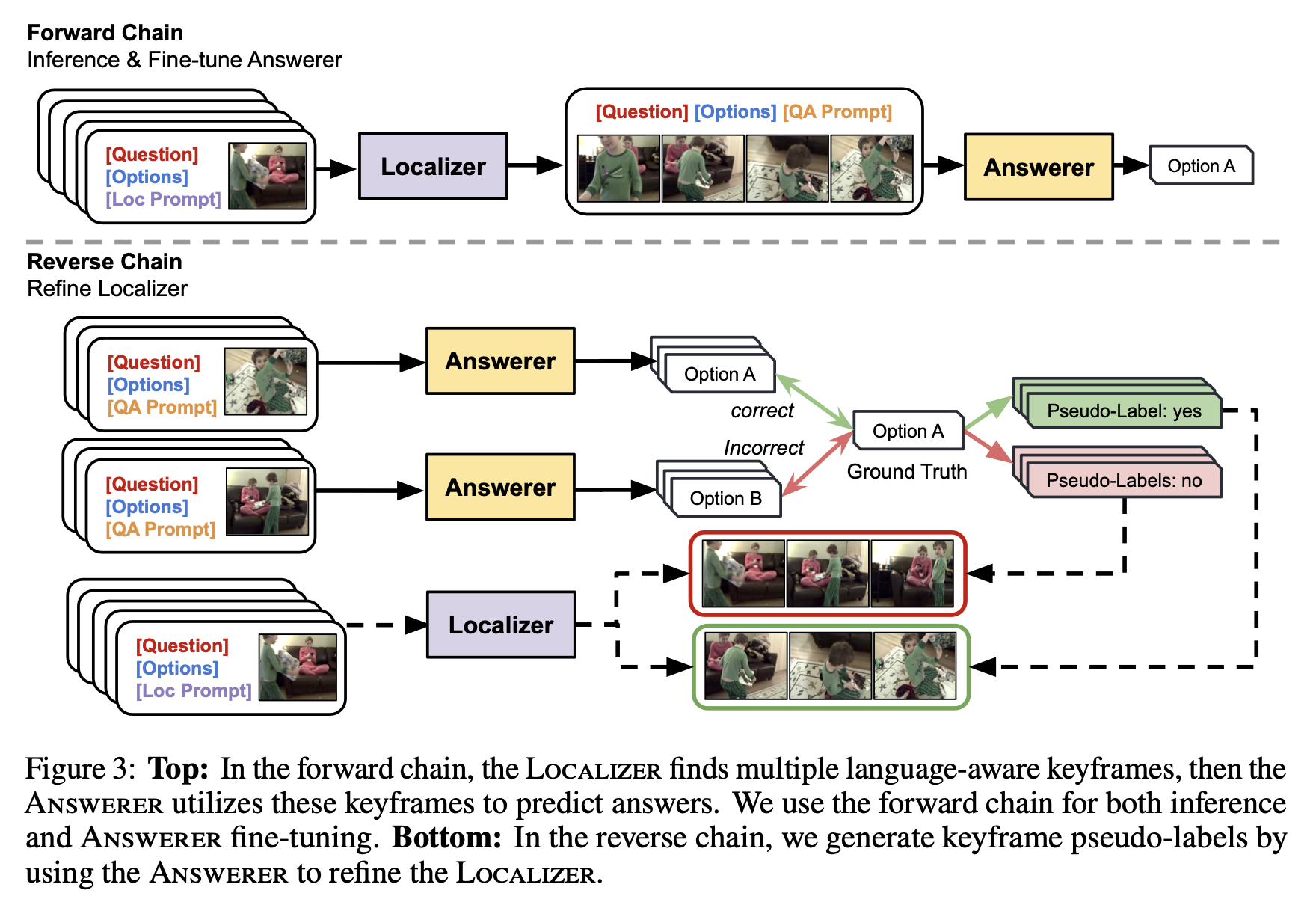

title={Self-Chained Image-Language Model for Video Localization and Question Answering},

author={Yu, Shoubin and Cho, Jaemin and Yadav, Prateek and Bansal, Mohit},

journal={arXiv preprint arXiv:2305.06988},

year={2023}

}