Lingxiao Yang, Ru-Yuan Zhang, Yanchen Wang, Xiaohua Xie

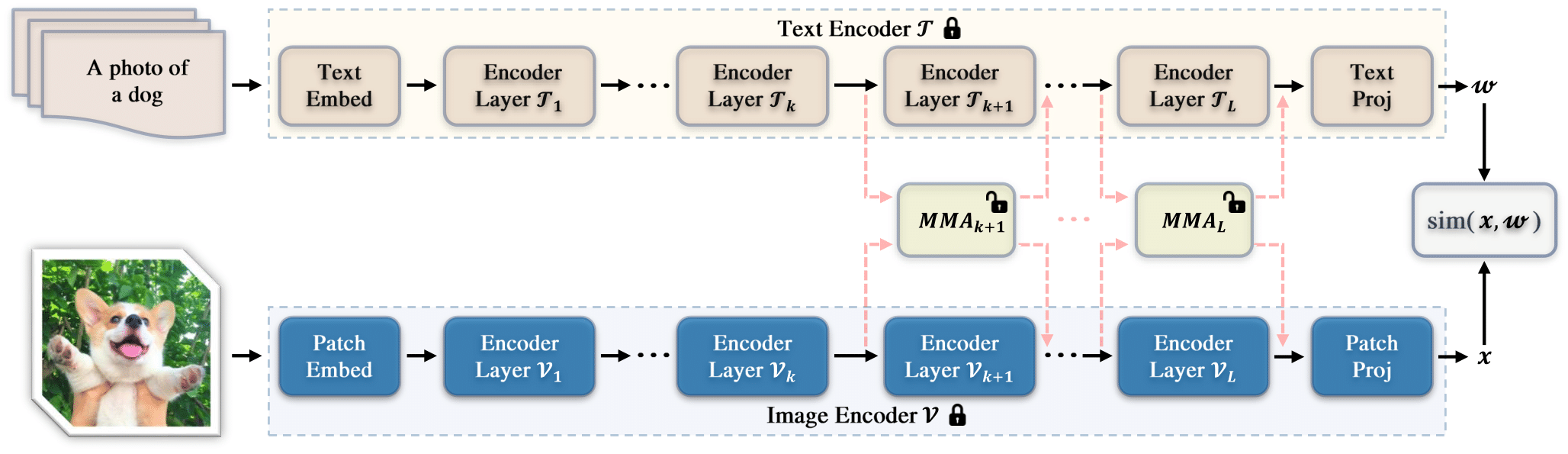

Abstract: Pre-trained Vision-Language Models (VLMs) have served as excellent foundation models for transfer learning in diverse downstream tasks. However, tuning VLMs for few-shot generalization tasks faces a discrimination-generalization dilemma, i.e., general knowledge should be preserved and task-specific knowledge should be fine-tuned. How to precisely identify these two types of representations remains a challenge. In this paper, we propose a Multi-Modal Adapter (MMA) for VLMs to improve the alignment between representations from text and vision branches. MMA aggregates features from different branches into a shared feature space so that gradients can be communicated across branches. To determine how to incorporate MMA, we systematically analyze the discriminability and generalizability of features across diverse datasets in both the vision and language branches, and find that (1) higher layers contain discriminable dataset-specific knowledge, while lower layers contain more generalizable knowledge, and (2) language features are more discriminable than visual features, and there are large semantic gaps between the features of the two modalities, especially in the lower layers. Therefore, we only incorporate MMA into a few higher layers of transformers to achieve an optimal balance between discrimination and generalization. We evaluate the effectiveness of our approach on three tasks: generalization to novel classes, novel target datasets, and domain generalization. Compared to many state-of-the-art methods, our MMA achieves leading performance in all evaluations.

- We introduce a dataset-level analysis method to systematically examine feature representations for transformer-based CLIP models. This analysis helps build more effective and efficient adapters for VLMs.

- We propose a novel adapter that contains separate projection layers to improve feature representations for image and text encoders independently. We also introduce a shared projection to provide better alignment between vision-language representations.

- We integrate our adapter into the well-known CLIP model and evaluate it on various few-shot generalization tasks. Experimental results show that our method achieves leading performance among all compared approaches.
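To make the adapter structure described above more concrete, here is a minimal PyTorch sketch of one adapter with separate per-branch projections around a single shared projection. It is an illustration only, not the repository's implementation: the class name `MultiModalAdapterSketch`, the bottleneck size `hidden_dim=32`, the ReLU activation, the residual form, and the `scale` value are all assumptions chosen for the example. The default widths (768 for vision, 512 for text) match CLIP ViT-B/16.

```python
import torch
import torch.nn as nn


class MultiModalAdapterSketch(nn.Module):
    """Hypothetical adapter: branch-specific down/up projections, one shared projection."""

    def __init__(self, vision_dim=768, text_dim=512, hidden_dim=32, scale=0.1):
        super().__init__()
        # Separate down-projections map each branch into a common low-dimensional space.
        self.down = nn.ModuleDict({
            "vision": nn.Linear(vision_dim, hidden_dim),
            "text": nn.Linear(text_dim, hidden_dim),
        })
        # The shared projection is used by both branches, so it receives
        # gradients from the vision side and from the text side.
        self.shared = nn.Linear(hidden_dim, hidden_dim)
        # Separate up-projections map back to each branch's own width.
        self.up = nn.ModuleDict({
            "vision": nn.Linear(hidden_dim, vision_dim),
            "text": nn.Linear(hidden_dim, text_dim),
        })
        self.act = nn.ReLU()
        self.scale = scale  # assumed weighting of the adapter output

    def forward(self, x, branch):
        h = self.act(self.down[branch](x))
        h = self.act(self.shared(h))
        # Residual form (an assumption): the frozen features pass through unchanged.
        return x + self.scale * self.up[branch](h)


adapter = MultiModalAdapterSketch()
vision_tokens = torch.randn(2, 197, 768)  # (batch, tokens, width), illustrative shapes
text_tokens = torch.randn(2, 77, 512)
vision_out = adapter(vision_tokens, "vision")
text_out = adapter(text_tokens, "text")
```

In this sketch, a loss computed on either branch backpropagates through `shared`, which is one simple way to let gradients be communicated across branches. Following the analysis in the abstract, such a module would be attached only to a few higher transformer layers.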

Results reported below are average accuracy across 11 recognition datasets over 3 seeds. Please refer to our paper for more details.

| Name | Base Accuracy | Novel Accuracy | Harmonic Mean |
|---|---|---|---|
| CLIP | 69.34 | 74.22 | 71.70 |
| CoOp | 82.69 | 63.22 | 71.66 |
| CoCoOp | 80.47 | 71.69 | 75.83 |
| ProDA | 81.56 | 72.30 | 76.65 |
| KgCoOp | 80.73 | 73.60 | 77.00 |
| MaPLe | 82.28 | 75.14 | 78.55 |
| LASP | 82.70 | 74.90 | 78.61 |
| LASP-V | 83.18 | 76.11 | 79.48 |
| RPO | 81.13 | 75.00 | 77.78 |
| MMA | 83.20 | 76.80 | 79.87 |
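The Harmonic Mean column appears to follow the usual definition H = 2·B·N / (B + N), where B is base accuracy and N is novel accuracy. The short Python sketch below recomputes it for a few rows as a sanity check. The helper name `harmonic_mean` and the `rows` dictionary are illustrative and not part of this repository.

```python
def harmonic_mean(base_acc: float, novel_acc: float) -> float:
    """Harmonic mean of base and novel accuracy (both in percent)."""
    return 2.0 * base_acc * novel_acc / (base_acc + novel_acc)


# (base accuracy, novel accuracy) copied from the table above.
rows = {
    "CLIP": (69.34, 74.22),
    "CoOp": (82.69, 63.22),
    "MMA": (83.20, 76.80),
}

for name, (base_acc, novel_acc) in rows.items():
    print(f"{name}: {harmonic_mean(base_acc, novel_acc):.2f}")
```

Because the harmonic mean is pulled toward the smaller of the two values, a method such as CoOp with high base accuracy but low novel accuracy scores below methods with a more balanced trade-off.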

This code is built on top of the awesome project - CoOp, so you need to follow its setup steps:

First, install the dassl environment (Dassl.pytorch). Simply follow the instructions described here to install dassl as well as PyTorch. After that, run `pip install -r requirements.txt` under `Multi-Modal-Adapter/` to install a few more packages required by CLIP (do this while the dassl environment is activated).

Second, you need to follow DATASETS.md to install the datasets.

The script run_examples.sh provides a simple illustration. For example, to run the training and evaluation on Base-to-Novel generalization with seed-1 on the GPU-0, you can use the following command:

    # arg1 = used gpu_id
    # arg2 = seed number
    bash run_examples.sh 0 1

If you find this work helpful for your research, please kindly cite the following paper:

    @InProceedings{Yang_2024_CVPR,
      author    = {Yang, Lingxiao and Zhang, Ru-Yuan and Wang, Yanchen and Xie, Xiaohua},
      title     = {MMA: Multi-Modal Adapter for Vision-Language Models},
      booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      month     = {June},
      year      = {2024},
      pages     = {23826-23837}
    }