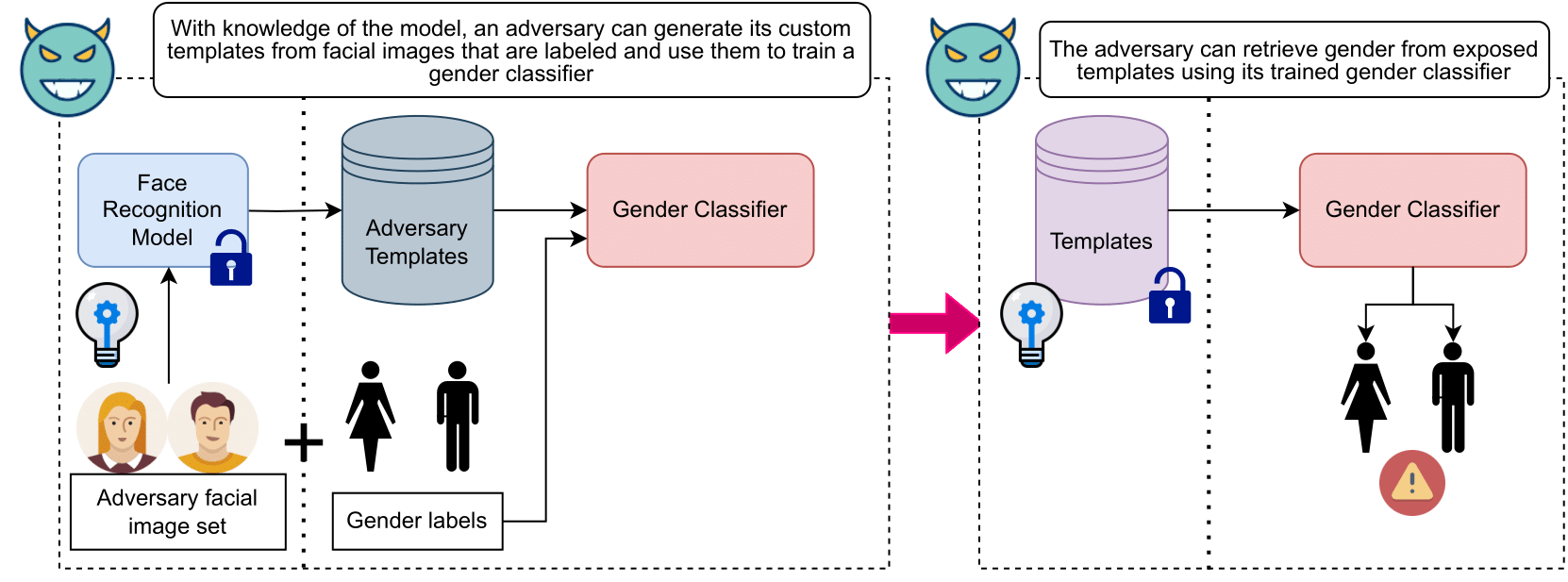

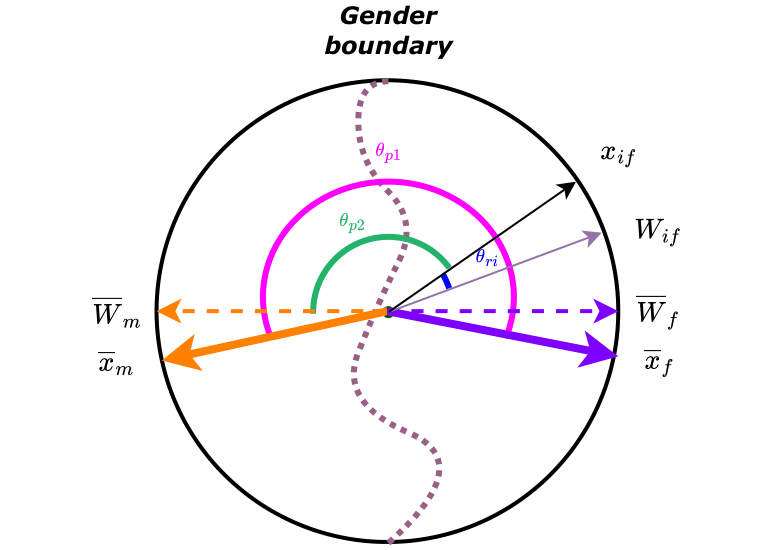

This repository contains code to reproduce the results from our paper "Gender Privacy Angular Constraints for Face Recognition" published in the journal IEEE Transactions on Biometrics, Behavior, and Identity Science in 2024. By applying angular constraints during model finetuning, the project aims to minimize the risk of revealing individuals' gender information, thereby enhancing privacy at the template level of face recognition systems.

To install the necessary dependencies for this project, use the provided requirements.txt file:

pip install -r requirements.txt

The project utilizes datasets such as LFW, AgeDB, ColorFeret, and a sample of VGGFace2 for verification and evaluation of gender privacy. You can download the binary files containing the images for evaluation as well as the pre-trained privacy-enhanced layers for each backbone here.

Ensure that your image dataset is organized in a specific folder structure. The root folder of the dataset should contain subject folders, and within each subject folder, you should place the images belonging to that subject. The subject folders should be named subjectid_gender:

subjectid: A unique identifier for the subject.

gender: The perceived gender expression (feminine 1 or masculine 0) of the subject.

A sample directory tree could be:

dataset_root/

├── MariaCallas_1/

│ ├── image1.jpg

│ ├── image2.jpg

│ └── ...

├── ElvisPresley_0/

│ ├── image1.jpg

│ ├── image2.jpg

│ └── ...

└── ...
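The folder convention above can be checked before training with a short script. The following is a minimal sketch, not part of the repository: the helper names `parse_subject_folder` and `index_dataset` and the list of accepted image extensions are assumptions made for illustration.

```python
from pathlib import Path

# Assumption: these extensions are illustrative; adjust them to your data.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def parse_subject_folder(name):
    """Split a 'subjectid_gender' folder name into (subject_id, gender_label)."""
    # Split on the last underscore so subject ids may contain underscores.
    subject_id, sep, gender = name.rpartition("_")
    if not sep or not subject_id or gender not in {"0", "1"}:
        raise ValueError(f"Folder name {name!r} does not match subjectid_gender")
    return subject_id, int(gender)


def index_dataset(root):
    """Return a list of (image_path, subject_id, gender_label) tuples."""
    samples = []
    for subject_dir in sorted(Path(root).iterdir()):
        if not subject_dir.is_dir():
            continue  # ignore stray files in the dataset root
        subject_id, gender = parse_subject_folder(subject_dir.name)
        for image_path in sorted(subject_dir.iterdir()):
            if image_path.suffix.lower() in IMAGE_EXTENSIONS:
                samples.append((image_path, subject_id, gender))
    return samples
```

Calling `index_dataset("dataset_root")` on the tree shown above would list every image together with its subject identifier and gender label, and raise a `ValueError` for any subject folder that does not follow the naming scheme.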

After specifying the training configuration in the file ./config/config.py, run this command:

python finetune.py

Please refer to our paper for the choice of hyperparameters.

To evaluate the finetuned models, run this command:

python eval/evaluation.py --log_root "path/to/log" --model_root "path/to/model/directory" --experiment-name "experimentname" --reference_pth "path/to/reference/csv/results"

experiment-name should refer to the type of training setting. For instance, if you train using only one dataset, e.g., ColorFeret, set experiment-name to OneDataset/ColorFeret. The script eval/evaluation.py will run the evaluation of all the models generated with this experiment setting.

If you encounter any issues, have suggestions, or want to contribute improvements, please submit a pull request.

This repository contains some code from InsightFace: 2D and 3D Face Analysis Project. If you find this repository useful and use it for research purposes, please consider citing the following papers.

@article{rezgui2024gender,

title={Gender Privacy Angular Constraints for Face Recognition},

author={Rezgui, Zohra and Strisciuglio, Nicola and Veldhuis, Raymond},

journal={IEEE Transactions on Biometrics, Behavior, and Identity Science},

year={2024},

publisher={IEEE}

}

@inproceedings{boutros2022elasticface,

title={Elasticface: Elastic margin loss for deep face recognition},

author={Boutros, Fadi and Damer, Naser and Kirchbuchner, Florian and Kuijper, Arjan},

booktitle={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},

pages={1578--1587},

year={2022}

}

@article{liu2022sphereface,

title={Sphereface revived: Unifying hyperspherical face recognition},

author={Liu, Weiyang and Wen, Yandong and Raj, Bhiksha and Singh, Rita and Weller, Adrian},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

volume={45},

number={2},

pages={2458--2474},

year={2022},

publisher={IEEE}

}

@inproceedings{deng2019arcface,

title={Arcface: Additive angular margin loss for deep face recognition},

author={Deng, Jiankang and Guo, Jia and Xue, Niannan and Zafeiriou, Stefanos},

booktitle={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},

pages={4690--4699},

year={2019}

}

This work was supported by the PriMa project that has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 860315.