Implementations of various RL Algorithms

Contents:

- Reinforcement Learning: An Introduction (2nd ed, 2018) by Sutton and Barto

- UCL Course on RL (2016) YouTube lectures by David Silver

- Mini Posts - other algorithms

Implementations of selected algorithms from the Sutton and Barto book. I tried to keep the code snippets minimal and faithful to the book.

| Part I: Tabular Solution Methods | |

|

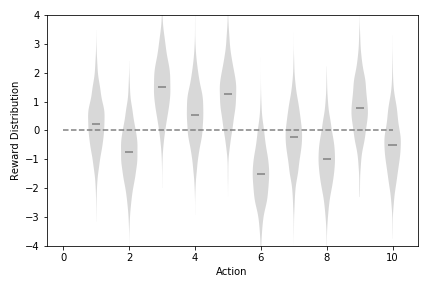

Chapter 2: Multi-armed Bandits

|

|

|

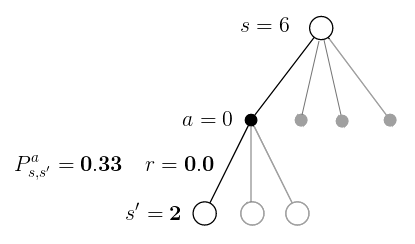

Chapter 4: Dynamic Programming

|

|

|

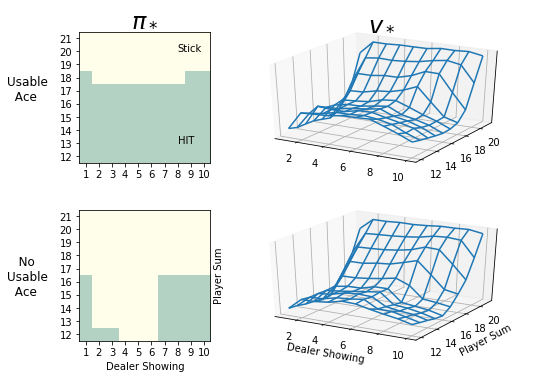

Chapter 5: Monte Carlo Methods

|

|

|

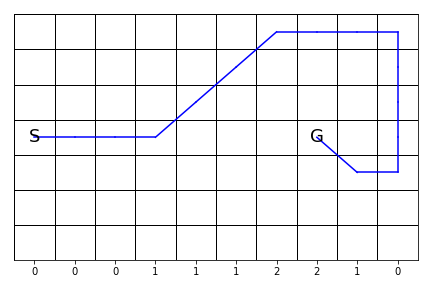

Chapter 6: Temporal-Difference Learning

|

|

| Part II: Approximate Solution Methods | |

|

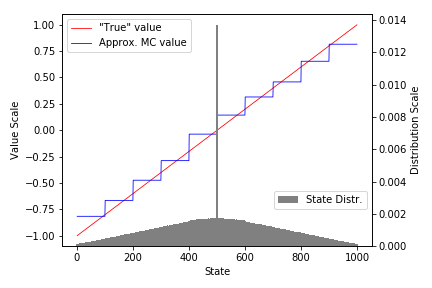

Chapter 9: On-Policy Prediction with Approximation

|

|

|

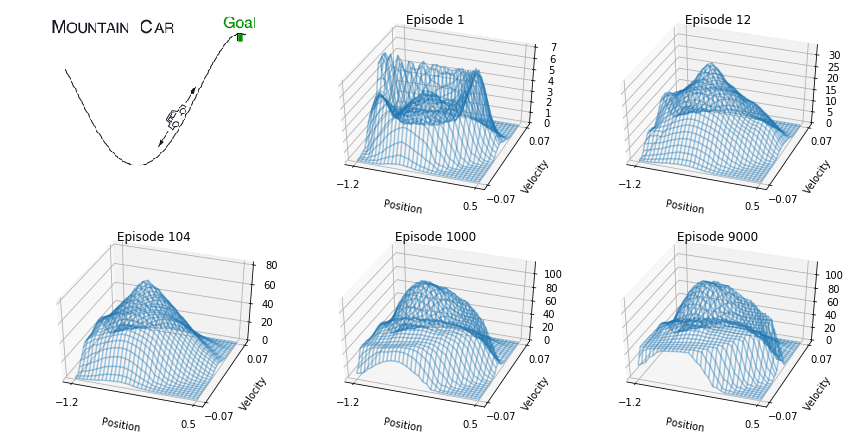

Chapter 10: On-Policy Control with Approximation

|

|
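
To give a flavour of the Chapter 2 material, here is a minimal sketch of an ε-greedy bandit with incremental sample-average estimates. It is an illustration written for this README, not a copy of the repository code; the function name `run_bandit`, the arm means, and the unit-variance Gaussian rewards are all assumptions.

```python
import random

def run_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy bandit with sample-average value estimates (illustrative)."""
    rng = random.Random(seed)
    k = len(true_means)
    Q = [0.0] * k   # estimated value of each arm
    N = [0] * k     # number of times each arm was pulled
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(k)                     # explore: uniform random arm
        else:
            a = max(range(k), key=lambda i: Q[i])    # exploit: ties go to the lowest index
        r = rng.gauss(true_means[a], 1.0)            # assumed reward model: N(mean, 1)
        N[a] += 1
        Q[a] += (r - Q[a]) / N[a]                    # incremental sample-average update
    return Q, N

# Hypothetical 3-armed problem; arm 1 has the highest true mean.
Q, N = run_bandit([0.2, 1.0, -0.5])
print("estimates:", [round(q, 2) for q in Q])
print("pull counts:", N)
```

The incremental update avoids storing past rewards: each estimate moves toward the latest reward by a step of `1 / N[a]`, which is equivalent to keeping a running mean.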

A more in-depth explanation of selected concepts from the David Silver lectures and the Sutton and Barto book.

- Lecture 3 - Dynamic Programming
  - Dynamic Programming - Iterative Policy Evaluation, Policy Iteration, Value Iteration

- Lecture 4 - Model-Free Prediction
  - MC and TD Prediction
  - N-Step and TD(λ) Prediction - Forward TD(λ) and Backward TD(λ) with Eligibility Traces

- Lecture 5 - Model-Free Control
  - On-Policy Control - MC, TD, N-Step, Forward TD(λ), Backward TD(λ) with Eligibility Traces
  - Off-Policy Control - Expectation Based - Q-Learning, Expected SARSA, Tree Backup
  - Off-Policy Control - Importance Sampling - I.S. SARSA, N-Step I.S. SARSA, Off-Policy MC Control

- ANN and Correlated Data - simplest possible example showing why memory replay is necessary

- Minimal TF Keras - fit sine wave
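
As a concrete taste of the off-policy control methods listed above, the following is a minimal sketch of tabular Q-learning. The environment is a hypothetical six-state corridor invented for this example (reward -1 per move, terminal state at the right end); names such as `step`, `q_learning` and `greedy_action` are illustrative assumptions and are not taken from the notebooks.

```python
import random

N_STATES = 6          # states 0..5; state 5 is terminal (toy corridor, assumed)
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right

def step(state, action_idx):
    """Deterministic move; reward -1 per step; episode ends at the right end."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    return next_state, -1.0, next_state == N_STATES - 1

def greedy_action(Q, s):
    """Greedy action for state s; ties are broken in favour of 'right'."""
    return 0 if Q[s][0] > Q[s][1] else 1

def q_learning(episodes=500, alpha=0.5, gamma=1.0, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]   # terminal row is never updated, stays 0
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Behaviour policy: epsilon-greedy with respect to the current Q
            a = rng.randrange(2) if rng.random() < epsilon else greedy_action(Q, s)
            s2, r, done = step(s, a)
            # Target policy: greedy, so bootstrap from the max over next actions
            target = r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q

Q = q_learning()
greedy = [greedy_action(Q, s) for s in range(N_STATES - 1)]
print("greedy policy (1 = right):", greedy)
print("Q(start, right):", round(Q[0][1], 3))
```

The update bootstraps from `max(Q[s2])` regardless of which action the ε-greedy behaviour policy takes next, which is what makes the method off-policy. Replacing the max with the value of the action actually taken next would give SARSA instead.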