This repository contains our submission for the Robotic Vision Scene Understanding Challenge 2023, as part of the Computer Vision 2 course at the University of Amsterdam. Vision-based scene understanding is a common task in robotics and computer vision in which visual systems attempt to understand their environment from both a semantic and a geometric perspective.

The core of our method is the creation of a 3D point cloud map of a simulated environment using fast global registration. The semantic map is then created with a pre-trained 3D object detection model that assigns bounding boxes to objects in the original 3D map. The results indicate that, while our method is very accurate at predicting the label of an object, it is not robust in other aspects that matter for the resulting map, such as the number of objects detected and their spatial quality. The pipeline is impaired by the components that estimate coordinate transformations and by limitations of the fast global registration method.
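To illustrate why errors in the estimated coordinate transformations affect the whole map, here is a minimal sketch of merging per-frame point clouds into one map by chaining pairwise rigid transforms. It is not the code used in this repository: the function names (`identity`, `compose`, `apply`, `build_map`) are hypothetical, and it assumes each pairwise transform is a 4x4 homogeneous matrix, such as a registration step might return, that maps points of frame `i + 1` into frame `i`.

```python
from typing import List, Sequence

Matrix = List[List[float]]
Point = Sequence[float]


def identity() -> Matrix:
    """Return a 4x4 identity transform."""
    return [[1.0 if r == c else 0.0 for c in range(4)] for r in range(4)]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Return the matrix product a @ b (apply b first, then a)."""
    return [
        [sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
        for r in range(4)
    ]


def apply(transform: Matrix, point: Point) -> List[float]:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = point
    return [
        transform[r][0] * x + transform[r][1] * y + transform[r][2] * z + transform[r][3]
        for r in range(3)
    ]


def build_map(frames: Sequence[Sequence[Point]], pairwise: Sequence[Matrix]) -> List[List[float]]:
    """Merge per-frame clouds into the coordinate frame of the first cloud.

    Assumption: pairwise[i] maps points of frame i + 1 into frame i.
    """
    to_map = identity()  # transform from the current frame to the map frame
    merged = [list(p) for p in frames[0]]
    for cloud, step in zip(frames[1:], pairwise):
        # Chain the new pairwise estimate onto the accumulated transform.
        to_map = compose(to_map, step)
        merged.extend(apply(to_map, p) for p in cloud)
    return merged
```

Because every frame is placed through the accumulated product, an error in one pairwise estimate carries over to all later frames in this kind of scheme.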

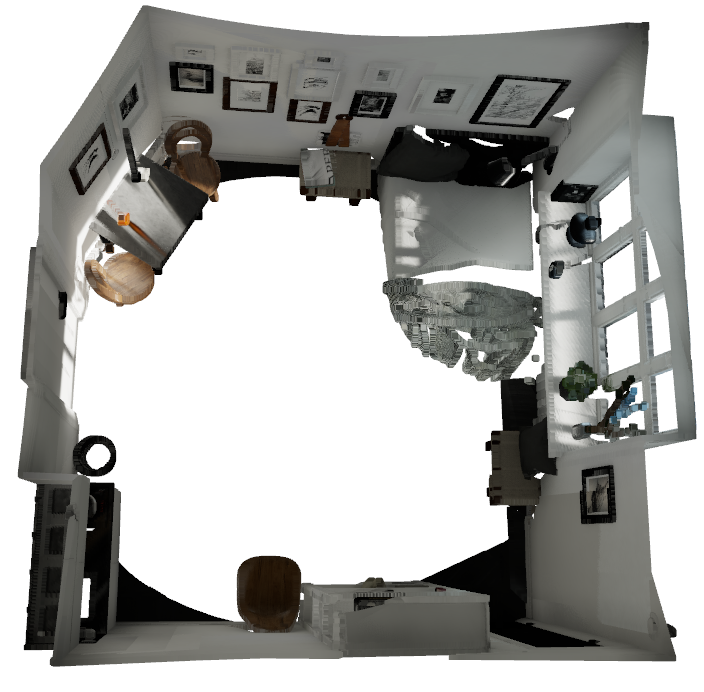

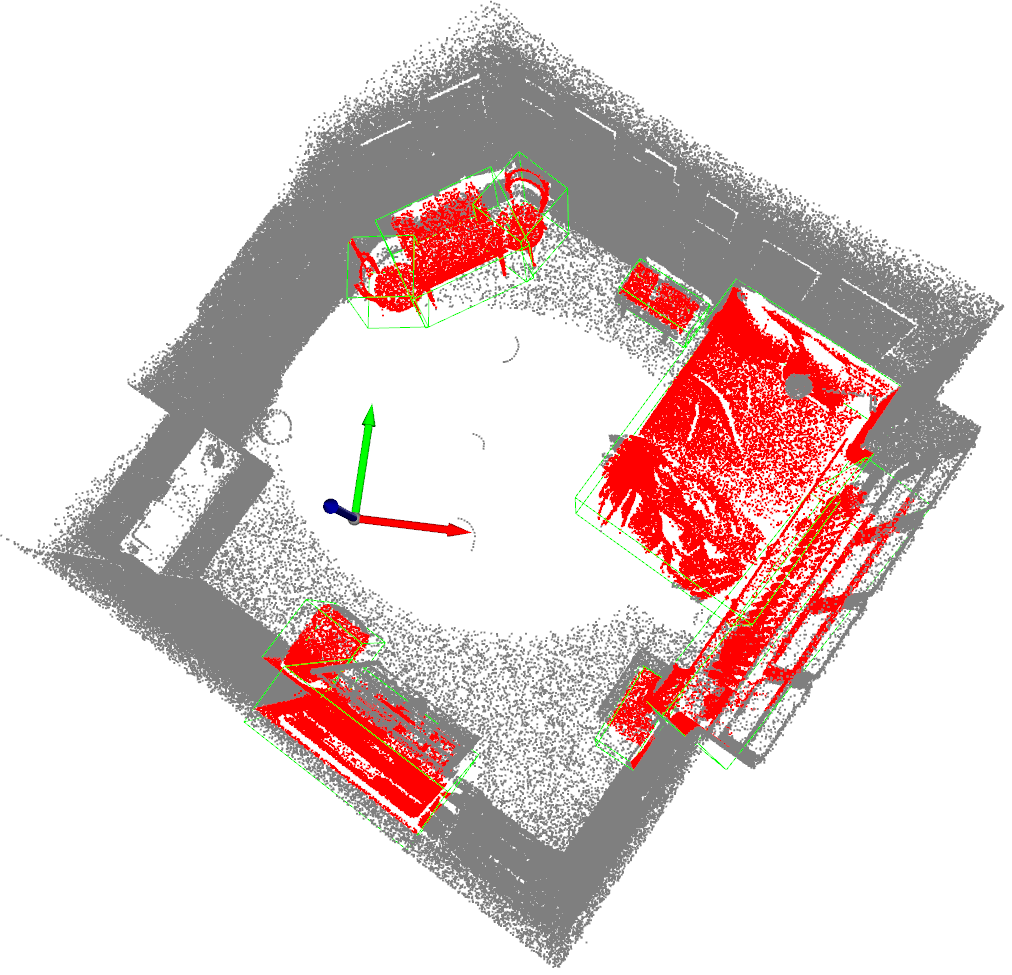

The figures show a 3D reconstruction of the "Miniroom" environment, as well as the 3D segmentation of the created point cloud.

- Benchbot software stack

- pytorch 1.12

- cudatoolkit 10.2

Step 0. Install MMEngine, MMCV and MMDetection using MIM.

pip install -U openmim

mim install mmengine

mim install 'mmcv>=2.0.0rc4'

mim install 'mmdet>=3.0.0'

Step 1. Install the MMDetection3D version included in this repository.

cd mmdetection3d

pip install -v -e .

Step 1. Load the simulation environment and the robot.

benchbot_run --robot carter_omni --env miniroom:1 --task semantic_slam:active:ground_truth -f

Step 2. Run the semantic SLAM pipeline.

- Note: the command below should be run from the 'src' directory.

benchbot_submit --native python3 solution/live_custom.py

The robot will now follow a set of waypoints and build a semantic map of the environment. The results of the pipeline will be saved in the 'result.json' file.
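As a rough illustration of how a detected bounding box could become an entry in a semantic map, the sketch below converts an axis-aligned 3D box into a centroid and an extent and serialises the result as JSON. The helper `box_to_entry` and the field names `label`, `centroid` and `extent` are assumptions made for this example. They are not taken from this repository, and the exact BenchBot results schema should be checked in the BenchBot documentation.

```python
import json
from typing import Dict, List, Sequence, Union

Entry = Dict[str, Union[str, List[float]]]


def box_to_entry(label: str, box_min: Sequence[float], box_max: Sequence[float]) -> Entry:
    """Describe an axis-aligned 3D box by its centroid and full extent.

    Field names are hypothetical and chosen for illustration only.
    """
    centroid = [(lo + hi) / 2.0 for lo, hi in zip(box_min, box_max)]
    extent = [float(hi - lo) for lo, hi in zip(box_min, box_max)]
    return {"label": label, "centroid": centroid, "extent": extent}


if __name__ == "__main__":
    # Example box given by its minimum and maximum corners (map coordinates).
    entry = box_to_entry("chair", (0.0, 0.0, 0.0), (2.0, 4.0, 6.0))
    print(json.dumps({"objects": [entry]}, indent=2))
```

Storing a centroid and an extent keeps each object independent of the detector's own box parameterisation, at the cost of dropping any box rotation.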

Step 3. Evaluate the results.

benchbot_eval --method omq result.json