Cloned from: https://github.com/bearpaw/pytorch-classification/blob/master/TRAINING.md (CIFAR, ImageNet, and training curves)

- Install PyTorch
- Clone recursively:

```shell
git clone --recursive git@github.com:lcaikk1314/pytorch-classification.git
```

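Directory layout:

- `models`: model definitions
- `models_init`: initial (pretrained) models, obtained from other GitHub repositories
- `models_onnx`: output location for the exported ONNX models

Run the commands below according to this directory structure.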

Training (starting from the initial model in `models_init`):

```shell
python3 imagenet.py \
    --arch MobileNetV3 \
    --data ../imagenet/ \
    --epochs 30 --schedule 11 21 --lr 0.0005 --gpu-id 0,1 \
    --model_path './models_init/mbv3_large.pth.tar' \
    --checkpoint checkpoints_MobileNetV3_Large/imagenet/MobileNetV3
```
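The training command uses `--lr 0.0005` with `--schedule 11 21`. This implies a step schedule in which the learning rate drops at epochs 11 and 21. Below is a minimal sketch of that schedule. It assumes the script multiplies the learning rate by a decay factor at each listed epoch. The factor `gamma=0.1` and the function name `lr_at_epoch` are assumptions for illustration and are not taken from `imagenet.py`.

```python
# Minimal sketch of a step learning-rate schedule.
# ASSUMPTION: the LR is multiplied by `gamma` (assumed 0.1) once each
# milestone epoch from --schedule is reached. `lr_at_epoch` is hypothetical.

def lr_at_epoch(epoch, base_lr, schedule, gamma=0.1):
    """Return the learning rate used during `epoch` (0-indexed)."""
    lr = base_lr
    for milestone in schedule:
        if epoch >= milestone:
            lr *= gamma  # one decay step per milestone already passed
    return lr


if __name__ == "__main__":
    # Values from the training command: --epochs 30 --schedule 11 21 --lr 0.0005
    for epoch in (0, 10, 11, 20, 21, 29):
        print(f"epoch {epoch:2d}: lr = {lr_at_epoch(epoch, 0.0005, [11, 21]):.2e}")
```

With 30 epochs, this gives three phases of roughly 11, 10 and 9 epochs, each using a learning rate ten times smaller than the one before.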

Evaluation:

```shell
python3 imagenet.py \
    --arch MobileNetV3 \
    --data ../imagenet/ \
    --gpu-id 0,1,2,3 \
    --model_path './models_init/mbv3_large.pth.tar' \
    --evaluate
```
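The tables report Top-1 and Top-5 error. A sample counts as a Top-k error when its true label is not among the k highest-scoring classes. The sketch below shows this definition in plain Python. The function name `topk_error` and the toy scores are hypothetical and are not part of this repository. The evaluation script presumably computes the same quantity on GPU tensors.

```python
# Minimal sketch of Top-k error as reported in the tables (pure Python).
# `topk_error` and the toy data below are hypothetical.

def topk_error(scores, targets, k):
    """Percentage of samples whose true label is NOT among the k highest scores."""
    wrong = 0
    for row, target in zip(scores, targets):
        # Class indices sorted by descending score, truncated to the top k
        topk = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if target not in topk:
            wrong += 1
    return 100.0 * wrong / len(targets)


if __name__ == "__main__":
    scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5], [0.6, 0.3, 0.1]]
    targets = [1, 1, 0, 2]
    print(f"top-1 error: {topk_error(scores, targets, 1):.1f}%")
    print(f"top-2 error: {topk_error(scores, targets, 2):.1f}%")
```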

Export to ONNX:

```shell
python3 convertonnx.py \
    --arch MobileNetV3 \
    --model_path './models_init/mbv3_large.pth.tar' \
    --dest_path './models_onnx/mbv3_large.onnx'
```

| Model | Params (M) | MFLOPs | Model size (MB) | Top-1 error (%) | Top-5 error (%) | 1-thread time (ms) | 2-thread time (ms) | VSS (KB) | RSS (KB) |
|---|---|---|---|---|---|---|---|---|---|
| mobileV3-large | 3.96 | 271.036 | 30.3 | 29.212 | 10.59 | 89.649 | 59.5683 | 61916 | 49112 |
| mobileV3-small | 2.51 | 65.558 | 19.2 | 35.074 | 14.534 | 29.9176 | 18.8802 | 42972 | 33668 |
| efficientnet-b0 | 5.289 | 401.679 | 20.4 | 23.57 | 6.952 | 145.293 | 134.429 | 103900 | 82436 |
| efficientnet-b1 | 7.794 | 591.948 | 30.1 | 21.628 | 5.964 | 236.81 | 202.56 | 122844 | 103528 |
| efficientnet-b2 | 9.110 | 682.357 | 35.1 | 23.04 | 6.81 | 296.041 | 222.683 | 137692 | 114812 |
| efficientnet-lite0 | 4.652 | 398.727 | 17.9 | 24.606 | 7.500 | 109.905 | 63.9615 | 69596 | 52736 |
| regnet200m | 4.151 | 255.463 | 15.9 | 29.91 | 10.466 | 63.1528 | 37.8787 | 69084 | 45972 |
| regnet400m | 5.74 | 513.125 | 22.0 | 26.21 | 8.282 | 141.344 | 76.282 | 83932 | 65632 |
| regnet600m | 6.633 | 595.577 | 25.4 | 25.27 | 7.648 |  |  |  |  |
| ghostnetv1 | 5.183 | 148.788 | 19.9 | 26.02 | 8.538 | 63.1484 | 36.6433 | 72292 | 55764 |
| Shufflenet_V2_1.0 | 2.28 | 148.809 | 8.78 | 30.64 | 11.684 | 45.672 | 29.876 | 37340 | 27404 |


Classification on CIFAR-10/100 and ImageNet with PyTorch 1.0.

- Unified interface for different network architectures

- Multi-GPU support

- Training progress bar with rich info

- Training log and training curve visualization code (see `./utils/logger.py`)
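The idea behind the training log is simple. Write one row of metrics per epoch, then read the columns back to plot the training curves. The sketch below is a hypothetical minimal logger that uses only the standard library. The class `TsvLogger` and its methods are illustrative assumptions and are **not** the API of `./utils/logger.py`.

```python
# Hypothetical minimal training logger (NOT the API of ./utils/logger.py).
# It appends one tab-separated row per epoch and reads the columns back,
# e.g. for plotting training curves with matplotlib.
import csv
import os
import tempfile


class TsvLogger:
    def __init__(self, path, names):
        self.path = path
        self.names = list(names)
        # Start a fresh log file with a header row of column names
        with open(path, "w", newline="") as f:
            csv.writer(f, delimiter="\t").writerow(self.names)

    def append(self, values):
        """Append one row (e.g. one epoch) of metric values."""
        if len(values) != len(self.names):
            raise ValueError("expected one value per column")
        with open(self.path, "a", newline="") as f:
            csv.writer(f, delimiter="\t").writerow(values)

    def read(self):
        """Return {column name: list of floats}, one list per curve."""
        columns = {name: [] for name in self.names}
        with open(self.path, newline="") as f:
            for row in csv.DictReader(f, delimiter="\t"):
                for name in self.names:
                    columns[name].append(float(row[name]))
        return columns


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "log.txt")
    logger = TsvLogger(path, ["Learning Rate", "Train Loss", "Valid Acc."])
    logger.append([0.1, 2.3, 35.0])
    logger.append([0.1, 1.7, 52.5])
    print(logger.read())
```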

- Install PyTorch
- Clone recursively:

```shell
git clone --recursive https://github.com/bearpaw/pytorch-classification.git
```

Please see the training recipes (TRAINING.md) for how to train the models.

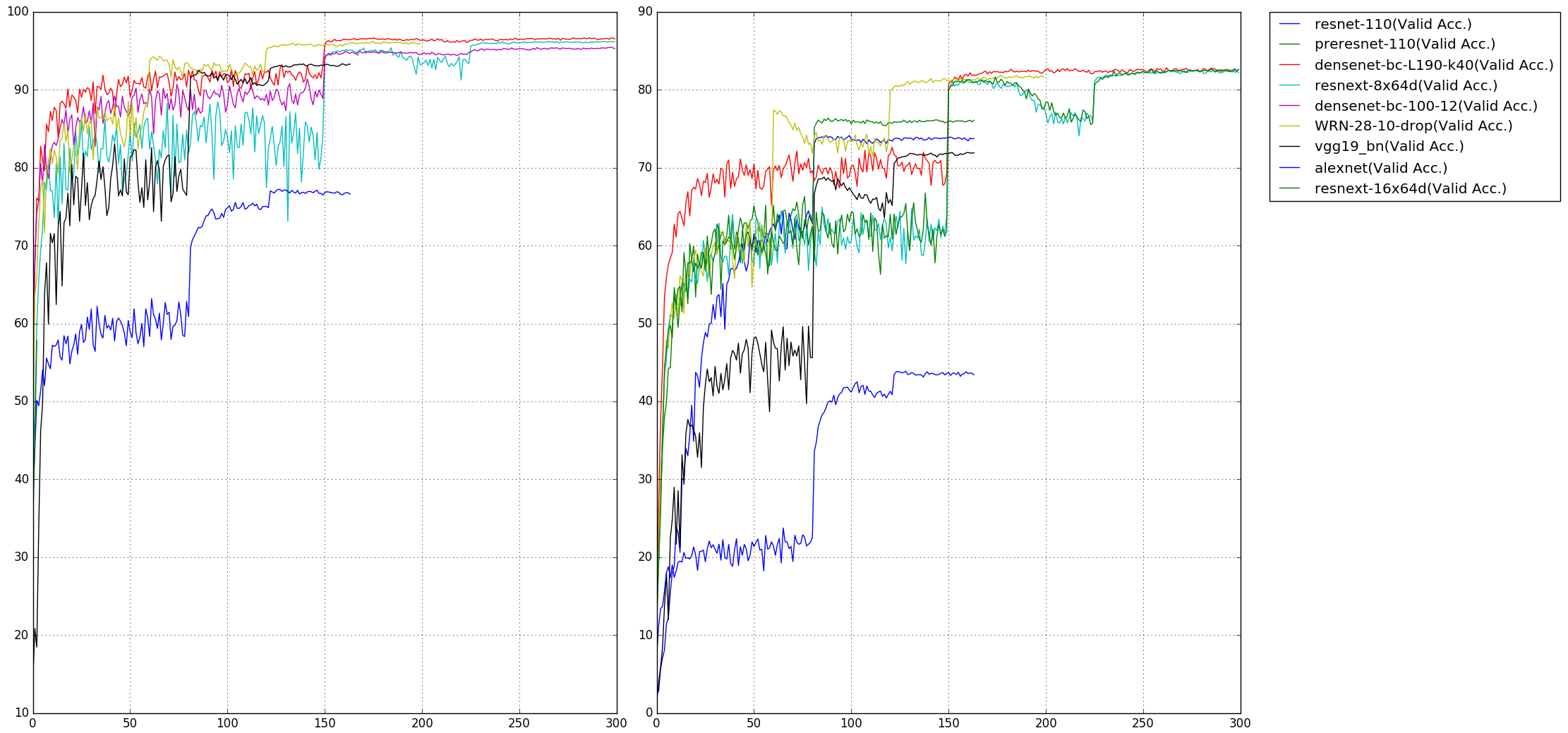

Top-1 error rates on the CIFAR-10/100 benchmarks are reported. You may get different results when training with a different random seed. Note that the numbers of parameters are computed on the CIFAR-10 dataset.

| Model | Params (M) | CIFAR-10 error (%) | CIFAR-100 error (%) |
|---|---|---|---|
| alexnet | 2.47 | 22.78 | 56.13 |
| vgg19_bn | 20.04 | 6.66 | 28.05 |
| ResNet-110 | 1.70 | 6.11 | 28.86 |
| PreResNet-110 | 1.70 | 4.94 | 23.65 |
| WRN-28-10 (drop 0.3) | 36.48 | 3.79 | 18.14 |
| ResNeXt-29, 8x64 | 34.43 | 3.69 | 17.38 |
| ResNeXt-29, 16x64 | 68.16 | 3.53 | 17.30 |
| DenseNet-BC (L=100, k=12) | 0.77 | 4.54 | 22.88 |
| DenseNet-BC (L=190, k=40) | 25.62 | 3.32 | 17.17 |
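The parameter counts are computed on CIFAR-10 because they differ slightly between the two datasets. Only the final fully connected classifier depends on the number of classes. The sketch below shows the arithmetic. It assumes a 64-dimensional final feature vector, as in the CIFAR-style ResNet-110 (stages with 16, 32 and 64 channels). The helper `linear_params` is hypothetical.

```python
# Why the parameter count depends on the dataset: only the final fully
# connected layer changes with the number of classes.
# ASSUMPTION: 64-dimensional final features (CIFAR-style ResNet-110).
# `linear_params` is a hypothetical helper.

def linear_params(in_features, num_classes, bias=True):
    """Number of weights (plus biases) in a fully connected layer."""
    return in_features * num_classes + (num_classes if bias else 0)


if __name__ == "__main__":
    feat_dim = 64
    p10 = linear_params(feat_dim, 10)    # CIFAR-10 classifier head
    p100 = linear_params(feat_dim, 100)  # CIFAR-100 classifier head
    print(p10, p100, p100 - p10)
```

Under this assumption, the CIFAR-100 head adds 5,850 parameters. That is about 0.3% of ResNet-110's 1.70M, so a single count per model is a reasonable summary.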

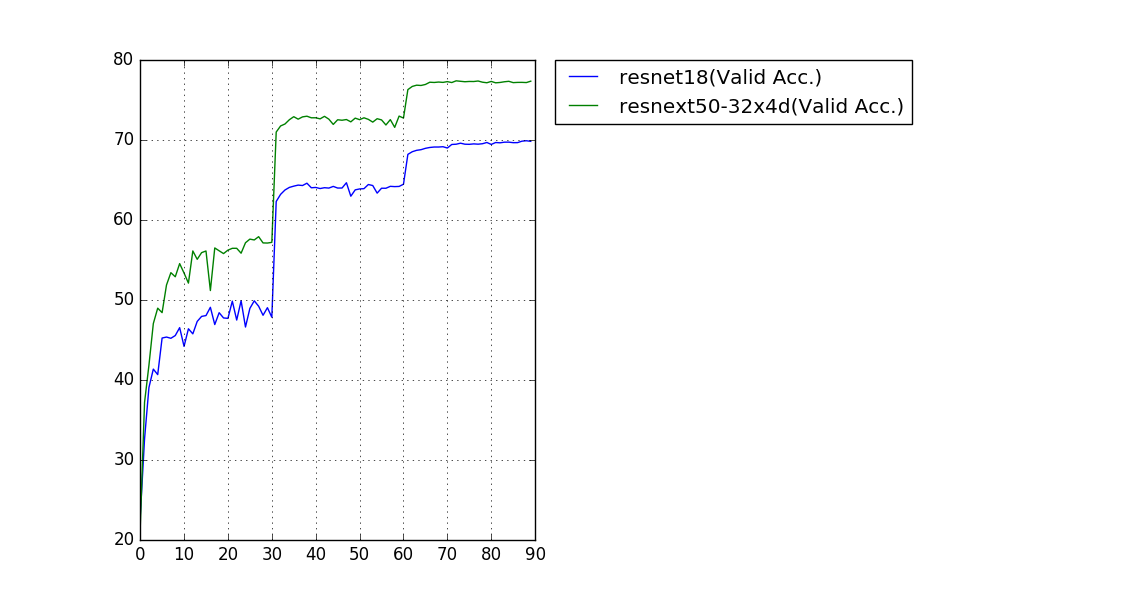

Single-crop (224x224) validation error rate is reported.

| Model | Params (M) | Top-1 error (%) | Top-5 error (%) |
|---|---|---|---|
| ResNet-18 | 11.69 | 30.09 | 10.78 |
| ResNeXt-50 (32x4d) | 25.03 | 22.6 | 6.29 |
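"Single-crop (224x224)" means that each validation image is evaluated once, on one central 224x224 crop. The sketch below computes that crop geometry. It assumes the common ImageNet protocol: resize the shorter side to 256 pixels while preserving the aspect ratio, then take the central 224x224 crop. The function names are hypothetical, and the exact resize size used by `imagenet.py` is an assumption.

```python
# Sketch of single-crop (224x224) evaluation geometry.
# ASSUMPTION: shorter side resized to 256 px (aspect ratio kept), then the
# central 224x224 region is cropped. Function names are hypothetical.

def resize_shorter_side(width, height, target=256):
    """New (width, height) after scaling the shorter side to `target`."""
    scale = target / min(width, height)
    return round(width * scale), round(height * scale)


def center_crop_box(width, height, size=224):
    """(left, top, right, bottom) of the central size x size crop."""
    left = (width - size) // 2
    top = (height - size) // 2
    return left, top, left + size, top + size


if __name__ == "__main__":
    w, h = resize_shorter_side(500, 375)  # a typical 4:3 image
    print((w, h), center_crop_box(w, h))
```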

Our trained models and training logs can be downloaded from OneDrive.

Since the images in the CIFAR datasets are 32x32, popular network structures designed for ImageNet need some modifications to adapt to this input size. The modified models are in the package `models.cifar`:

- AlexNet

- VGG (Imported from pytorch-cifar)

- ResNet

- Pre-act-ResNet

- ResNeXt (Imported from ResNeXt.pytorch)

- Wide Residual Networks (Imported from WideResNet-pytorch)

- DenseNet

For ImageNet:

- All models in `torchvision.models` (alexnet, vgg, resnet, densenet, inception_v3, squeezenet)
- ResNeXt
- Wide Residual Networks
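The CIFAR variants need modification because an ImageNet-style network shrinks a 32x32 input to almost nothing. The sketch below tracks the spatial size through a conv/pool layer using out = floor((in + 2p - k) / s) + 1. It assumes two stem designs. The ResNet-style ImageNet stem is a 7x7 stride-2 conv plus a 3x3 stride-2 max-pool, followed by three stride-2 stages. The CIFAR stem is a single 3x3 stride-1 conv, followed by two stride-2 stages. All function names are hypothetical.

```python
# Spatial size through an ImageNet-style vs. a CIFAR-style network.
# ASSUMPTION: ResNet-like layouts as described in the lead-in; function
# names are hypothetical.

def out_size(size, kernel, stride, padding):
    """Output side length of a conv/pool layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def imagenet_path(size):
    size = out_size(size, 7, 2, 3)  # 7x7 conv, stride 2
    size = out_size(size, 3, 2, 1)  # 3x3 max-pool, stride 2
    sizes = [size]
    for _ in range(3):              # three downsampling stages (3x3, stride 2)
        size = out_size(size, 3, 2, 1)
        sizes.append(size)
    return sizes


def cifar_path(size):
    size = out_size(size, 3, 1, 1)  # 3x3 conv, stride 1 (no early downsampling)
    sizes = [size]
    for _ in range(2):              # two downsampling stages (3x3, stride 2)
        size = out_size(size, 3, 2, 1)
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    print("ImageNet stem, 224 input:", imagenet_path(224))
    print("ImageNet stem, 32 input: ", imagenet_path(32))
    print("CIFAR stem, 32 input:    ", cifar_path(32))
```

On a 224x224 input, the ImageNet layout ends at a 7x7 feature map. On a 32x32 input, the same layout ends at 1x1. The CIFAR layout keeps 32x32 after the stem and ends at 8x8.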

Feel free to create a pull request if you find any bugs or you want to contribute (e.g., more datasets and more network structures).