StoreManager is a shop rental and sales platform for people who want to start a business. The app ranks commercial real estate for sale or rent according to area, price, population, and other factors. Users can also narrow the results by specific location, floor area in square meters, and price range.

The app organizes data crawled from other websites and platforms, then analyzes each listing's basic info (area, price, etc.) and surroundings (MRT stations, population, etc.) to help users pick the best place to start their business.

After you hit the search button, the app goes to a page that shows a list of ranked shops. Each score is estimated from the population of each MRT station, transport station, and region, together with the shop's surrounding environment, such as schools, parks, parking lots, and night markets.
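The search step described above, filtering by the user's criteria and then sorting by score, can be sketched roughly as follows. The `Listing` fields, the `search` function, and its parameter names are all hypothetical and chosen only for illustration; the app's actual data model may differ.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Listing:
    # Hypothetical fields; the real schema is not documented here.
    name: str
    district: str
    area_m2: float
    price: float
    score: float


def search(
    listings: List[Listing],
    district: Optional[str] = None,
    min_area_m2: float = 0.0,
    min_price: float = 0.0,
    max_price: float = float("inf"),
) -> List[Listing]:
    """Keep listings that match the filters, best score first."""
    matches = [
        shop
        for shop in listings
        if (district is None or shop.district == district)
        and shop.area_m2 >= min_area_m2
        and min_price <= shop.price <= max_price
    ]
    # Highest score first, matching the ranked list shown in the app.
    return sorted(matches, key=lambda shop: shop.score, reverse=True)
```

Calling `search(listings)` with no filters simply returns every listing ordered by score.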

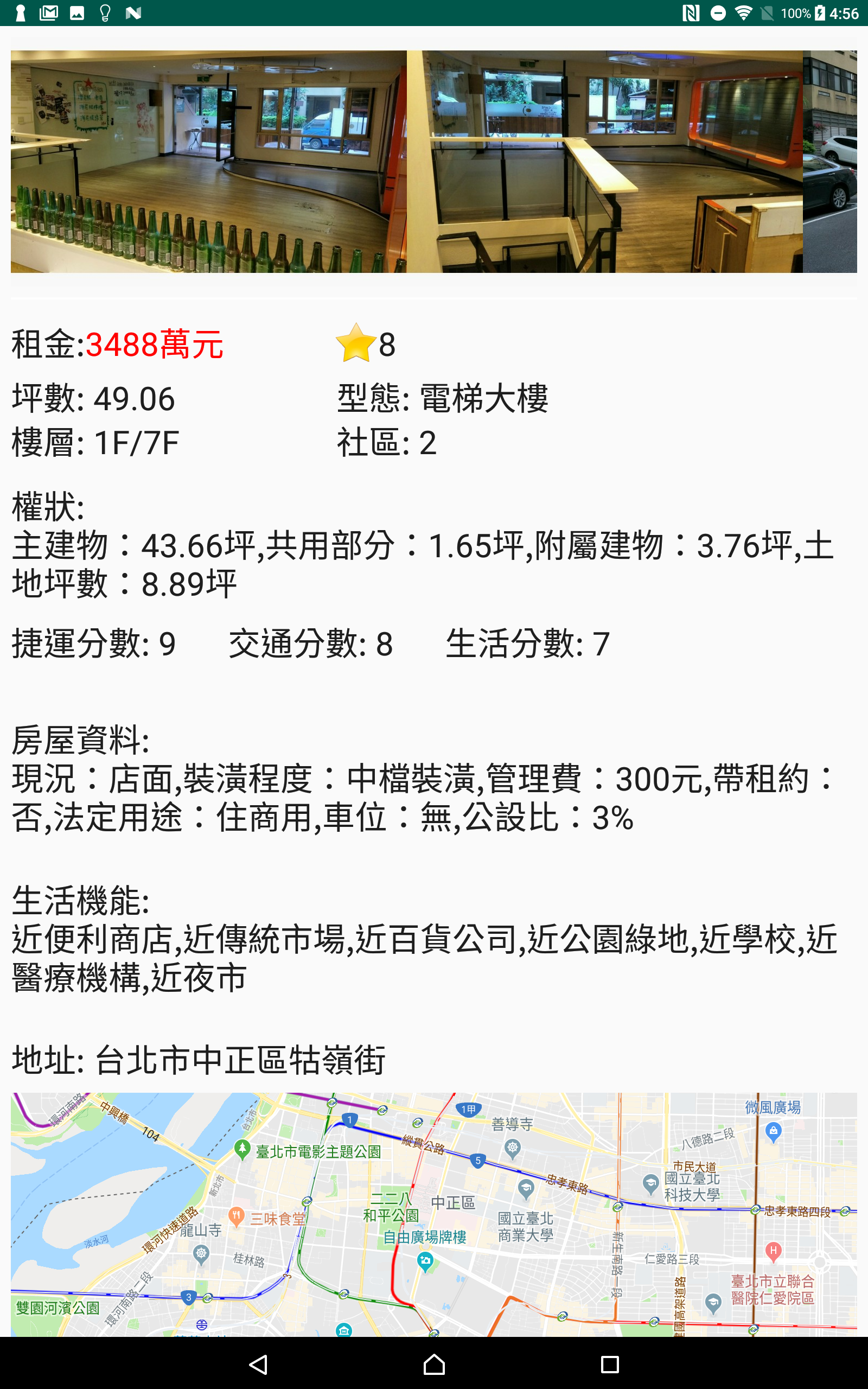

The picture above shows a shop's detail page, which opens after you choose one of the entries in the list. It also shows the three component scores; the total score shown in the previous list is their weighted average. The photos at the top of the page can be scrolled through, and their number depends on how many pictures we collected. The map marking the location can be browsed on the mini-map or in Google Maps.
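As a minimal sketch of how a total score can be derived from three component scores by weighted average: the component names and the weight values below are assumptions made for illustration, not the weights StoreManager actually uses.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ComponentScores:
    # Hypothetical names for the three component scores.
    population: float
    transport: float
    surroundings: float


# Hypothetical weights; the real values are not documented here.
WEIGHTS: Dict[str, float] = {
    "population": 0.4,
    "transport": 0.3,
    "surroundings": 0.3,
}


def total_score(scores: ComponentScores, weights: Dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of the component scores."""
    weight_sum = sum(weights.values())
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = sum(getattr(scores, name) * w for name, w in weights.items())
    # Dividing by the weight sum keeps the result on the same scale
    # as the inputs even if the weights do not add up to 1.
    return weighted / weight_sum
```

With these example weights, component scores of 80, 60, and 70 give a total of about 71.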

StoreManager collects the data and organizes it as shown in the following pictures.

This data corresponds to the Activity that displays the shop info.

This data corresponds to the same Activity mentioned above, for the shop's mini-map that links to Google Maps.

And this corresponds to the Activity that shows the search results.

All of this is done by Python scripts. The crawler visits the websites every 24 hours and then updates the data on the server. At first, the data was updated manually every 3 to 5 days. However, we realized that this was not fast enough to get first-hand info, and the data was growing larger every day. As a result, the number of scripts grew and they became hard to run manually, so we designed a multi-processing, self-updating program. Now, simply run init.py. The script runs every subroutine and updates the data every day.
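One way such a runner could be structured is sketched below: each script is launched in its own Python process, all of them run concurrently, and the cycle repeats once a day. This is not the actual content of init.py. The script names in `SCRIPTS` and the helper functions are hypothetical.

```python
import subprocess
import sys
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

# Hypothetical script names; the real subroutines are not listed here.
SCRIPTS = ["crawl_listings.py", "crawl_surroundings.py"]

ONE_DAY_SECONDS = 24 * 60 * 60


def run_script(path: str) -> int:
    """Run one script in a separate Python process and return its exit code."""
    return subprocess.run([sys.executable, path]).returncode


def run_all(scripts: List[str]) -> Dict[str, int]:
    """Run all scripts concurrently, one process each, and collect exit codes."""
    if not scripts:
        return {}
    # Threads only wait on the child processes; the work itself
    # happens in the separate Python interpreters.
    with ThreadPoolExecutor(max_workers=len(scripts)) as pool:
        codes = list(pool.map(run_script, scripts))
    return dict(zip(scripts, codes))


def run_daily(scripts: List[str], interval_seconds: int = ONE_DAY_SECONDS) -> None:
    """Run every script, report failures, then sleep until the next cycle."""
    while True:
        results = run_all(scripts)
        failed = [name for name, code in results.items() if code != 0]
        if failed:
            print("Scripts that failed this cycle:", ", ".join(failed))
        time.sleep(interval_seconds)
```

Calling `run_daily(SCRIPTS)` would start the loop. A system scheduler such as cron could replace the sleep loop if the host provides one.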

Available under GPL License