An example project for Surround using face recognition models.

This project demonstrates how Surround can be used to build a facial recognition pipeline.

For usage instructions, run python3 -m facerecognition -h.

The pipeline can be run in three different modes: server, batch, and worker:

- server mode:
  - Usage: python3 -m facerecognition server [-w]
  - Description: Runs an HTTP server that provides REST endpoints for person/face registration and recognition. Additionally, the -w/--webcam flag can be used to run a TCP server alongside the HTTP server that will provide frames and face detection from a local webcam. NOTE: This currently only works on Linux. See the Known Issues section.
- batch mode:
  - Usage: python3 -m facerecognition batch
  - Description: Processes a directory of image files and produces an encoding for each one.
- worker mode:
  - Usage: celery -A facerecognition.worker worker
  - Description: Runs a Celery worker that listens to the configured broker for face encoding jobs.

For detailed usage, supply -h to each subcommand.

To view a list of endpoints, visit the /info endpoint. A summarised list is as follows:

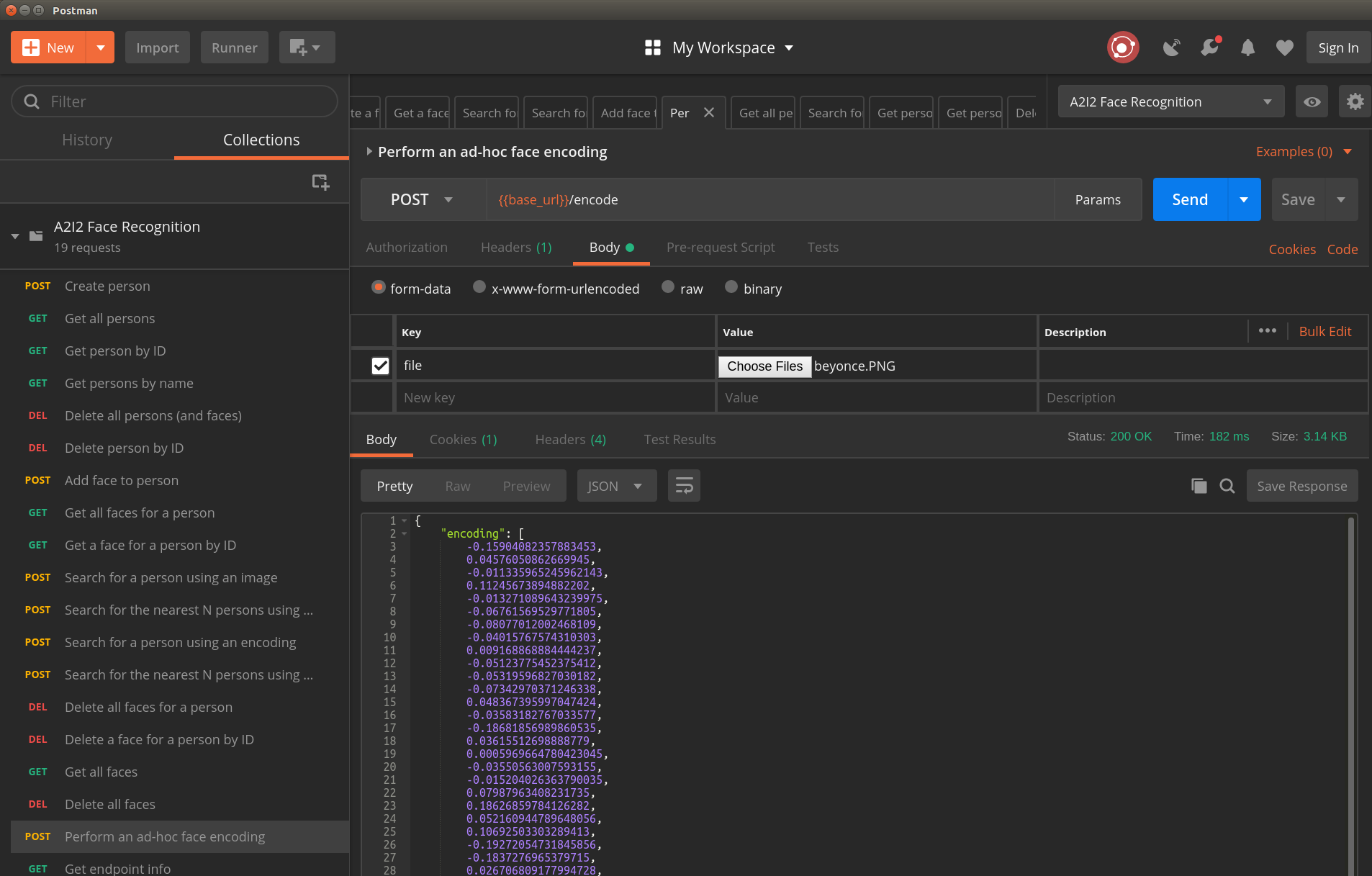

- POST /persons: Create a person
- GET /persons: Get all persons
- GET /persons?name=name: Search for a person by name
- GET /persons/:id: Get a single person by ID
- GET /persons/:id/faces: Get all face encodings for a person
- POST /persons/:id/faces: Add a face to a person
- GET /persons/:id/faces/:id: Get a single face for a person by ID
- DELETE /persons/:id/faces/:id: Delete a face for a person
- DELETE /persons/:id/faces: Delete all faces for a person
- DELETE /persons: Delete all persons
- DELETE /persons/:id: Delete a person by ID
- POST /persons/photo-search: Search for a person using a photo
- POST /persons/photo-search?nearest=N: Get a list of the nearest people (in order of confidence) using a photo, up to a maximum of N people
- POST /persons/encoding-search: Search for a person using an encoding
- POST /persons/encoding-search?nearest=N: Get a list of the nearest people (in order of confidence) using an encoding, up to a maximum of N people
- GET /faces: Get all face encodings
- DELETE /faces: Delete all face encodings
- POST /encode: Perform a once-off encoding (don't save anything)
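As a rough illustration of how a client might call the read-only endpoints, the following sketch builds endpoint URLs and fetches a response with the Python standard library. The base URL `http://localhost:8080` is an assumption (the port is not stated here), the helper names `endpoint_url` and `get_json` are hypothetical, and the sketch assumes responses are JSON; check `/info` on a running server for the actual details.

```python
import json
import urllib.parse
import urllib.request

# Assumed address of the HTTP server; adjust to match your deployment.
BASE_URL = "http://localhost:8080"


def endpoint_url(path, **query):
    """Join BASE_URL with an endpoint path and optional query parameters."""
    url = BASE_URL.rstrip("/") + "/" + path.lstrip("/")
    if query:
        url += "?" + urllib.parse.urlencode(query)
    return url


def get_json(path, **query):
    """Send a GET request and decode the body as JSON (assumed response format)."""
    with urllib.request.urlopen(endpoint_url(path, **query)) as response:
        return json.loads(response.read().decode("utf-8"))


# Example calls (these need a running server, so they are left commented out):
# print(get_json("/info"))
# print(get_json("/persons", name="Beyonce"))
```

Keeping URL construction separate from the network call makes it easy to check the query strings without a server running.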

An easy way to test the endpoints is to import the Postman collection and environment from the postman folder.

Batch mode can be used to encode a directory of images and produce an encoding for each one. To test, run the following:

python3 -m facerecognition -i data/input -o data/output -c facerecognition/config.yaml

Then, check the data/output directory for encoding output.

Worker mode can be used to encode large volumes of images. This mode requires a RabbitMQ and Redis server for job/result management. The easiest way to test this is to run via docker-compose:

docker-compose -f docker-compose-distributed.yml up

This will run a worker alongside all required backing services, and will share the data/input directory into the Docker container to the target path /var/lib/face-recognition/input.

You can visit the Flower dashboard at localhost:5555 to view worker/job status, and send jobs via cURL:

curl -X POST -d '{"args":["/var/lib/face-recognition/input/beyonce.PNG"]}' http://localhost:5555/api/task/async-apply/face-recognition.worker.encode
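The same job submission can be sketched in Python using only the standard library. The URL, task name, and payload mirror the cURL command above; the helper names `build_encode_request` and `submit_encode_job` are hypothetical, and the explicit `Content-Type` header is an addition that the cURL call does not send.

```python
import json
import urllib.request

# Values taken from the cURL command above.
FLOWER_URL = "http://localhost:5555"
TASK_NAME = "face-recognition.worker.encode"


def build_encode_request(image_path):
    """Build a POST request asking Flower to queue an encode job for image_path."""
    body = json.dumps({"args": [image_path]}).encode("utf-8")
    return urllib.request.Request(
        f"{FLOWER_URL}/api/task/async-apply/{TASK_NAME}",
        data=body,
        # Not part of the original cURL call; added to label the body as JSON.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit_encode_job(image_path):
    """Send the request and return Flower's reply decoded from JSON."""
    with urllib.request.urlopen(build_encode_request(image_path)) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call (needs the docker-compose stack to be running):
# print(submit_encode_job("/var/lib/face-recognition/input/beyonce.PNG"))
```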

You can also interact with the workers from the Python repl as follows:

>>> from facerecognition.worker import encode

>>> encode.delay("/var/lib/face-recognition/input/beyonce.PNG")

<AsyncResult: bbe00661-d417-4596-b122-30e23df8beff>

>>>
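To go one step further than queuing a job, a script could block until the job finishes. This is a minimal sketch that relies on Celery's standard `AsyncResult.get(timeout=...)` call; the helper name `wait_for_encoding` is hypothetical, and it assumes the result backend is reachable from where the script runs and that the task returns something useful, which this project does not guarantee.

```python
def wait_for_encoding(async_result, timeout=30.0):
    """Block until the job completes and return whatever the task returned.

    `async_result` is the object returned by `encode.delay(...)`.
    Celery raises an exception if the timeout (in seconds) expires first.
    """
    return async_result.get(timeout=timeout)


# Example usage (needs the worker, broker, and result backend to be running):
# from facerecognition.worker import encode
# result = encode.delay("/var/lib/face-recognition/input/beyonce.PNG")
# print(wait_for_encoding(result))
```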

This project is available as a Docker image, which can be run via the following command (as above, supply server or batch to choose the operating mode):

docker run a2i2/face-recognition

Running in server mode requires a PostgreSQL server to store registered people/faces. The easiest way to do this is to run via docker-compose:

docker-compose up

- The webcam feed only works on Linux because extra steps are required to share a webcam with Docker on OSX/Windows hosts.

- Worker mode currently does nothing with the results; it is there mostly for demonstration purposes.