- What

- Why

- How

- Data Model Choice

- Addressing Other Scenarios

- Data Dictionary

- How to use this project

- Log Analysis Scenario

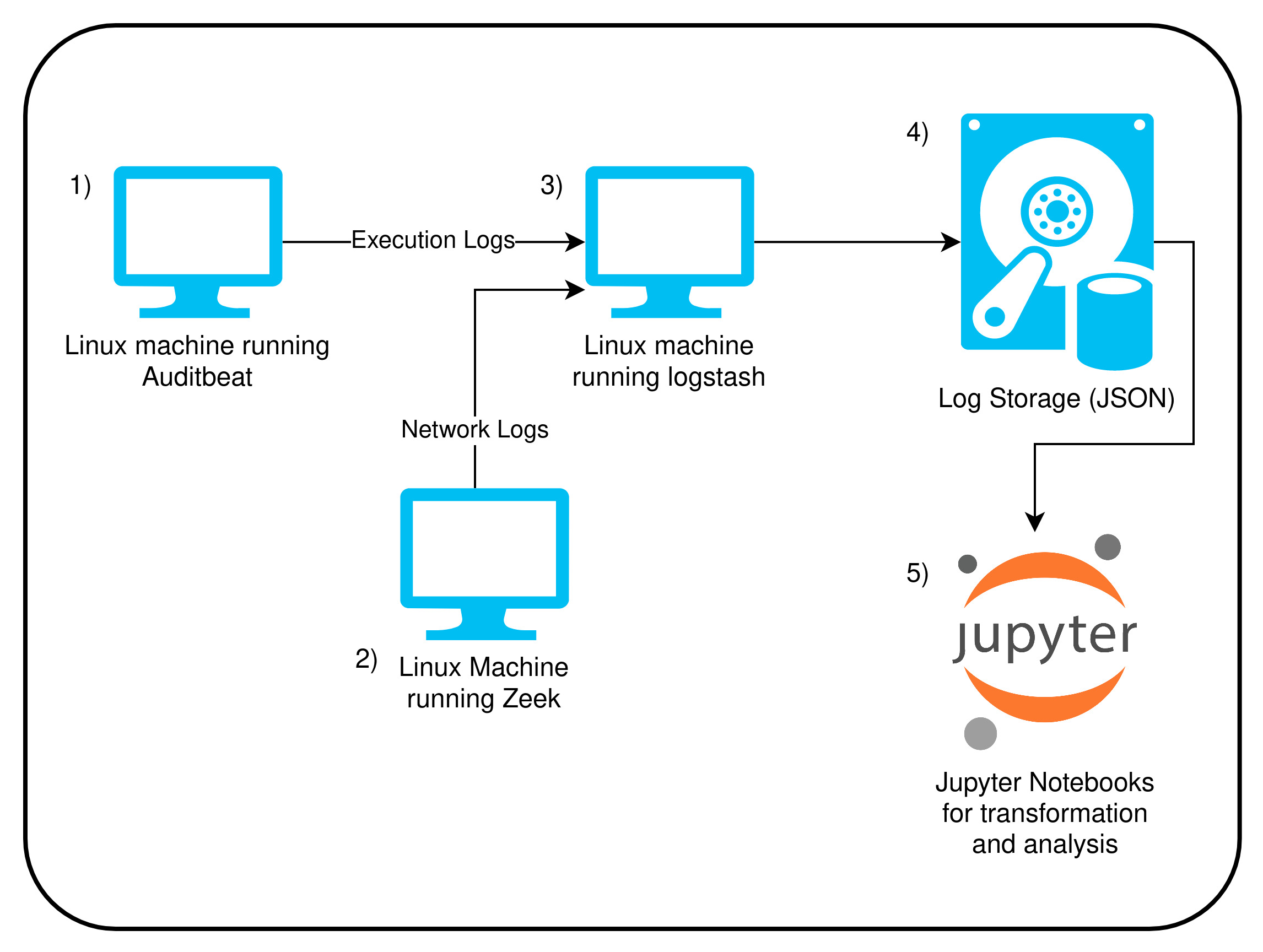

This project collects data from 2 different sources, network logs and host logs, and uses them to identify anomalous behavior in the network. These 2 sources were chosen because they are among the most straightforward ways to get good intelligence with relatively low effort.

A wide range of tools can be used to gather this information. However, since the goal was a scenario that relies only on free and/or open source solutions, the final setup uses tools from the Elastic and Zeek projects, as follows:

- Zeek: Network log aggregation and ingestion.

- Logstash: A host that listens on the network for logs sent from any configured source and writes them into a more structured folder layout, which helps with data lake formation and log centralization.

- Filebeat: Gathers logs from the local Zeek machine and sends them to the Logstash host.

- Auditbeat: Gathers host logs (network activity, executed commands, and so on) and sends them to the Logstash host.

- Network Log Generation: To generate the fake network traffic, I've built a tool in Bash that takes a list of URLs and sends a cURL request to each one. The URL list I've used here is the Majestic Million.
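The traffic generator itself is a Bash tool built on cURL and is not reproduced here. As a rough illustration of the same idea, the sketch below is a minimal Python equivalent, not the project's actual script. It assumes the URL list is a CSV with a `Domain` column (as the Majestic Million download has); the names `iter_urls`, `fetch`, and `generate_traffic` are hypothetical.

```python
import csv
import urllib.request
from typing import Callable, Dict, Iterable, Iterator, Union


def iter_urls(csv_lines: Iterable[str], limit: int) -> Iterator[str]:
    """Yield up to `limit` URLs built from the CSV's Domain column."""
    reader = csv.DictReader(csv_lines)
    for count, row in enumerate(reader):
        if count >= limit:
            break
        # Assumption: the list exposes a "Domain" column.
        yield f"http://{row['Domain']}"


def fetch(url: str, timeout: float = 5.0) -> int:
    """Send one GET request and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def generate_traffic(
    urls: Iterable[str], send: Callable[[str], int] = fetch
) -> Dict[str, Union[int, str]]:
    """Request every URL once; network failures are recorded, not raised."""
    results: Dict[str, Union[int, str]] = {}
    for url in urls:
        try:
            results[url] = send(url)
        except OSError as error:  # URLError and socket timeouts are OSError subclasses
            results[url] = f"failed: {error}"
    return results
```

A typical call would open the downloaded list (for example `open("majestic_million.csv", newline="")`, a hypothetical file name) and pass it to `generate_traffic(iter_urls(handle, limit=100))`. The `send` parameter exists only so the loop can be exercised without touching the network.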

In security monitoring, the tools commonly used to aggregate and analyze data are the so-called SIEMs. These tools work well for their purpose, but they get more expensive the more logs you have. A cheaper solution with similar analysis potential is a data lake architecture with Spark at its core. With this in mind, all transformation, search, and analysis in this project is done with PySpark, the Python API for Spark.

- A Linux machine generates host logs through Auditbeat and sends them over the network to the Logstash host.

- A Linux machine generates network logs through Zeek and sends them over the network to the Logstash host.

- The Logstash host listens for and receives logs over the network, processes them, and writes them to local folders in Hive partition format. A nice upgrade here would be to have Logstash send these logs to S3, but since the goal is to keep costs low, local storage is used instead.

- Logs are stored locally in the Logstash host.

- Jupyter notebooks are used to transform and analyze the generated data, with PySpark as the core engine.
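To make "Hive partition format" concrete: each folder level is named `key=value`, so engines such as Spark can treat the keys as columns and skip folders that a query does not need. The sketch below builds such a path for one event. The partition keys (`source`, `year`, `month`, `day`) and the root folder are assumptions for illustration; the actual layout depends on the Logstash output configuration.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath


def partition_path(root: str, source: str, epoch_seconds: float) -> PurePosixPath:
    """Build a Hive-style partition folder for one log event.

    The keys used here (source/year/month/day) are illustrative assumptions.
    """
    event_time = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return (
        PurePosixPath(root)
        / f"source={source}"
        / f"year={event_time:%Y}"
        / f"month={event_time:%m}"
        / f"day={event_time:%d}"
    )


# 1577836800 is 2020-01-01T00:00:00Z
print(partition_path("/data/logs", "zeek", 1577836800))
# /data/logs/source=zeek/year=2020/month=01/day=01
```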

- Auditbeat: Host Logs

- Zeek: Network Logs

Logs are a special case when it comes to data modelling. For most use cases, no transformation of the raw data is needed up front, since any transformation usually happens at read time, depending on the analysis being performed. Because of that, the final data model is the same as the format generated by the tools used (Auditbeat and Zeek).
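The "transform at read time" idea can be sketched without Spark: the raw events stay untouched on disk, and each analysis picks the fields it needs when it reads them. The example below is a plain-Python illustration, assuming the logs are stored as one JSON object per line and using Zeek-style field names such as `id.orig_h`; `read_fields` is a hypothetical helper, not part of this project.

```python
import json
from typing import Iterable, Iterator, Sequence


def read_fields(raw_lines: Iterable[str], fields: Sequence[str]) -> Iterator[dict]:
    """Parse JSON lines and keep only the requested top-level fields.

    The raw data is never modified; the projection happens at read time.
    Fields missing from an event come back as None.
    """
    for line in raw_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        event = json.loads(line)
        yield {name: event.get(name) for name in fields}
```

In the notebooks the same pattern would be expressed with PySpark, typically by loading the folder with `spark.read.json(...)` and selecting columns per analysis.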

A large growth in data volume is easy to manage with Spark, which is built for big data. The easiest way to handle it is to scale the Spark cluster that processes the data horizontally.

Apache Airflow could be used as the scheduler to run the specific tasks, either as notebooks or as Python scripts.

Apache Spark works well with large data, but to serve a large number of users, Databricks can manage users and access to Spark (although it is a paid tool).

A description of every field in both datasets is available in the file Data Dictionary.md.

This project uses PySpark to run all transformations and analysis. To make the Spark environment easier to set up and reproducible, I've also built this container, which ships with everything needed and requires no configuration.

To use this container, run the following (assuming Docker is already installed on your machine):

PROJECT_PATH="<ABSOLUTE_PROJECT_PATH>"

docker pull ajesus37/pyspark-docker

docker run --rm -it -p 8888:8888 -v "$PROJECT_PATH":/home/user/udacity_project ajesus37/pyspark-docker bash

cd /home/user/udacity_project

python3 ETL.py

pyspark

The above commands should do the following:

- Download the pyspark-docker image locally;

- Run the container with the project path mounted inside it;

- Decompress the raw dataset inside the project folder;

- Start JupyterLab with the PySpark backend already working.

After that, copy the URL containing 127.0.0.1 from the terminal output and paste it into your browser. From there, browse the files and run the notebooks as needed.

To show what can be done with these 2 datasets together, the notebook Log Analysis Scenario.ipynb walks through an investigation of a malicious website accessed by a host in the network, using the network and host logs to determine which software contacted the malicious website.
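The core of such an investigation is a join between the two log types: the network log says which host and source port reached the malicious address, and the host log says which process owned that socket. The sketch below shows that correlation in plain Python; it is not the notebook's code. It assumes flattened events with Zeek `conn.log` style keys (`id.orig_h`, `id.orig_p`, `id.resp_h`) and ECS-style Auditbeat socket keys (`source.ip`, `source.port`, `destination.ip`, `process.name`, `process.pid`). The function name and sample values are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def find_calling_processes(
    conn_events: Iterable[dict], socket_events: Iterable[dict], malicious_ip: str
) -> List[dict]:
    """Match network connections to the host processes that opened them."""
    # Index host events by (source ip, source port, destination ip).
    by_flow: Dict[Tuple[str, int, str], List[dict]] = {}
    for event in socket_events:
        key = (event["source.ip"], event["source.port"], event["destination.ip"])
        by_flow.setdefault(key, []).append(event)

    matches = []
    for conn in conn_events:
        if conn["id.resp_h"] != malicious_ip:
            continue  # only connections to the malicious address matter
        key = (conn["id.orig_h"], conn["id.orig_p"], conn["id.resp_h"])
        for host_event in by_flow.get(key, []):
            matches.append(
                {
                    "host": conn["id.orig_h"],
                    "process": host_event["process.name"],
                    "pid": host_event["process.pid"],
                }
            )
    return matches


# Sample events with made-up values, for illustration only.
network = [{"id.orig_h": "10.0.0.5", "id.orig_p": 51000, "id.resp_h": "203.0.113.7"}]
host = [
    {"source.ip": "10.0.0.5", "source.port": 51000, "destination.ip": "203.0.113.7",
     "process.name": "curl", "process.pid": 4242},
]
print(find_calling_processes(network, host, "203.0.113.7"))
# [{'host': '10.0.0.5', 'process': 'curl', 'pid': 4242}]
```

With PySpark the same logic would be a filter on the network DataFrame followed by a join on those three columns.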