This code pattern gives a high-level overview of what to expect in a data science pipeline and the tools that can be used along the way. It starts with framing the business question and ends with building and deploying a data model. The pipeline is demonstrated through the employee attrition problem.

Employees are the backbone of any organization. An organization's performance depends heavily on the quality of its employees and on its ability to retain them. Employee attrition presents organizations with a number of challenges:

- The cost, in both money and time, of training new employees

- Loss of experienced employees

- Impact on productivity

- Impact on profit

The following solution is designed to help address the employee attrition problem. When the reader has completed this code pattern, they will understand:

- The process involved in solving a data science problem

- How to create and use a Watson Studio instance

- How to mitigate bias by transforming the original dataset through use of the AI Fairness 360 (AIF360) toolkit

- How to build and deploy the model in Watson Studio using various tools

The dataset used in the code pattern is supplied by Kaggle and contains HR analytics data on employees who stay and employees who leave. The data includes metrics such as education level, job satisfaction, and commute distance.

The data is made available under the following license agreements:

| Asset | License | Source Link |
|---|---|---|
| Employee Attrition Data - Database License | Open Database License (ODbL) | Kaggle |
| Employee Attrition Data - Content License | Database Contents License (DbCL) | Kaggle |

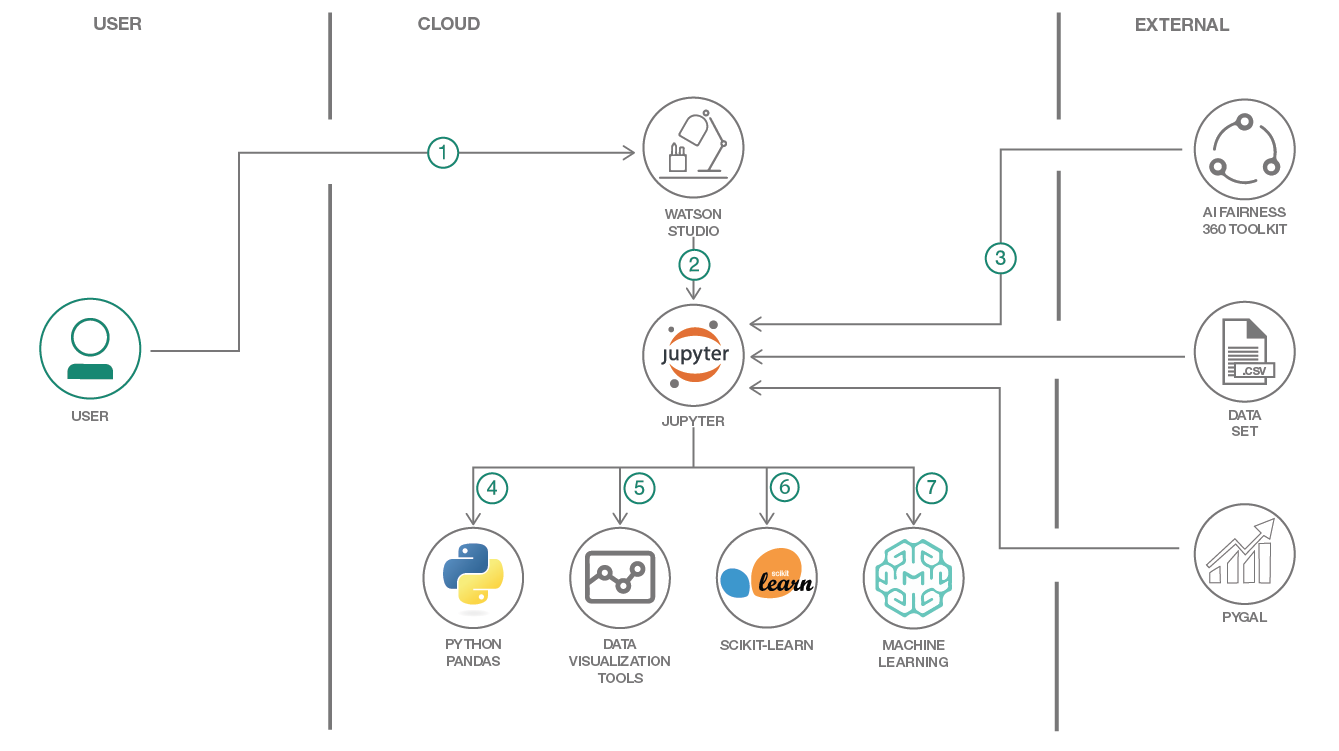

1. Create an IBM Watson Studio account and log in.

2. Upload the Jupyter notebook and start running it.

3. The notebook downloads the dataset and imports the fairness toolkit (AIF360) and the Pygal data visualization library.

4. Pandas is used to read the data and perform initial data exploration.

5. Matplotlib, Seaborn, Plotly, Bokeh, and Pygal (from step 3) are used to visualize the data.

6. Scikit-Learn and AIF360 (from step 3) are used for model development.

7. Use the IBM Watson Machine Learning feature to deploy the model and access it to generate employee attrition classifications.
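The bias check and mitigation that the flow above delegates to AIF360 can be illustrated without the toolkit. The following is a minimal pure-Python sketch, not the notebook's code: the toy records, the group labels, and the helper names are all hypothetical. It computes disparate impact (the favorable-outcome rate of the unprivileged group divided by that of the privileged group) and then applies instance reweighing, the idea behind AIF360's `Reweighing` preprocessing algorithm. This document does not state which AIF360 algorithm the notebook actually uses, so treat the choice of reweighing as an assumption.

```python
from collections import Counter

# Hypothetical toy records: (group, attrition) pairs. "group" stands in for a
# protected attribute; attrition 0 (the employee stays) is the favorable outcome.
records = [
    ("privileged", 0), ("privileged", 0), ("privileged", 0), ("privileged", 1),
    ("unprivileged", 0), ("unprivileged", 1), ("unprivileged", 1), ("unprivileged", 1),
]
FAVORABLE = 0


def favorable_rate(rows, group, weights=None):
    """Weighted share of rows in `group` that carry the favorable label."""
    weights = weights or [1.0] * len(rows)
    total = sum(w for (g, _), w in zip(rows, weights) if g == group)
    favorable = sum(
        w for (g, y), w in zip(rows, weights) if g == group and y == FAVORABLE
    )
    return favorable / total


def disparate_impact(rows, weights=None):
    """Unprivileged favorable rate divided by privileged favorable rate."""
    return favorable_rate(rows, "unprivileged", weights) / favorable_rate(
        rows, "privileged", weights
    )


def reweighing_weights(rows):
    """Weight each row by P(group) * P(label) / P(group, label)."""
    n = len(rows)
    group_counts = Counter(g for g, _ in rows)
    label_counts = Counter(y for _, y in rows)
    pair_counts = Counter(rows)
    return [
        group_counts[g] * label_counts[y] / (n * pair_counts[(g, y)])
        for g, y in rows
    ]


weights = reweighing_weights(records)
di_before = disparate_impact(records)
di_after = disparate_impact(records, weights)
print(f"disparate impact before: {di_before:.3f}, after: {di_after:.3f}")
```

A disparate impact of 1.0 means both groups receive the favorable outcome at the same (weighted) rate. On this toy data the weights move the ratio from roughly 0.333 to 1.0; real data will rarely balance so cleanly.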

- IBM Watson Studio: Analyze data using RStudio, Jupyter, and Python in a configured, collaborative environment that includes IBM value-adds, such as managed Spark.

- IBM Watson Machine Learning: A set of REST APIs to develop applications that make smarter decisions, solve tough problems, and improve user outcomes.

- Jupyter Notebook: An open source web application that allows you to create and share documents that contain live code, equations, visualizations, and explanatory text.

- Artificial Intelligence: Artificial intelligence can be applied to disparate solution spaces to deliver disruptive technologies.

- Data Science: Systems and scientific methods to analyze structured and unstructured data in order to extract knowledge and insights.

- Python: Python is a programming language that lets you work more quickly and integrate your systems more effectively.

- Pandas: A Python library providing high-performance, easy-to-use data structures.

- AIF360 Fairness toolkit: This extensible open source toolkit can help you examine, report, and mitigate discrimination and bias in machine learning models throughout the AI application lifecycle.

- Scikit-Learn: Free software machine learning library for the Python programming language.

- Data Visualization tools: Bokeh, Matplotlib, Seaborn, Pygal and Plotly.

1. Create a Watson Machine Learning service instance

2. Sign up for Watson Studio

3. Create a new Watson Studio project

4. Create the notebook

5. Run the notebook

6. Save and share

Note: If you would prefer to skip the following steps and just follow along by viewing the completed notebook, simply:

- View the completed notebook and its outputs, as is.

- While viewing the notebook, you can optionally download it to store for future use.

From your IBM Cloud Dashboard create a Watson Machine Learning instance.

Once created, take note of the credentials listed under the Service credentials tab. These credentials will need to be added to the notebook created in the following steps.

Note: The `Machine Learning` service is required by our notebook to facilitate model deployment.

Log in or sign up for IBM's Watson Studio.

- Select the `New Project` option from the Watson Studio landing page and choose the `Data Science` option.

- To create a project in Watson Studio, give the project a name and either create a new `Cloud Object Storage` service or select an existing one from your IBM Cloud account.

- Upon successful project creation, you are taken to a dashboard view of your project. Take note of the `Assets` and `Settings` tabs; we'll use them to associate our project with any external assets (datasets and notebooks) and any IBM Cloud services.

- From the project dashboard view, click the `+ Add to project` button, then select `Notebook` as the asset type.

- Give your notebook a name and select your desired runtime; in this case we'll be using a Python runtime.

- Now select the `From URL` tab to specify the URL to the notebook in this repository.

- Enter this URL: `https://github.com/IBM/employee-attrition-aif360/blob/master/notebooks/employee-attrition.ipynb`

- Click the `Create` button.

Note: If queried for a Python version, select version `3.5`.

When running the notebook, you will come to the cell that requires you to enter your Watson Machine Learning instance credentials. These will be required to complete the notebook. Refer to step #1 above for more details.
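One way to fill in that cell without typing secrets directly into the notebook is to read them from environment variables and fail early if any are missing. This is a minimal sketch under stated assumptions: the field names in `REQUIRED_FIELDS`, the `WML_` prefix, and the `load_credentials` helper are all hypothetical placeholders. Use the exact fields shown on your own Service credentials tab.

```python
import os

# Placeholder field names: replace them with the fields listed on the
# Service credentials tab of your Watson Machine Learning instance.
REQUIRED_FIELDS = ("url", "apikey", "instance_id")


def load_credentials(env=os.environ, prefix="WML_"):
    """Collect credential fields from `env`, raising if any are missing."""
    creds = {field: env.get(prefix + field.upper(), "") for field in REQUIRED_FIELDS}
    missing = [field for field, value in creds.items() if not value]
    if missing:
        raise ValueError("Missing credential fields: " + ", ".join(missing))
    return creds


# Demonstration with a fake environment, so no real secrets are involved.
demo_env = {
    "WML_URL": "https://example.invalid",
    "WML_APIKEY": "not-a-real-key",
    "WML_INSTANCE_ID": "not-a-real-id",
}
wml_credentials = load_credentials(demo_env)
print(sorted(wml_credentials))
```

If you keep the credentials in the notebook itself instead, start that cell with `# @hidden_cell` so it is excluded when you share the notebook.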

When a notebook is executed, each code cell in the notebook is executed, in order, from top to bottom.

Each code cell is selectable and is preceded by a tag in the left margin. The tag format is `In [x]:`. Depending on the state of the notebook, the `x` can be:

- A blank, which indicates that the cell has never been executed.

- A number, which represents the relative order in which this code step was executed.

- A `*`, which indicates that the cell is currently executing.
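The first two states are also recorded in the saved notebook file. In the Jupyter notebook format (nbformat 4), each code cell carries an `execution_count` field that is `null` for a cell that has never run and an integer otherwise. The `*` state exists only in the live interface while a cell is running. The sketch below rebuilds the margin tags from a small hand-written notebook fragment; the fragment and the `cell_tags` helper are illustrative, not taken from this repository.

```python
import json

# A hand-written, minimal notebook fragment in the nbformat 4 layout.
notebook_json = """
{
  "cells": [
    {"cell_type": "markdown", "metadata": {}, "source": ["# Title"]},
    {"cell_type": "code", "execution_count": 1, "metadata": {},
     "outputs": [], "source": ["import pandas"]},
    {"cell_type": "code", "execution_count": null, "metadata": {},
     "outputs": [], "source": ["print('hi')"]}
  ]
}
"""


def cell_tags(notebook):
    """Return the left-margin tag for every code cell, in document order."""
    tags = []
    for cell in notebook["cells"]:
        if cell["cell_type"] != "code":
            continue  # markdown cells have no In [x]: tag
        count = cell.get("execution_count")
        tags.append("In [ ]:" if count is None else f"In [{count}]:")
    return tags


tags = cell_tags(json.loads(notebook_json))
print(tags)
```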

There are several ways to execute the code cells in your notebook:

- One cell at a time.
  - Select the cell, and then press the `Play` button in the toolbar.

- Batch mode, in sequential order.
  - From the `Cell` menu bar, there are several options available. For example, you can `Run All` cells in your notebook, or you can `Run All Below`, which will start executing from the first cell under the currently selected cell and then continue executing all cells that follow.

- At a scheduled time.
  - Press the `Schedule` button located in the top right section of your notebook panel. Here you can schedule your notebook to be executed once at some future time, or repeatedly at your specified interval.

Under the File menu, there are several ways to save your notebook:

- `Save` will simply save the current state of your notebook, without any version information.

- `Save Version` will save the current state of your notebook with a version tag that contains a date and time stamp. Up to 10 versions of your notebook can be saved, each one retrievable by selecting the `Revert To Version` menu item.

You can share your notebook by selecting the `Share` button located in the top right section of your notebook panel. The end result of this action will be a URL link that will display a “read-only” version of your notebook. You have several options to specify exactly what you want shared from your notebook:

- `Only text and output`: will remove all code cells from the notebook view.

- `All content excluding sensitive code cells`: will remove any code cells that contain a sensitive tag. For example, `# @hidden_cell` is used to protect your credentials from being shared.

- `All content, including code`: displays the notebook as is.

- A variety of `download as` options are also available in the menu.

View a copy of the notebook including output here.

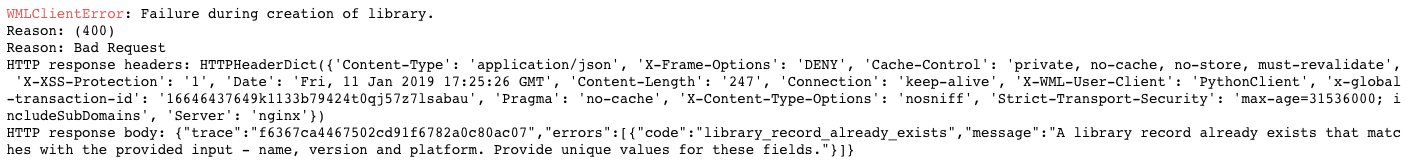

Notebook error:

This will occur if you run the notebook multiple times. The custom library `NAME` found in the structure below must be unique for each run. Change the value and run the cell again.

    library_metadata = {
        client.runtimes.LibraryMetaNames.NAME: "PipelineLabelEncoder-Custom",
        client.runtimes.LibraryMetaNames.DESCRIPTION: "label_encoder_sklearn",
        client.runtimes.LibraryMetaNames.FILEPATH: "Pipeline_LabelEncoder-0.1.zip",
        client.runtimes.LibraryMetaNames.VERSION: "1.0",
        client.runtimes.LibraryMetaNames.PLATFORM: {"name": "python", "versions": ["3.5"]}
    }
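Rather than editing the name by hand before each run, you could generate a fresh one. The following is a minimal sketch: the `unique_library_name` helper and the eight-character suffix are assumptions, and this document does not say whether the service restricts the length or character set of library names.

```python
import uuid

BASE_NAME = "PipelineLabelEncoder-Custom"


def unique_library_name(base=BASE_NAME):
    """Append a short random hex suffix so each run gets a different name."""
    return f"{base}-{uuid.uuid4().hex[:8]}"


library_name = unique_library_name()
print(library_name)

# In the notebook, this value would replace the hard-coded NAME string:
#   client.runtimes.LibraryMetaNames.NAME: library_name,
```

A timestamp suffix would work equally well and has the advantage of showing when each library was stored.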

- Artificial Intelligence Code Patterns: Enjoyed this Code Pattern? Check out our other AI Code Patterns.

- Data Analytics Code Patterns: Enjoyed this Code Pattern? Check out our other Data Analytics Code Patterns

- AI and Data Code Pattern Playlist: Bookmark our playlist with all of our Code Pattern videos

- With Watson: Want to take your Watson app to the next level? Looking to utilize Watson Brand assets? Join the With Watson program to leverage exclusive brand, marketing, and tech resources to amplify and accelerate your Watson embedded commercial solution.

- Watson Studio: Master the art of data science with IBM's Watson Studio

This code pattern is licensed under the Apache License, Version 2. Separate third-party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 and the Apache License, Version 2.