Kwanghyeon Lee, Mina Kang, Hyungho Na, Heesun Bae, Byeonghu Na, Doyun Kwon, Seungjae Shin, Yeongmin Kim, Taewoo Kim, Seungmin Yun, and Il-Chul Moon

| [paper] |

We will upload our paper to arXiv soon.

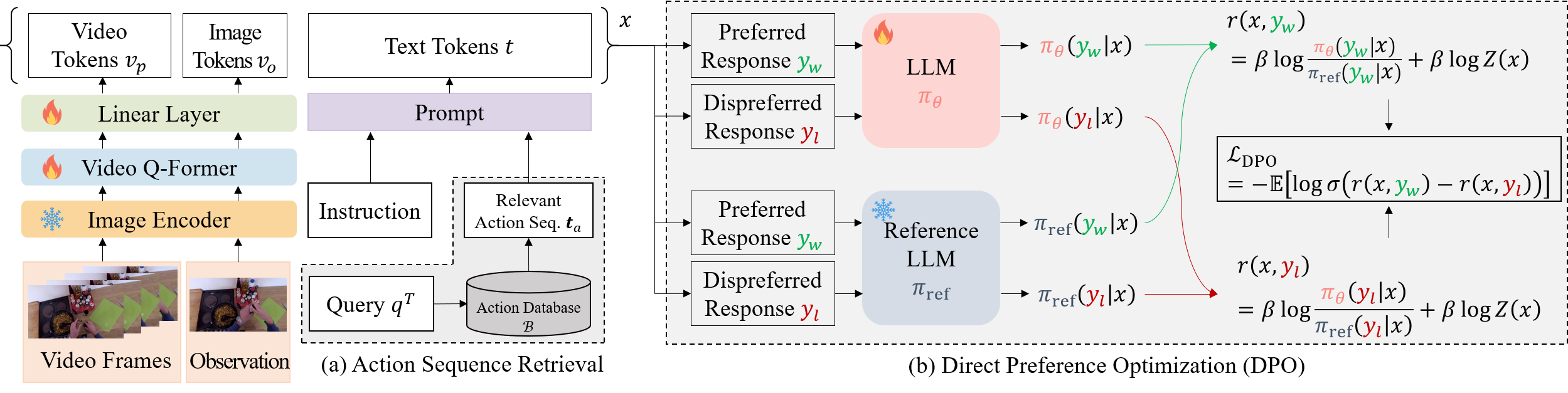

Our method combines two components: Direct Preference Optimization (DPO) and Retrieval-Augmented Generation (RAG). We use RAG to retrieve additional narrations from an action database and add them to the input, and we train Multi-modal Large Language Models (MLLMs) with the DPO loss.
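For readers unfamiliar with DPO, the sketch below computes the standard per-example DPO loss from sequence log-probabilities. It follows the commonly published formulation and is not taken from this repository's training code; the function name, argument names, and the default `beta` are assumptions made for illustration.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss (illustrative; `beta=0.1` is an assumed default).

    Each argument is the summed log-probability of a full answer under
    either the trainable policy or the frozen reference model.
    """
    # How much more the policy prefers each answer than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss falls as the policy raises the likelihood of the chosen answer relative to the rejected one, measured against the reference model.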

- Our implementation is based on EgoPlan-Bench.

- For the instruction datasets, the corresponding video/image datasets, and the model checkpoints we used, refer to EgoPlan-Bench.

- We also provide our generated Action Database and Model Checkpoint.

- Instruction Dataset & Corresponding Dataset

  - EgoPlan-Bench (Train / Valid / Test) & EpicKitchens / Ego4D Dataset

    - Instruction Dataset:

      - Train (50K): EgoPlan_IT.json

      - Valid (3K): EgoPlan_validation.json

      - Test (2K): EgoPlan_test.json

    - Video Dataset:

      - EpicKitchens Dataset: EPIC-KITCHENS-100

      - Ego4D Dataset: Ego4D

  - Image-based Instructions from MiniGPT-4 (3K) & cc_sbu_align Dataset

    - Instruction & Image Dataset: the zip file contains the instruction .json file and the images.

  - Image-based Instructions from LLaVA (150K) & MS COCO 2014 Training Dataset

    - Instruction Dataset:

      - LLaVA Instruction Dataset: llava_instruct_150k.json

    - Image Dataset:

      - MS COCO 2014 Training Image Dataset

  - Video-based Instructions from VideoChat (11K) & WebVid Dataset

    - Instruction Dataset:

      - VideoChat Instruction Dataset: videochat_instruct_11k.json

      - Important! Because we could not obtain the full WebVid dataset, we use a revised instruction file that matches the videos we have. You can download videochat_instruct_11k_revised.json.

    - Video Dataset:

      - WebVid Dataset (for VideoChat Instruction): Since the WebVid dataset is no longer available, we download the videos in two steps.

        - Download the WebVid-10M dataset information csv file.

        - Download the video files and save them to a path of your choice with our provided Python code. (Not all videos can be downloaded, because some are no longer accessible, so we use videochat_instruct_11k_revised.json instead.)

- Model Checkpoint:

  - Original (Vanilla) Video-LLaMA: Original Video-LLaMA

  - Video-LLaMA finetuned on the EgoPlan_IT dataset, provided by EgoPlan-Bench: Finetuned Video-LLaMA (with lora weights)

  - Vision Transformer: eva_vit_g.pth (You should use Git LFS to download it.)

  - Q-Former: blip2_pretrained_flant5xxl.pth (You should use Git LFS to download it.)

  - BERT: bert-base-uncased

- Our RAG Dataset

- Our Checkpoint

  - You can download our model ckpt from here.
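Because only part of WebVid can be downloaded, the instruction file has to be restricted to the videos that actually exist on disk. The sketch below shows one way such a revised file could be produced. It is not the script used to create videochat_instruct_11k_revised.json. The function name is hypothetical, and the layout of the instruction file (a JSON list whose entries carry a `"video"` file name) is an assumption that should be checked against the real file.

```python
import json
from pathlib import Path


def filter_instructions(instruction_file, video_dir, output_file):
    """Keep only instruction entries whose video file exists in `video_dir`.

    ASSUMPTION: `instruction_file` is a JSON list of dicts, each with a
    "video" key holding the video file name.
    """
    entries = json.loads(Path(instruction_file).read_text(encoding="utf-8"))
    # Names of the video files that were successfully downloaded.
    available = {p.name for p in Path(video_dir).iterdir() if p.is_file()}
    kept = [entry for entry in entries if entry.get("video") in available]
    Path(output_file).write_text(json.dumps(kept, indent=2), encoding="utf-8")
    # Return counts so the caller can see how many entries were dropped.
    return len(kept), len(entries)
```

Comparing the two returned counts gives a quick view of how much of the instruction data survives the partial download.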

Since the EpicKitchens and Ego4D datasets are large, download only the parts you need if your resources are limited. We follow the path settings from EgoPlan-Bench.

Download the RGB frames of EPIC-KITCHENS-100 and the videos of Ego4D. The folder structures of the two datasets are shown below:

- EpicKitchens Dataset:

      EPIC-KITCHENS
      └── P01
          └── P01_01
              ├── frame_0000000001.jpg
              └── ...

- Ego4D Dataset:

      Ego4D
      └── v1
          ├── 000786a7-3f9d-4fe6-bfb3-045b368f7d44.mp4
          └── ...
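As a small illustration of the layout above, the helpers below build file paths that match it. The function names are hypothetical and not part of this repository. The 10-digit zero padding and the participant prefix (`P01` from `P01_01`) are inferred from the example entries shown above.

```python
from pathlib import Path


def epic_frame_path(root, video_id, frame_idx):
    """Path of one EPIC-KITCHENS RGB frame, e.g. P01/P01_01/frame_0000000001.jpg."""
    # Inferred from the example tree: the participant folder is the part
    # of the video ID before the underscore.
    participant = video_id.split("_")[0]
    return Path(root) / participant / video_id / f"frame_{frame_idx:010d}.jpg"


def ego4d_video_path(root, video_uid):
    """Path of one Ego4D video stored under the v1 folder."""
    return Path(root) / "v1" / f"{video_uid}.mp4"
```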

We provide the files and settings we used for reproduction.

- Since we had some trouble downloading the EpicKitchens dataset, we also share the list of EpicKitchens video IDs we used, so you can check whether any videos are missing compared with the original EPIC-KITCHENS-100.

- You can download our model configs to reproduce the models in the tables. (The DPO-finetuned model checkpoint, with lora weights, is here.)
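The check against the shared video ID list can be sketched as follows. This is a minimal example, not the repository's own tooling. It assumes the list is a plain text file with one video ID per line (the actual file format may differ) and that frames are stored as `<root>/<participant>/<video_id>/`; the function name is hypothetical.

```python
from pathlib import Path


def find_missing_videos(epic_root, id_list_file):
    """Return the video IDs in the list that have no folder under `epic_root`.

    ASSUMPTION: `id_list_file` holds one video ID per line (e.g. P01_01).
    """
    lines = Path(id_list_file).read_text(encoding="utf-8").splitlines()
    expected = {line.strip() for line in lines if line.strip()}
    # Video folders sit two levels below the root: P01/P01_01, P01/P01_02, ...
    present = {p.name for p in Path(epic_root).glob("P*/P*_*") if p.is_dir()}
    return sorted(expected - present)
```

An empty result means every listed video has a matching folder in the local copy.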

You can use RAG to generate training and test datasets that include additional narrations retrieved from the action database (EpicKitchens training dataset + Ego4D generated dataset).

- Before generating the RAG Train / Test datasets, download our Ego4D generated action database here.

- RAG Training Dataset (/RAG_train):

- Download the dataset for the RAG training data.

- Run the bash script.

bash run.sh

- RAG Test Dataset (/RAG_test):

- Download the dataset for the RAG test data.

- Run each script in order.

bash run.sh
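To make the retrieval step concrete, the sketch below retrieves the narrations most similar to a query from a small action database and prepends them to a question. It is a toy illustration only. The scripts in /RAG_train and /RAG_test may use a different retriever, similarity measure, and prompt format; the bag-of-words cosine similarity, the function names, and the "Related narrations" prefix are all assumptions.

```python
import math
from collections import Counter


def _bow(text):
    """Bag-of-words vector of a text (lowercased, split on whitespace)."""
    return Counter(text.lower().split())


def _cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve_narrations(query, action_db, k=2):
    """Return the k narrations in `action_db` most similar to `query`."""
    q = _bow(query)
    ranked = sorted(action_db, key=lambda n: _cosine(q, _bow(n)), reverse=True)
    return ranked[:k]


def augment_question(question, narrations):
    """Prepend retrieved narrations to the question (assumed prompt format)."""
    return f"Related narrations: {'; '.join(narrations)}\n{question}"
```

In practice a learned text or video embedding would usually replace the bag-of-words vectors, while the retrieve-then-prepend flow stays the same.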

Before finetuning or evaluating, prepare a .yaml file that sets the configuration. To train on the dataset with RAG, change the config key 'datasets.datasets.egoplan_contrastive.answer_type' to "egoplan_qa_with_narr".
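The dotted key above refers to a nested entry in the configuration. The sketch below shows how such a key maps onto a nested dictionary, as you would get after loading the .yaml file with a YAML parser. The helper name is hypothetical, and the placeholder for the previous value is not taken from the real config files.

```python
def set_by_dotted_key(config, dotted_key, value):
    """Set a nested config entry addressed by a dot-separated key."""
    *parents, leaf = dotted_key.split(".")
    node = config
    for key in parents:
        # Create intermediate levels if they are missing.
        node = node.setdefault(key, {})
    node[leaf] = value
    return config


# Placeholder config; the real file contains many more entries.
cfg = {"datasets": {"datasets": {"egoplan_contrastive": {"answer_type": "<previous value>"}}}}
set_by_dotted_key(
    cfg,
    "datasets.datasets.egoplan_contrastive.answer_type",
    "egoplan_qa_with_narr",
)
```

In the .yaml file itself, this corresponds to editing the `answer_type` entry nested under `datasets` → `datasets` → `egoplan_contrastive`.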

- Run

bash scripts/format_finetune.sh {config} {device} {node} {master port}

- Ex.

bash scripts/format_finetune.sh Original_RAG_X_loss_DPO 0,1,2,3,4,5,6,7 8 26501

- Run

bash scripts/format_eval.sh {config} {device} {RAG} {epoch}

bash scripts/format_test.sh {config} {device} {RAG} {epoch}

- Ex.

bash scripts/format_eval.sh Original_RAG_X_loss_DPO 0 True 9

bash scripts/format_test.sh Original_RAG_X_loss_DPO 0 True 9

| | DPO loss | Test Acc.(%) |
|---|---|---|
| Base → | | 41.35 |
| Ours → | ✔ | 53.98 |

- The DPO-finetuned model reaches its test accuracy of 53.98% at epoch 9 (10/10).

| | Base | Loss type | RAG | Valid Acc.(%) / Approx. Training Time |
|---|---|---|---|---|
| Baseline | Original | - | - | 30.44† / Given Pre-trained Model |
| | | Contrastive | ✗ | 44.42† / Given Pre-trained Model |
| Ours | Original | DPO | ✗ | 60.24 / 0.5 days |
| | DPO-Finetuned | Contrastive (Iterative) | ✓ | 46.05 / 0.5 days |
| | | DPO (Iterative) | ✗ | 61.11 / 0.5 days |
| | | DPO (Iterative) | ✓ | 60.24 / 0.5 days |

Note that Base indicates the initial checkpoint from which the model is fine-tuned.

- The DPO-finetuned model reaches its validation accuracy of 60.24% at epoch 8 (9/10).

This repository benefits from Epic-Kitchens, Ego4D, EgoPlan, Video-LLaMA, LLaMA, MiniGPT-4, LLaVA, and VideoChat. Thanks for their wonderful work!