We use the GDELT dataset with PyCaret to demonstrate how a low-code machine learning library can be used to quickly prototype machine learning models.

The GDELT Project is an initiative to construct a catalog of human societal-scale behavior and beliefs across all countries of the world, connecting every person, organization, location, count, theme, news source, and event across the planet into a single massive network that captures what's happening around the world, what its context is and who's involved, and how the world is feeling about it, every single day.

Automated EDA

```python
from typing import List

import pandas as pd

from utils import transform_data, int_to_datetime, get_null_values

pd.set_option('display.max_columns', None)
pd.set_option('display.max_rows', None)

data = pd.read_csv("230722.csv")

column_names = [
    'GlobalEventID', 'Day', 'MonthYear', 'Year', 'FractionDate',
    'Actor1Code', 'Actor1Name', 'Actor1CountryCode', 'Actor1KnownGroupCode',
    'Actor1EthnicCode', 'Actor1Religion1Code', 'Actor1Religion2Code',
    'Actor1Type1Code', 'Actor1Type2Code', 'Actor1Type3Code',
    'Actor2Code', 'Actor2Name', 'Actor2CountryCode', 'Actor2KnownGroupCode',
    'Actor2EthnicCode', 'Actor2Religion1Code', 'Actor2Religion2Code',
    'Actor2Type1Code', 'Actor2Type2Code', 'Actor2Type3Code',
    'IsRootEvent', 'EventCode', 'EventBaseCode', 'EventRootCode', 'QuadClass',
    'GoldsteinScale', 'NumMentions', 'NumSources', 'NumArticles', 'AvgTone',
    'Actor1Geo_Type', 'Actor1Geo_FullName', 'Actor1Geo_CountryCode',
    'Actor1Geo_ADM1Code', 'Actor1Geo_Lat', 'Actor1Geo_Long', 'Actor1Geo_FeatureID',
    'Actor2Geo_Type', 'Actor2Geo_FullName', 'Actor2Geo_CountryCode',
    'Actor2Geo_ADM1Code', 'Actor2Geo_Lat', 'Actor2Geo_Long', 'Actor2Geo_FeatureID',
    'DateAdded', 'SourceURL',
]

data = transform_data(data, column_names)
data = int_to_datetime(data, "day")
data = int_to_datetime(data, "dateadded")

# Let's use a small segment of the dataset (data_sm: 2022 events, data_lm: all other years)
data_sm = data[data['year'] == 2022]
data_lm = data[data['year'] != 2022]

# columns = data_sm.columns.tolist()
# columns.remove('quadclass')
# columns.append('quadclass')
# data_sm = data_sm[columns]

data_lm.head()
```


| column | 13 | 14 | 15 | 16 | 17 |
|---|---|---|---|---|---|
| globaleventid | 1116435795 | 1116435796 | 1116435797 | 1116435798 | 1116435799 |
| day | 2023-06-22 | 2023-06-22 | 2023-06-22 | 2023-06-22 | 2023-06-22 |
| monthyear | 202306 | 202306 | 202306 | 202306 | 202306 |
| year | 2023 | 2023 | 2023 | 2023 | 2023 |
| fractiondate | 2023.4712 | 2023.4712 | 2023.4712 | 2023.4712 | 2023.4712 |
| actor1code | NaN | AFR | AFR | AFR | AFR |
| actor1name | NaN | AFRICA | AFRICA | AFRICA | AFRICA |
| actor1countrycode | NaN | AFR | AFR | AFR | AFR |
| actor1knowngroupcode | NaN | NaN | NaN | NaN | NaN |
| actor1ethniccode | NaN | NaN | NaN | NaN | NaN |
| actor1religion1code | NaN | NaN | NaN | NaN | NaN |
| actor1religion2code | NaN | NaN | NaN | NaN | NaN |
| actor1type1code | NaN | NaN | NaN | NaN | NaN |
| actor1type2code | NaN | NaN | NaN | NaN | NaN |
| actor1type3code | NaN | NaN | NaN | NaN | NaN |
| actor2code | BUS | NaN | RUS | RUS | RUSGOV |
| actor2name | COMPANY | NaN | RUSSIAN | RUSSIAN | RUSSIAN |
| actor2countrycode | NaN | NaN | RUS | RUS | RUS |
| actor2knowngroupcode | NaN | NaN | NaN | NaN | NaN |
| actor2ethniccode | NaN | NaN | NaN | NaN | NaN |
| actor2religion1code | NaN | NaN | NaN | NaN | NaN |
| actor2religion2code | NaN | NaN | NaN | NaN | NaN |
| actor2type1code | BUS | NaN | NaN | NaN | GOV |
| actor2type2code | NaN | NaN | NaN | NaN | NaN |
| actor2type3code | NaN | NaN | NaN | NaN | NaN |
| isrootevent | 0 | 1 | 1 | 0 | 1 |
| eventcode | 40 | 43 | 43 | 43 | 43 |
| eventbasecode | 40 | 43 | 43 | 43 | 43 |
| eventrootcode | 4 | 4 | 4 | 4 | 4 |
| quadclass | Verbal Cooperation | Verbal Cooperation | Verbal Cooperation | Verbal Cooperation | Verbal Cooperation |
| goldsteinscale | 1.0 | 2.8 | 2.8 | 2.8 | 2.8 |
| nummentions | 5 | 4 | 10 | 2 | 18 |
| numsources | 1 | 1 | 3 | 1 | 3 |
| numarticles | 5 | 4 | 10 | 2 | 18 |
| avgtone | 1.118963 | 0.790514 | -0.491013 | 0.790514 | -0.491013 |
| actor1geo_type | 0 | 1 | 4 | 1 | 4 |
| actor1geo_fullname | NaN | Russia | Pretoria, Gauteng, South Africa | Russia | Pretoria, Gauteng, South Africa |
| actor1geo_countrycode | NaN | RS | SF | RS | SF |
| actor1geo_adm1code | NaN | RS | SF06 | RS | SF06 |
| actor1geo_lat | NaN | 60.0000 | -25.7069 | 60.0000 | -25.7069 |
| actor1geo_long | NaN | 100.0000 | 28.2294 | 100.0000 | 28.2294 |
| actor1geo_featureid | NaN | RS | -1273769 | RS | -1273769 |
| actor2geo_type | 3 | 1 | 4 | 1 | 4 |
| actor2geo_fullname | Fort Smith, Arkansas, United States | Russia | Pretoria, Gauteng, South Africa | Russia | Pretoria, Gauteng, South Africa |
| actor2geo_countrycode | US | RS | SF | RS | SF |
| actor2geo_adm1code | USAR | RS | SF06 | RS | SF06 |
| actor2geo_lat | 35.3859 | 60.0000 | -25.7069 | 60.0000 | -25.7069 |
| actor2geo_long | -94.3985 | 100.0000 | 28.2294 | 100.0000 | 28.2294 |
| actor2geo_featureid | 76952 | RS | -1273769 | RS | -1273769 |
| dateadded | 2023-07-22 | 2023-07-22 | 2023-07-22 | 2023-07-22 | 2023-07-22 |
| sourceurl | https://www.kuaf.com/show/ozarks-at-large/2023 ... | https://www.beijingbulletin.com/news/273906652 ... | https://www.beijingbulletin.com/news/273906652 ... | https://www.beijingbulletin.com/news/273906652 ... | https://www.beijingbulletin.com/news/273906652 ... |

Missing values per column:

| column | missing values |
|---|---|
| actor2type3code | 87517 |
| actor1type3code | 87517 |
| actor2religion2code | 87351 |
| actor1religion2code | 87289 |
| actor2ethniccode | 87168 |
| actor1ethniccode | 87011 |
| actor2knowngroupcode | 86724 |
| actor1knowngroupcode | 86627 |
| actor2religion1code | 86621 |
| actor1religion1code | 86523 |
| actor2type2code | 86025 |
| actor1type2code | 85240 |
| actor2type1code | 58516 |
| actor1type1code | 50638 |
| actor2countrycode | 48626 |
| actor1countrycode | 37956 |
| actor2code | 25351 |
| actor2name | 25351 |
| actor1geo_fullname | 11051 |
| actor1geo_long | 11051 |
| actor1geo_lat | 11051 |
| actor1geo_featureid | 11044 |
| actor1geo_adm1code | 11044 |
| actor1geo_countrycode | 11044 |
| actor1name | 8630 |
| actor1code | 8630 |
| actor2geo_lat | 2723 |
| actor2geo_fullname | 2723 |
| actor2geo_long | 2723 |
| actor2geo_adm1code | 2718 |
| actor2geo_countrycode | 2718 |
| actor2geo_featureid | 2718 |
| actor2geo_type | 0 |
| dateadded | 0 |
| globaleventid | 0 |
| isrootevent | 0 |
| actor1geo_type | 0 |
| avgtone | 0 |
| numarticles | 0 |
| numsources | 0 |
| nummentions | 0 |
| goldsteinscale | 0 |
| quadclass | 0 |
| eventrootcode | 0 |
| eventbasecode | 0 |
| eventcode | 0 |
| day | 0 |
| fractiondate | 0 |
| year | 0 |
| monthyear | 0 |
| sourceurl | 0 |
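The `utils` module is not shown in this post, so the following is only a hypothetical sketch of what `transform_data`, `int_to_datetime` and `get_null_values` might do, inferred from the outputs above: column names are lower-cased, `quadclass` holds text labels, `YYYYMMDD` integers become dates, and null counts are sorted in descending order. The label mapping follows the four GDELT QuadClass codes; everything else here is an assumption.

```python
from typing import List

import pandas as pd

# Hypothetical helpers: the real utils module is not shown, these only mirror its visible effects.

# GDELT QuadClass codes and the labels that appear in the quadclass column above
QUAD_CLASS_LABELS = {
    1: "Verbal Cooperation",
    2: "Material Cooperation",
    3: "Verbal Conflict",
    4: "Material Conflict",
}


def transform_data(df: pd.DataFrame, column_names: List[str]) -> pd.DataFrame:
    """Assign the GDELT column names in lower case and replace QuadClass codes with labels."""
    df = df.copy()
    df.columns = [name.lower() for name in column_names]
    df["quadclass"] = df["quadclass"].map(QUAD_CLASS_LABELS)
    return df


def int_to_datetime(df: pd.DataFrame, column: str) -> pd.DataFrame:
    """Parse integer dates such as 20230622 into Timestamps, using the first 8 digits only."""
    df = df.copy()
    df[column] = pd.to_datetime(df[column].astype(str).str[:8], format="%Y%m%d")
    return df


def get_null_values(df: pd.DataFrame) -> pd.Series:
    """Number of missing values per column, largest first."""
    return df.isna().sum().sort_values(ascending=False)


# Usage on a one-row stand-in frame
raw = pd.DataFrame([[20230622, 4, 20230722]])
events = transform_data(raw, ["Day", "QuadClass", "DateAdded"])
events = int_to_datetime(events, "day")
events = int_to_datetime(events, "dateadded")
print(events)
```

Slicing to the first eight digits is a defensive choice in case a date column carries a time component as well.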

```python
new_data = data_lm[[
    'fractiondate', 'year', 'monthyear',
    'actor1code', 'actor1name', 'actor1geo_type', 'actor1geo_long', 'actor1geo_lat',
    'actor2code', 'actor2name', 'actor2geo_type', 'actor2geo_long', 'actor2geo_lat',
    'isrootevent', 'eventcode', 'eventrootcode', 'goldsteinscale',
    'nummentions', 'numsources', 'avgtone', 'quadclass',
]]

new_data.head()
```


| column | 13 | 14 | 15 | 16 | 17 |
|---|---|---|---|---|---|
| fractiondate | 2023.4712 | 2023.4712 | 2023.4712 | 2023.4712 | 2023.4712 |
| year | 2023 | 2023 | 2023 | 2023 | 2023 |
| monthyear | 202306 | 202306 | 202306 | 202306 | 202306 |
| actor1code | NaN | AFR | AFR | AFR | AFR |
| actor1name | NaN | AFRICA | AFRICA | AFRICA | AFRICA |
| actor1geo_type | 0 | 1 | 4 | 1 | 4 |
| actor1geo_long | NaN | 100.0000 | 28.2294 | 100.0000 | 28.2294 |
| actor1geo_lat | NaN | 60.0000 | -25.7069 | 60.0000 | -25.7069 |
| actor2code | BUS | NaN | RUS | RUS | RUSGOV |
| actor2name | COMPANY | NaN | RUSSIAN | RUSSIAN | RUSSIAN |
| actor2geo_type | 3 | 1 | 4 | 1 | 4 |
| actor2geo_long | -94.3985 | 100.0000 | 28.2294 | 100.0000 | 28.2294 |
| actor2geo_lat | 35.3859 | 60.0000 | -25.7069 | 60.0000 | -25.7069 |
| isrootevent | 0 | 1 | 1 | 0 | 1 |
| eventcode | 40 | 43 | 43 | 43 | 43 |
| eventrootcode | 4 | 4 | 4 | 4 | 4 |
| goldsteinscale | 1.0 | 2.8 | 2.8 | 2.8 | 2.8 |
| nummentions | 5 | 4 | 10 | 2 | 18 |
| numsources | 1 | 1 | 3 | 1 | 3 |
| avgtone | 1.118963 | 0.790514 | -0.491013 | 0.790514 | -0.491013 |
| quadclass | Verbal Cooperation | Verbal Cooperation | Verbal Cooperation | Verbal Cooperation | Verbal Cooperation |

```python
from pycaret.classification import ClassificationExperiment

exp = ClassificationExperiment()
exp.setup(data=new_data,
          target='quadclass',
          session_id=123)
```

| Description | Value |
|---|---|
| Session id | 123 |
| Target | quadclass |
| Target type | Multiclass |
| Target mapping | Material Conflict: 0, Material Cooperation: 1, Verbal Conflict: 2, Verbal Cooperation: 3 |
| Original data shape | (87552, 21) |
| Transformed data shape | (87552, 31) |
| Transformed train set shape | (61286, 31) |
| Transformed test set shape | (26266, 31) |
| Ordinal features | 1 |
| Numeric features | 13 |
| Categorical features | 7 |
| Rows with missing values | 40.5% |
| Preprocess | True |
| Imputation type | simple |
| Numeric imputation | mean |
| Categorical imputation | mode |
| Maximum one-hot encoding | 25 |
| Encoding method | None |
| Fold Generator | StratifiedKFold |
| Fold Number | 10 |
| CPU Jobs | -1 |
| Use GPU | False |
| Log Experiment | False |
| Experiment Name | clf-default-name |
| USI | b912 |
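The setup grid shows the frame growing from 21 to 31 columns. That is consistent with one-hot encoding the two geo-type columns, which the AutoViz output below lists as `actor1geo_type_0.0` to `actor1geo_type_5.0` and `actor2geo_type_0.0` to `actor2geo_type_5.0`: two columns become twelve, a net gain of ten. The pandas sketch reproduces only that column arithmetic with placeholder data (the `feature_*` columns are stand-ins); it illustrates the idea and is not PyCaret's own encoder.

```python
import pandas as pd

n_rows = 12
levels = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]  # six geo-type codes, as in the AutoViz column names

# 18 placeholder feature columns + 2 geo-type columns + 1 target = 21 columns, like new_data
df = pd.DataFrame({f"feature_{i}": range(n_rows) for i in range(18)})
df["actor1geo_type"] = [levels[i % 6] for i in range(n_rows)]
df["actor2geo_type"] = [levels[(i + 3) % 6] for i in range(n_rows)]
df["quadclass"] = "Verbal Cooperation"

# One-hot encode only the two low-cardinality geo-type columns
encoded = pd.get_dummies(df, columns=["actor1geo_type", "actor2geo_type"])

print(df.shape[1], encoded.shape[1])  # 21 31
print([c for c in encoded.columns if c.startswith("actor1geo_type")])
```

The same arithmetic would explain the regression setup later reporting 34 columns: there `quadclass` is a feature rather than the target, and one-hot encoding its four labels adds three more.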


Imported AutoViz v0.1.58. After importing, execute `%matplotlib inline` to display charts in Jupyter.

```python
AV = AutoViz_Class()
dfte = AV.AutoViz(filename, sep=',', depVar='', dfte=None, header=0, verbose=1, lowess=False,
                  chart_format='svg', max_rows_analyzed=150000, max_cols_analyzed=30, save_plot_dir=None)
```

Update:

- `verbose=0` displays charts in your local Jupyter notebook.
- `verbose=1` additionally provides EDA data cleaning suggestions. It also displays charts.
- `verbose=2` does not display charts but saves them in the `AutoViz_Plots` folder on the local machine.
- `chart_format='bokeh'` displays charts in your local Jupyter notebook.
- `chart_format='server'` displays charts in your browser: one tab for each chart type.
- `chart_format='html'` silently saves interactive HTML files on your local machine.

```text
Shape of your Data Set loaded: (87552, 31)
#######################################################################################
######################## C L A S S I F Y I N G V A R I A B L E S ####################
#######################################################################################
Classifying variables in data set...
```

Data cleaning improvement suggestions. Complete them before proceeding to ML modeling.

| Variable | Nuniques | dtype | Nulls | Nullpercent | NuniquePercent | Value counts Min | Data cleaning improvement suggestions |
|---|---|---|---|---|---|---|---|
| avgtone | 19394 | float64 | 0 | 0.000000 | 22.151407 | 0 | |
| actor2geo_long | 5121 | float64 | 0 | 0.000000 | 5.849095 | 0 | |
| actor1geo_long | 4968 | float64 | 0 | 0.000000 | 5.674342 | 0 | |
| actor2geo_lat | 4904 | float64 | 0 | 0.000000 | 5.601243 | 0 | skewed: cap or drop outliers |
| actor1geo_lat | 4759 | float64 | 0 | 0.000000 | 5.435627 | 0 | skewed: cap or drop outliers |
| actor1name | 1242 | float64 | 0 | 0.000000 | 1.418586 | 0 | skewed: cap or drop outliers |
| actor2name | 1077 | float64 | 0 | 0.000000 | 1.230126 | 0 | skewed: cap or drop outliers |
| actor1code | 804 | float64 | 0 | 0.000000 | 0.918311 | 0 | skewed: cap or drop outliers |
| actor2code | 715 | float64 | 0 | 0.000000 | 0.816658 | 0 | skewed: cap or drop outliers |
| nummentions | 507 | float64 | 0 | 0.000000 | 0.579084 | 0 | highly skewed: drop outliers or do box-cox transform |
| eventcode | 194 | float64 | 0 | 0.000000 | 0.221583 | 0 | highly skewed: drop outliers or do box-cox transform |
| numsources | 172 | float64 | 0 | 0.000000 | 0.196455 | 0 | highly skewed: drop outliers or do box-cox transform |
| goldsteinscale | 42 | float64 | 0 | 0.000000 | 0.047971 | 0 | |
| eventrootcode | 20 | float64 | 0 | 0.000000 | 0.022844 | 0 | |
| fractiondate | 5 | float64 | 0 | 0.000000 | 0.005711 | 0 | highly skewed: drop outliers or do box-cox transform |
| monthyear | 3 | float64 | 0 | 0.000000 | 0.003427 | 0 | highly skewed: drop outliers or do box-cox transform |
| year | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
| actor2geo_type_4.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | |
| actor2geo_type_3.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor2geo_type_2.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor2geo_type_0.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
| actor2geo_type_5.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
| actor1geo_type_5.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
| actor1geo_type_2.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| isrootevent | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | |
| actor1geo_type_4.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | |
| actor1geo_type_0.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor1geo_type_3.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor1geo_type_1.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor2geo_type_1.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |

```text
30 Predictors classified...
No variables removed since no ID or low-information variables found in data set
################ Multi_Classification problem #####################
Number of variables = 30 exceeds limit, finding top 30 variables through XGBoost
No categorical feature reduction done. All 14 Categorical vars selected
Removing correlated variables from 16 numerics using SULO method
After removing highly correlated variables, following 11 numeric vars selected: ['actor1code', 'actor1name', 'actor2code', 'actor2name', 'eventcode', 'avgtone', 'eventrootcode', 'actor2geo_long', 'actor2geo_lat', 'fractiondate', 'numsources']
Adding 14 categorical variables to reduced numeric variables of 11
############## F E A T U R E S E L E C T I O N ####################
Current number of predictors = 25
Finding Important Features using Boosted Trees algorithm...
using 25 variables...
using 20 variables...
using 15 variables...
using 10 variables...
using 5 variables...
Found 22 important features
#######################################################################################
######################## C L A S S I F Y I N G V A R I A B L E S ####################
#######################################################################################
Classifying variables in data set...
```

Data cleaning improvement suggestions. Complete them before proceeding to ML modeling.

| Variable | Nuniques | dtype | Nulls | Nullpercent | NuniquePercent | Value counts Min | Data cleaning improvement suggestions |
|---|---|---|---|---|---|---|---|
| avgtone | 19394 | float64 | 0 | 0.000000 | 22.151407 | 0 | |
| actor2geo_long | 5121 | float64 | 0 | 0.000000 | 5.849095 | 0 | |
| actor2geo_lat | 4904 | float64 | 0 | 0.000000 | 5.601243 | 0 | skewed: cap or drop outliers |
| actor1name | 1242 | float64 | 0 | 0.000000 | 1.418586 | 0 | skewed: cap or drop outliers |
| actor2name | 1077 | float64 | 0 | 0.000000 | 1.230126 | 0 | skewed: cap or drop outliers |
| actor1code | 804 | float64 | 0 | 0.000000 | 0.918311 | 0 | skewed: cap or drop outliers |
| actor2code | 715 | float64 | 0 | 0.000000 | 0.816658 | 0 | skewed: cap or drop outliers |
| eventcode | 194 | float64 | 0 | 0.000000 | 0.221583 | 0 | highly skewed: drop outliers or do box-cox transform |
| eventrootcode | 20 | float64 | 0 | 0.000000 | 0.022844 | 0 | |
| actor2geo_type_3.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor1geo_type_2.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor2geo_type_2.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor2geo_type_1.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor1geo_type_4.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | |
| actor2geo_type_4.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | |
| actor2geo_type_0.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
| actor1geo_type_3.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| isrootevent | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | |
| actor2geo_type_5.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
| actor1geo_type_1.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor1geo_type_0.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | skewed: cap or drop outliers |
| actor1geo_type_5.0 | 2 | float64 | 0 | 0.000000 | 0.002284 | 0 | highly skewed: drop outliers or do box-cox transform |
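AutoViz flags count-like columns such as `nummentions`, `numsources` and `eventcode` as highly skewed and suggests a Box-Cox transform. Box-Cox needs strictly positive inputs; for non-negative counts a simpler, swapped-in alternative is `log1p`. The sketch uses made-up counts to show the effect on skewness and is not part of the original workflow. (The same flags on 0/1 indicator columns such as `actor2geo_type_0.0` reflect class imbalance rather than outliers, so capping does not really apply to them.)

```python
import numpy as np
import pandas as pd

# Made-up, right-skewed mention counts: most events are mentioned once, a few very often
counts = pd.Series([1] * 50 + [2] * 20 + [3] * 10 + [5] * 5 + [10] * 3 + [50, 200])

raw_skew = counts.skew()
log_skew = np.log1p(counts).skew()  # log(1 + x) is also defined for zero counts

print(f"skewness before: {raw_skew:.2f}, after log1p: {log_skew:.2f}")
```

PyCaret's `setup` also has a `transformation` option for applying a power transform inside the pipeline; check the documentation for your version before relying on it.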

Use classification models to predict the type of an event (`quadclass`).

```python
data = pd.read_csv("230722.csv")

column_names = [
    'GlobalEventID', 'Day', 'MonthYear', 'Year', 'FractionDate',
    'Actor1Code', 'Actor1Name', 'Actor1CountryCode', 'Actor1KnownGroupCode',
    'Actor1EthnicCode', 'Actor1Religion1Code', 'Actor1Religion2Code',
    'Actor1Type1Code', 'Actor1Type2Code', 'Actor1Type3Code',
    'Actor2Code', 'Actor2Name', 'Actor2CountryCode', 'Actor2KnownGroupCode',
    'Actor2EthnicCode', 'Actor2Religion1Code', 'Actor2Religion2Code',
    'Actor2Type1Code', 'Actor2Type2Code', 'Actor2Type3Code',
    'IsRootEvent', 'EventCode', 'EventBaseCode', 'EventRootCode', 'QuadClass',
    'GoldsteinScale', 'NumMentions', 'NumSources', 'NumArticles', 'AvgTone',
    'Actor1Geo_Type', 'Actor1Geo_FullName', 'Actor1Geo_CountryCode',
    'Actor1Geo_ADM1Code', 'Actor1Geo_Lat', 'Actor1Geo_Long', 'Actor1Geo_FeatureID',
    'Actor2Geo_Type', 'Actor2Geo_FullName', 'Actor2Geo_CountryCode',
    'Actor2Geo_ADM1Code', 'Actor2Geo_Lat', 'Actor2Geo_Long', 'Actor2Geo_FeatureID',
    'DateAdded', 'SourceURL',
]

data = transform_data(data, column_names)
data = int_to_datetime(data, "day")
data = int_to_datetime(data, "dateadded")

# Let's use a small segment of the dataset (data_sm: 2022 events, data_lm: all other years)
data_sm = data[data['year'] == 2022]
data_lm = data[data['year'] != 2022]

# columns = data_sm.columns.tolist()
# columns.remove('quadclass')
# columns.append('quadclass')
# data_sm = data_sm[columns]

data_lm.head()
```



`quadclass` is the target and the remaining attributes are the features; the goal is to predict the type of each event.
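Because `quadclass` is imbalanced, it helps to know the majority-class rate before reading any scores: a model that always predicts the most frequent label already reaches that accuracy. The `Dummy Classifier` row in the comparison grid further down (accuracy 0.6036) plays this role. A minimal sketch on toy labels; on the real data you would pass `new_data['quadclass']` instead.

```python
from collections import Counter


def majority_baseline(labels):
    """Return the most frequent label and the accuracy of always predicting it."""
    counts = Counter(labels)
    label, count = counts.most_common(1)[0]
    return label, count / len(labels)


labels = ["Verbal Cooperation"] * 6 + ["Verbal Conflict"] * 2 + ["Material Conflict"] * 2
print(majority_baseline(labels))  # ('Verbal Cooperation', 0.6)
```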

```python
new_data = data_lm[[
    'fractiondate', 'year', 'monthyear',
    'actor1code', 'actor1name', 'actor1geo_type', 'actor1geo_long', 'actor1geo_lat',
    'actor2code', 'actor2name', 'actor2geo_type', 'actor2geo_long', 'actor2geo_lat',
    'isrootevent', 'eventcode', 'eventrootcode', 'goldsteinscale',
    'nummentions', 'numsources', 'avgtone', 'quadclass',
]]

new_data.head()
```


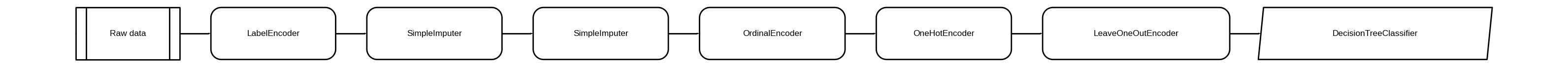

`setup()` initializes the training environment and creates the transformation pipeline.

```python
from pycaret.classification import ClassificationExperiment

cls_exp = ClassificationExperiment()
cls_exp.setup(data=new_data,
              target='quadclass',
              session_id=123,
              log_experiment='mlflow', experiment_name='gdelt_experiment')
```

| Description | Value |
|---|---|
| Session id | 123 |
| Target | quadclass |
| Target type | Multiclass |
| Target mapping | Material Conflict: 0, Material Cooperation: 1, Verbal Conflict: 2, Verbal Cooperation: 3 |
| Original data shape | (87552, 21) |
| Transformed data shape | (87552, 31) |
| Transformed train set shape | (61286, 31) |
| Transformed test set shape | (26266, 31) |
| Ordinal features | 1 |
| Numeric features | 13 |
| Categorical features | 7 |
| Rows with missing values | 40.5% |
| Preprocess | True |
| Imputation type | simple |
| Numeric imputation | mean |
| Categorical imputation | mode |
| Maximum one-hot encoding | 25 |
| Encoding method | None |
| Fold Generator | StratifiedKFold |
| Fold Number | 10 |
| CPU Jobs | -1 |
| Use GPU | False |
| Log Experiment | MlflowLogger |
| Experiment Name | gdelt_experiment |
| USI | 631c |
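The grid reports that 40.5% of rows contain at least one missing value and that gaps are filled by simple imputation: the mean for numeric columns and the mode for categorical ones. A pandas sketch of both ideas on a toy frame built from values seen earlier; it is not PyCaret's imputer, and in a real pipeline the mean and mode would be learned from the training split only.

```python
import numpy as np
import pandas as pd

# Toy frame with one gap in a numeric column and one in a categorical column
df = pd.DataFrame({
    "actor1geo_lat": [35.3859, np.nan, -25.7069, 60.0],
    "actor1code": ["AFR", "AFR", None, "RUS"],
    "avgtone": [1.118963, 0.790514, -0.491013, 0.790514],
})

# Share of rows with at least one missing value
rows_with_missing = df.isna().any(axis=1).mean()

# Simple imputation: column mean for numeric columns, most frequent value otherwise
imputed = df.copy()
for col in imputed.columns:
    if pd.api.types.is_numeric_dtype(imputed[col]):
        imputed[col] = imputed[col].fillna(imputed[col].mean())
    else:
        imputed[col] = imputed[col].fillna(imputed[col].mode().iloc[0])

print(f"{rows_with_missing:.1%}")  # 50.0%
print(imputed)
```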

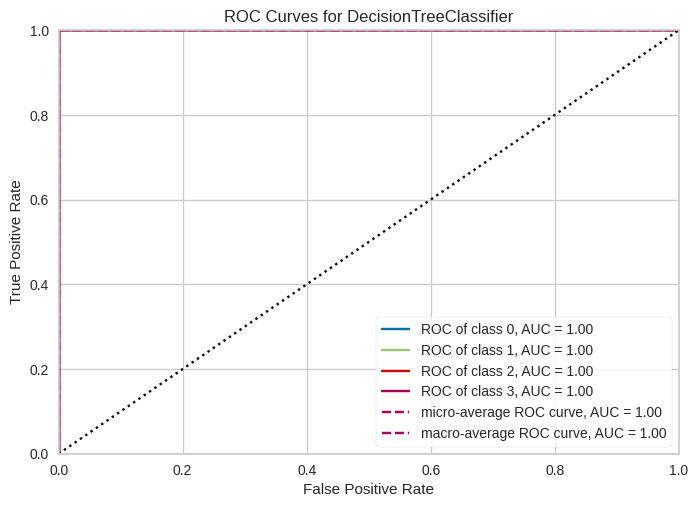

`compare_models()` trains and evaluates every estimator available in the model library using cross-validation. The score grid shows the average over the 10 folds, sorted by accuracy by default, and the best model is returned.
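The setup grid lists `StratifiedKFold` with 10 folds as the fold generator. Stratification keeps each fold's class mix close to the overall mix, which matters for an imbalanced target like `quadclass`. Below is a simplified pure-Python sketch of the idea with 3 folds on toy labels: it deals each class out round-robin and does not shuffle, so it is only an approximation of scikit-learn's `StratifiedKFold`, not a copy of it.

```python
from collections import defaultdict


def stratified_folds(labels, k):
    """Deal the row indices of each class round-robin into k folds (no shuffling)."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


labels = ["Verbal Cooperation"] * 6 + ["Material Conflict"] * 3 + ["Verbal Conflict"] * 3
folds = stratified_folds(labels, k=3)

# Each fold is used once for validation while the remaining folds form the training set
for i, valid_idx in enumerate(folds):
    train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
    print(f"fold {i}: validate on {valid_idx}, train on {len(train_idx)} rows")
```

Every fold ends up with two `Verbal Cooperation` rows and one of each other class, mirroring the 50/25/25 split of the whole toy set.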

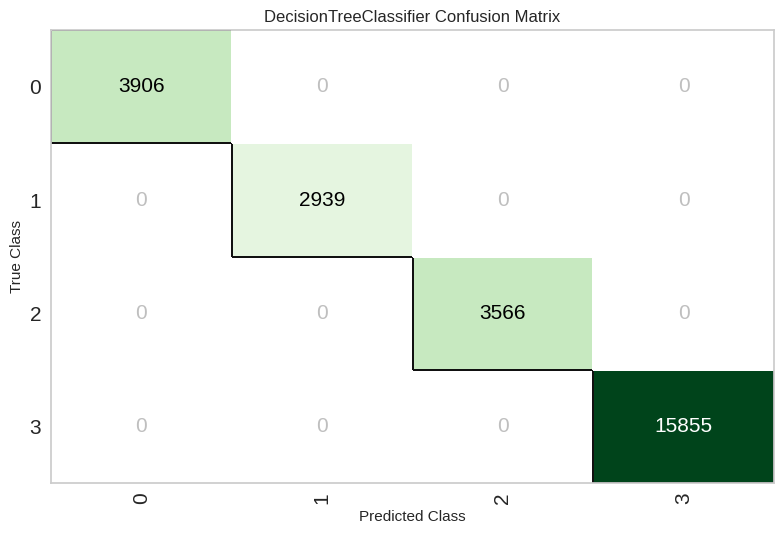

```python
best = cls_exp.compare_models()
```

| | Model | Accuracy | AUC | Recall | Prec. | F1 | Kappa | MCC | TT (Sec) |
|---|---|---|---|---|---|---|---|---|---|
| dt | Decision Tree Classifier | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.2530 |
| rf | Random Forest Classifier | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.3150 |
| gbc | Gradient Boosting Classifier | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.3320 |
| et | Extra Trees Classifier | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.3340 |
| xgboost | Extreme Gradient Boosting | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.2430 |
| lightgbm | Light Gradient Boosting Machine | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.3330 |
| catboost | CatBoost Classifier | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 0.2730 |
| knn | K Neighbors Classifier | 0.9886 | 0.9985 | 0.9886 | 0.9885 | 0.9885 | 0.9803 | 0.9803 | 1.5500 |
| lda | Linear Discriminant Analysis | 0.9741 | 0.9979 | 0.9741 | 0.9766 | 0.9734 | 0.9554 | 0.9562 | 0.2390 |
| nb | Naive Bayes | 0.9392 | 0.9888 | 0.9392 | 0.9397 | 0.9339 | 0.8936 | 0.8960 | 0.2320 |
| lr | Logistic Regression | 0.9098 | 0.9792 | 0.9098 | 0.8989 | 0.9021 | 0.8414 | 0.8440 | 0.4480 |
| ada | Ada Boost Classifier | 0.8881 | 0.9827 | 0.8881 | 0.8268 | 0.8485 | 0.8071 | 0.8252 | 0.2730 |
| ridge | Ridge Classifier | 0.8188 | 0.0000 | 0.8188 | 0.8539 | 0.7633 | 0.6703 | 0.6973 | 0.2050 |
| svm | SVM - Linear Kernel | 0.7028 | 0.0000 | 0.7028 | 0.6337 | 0.6357 | 0.4433 | 0.4887 | 0.1870 |
| qda | Quadratic Discriminant Analysis | 0.6721 | 0.9795 | 0.6721 | 0.8973 | 0.6889 | 0.5181 | 0.5880 | 0.2360 |
| dummy | Dummy Classifier | 0.6036 | 0.5000 | 0.6036 | 0.3644 | 0.4544 | 0.0000 | 0.0000 | 0.2030 |
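Seven models scoring exactly 1.0000 on every metric is more likely a sign of target leakage than of an easy problem. In the GDELT event codebook, `QuadClass` is derived from the CAMEO root code (01-05 verbal cooperation, 06-08 material cooperation, 09-13 verbal conflict, 14-20 material conflict), and both `eventrootcode` and `eventcode` are among the features; `goldsteinscale` is also assigned per event code. A tree can therefore read the label straight off a feature. The sketch checks how often `quadclass` agrees with the class implied by `eventrootcode`; the mapping is transcribed from the codebook, so verify it against the official document before relying on it.

```python
import pandas as pd


def quadclass_from_rootcode(root_code: int) -> str:
    """Quad class implied by a CAMEO event root code (1-20)."""
    if 1 <= root_code <= 5:
        return "Verbal Cooperation"
    if 6 <= root_code <= 8:
        return "Material Cooperation"
    if 9 <= root_code <= 13:
        return "Verbal Conflict"
    if 14 <= root_code <= 20:
        return "Material Conflict"
    raise ValueError(f"unexpected root code: {root_code}")


def rootcode_agreement(df: pd.DataFrame) -> float:
    """Share of rows whose quadclass equals the class implied by eventrootcode."""
    implied = df["eventrootcode"].astype(int).map(quadclass_from_rootcode)
    return float((implied == df["quadclass"]).mean())


# The five rows shown in new_data.head() above
sample = pd.DataFrame({
    "eventrootcode": [4, 4, 4, 4, 4],
    "quadclass": ["Verbal Cooperation"] * 5,
})
print(rootcode_agreement(sample))  # 1.0
```

If `rootcode_agreement(new_data)` comes out at or near 1.0, a fairer comparison would re-run setup without those columns, for example `cls_exp.setup(data=new_data, target='quadclass', session_id=123, ignore_features=['eventcode', 'eventrootcode', 'goldsteinscale'])`.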

```python
# Interactive widget for browsing evaluation plots (Jupyter only)
cls_exp.evaluate_model(best)

# ROC curves and AUC per class
cls_exp.plot_model(best, plot='auc')

# True vs. predicted classes
cls_exp.plot_model(best, plot='confusion_matrix')
```
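`plot='confusion_matrix'` draws true classes against predicted classes on the hold-out split. The same counts can be tabulated with `pd.crosstab`; the sketch below uses made-up labels and predictions (on real data the predictions could come from `cls_exp.predict_model(best)`).

```python
import pandas as pd

# Made-up hold-out labels and predictions, for illustration only
actual = pd.Series(
    ["Verbal Cooperation", "Verbal Conflict", "Material Conflict", "Verbal Cooperation"],
    name="actual",
)
predicted = pd.Series(
    ["Verbal Cooperation", "Verbal Cooperation", "Material Conflict", "Verbal Cooperation"],
    name="predicted",
)

# Rows are true classes, columns are predicted classes; the diagonal counts correct predictions
matrix = pd.crosstab(actual, predicted)
accuracy = (actual == predicted).mean()

print(matrix)
print(accuracy)  # 0.75
```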


Use regression models to predict the number of mentions.

```python
import pandas as pd

from utils import transform_data, int_to_datetime, get_null_values

pd.set_option('display.max_columns', None)
pd.set_option('display.max_rows', None)

data = pd.read_csv("230722.csv")

column_names = [
    'GlobalEventID', 'Day', 'MonthYear', 'Year', 'FractionDate',
    'Actor1Code', 'Actor1Name', 'Actor1CountryCode', 'Actor1KnownGroupCode',
    'Actor1EthnicCode', 'Actor1Religion1Code', 'Actor1Religion2Code',
    'Actor1Type1Code', 'Actor1Type2Code', 'Actor1Type3Code',
    'Actor2Code', 'Actor2Name', 'Actor2CountryCode', 'Actor2KnownGroupCode',
    'Actor2EthnicCode', 'Actor2Religion1Code', 'Actor2Religion2Code',
    'Actor2Type1Code', 'Actor2Type2Code', 'Actor2Type3Code',
    'IsRootEvent', 'EventCode', 'EventBaseCode', 'EventRootCode', 'QuadClass',
    'GoldsteinScale', 'NumMentions', 'NumSources', 'NumArticles', 'AvgTone',
    'Actor1Geo_Type', 'Actor1Geo_FullName', 'Actor1Geo_CountryCode',
    'Actor1Geo_ADM1Code', 'Actor1Geo_Lat', 'Actor1Geo_Long', 'Actor1Geo_FeatureID',
    'Actor2Geo_Type', 'Actor2Geo_FullName', 'Actor2Geo_CountryCode',
    'Actor2Geo_ADM1Code', 'Actor2Geo_Lat', 'Actor2Geo_Long', 'Actor2Geo_FeatureID',
    'DateAdded', 'SourceURL',
]

data = transform_data(data, column_names)
data = int_to_datetime(data, "day")
data = int_to_datetime(data, "dateadded")

# Let's use a small segment of the dataset (data_sm: 2022 events, data_lm: all other years)
data_sm = data[data['year'] == 2022]
data_lm = data[data['year'] != 2022]

# columns = data_sm.columns.tolist()
# columns.remove('quadclass')
# columns.append('quadclass')
# data_sm = data_sm[columns]

data_lm.head()
```


```python
new_data = data_lm[[
    'fractiondate', 'year', 'monthyear',
    'actor1code', 'actor1name', 'actor1geo_type', 'actor1geo_long', 'actor1geo_lat',
    'actor2code', 'actor2name', 'actor2geo_type', 'actor2geo_long', 'actor2geo_lat',
    'isrootevent', 'eventcode', 'eventrootcode', 'goldsteinscale',
    'nummentions', 'numsources', 'avgtone', 'quadclass',
]]

new_data.head()
```


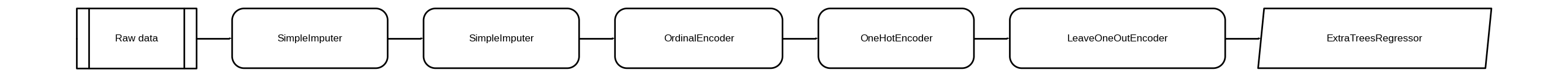

## 1. Import RegressionExperiment and initialize the class

from pycaret.regression import RegressionExperiment
reg_exp = RegressionExperiment()

reg_exp.setup(new_data, target='nummentions', session_id=223)

| | Description | Value |
|---|---|---|
| 0 | Session id | 223 |
| 1 | Target | nummentions |
| 2 | Target type | Regression |
| 3 | Original data shape | (87552, 21) |
| 4 | Transformed data shape | (87552, 34) |
| 5 | Transformed train set shape | (61286, 34) |
| 6 | Transformed test set shape | (26266, 34) |
| 7 | Ordinal features | 1 |
| 8 | Numeric features | 12 |
| 9 | Categorical features | 8 |
| 10 | Rows with missing values | 40.5% |
| 11 | Preprocess | True |
| 12 | Imputation type | simple |
| 13 | Numeric imputation | mean |
| 14 | Categorical imputation | mode |
| 15 | Maximum one-hot encoding | 25 |
| 16 | Encoding method | None |
| 17 | Fold Generator | KFold |
| 18 | Fold Number | 10 |
| 19 | CPU Jobs | -1 |
| 20 | Use GPU | False |
| 21 | Log Experiment | False |
| 22 | Experiment Name | reg-default-name |
| 23 | USI | c2ca |

### 2. Compare baseline models

best = reg_exp.compare_models()

| | Model | MAE | MSE | RMSE | R2 | RMSLE | MAPE | TT (Sec) |
|---|---|---|---|---|---|---|---|---|
| et | Extra Trees Regressor | 7.1072 | 971.7544 | 30.7220 | 0.6642 | 0.7049 | 1.3150 | 7.8420 |
| lr | Linear Regression | 7.4204 | 1125.7411 | 33.0324 | 0.6264 | 0.7079 | 1.0732 | 0.7970 |
| ridge | Ridge Regression | 7.4197 | 1125.7176 | 33.0320 | 0.6264 | 0.7076 | 1.0730 | 0.2770 |
| br | Bayesian Ridge | 7.4039 | 1125.7355 | 33.0322 | 0.6264 | 0.7066 | 1.0764 | 0.3320 |
| en | Elastic Net | 7.3649 | 1133.0863 | 33.1390 | 0.6242 | 0.6787 | 1.1509 | 0.3000 |
| lasso | Lasso Regression | 7.3717 | 1133.9014 | 33.1559 | 0.6235 | 0.6786 | 1.1485 | 0.2990 |
| llar | Lasso Least Angle Regression | 7.3717 | 1133.9015 | 33.1559 | 0.6235 | 0.6786 | 1.1485 | 0.2800 |
| omp | Orthogonal Matching Pursuit | 7.3207 | 1133.4790 | 33.1510 | 0.6235 | 0.6704 | 1.1371 | 0.2590 |
| gbr | Gradient Boosting Regressor | 7.4531 | 1182.7314 | 33.7733 | 0.6089 | 0.7222 | 1.3741 | 3.9150 |
| knn | K Neighbors Regressor | 8.2201 | 1251.2758 | 34.8129 | 0.5846 | 0.7963 | 1.3226 | 0.9470 |
| lightgbm | Light Gradient Boosting Machine | 8.4165 | 1328.7091 | 35.7868 | 0.5611 | 0.8174 | 1.7037 | 0.5730 |
| catboost | CatBoost Regressor | 9.2361 | 1324.4911 | 35.8178 | 0.5594 | 0.8975 | 2.0437 | 3.6830 |
| rf | Random Forest Regressor | 11.6646 | 1506.5636 | 38.2956 | 0.4919 | 1.1009 | 3.0682 | 10.9790 |
| xgboost | Extreme Gradient Boosting | 10.2014 | 1576.1854 | 39.2998 | 0.4577 | 0.9663 | 2.3305 | 2.8680 |
| dt | Decision Tree Regressor | 12.7570 | 2345.6060 | 47.8260 | 0.1788 | 1.1485 | 3.2058 | 0.4750 |
| huber | Huber Regressor | 9.5112 | 2676.3266 | 50.8367 | 0.1279 | 0.8288 | 1.1368 | 0.4730 |
| dummy | Dummy Regressor | 13.2721 | 3071.2452 | 54.4972 | -0.0001 | 1.1804 | 3.1352 | 0.2260 |
| par | Passive Aggressive Regressor | 10.4196 | 3129.2235 | 55.0295 | -0.0206 | 1.0203 | 1.1399 | 0.3020 |
| ada | AdaBoost Regressor | 84.4408 | 10654.0191 | 100.9776 | -2.9177 | 2.6662 | 27.1457 | 1.6290 |
| lar | Least Angle Regression | 34.3947 | 109968.5421 | 134.1696 | -43.2102 | 0.9558 | 11.8419 | 0.2800 |
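compare_models cross-validates every estimator with the 10-fold KFold generator listed in the setup table. The leaderboard is sorted by R2, highest first, which is PyCaret's default sort metric for regression.

- Extra Trees comes out on top (R2 ≈ 0.66) but is among the slowest to train (about 7.8 s).
- Linear Regression reaches R2 ≈ 0.63 in under a second.
- The Dummy Regressor predicts a constant and so anchors the baseline at R2 ≈ 0.

For reference, here is a plain-Python sketch of the textbook definitions of the reported error metrics. regression_metrics is a hypothetical helper. PyCaret's own implementations may differ in edge cases. For example, the clipping of negative values before RMSLE is an assumption of this sketch.

```python
import math


def regression_metrics(y_true: list[float], y_pred: list[float]) -> dict[str, float]:
    """Textbook versions of the metrics in the compare_models leaderboard."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mse = ss_res / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    # RMSLE works on log(1 + y); clipping negatives to 0 is an assumption of this sketch
    log_errors = [math.log1p(max(t, 0.0)) - math.log1p(max(p, 0.0))
                  for t, p in zip(y_true, y_pred)]
    return {
        "MAE": sum(abs(e) for e in errors) / n,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "R2": 1 - ss_res / ss_tot,  # 0 means no better than always predicting the mean
        "RMSLE": math.sqrt(sum(d * d for d in log_errors) / n),
        # MAPE divides by the true value, so it is undefined when any y_true is 0
        "MAPE": sum(abs(e) / abs(t) for e, t in zip(errors, y_true)) / n,
    }


metrics = regression_metrics([1, 2, 3], [1, 2, 4])
print({name: round(value, 4) for name, value in metrics.items()})
```

MSE and RMSE square the errors, so a few heavily mentioned events can dominate them. This helps explain why RMSE (about 30) is much larger than MAE (about 7) for every model in the table.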

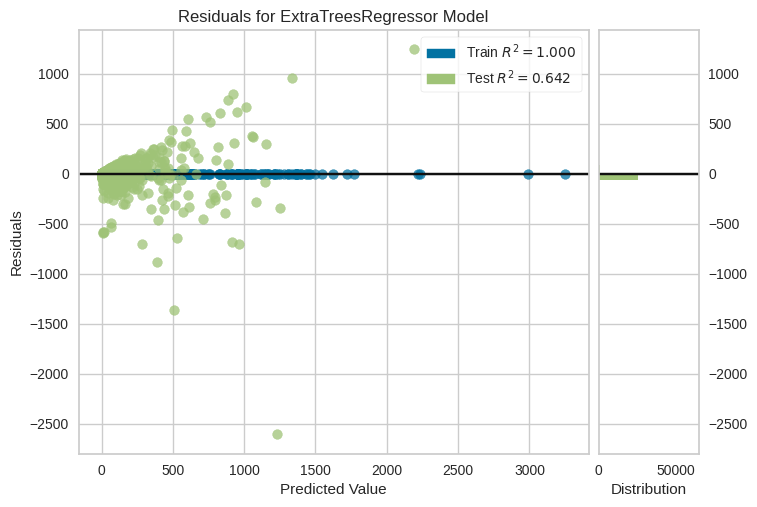

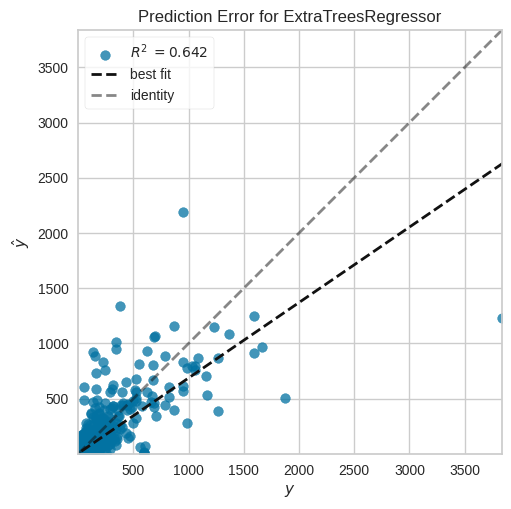

The plot_model function is used to analyze the performance of a trained model on the test set. It may require re-training the model in certain cases.

reg_exp.plot_model(best, plot='residuals')

reg_exp.plot_model(best, plot='error')

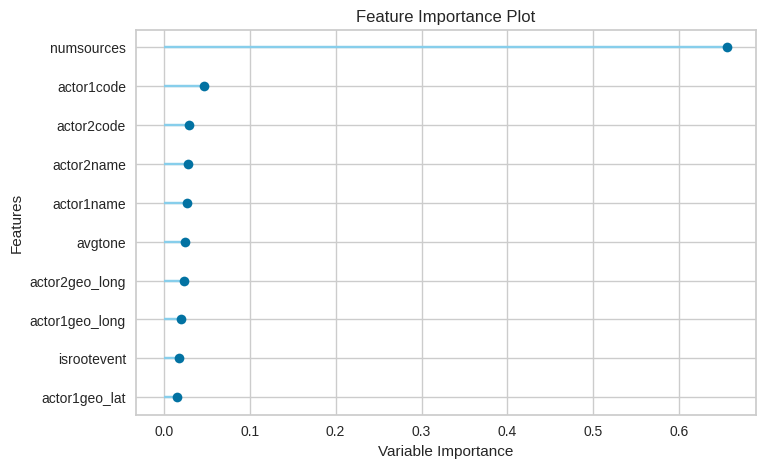

#### Plot feature importance

reg_exp.plot_model(best, plot='feature')

# check the docstring to see the available plots
# help(reg_exp.plot_model)

An alternative to the plot_model function is evaluate_model. It displays an interactive widget in a Jupyter notebook for switching between the same plots:

reg_exp.evaluate_model(best)

The predict_model function returns the input dataframe with the predictions added as a new prediction_label column. When data is None (the default), it scores the hold-out test set created by the setup function.

#### Predict on the test set

holdout_pred = reg_exp.predict_model(best)

| | Model | MAE | MSE | RMSE | R2 | RMSLE | MAPE |
|---|---|---|---|---|---|---|---|
| 0 | Extra Trees Regressor | 5.2705 | 1002.9253 | 31.6690 | 0.6425 | 0.4999 | 0.6888 |


| | fractiondate | year | monthyear | actor1code | actor1name | actor1geo_type | actor1geo_long | actor1geo_lat | actor2code | actor2name | ... | actor2geo_lat | isrootevent | eventcode | eventrootcode | goldsteinscale | numsources | avgtone | quadclass | nummentions | prediction_label |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 56143 | 2023.553345 | 2023 | 202307 | GBR | UNITED KINGDOM | 4 | -1.783330 | 51.700001 | GOV | PRINCE | ... | 51.700001 | 1 | 36 | 3 | 4.0 | 1 | 1.671733 | Verbal Cooperation | 2 | 2.58 |
| 62156 | 2023.553345 | 2023 | 202307 | USA | UNITED STATES | 3 | -118.327003 | 34.098301 | NaN | NaN | ... | -21.100000 | 1 | 10 | 1 | 0.0 | 1 | -0.250627 | Verbal Cooperation | 1 | 2.63 |
| 81585 | 2023.553345 | 2023 | 202307 | USA | TEXAS | 2 | -97.647499 | 31.106001 | NaN | NaN | ... | 25.683300 | 0 | 84 | 8 | 7.0 | 1 | 2.936857 | Material Cooperation | 13 | 5.28 |
| 61106 | 2023.553345 | 2023 | 202307 | MED | PUBLISHER | 0 | NaN | NaN | NaN | NaN | ... | NaN | 1 | 190 | 19 | -10.0 | 9 | 0.366028 | Material Conflict | 65 | 80.50 |
| 8558 | 2023.553345 | 2023 | 202307 | USA | PENNSYLVANIA | 2 | -77.264000 | 40.577301 | EDU | SCHOOL | ... | 40.577301 | 0 | 125 | 12 | -5.0 | 1 | -1.845820 | Verbal Conflict | 10 | 9.07 |

5 rows × 22 columns
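The residual plots produced earlier are built from the gap between the actual target and prediction_label. As a small illustration, the sketch below computes those residuals for the five hold-out rows printed above, using values copied from the nummentions and prediction_label columns. Here a residual is defined as actual minus predicted; plotting libraries differ on the sign convention. Five rows say nothing reliable about model quality. The point is only to show what the plot is made of.

```python
# (actual nummentions, prediction_label) copied from the hold-out rows shown above
holdout_rows = {
    56143: (2, 2.58),
    62156: (1, 2.63),
    81585: (13, 5.28),
    61106: (65, 80.50),
    8558: (10, 9.07),
}

# Residual defined here as actual - predicted: positive means under-prediction
residuals = {idx: actual - pred for idx, (actual, pred) in holdout_rows.items()}
for idx, res in residuals.items():
    print(f"row {idx}: residual {res:+.2f}")

mae_sample = sum(abs(r) for r in residuals.values()) / len(residuals)
print(f"mean absolute residual over these rows: {mae_sample:.2f}")
```

Row 61106 (65 mentions, predicted 80.50) contributes most of the error. Large-count events like this one are what drive up the squared-error metrics.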


Next, we use clustering models to group the events.

import pandas as pd

from utils import transform_data, int_to_datetime, get_null_values

pd.set_option('display.max_columns', None)
pd.set_option('display.max_rows', None)

data = pd.read_csv("230722.csv")

column_names = [
    'GlobalEventID', 'Day', 'MonthYear', 'Year', 'FractionDate',
    'Actor1Code', 'Actor1Name', 'Actor1CountryCode', 'Actor1KnownGroupCode',
    'Actor1EthnicCode', 'Actor1Religion1Code', 'Actor1Religion2Code',
    'Actor1Type1Code', 'Actor1Type2Code', 'Actor1Type3Code',
    'Actor2Code', 'Actor2Name', 'Actor2CountryCode', 'Actor2KnownGroupCode',
    'Actor2EthnicCode', 'Actor2Religion1Code', 'Actor2Religion2Code',
    'Actor2Type1Code', 'Actor2Type2Code', 'Actor2Type3Code',
    'IsRootEvent', 'EventCode', 'EventBaseCode', 'EventRootCode', 'QuadClass',
    'GoldsteinScale', 'NumMentions', 'NumSources', 'NumArticles', 'AvgTone',
    'Actor1Geo_Type', 'Actor1Geo_FullName', 'Actor1Geo_CountryCode',
    'Actor1Geo_ADM1Code', 'Actor1Geo_Lat', 'Actor1Geo_Long', 'Actor1Geo_FeatureID',
    'Actor2Geo_Type', 'Actor2Geo_FullName', 'Actor2Geo_CountryCode',
    'Actor2Geo_ADM1Code', 'Actor2Geo_Lat', 'Actor2Geo_Long', 'Actor2Geo_FeatureID',
    'DateAdded', 'SourceURL',
]

data = transform_data(data, column_names)
data = int_to_datetime(data, "day")
data = int_to_datetime(data, "dateadded")

# Use a small segment of the dataset (the 2022 events) for clustering
data_sm = data[data['year'] == 2022]
data_lm = data[data['year'] != 2022]

# columns = data_sm.columns.tolist()
# columns.remove('quadclass')
# columns.append('quadclass')
# data_sm = data_sm[columns]

data_lm.head()


| | globaleventid | day | monthyear | year | fractiondate | actor1code | actor1name | actor1countrycode | actor1knowngroupcode | actor1ethniccode | actor1religion1code | actor1religion2code | actor1type1code | actor1type2code | actor1type3code | actor2code | actor2name | actor2countrycode | actor2knowngroupcode | actor2ethniccode | actor2religion1code | actor2religion2code | actor2type1code | actor2type2code | actor2type3code | isrootevent | eventcode | eventbasecode | eventrootcode | quadclass | goldsteinscale | nummentions | numsources | numarticles | avgtone | actor1geo_type | actor1geo_fullname | actor1geo_countrycode | actor1geo_adm1code | actor1geo_lat | actor1geo_long | actor1geo_featureid | actor2geo_type | actor2geo_fullname | actor2geo_countrycode | actor2geo_adm1code | actor2geo_lat | actor2geo_long | actor2geo_featureid | dateadded | sourceurl |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 13 | 1116435795 | 2023-06-22 | 202306 | 2023 | 2023.4712 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | BUS | COMPANY | NaN | NaN | NaN | NaN | NaN | BUS | NaN | NaN | 0 | 40 | 40 | 4 | Verbal Cooperation | 1.0 | 5 | 1 | 5 | 1.118963 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | 3 | Fort Smith, Arkansas, United States | US | USAR | 35.3859 | -94.3985 | 76952 | 2023-07-22 | https://www.kuaf.com/show/ozarks-at-large/2023 ... |
| 14 | 1116435796 | 2023-06-22 | 202306 | 2023 | 2023.4712 | AFR | AFRICA | AFR | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 1 | 43 | 43 | 4 | Verbal Cooperation | 2.8 | 4 | 1 | 4 | 0.790514 | 1 | Russia | RS | RS | 60.0000 | 100.0000 | RS | 1 | Russia | RS | RS | 60.0000 | 100.0000 | RS | 2023-07-22 | https://www.beijingbulletin.com/news/273906652 ... |
| 15 | 1116435797 | 2023-06-22 | 202306 | 2023 | 2023.4712 | AFR | AFRICA | AFR | NaN | NaN | NaN | NaN | NaN | NaN | NaN | RUS | RUSSIAN | RUS | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 1 | 43 | 43 | 4 | Verbal Cooperation | 2.8 | 10 | 3 | 10 | -0.491013 | 4 | Pretoria, Gauteng, South Africa | SF | SF06 | -25.7069 | 28.2294 | -1273769 | 4 | Pretoria, Gauteng, South Africa | SF | SF06 | -25.7069 | 28.2294 | -1273769 | 2023-07-22 | https://www.beijingbulletin.com/news/273906652 ... |
| 16 | 1116435798 | 2023-06-22 | 202306 | 2023 | 2023.4712 | AFR | AFRICA | AFR | NaN | NaN | NaN | NaN | NaN | NaN | NaN | RUS | RUSSIAN | RUS | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 0 | 43 | 43 | 4 | Verbal Cooperation | 2.8 | 2 | 1 | 2 | 0.790514 | 1 | Russia | RS | RS | 60.0000 | 100.0000 | RS | 1 | Russia | RS | RS | 60.0000 | 100.0000 | RS | 2023-07-22 | https://www.beijingbulletin.com/news/273906652 ... |
| 17 | 1116435799 | 2023-06-22 | 202306 | 2023 | 2023.4712 | AFR | AFRICA | AFR | NaN | NaN | NaN | NaN | NaN | NaN | NaN | RUSGOV | RUSSIAN | RUS | NaN | NaN | NaN | NaN | GOV | NaN | NaN | 1 | 43 | 43 | 4 | Verbal Cooperation | 2.8 | 18 | 3 | 18 | -0.491013 | 4 | Pretoria, Gauteng, South Africa | SF | SF06 | -25.7069 | 28.2294 | -1273769 | 4 | Pretoria, Gauteng, South Africa | SF | SF06 | -25.7069 | 28.2294 | -1273769 | 2023-07-22 | https://www.beijingbulletin.com/news/273906652 ... |

new_data = data_sm[
    [
        'fractiondate',
        'year',
        'monthyear',
        'actor1code',
        'actor1name',
        'actor1geo_type',
        'actor1geo_long',
        'actor1geo_lat',
        'actor2code',
        'actor2name',
        'actor2geo_type',
        'actor2geo_long',
        'actor2geo_lat',
        'isrootevent',
        'eventcode',
        'eventrootcode',
        'goldsteinscale',
        'nummentions',
        'numsources',
        'avgtone',
        'quadclass',
    ]
]

new_data.head()


| | fractiondate | year | monthyear | actor1code | actor1name | actor1geo_type | actor1geo_long | actor1geo_lat | actor2code | actor2name | actor2geo_type | actor2geo_long | actor2geo_lat | isrootevent | eventcode | eventrootcode | goldsteinscale | nummentions | numsources | avgtone | quadclass |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2022.5534 | 2022 | 202207 | CAN | CANADA | 4 | -137.8170 | 66.8500 | CHRCTH | CATHOLIC | 4 | -137.8170 | 66.8500 | 1 | 20 | 2 | 3.0 | 13 | 2 | -3.829401 | Verbal Cooperation |
| 1 | 2022.5534 | 2022 | 202207 | CAN | CANADA | 4 | -137.8170 | 66.8500 | CHRCTH | CATHOLIC | 4 | -137.8170 | 66.8500 | 1 | 90 | 9 | -2.0 | 13 | 2 | -3.829401 | Material Cooperation |
| 2 | 2022.5534 | 2022 | 202207 | CVL | COMMUNITY | 4 | -137.8170 | 66.8500 | CHRCTH | CATHOLIC | 4 | -137.8170 | 66.8500 | 1 | 20 | 2 | 3.0 | 7 | 2 | -3.829401 | Verbal Cooperation |
| 3 | 2022.5534 | 2022 | 202207 | CVL | COMMUNITY | 4 | -137.8170 | 66.8500 | CHRCTH | CATHOLIC | 4 | -137.8170 | 66.8500 | 1 | 90 | 9 | -2.0 | 7 | 2 | -3.829401 | Material Cooperation |
| 4 | 2022.5534 | 2022 | 202207 | EDU | STUDENT | 3 | -77.6497 | 39.0834 | NaN | NaN | 3 | -77.6497 | 39.0834 | 0 | 110 | 11 | -2.0 | 10 | 1 | 0.393701 | Verbal Conflict |

from pycaret.clustering import ClusteringExperiment

clu_exp = ClusteringExperiment()
clu_exp.setup(data=new_data, session_id=234)

| | Description | Value |
|---|---|---|
| 0 | Session id | 234 |
| 1 | Original data shape | (832, 21) |
| 2 | Transformed data shape | (832, 662) |
| 3 | Ordinal features | 1 |
| 4 | Numeric features | 13 |
| 5 | Categorical features | 8 |
| 6 | Rows with missing values | 39.5% |
| 7 | Preprocess | True |
| 8 | Imputation type | simple |
| 9 | Numeric imputation | mean |
| 10 | Categorical imputation | mode |
| 11 | Maximum one-hot encoding | -1 |
| 12 | Encoding method | None |
| 13 | CPU Jobs | -1 |
| 14 | Use GPU | False |
| 15 | Log Experiment | False |
| 16 | Experiment Name | cluster-default-name |
| 17 | USI | 0098 |
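The clustering setup expands 21 columns to 662, while the regression setup only reached 34. The two setup tables suggest why. Here "Maximum one-hot encoding" is -1, which (assuming PyCaret's documented meaning of a negative value) means every categorical column is one-hot encoded regardless of cardinality. In the regression setup the limit was 25. As a result, high-cardinality columns such as actor1name and actor2name each contribute one column per distinct value. The sketch below shows the mechanism in plain Python on four rows copied from new_data.head(). one_hot is a hypothetical helper, not PyCaret's encoder.

```python
def one_hot(rows: list[dict[str, object]], categorical: list[str]) -> list[dict[str, object]]:
    """Replace each categorical column by one 0/1 column per distinct value."""
    levels = {col: sorted({str(r[col]) for r in rows}) for col in categorical}
    encoded = []
    for r in rows:
        # Keep non-categorical columns unchanged
        out = {k: v for k, v in r.items() if k not in categorical}
        for col in categorical:
            for level in levels[col]:
                out[f"{col}_{level}"] = int(str(r[col]) == level)
        encoded.append(out)
    return encoded


sample = [
    {"actor1code": "CAN", "quadclass": "Verbal Cooperation", "goldsteinscale": 3.0},
    {"actor1code": "CAN", "quadclass": "Material Cooperation", "goldsteinscale": -2.0},
    {"actor1code": "CVL", "quadclass": "Verbal Cooperation", "goldsteinscale": 3.0},
    {"actor1code": "EDU", "quadclass": "Verbal Conflict", "goldsteinscale": -2.0},
]
encoded = one_hot(sample, ["actor1code", "quadclass"])
print(len(sample[0]), "->", len(encoded[0]))  # → 3 -> 7
```

Three distinct actor codes and three distinct quad classes turn 3 columns into 1 + 3 + 3 = 7. Applied to all 832 rows and every categorical column, the same growth is what produces the wide transformed matrix. A wide, sparse matrix like this is worth keeping in mind when reading distance-based clustering metrics.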

The create_model function trains and evaluates the performance of a given model. The evaluated metrics can be accessed with the get_metrics function, and custom metrics can be added or removed with the add_metric and remove_metric functions. The available clustering models, with the IDs that create_model accepts, are:


| ID | Name | Reference |
|---|---|---|
| kmeans | K-Means Clustering | sklearn.cluster._kmeans.KMeans |
| ap | Affinity Propagation | sklearn.cluster._affinity_propagation.Affinity... |
| meanshift | Mean Shift Clustering | sklearn.cluster._mean_shift.MeanShift |
| sc | Spectral Clustering | sklearn.cluster._spectral.SpectralClustering |
| hclust | Agglomerative Clustering | sklearn.cluster._agglomerative.AgglomerativeCl... |
| dbscan | Density-Based Spatial Clustering | sklearn.cluster._dbscan.DBSCAN |
| optics | OPTICS Clustering | sklearn.cluster._optics.OPTICS |
| birch | Birch Clustering | sklearn.cluster._birch.Birch |
| kmodes | K-Modes Clustering | kmodes.kmodes.KModes |

kmeans = clu_exp.create_model('kmeans')

| | Silhouette | Calinski-Harabasz | Davies-Bouldin | Homogeneity | Rand Index | Completeness |
|---|---|---|---|---|---|---|
| 0 | 0.4875 | 2091.9609 | 0.5016 | 0 | 0 | 0 |
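According to the model table, PyCaret's kmeans wraps scikit-learn's KMeans, which by default runs Lloyd's algorithm. Lloyd's algorithm repeats two steps until the centroids stop moving:

1. Assign every point to its nearest centroid.
2. Move each centroid to the mean of its assigned points.

The plain-Python sketch below runs those two steps on four longitude/latitude pairs copied from the 2022 rows shown above. It is an illustration under simplifying assumptions:

- It uses only two features, whereas PyCaret clusters the full transformed matrix.
- It seeds with a deterministic farthest-first rule instead of scikit-learn's default k-means++ initialisation, so the result is reproducible.

farthest_first_init and kmeans are hypothetical helpers.

```python
import math


def farthest_first_init(points, k):
    """Deterministic seeding: start at points[0], then repeatedly add the farthest point."""
    centroids = [points[0]]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(nxt)
    return centroids


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm: alternate nearest-centroid assignment and mean update."""
    centroids = farthest_first_init(points, k)
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep the old centroid for an empty cluster
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return labels, centroids


points = [
    (-137.8170, 66.8500),  # CAN / CANADA (actor1 location)
    (-77.6497, 39.0834),   # EDU / STUDENT (actor1 location)
    (-95.3633, 29.7633),   # USAGOV / HOUSTON (actor1 location)
    (-97.6475, 31.1060),   # USA / TEXAS (actor2 location)
]
labels, centroids = kmeans(points, k=2)
print(labels)  # → [0, 1, 1, 1]
```

The isolated Canadian coordinate ends up alone in cluster 0, and the other three points share cluster 1. Because k-means can converge to different local optima depending on where it starts, scikit-learn's defaults use a randomised k-means++ start.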

# train a mean shift model
meanshift = clu_exp.create_model('meanshift')

| | Silhouette | Calinski-Harabasz | Davies-Bouldin | Homogeneity | Rand Index | Completeness |
|---|---|---|---|---|---|---|
| 0 | 0.2849 | 649.3671 | 0.4694 | 0 | 0 | 0 |
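The two models trade places depending on the metric:

- k-means wins on silhouette (0.4875 vs 0.2849) and Calinski-Harabasz (2091.96 vs 649.37), where higher is better.
- Mean shift has a slightly lower, and therefore better, Davies-Bouldin index (0.4694 vs 0.5016).

Homogeneity, Rand Index and Completeness compare cluster assignments against ground-truth labels. None were supplied here, which is presumably why they read 0.

The silhouette of a point compares a, its mean distance to the other members of its own cluster, with b, its smallest mean distance to the members of any other cluster: s = (b - a) / max(a, b). The score therefore lies between -1 and 1. Below is a from-scratch sketch with Euclidean distance. silhouette is a hypothetical helper; scikit-learn's silhouette_score computes the same average, including the convention that a point in a singleton cluster scores 0.

```python
import math


def silhouette(points, labels):
    """Mean silhouette coefficient with Euclidean distance (needs at least two clusters)."""
    scores = []
    for i, p in enumerate(points):
        # Group distances from point i to every other point by cluster label
        by_cluster = {}
        for j, q in enumerate(points):
            if j != i:
                by_cluster.setdefault(labels[j], []).append(math.dist(p, q))
        own = by_cluster.get(labels[i], [])
        if not own:  # singleton cluster: silhouette defined as 0
            scores.append(0.0)
            continue
        a = sum(own) / len(own)  # mean intra-cluster distance
        b = min(sum(d) / len(d) for lab, d in by_cluster.items() if lab != labels[i])
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


points = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(round(silhouette(points, [0, 0, 1, 1]), 3))  # well-separated split: close to 1
print(round(silhouette(points, [0, 1, 0, 1]), 3))  # split across the gap: negative
```

A value near 0.49, as k-means reaches here, suggests clusters that are reasonably but not sharply separated in the transformed feature space.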

The assign_model function assigns cluster labels to the training data, given a trained model.

kmeans_cluster = clu_exp.assign_model(kmeans)
kmeans_cluster


| | fractiondate | year | monthyear | actor1code | actor1name | actor1geo_type | actor1geo_long | actor1geo_lat | actor2code | actor2name | actor2geo_type | actor2geo_long | actor2geo_lat | isrootevent | eventcode | eventrootcode | goldsteinscale | nummentions | numsources | avgtone | quadclass | Cluster |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2022.553345 | 2022 | 202207 | CAN | CANADA | 4 | -137.817001 | 66.849998 | CHRCTH | CATHOLIC | 4 | -137.817001 | 66.849998 | 1 | 20 | 2 | 3.0 | 13 | 2 | -3.829401 | Verbal Cooperation | Cluster 2 |
| 1 | 2022.553345 | 2022 | 202207 | CAN | CANADA | 4 | -137.817001 | 66.849998 | CHRCTH | CATHOLIC | 4 | -137.817001 | 66.849998 | 1 | 90 | 9 | -2.0 | 13 | 2 | -3.829401 | Material Cooperation | Cluster 2 |
| 2 | 2022.553345 | 2022 | 202207 | CVL | COMMUNITY | 4 | -137.817001 | 66.849998 | CHRCTH | CATHOLIC | 4 | -137.817001 | 66.849998 | 1 | 20 | 2 | 3.0 | 7 | 2 | -3.829401 | Verbal Cooperation | Cluster 2 |
| 3 | 2022.553345 | 2022 | 202207 | CVL | COMMUNITY | 4 | -137.817001 | 66.849998 | CHRCTH | CATHOLIC | 4 | -137.817001 | 66.849998 | 1 | 90 | 9 | -2.0 | 7 | 2 | -3.829401 | Material Cooperation | Cluster 2 |
| 4 | 2022.553345 | 2022 | 202207 | EDU | STUDENT | 3 | -77.649696 | 39.083401 | NaN | NaN | 3 | -77.649696 | 39.083401 | 0 | 110 | 11 | -2.0 | 10 | 1 | 0.393701 | Verbal Conflict | Cluster 2 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 87979 | 2022.553345 | 2022 | 202207 | USAGOV | HOUSTON | 3 | -95.363297 | 29.763300 | USA | TEXAS | 3 | -95.363297 | 29.763300 | 1 | 112 | 11 | -2.0 | 3 | 1 | -10.795455 | Verbal Conflict | Cluster 2 |
| 87980 | 2022.553345 | 2022 | 202207 | USAGOV | UNITED STATES | 3 | -95.363297 | 29.763300 | USA | UNITED STATES | 2 | -97.647499 | 31.106001 | 1 | 112 | 11 | -2.0 | 1 | 1 | -10.795455 | Verbal Conflict | Cluster 2 |
| 87981 | 2022.553345 | 2022 | 202207 | USAGOV | HOUSTON | 3 | -95.363297 | 29.763300 | USA | TEXAS | 2 | -97.647499 | 31.106001 | 1 | 112 | 11 | -2.0 | 6 | 1 | -10.795455 | Verbal Conflict | Cluster 2 |
| 87982 | 2022.553345 | 2022 | 202207 | USAGOV | UNITED STATES | 3 | -95.363297 | 29.763300 | USA | HOUSTON | 2 | -97.647499 | 31.106001 | 1 | 180 | 18 | -9.0 | 6 | 1 | -10.795455 | Material Conflict | Cluster 2 |
| 87983 | 2022.553345 | 2022 | 202207 | USAGOV | HOUSTON | 3 | -95.363297 | 29.763300 | USA | TEXAS | 2 | -97.647499 | 31.106001 | 1 | 180 | 18 | -9.0 | 4 | 1 | -10.795455 | Material Conflict | Cluster 2 |

832 rows × 22 columns
