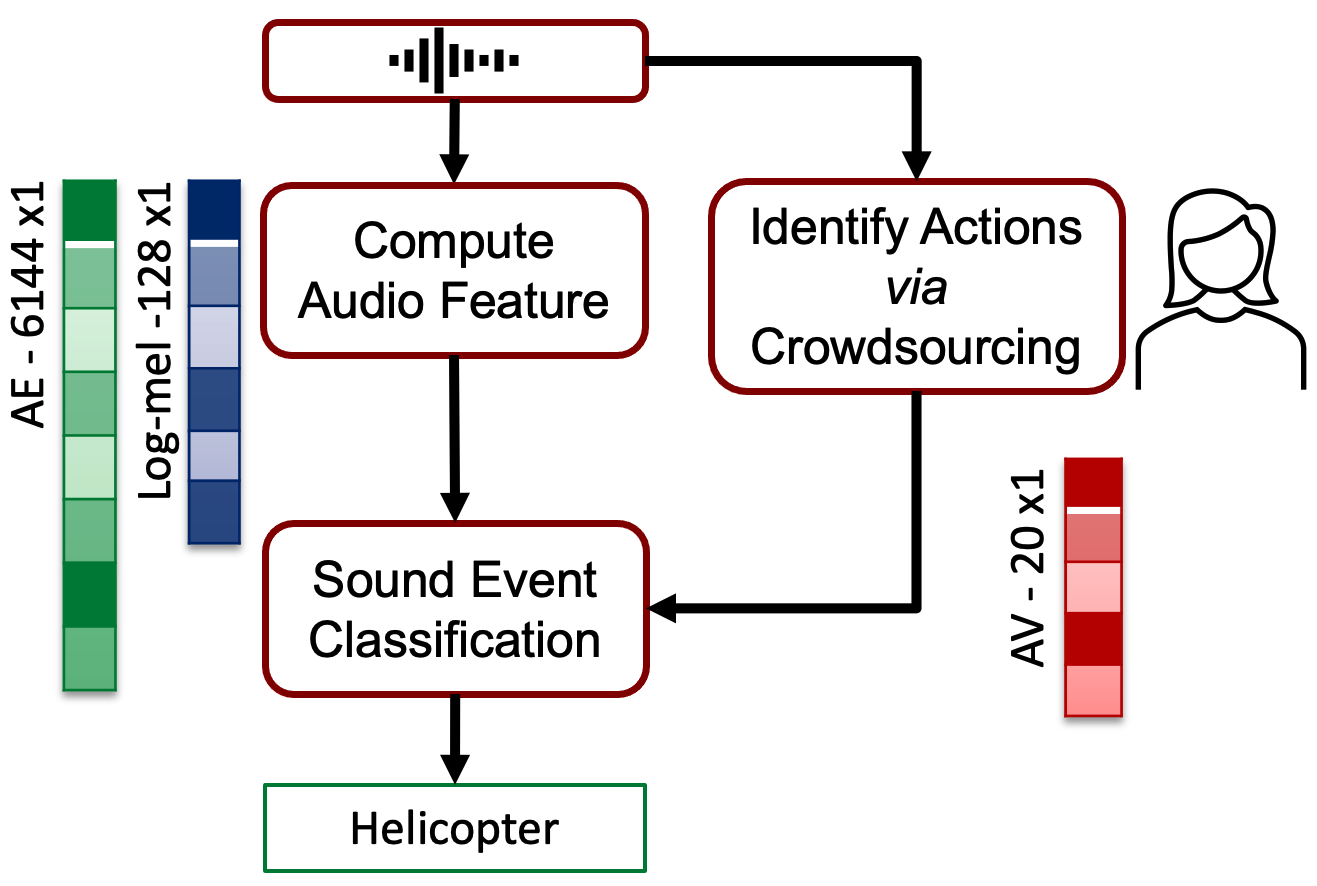

In Psychology, actions are paramount for how humans identify sound events. In Machine Learning (ML), action recognition achieves high accuracy; however, it has not been asked whether identifying actions can benefit Sound Event Classification (SEC), as opposed to mapping the audio directly to a sound event. Therefore, we propose a new Psychology-inspired approach for SEC that includes the identification of actions by human listeners. To achieve this goal, we used crowdsourcing to have listeners identify 20 actions that, in isolation or in combination, may have produced any of the 50 sound events in the well-studied ESC-50 dataset. The resulting annotations for each audio recording relate actions to a database of sound events for the first time. The annotations were used to create semantic representations called Action Vectors (AVs). We evaluated SEC by comparing the AVs with two types of audio features: log-mel spectrograms and state-of-the-art audio embeddings. Because audio features and AVs capture different abstractions of the acoustic content, we combined them and achieved an accuracy of 88%, one of the highest reported on ESC-50.

Typically, SEC takes the input audio, computes audio features, and assigns a class label. We propose adding an intermediate step in which listeners identify the actions in the audio. The identified actions are transformed into Action Vectors, which are then used for automatic SEC.
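A minimal sketch of how audio features and Action Vectors could be combined for classification, assuming both are already computed per recording and are fused by concatenation after L2 normalization (early fusion). The fusion scheme, the function names, and the nearest-centroid classifier are illustrative assumptions, not the method from the paper; the classifier is swapped in only to keep the sketch dependent on NumPy alone.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each row to unit L2 norm so neither feature type dominates the other."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)


def fuse(audio_feats: np.ndarray, action_vecs: np.ndarray) -> np.ndarray:
    """Hypothetical early fusion: concatenate normalized audio features and Action Vectors."""
    return np.concatenate([l2_normalize(audio_feats), l2_normalize(action_vecs)], axis=1)


class NearestCentroid:
    """Stand-in classifier (an assumption): assign each sample to the closest class mean."""

    def fit(self, x: np.ndarray, y: np.ndarray) -> "NearestCentroid":
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([x[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, x: np.ndarray) -> np.ndarray:
        # Pairwise Euclidean distances, shape (n_samples, n_classes).
        dists = np.linalg.norm(x[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[dists.argmin(axis=1)]
```

Normalizing each part before concatenation keeps a high-dimensional embedding from swamping the much shorter Action Vector; any other classifier that accepts a feature matrix could replace `NearestCentroid`.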

To relate actions to sound events, we chose a well-studied sound event dataset called ESC-50. We selected 20 actions that, in isolation or in combination, could have produced at least part of most of the 50 sound events.

|   |   |   |   |   |
|---|---|---|---|---|
| dripping | rolling | groaning | crumpling | wailing |
| splashing | scraping | gasping | blowing | calling |
| pouring | exhaling | singing | exploding | ringing |
| breaking | vibrating | tapping | rotating | sizzling |
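This README does not spell out how the crowdsourced annotations become Action Vectors. A minimal sketch, assuming each listener marks a subset of the 20 actions above for a recording and the AV stores the fraction of listeners who selected each action; the encoding used in the paper may differ. `ACTIONS`, `ACTION_INDEX`, and `action_vector` are hypothetical names.

```python
from typing import Iterable

import numpy as np

# The 20 actions from the table above, in row-major order.
ACTIONS: list[str] = [
    "dripping", "rolling", "groaning", "crumpling", "wailing",
    "splashing", "scraping", "gasping", "blowing", "calling",
    "pouring", "exhaling", "singing", "exploding", "ringing",
    "breaking", "vibrating", "tapping", "rotating", "sizzling",
]
ACTION_INDEX = {action: i for i, action in enumerate(ACTIONS)}


def action_vector(responses: Iterable[Iterable[str]]) -> np.ndarray:
    """Hypothetical AV: fraction of listeners who selected each action for one recording."""
    counts = np.zeros(len(ACTIONS))
    n_listeners = 0
    for selected in responses:
        n_listeners += 1
        for action in set(selected):  # count each action at most once per listener
            counts[ACTION_INDEX[action]] += 1
    return counts / n_listeners if n_listeners else counts
```

For example, if three of four listeners hear "dripping" in a recording, that entry of its AV is 0.75.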

The ESC-50 dataset is a labeled collection of 2000 audio recordings suitable for benchmarking environmental sound classification methods. It consists of 5-second recordings organized into 50 semantic classes (40 examples per class), loosely arranged into 5 major categories:

| Animals | Natural soundscapes & water sounds | Human, non-speech sounds | Interior/domestic sounds | Exterior/urban noises |
|---|---|---|---|---|
| Dog | Rain | Crying baby | Door knock | Helicopter |
| Rooster | Sea waves | Sneezing | Mouse click | Chainsaw |
| Pig | Crackling fire | Clapping | Keyboard typing | Siren |
| Cow | Crickets | Breathing | Door, wood creaks | Car horn |
| Frog | Chirping birds | Coughing | Can opening | Engine |
| Cat | Water drops | Footsteps | Washing machine | Train |
| Hen | Wind | Laughing | Vacuum cleaner | Church bells |
| Insects (flying) | Pouring water | Brushing teeth | Clock alarm | Airplane |
| Sheep | Toilet flush | Snoring | Clock tick | Fireworks |
| Crow | Thunderstorm | Drinking, sipping | Glass breaking | Hand saw |
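The category table above can be encoded as a lookup structure, for example to report accuracy per major category. The sketch below also checks the dataset arithmetic stated above (5 categories × 10 classes = 50 classes, × 40 clips = 2000 recordings, × 5 seconds each). All variable names are hypothetical and not part of the dataset's own tooling.

```python
# Class names copied from the ESC-50 category table above.
ESC50_CATEGORIES: dict[str, list[str]] = {
    "Animals": ["Dog", "Rooster", "Pig", "Cow", "Frog", "Cat", "Hen",
                "Insects (flying)", "Sheep", "Crow"],
    "Natural soundscapes & water sounds": ["Rain", "Sea waves", "Crackling fire", "Crickets",
                                           "Chirping birds", "Water drops", "Wind",
                                           "Pouring water", "Toilet flush", "Thunderstorm"],
    "Human, non-speech sounds": ["Crying baby", "Sneezing", "Clapping", "Breathing", "Coughing",
                                 "Footsteps", "Laughing", "Brushing teeth", "Snoring",
                                 "Drinking, sipping"],
    "Interior/domestic sounds": ["Door knock", "Mouse click", "Keyboard typing",
                                 "Door, wood creaks", "Can opening", "Washing machine",
                                 "Vacuum cleaner", "Clock alarm", "Clock tick", "Glass breaking"],
    "Exterior/urban noises": ["Helicopter", "Chainsaw", "Siren", "Car horn", "Engine", "Train",
                              "Church bells", "Airplane", "Fireworks", "Hand saw"],
}
CLIPS_PER_CLASS = 40
CLIP_SECONDS = 5

# Flat class -> category lookup.
CLASS_TO_CATEGORY = {c: cat for cat, classes in ESC50_CATEGORIES.items() for c in classes}

n_classes = len(CLASS_TO_CATEGORY)            # 5 categories x 10 classes = 50
n_clips = n_classes * CLIPS_PER_CLASS         # 50 x 40 = 2000 recordings
total_hours = n_clips * CLIP_SECONDS / 3600   # 10000 s, roughly 2.78 hours of audio
```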

If you find this research or the annotations useful, please cite the paper below:

Identifying Actions for Sound Event Classification

    @misc{elizalde2021identifying,
      title={Identifying Actions for Sound Event Classification},
      author={Benjamin Elizalde and Radu Revutchi and Samarjit Das and Bhiksha Raj and Ian Lane and Laurie M. Heller},
      year={2021},
      eprint={2104.12693},
      archivePrefix={arXiv},
      primaryClass={cs.SD}
    }

For more research on Sound Event Classification combining Machine Learning and Psychology, refer to:

Never-Ending Learning of Sounds - PhD thesis

    @phdthesis{elizalde2020never,
      title={Never-Ending Learning of Sounds},
      author={Elizalde, Benjamin},
      year={2020},
      school={Carnegie Mellon University}
    }

WASPAA 2021

Reviewer 1: "This paper presents a new idea of annotating sound events [...] is very interesting and highly novel."

Reviewer 2: "This is a strong paper [...] well motivated, organized and written."

Reviewer 3: "The idea of using semantic information to help the learning process is quite interesting."

Thanks to our funding sources: Bosch Research Pittsburgh, Sense Of Wonder Group, and CONACyT.