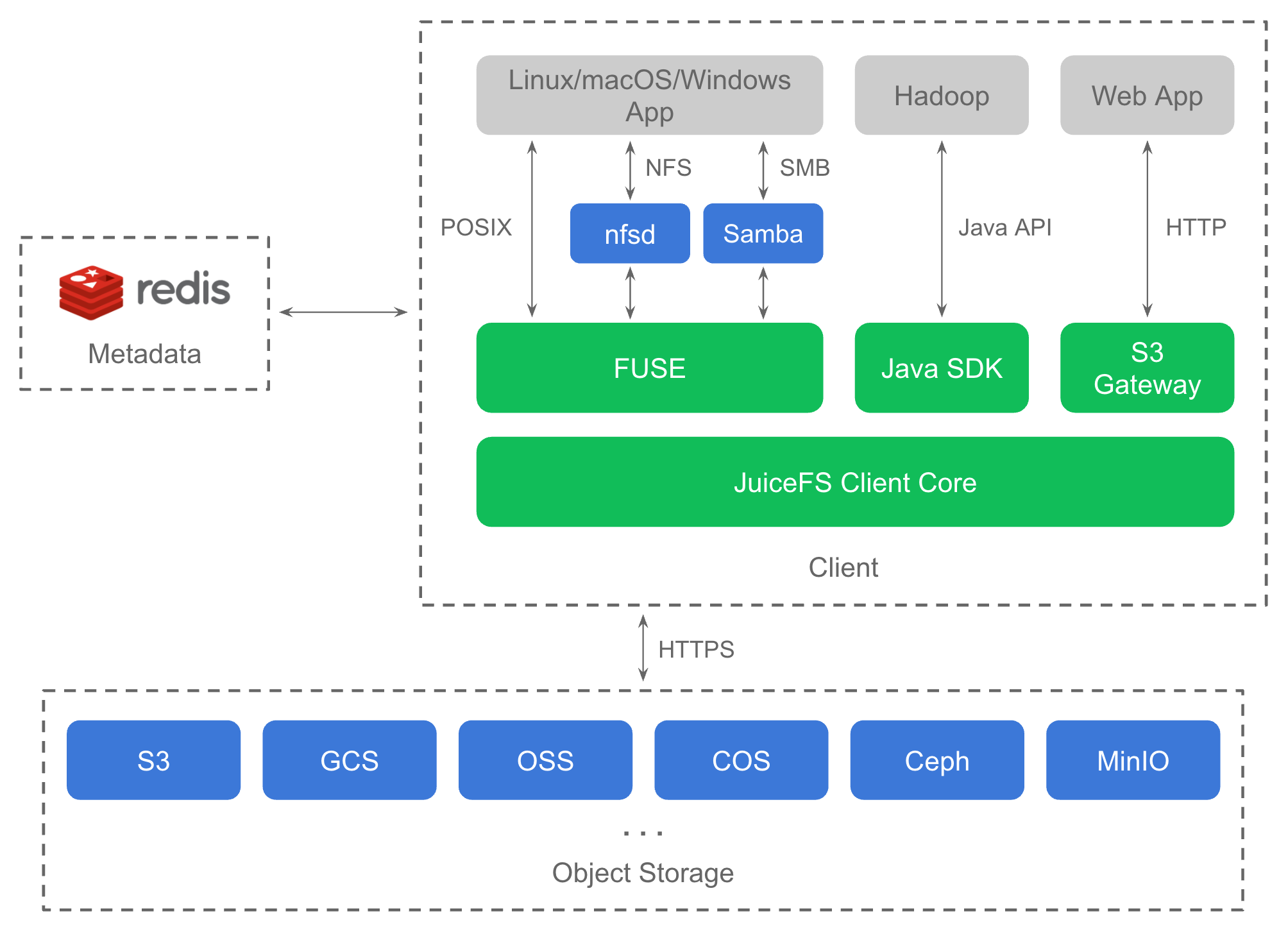

JuiceFS is an open-source POSIX file system built on top of Redis and object storage (e.g. Amazon S3), designed and optimized for cloud-native environments. By using the widely adopted Redis and S3 as persistent storage, JuiceFS serves as stateless middleware that lets many applications share data easily.

The highlighted features are:

- Fully POSIX-compatible: JuiceFS is a fully POSIX-compatible file system. Existing applications can work with it without any change. See the pjdfstest result below.

- Fully Hadoop-compatible: JuiceFS Hadoop Java SDK is compatible with Hadoop 2.x and Hadoop 3.x, as well as a variety of components in the Hadoop ecosystem.

- S3-compatible: JuiceFS S3 Gateway provides an S3-compatible interface.

- Outstanding Performance: The latency can be as low as a few milliseconds, and the throughput can scale almost without limit. See the benchmark result below.

- Cloud Native: JuiceFS provides a Kubernetes CSI driver for people who want to use JuiceFS in Kubernetes.

- Sharing: JuiceFS is a shared file storage that can be read and written by thousands of clients.

In addition, there are some other features:

- Global File Locks: JuiceFS supports both BSD locks (flock) and POSIX record locks (fcntl).

- Data Compression: JuiceFS supports using LZ4 or Zstandard to compress all your data.

- Data Encryption: JuiceFS supports data encryption in transit and at rest; read the guide for more information.

Architecture | Getting Started | Administration | POSIX Compatibility | Performance Benchmark | Supported Object Storage | Status | Roadmap | Reporting Issues | Contributing | Community | Usage Tracking | License | Credits | FAQ

JuiceFS relies on Redis to store file system metadata. Redis is a fast, open-source, in-memory key-value data store and is well suited to storing metadata. All file data is stored in object storage through the JuiceFS client.

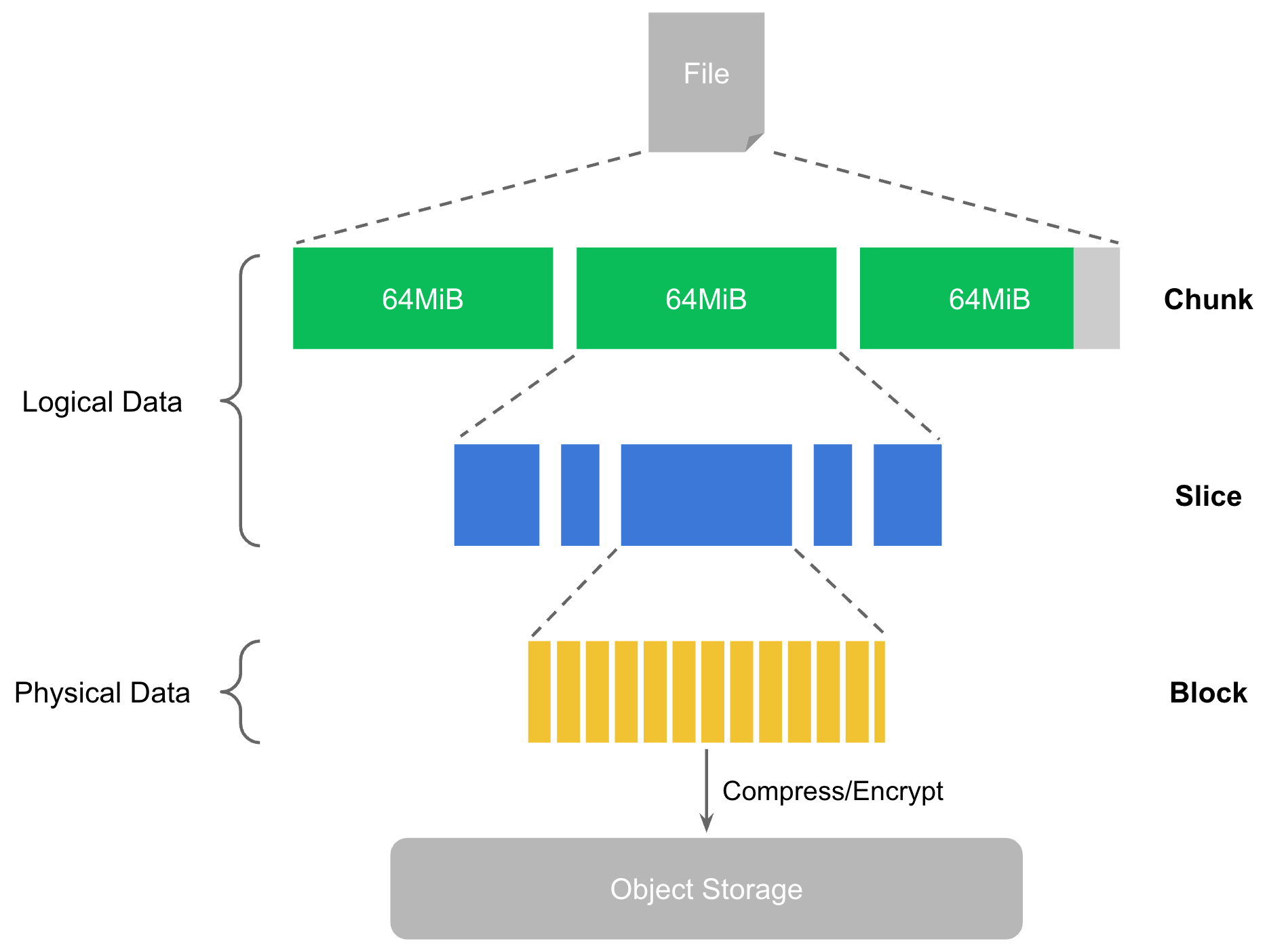

A file in JuiceFS is stored in three levels. The first level is the "Chunk". Each chunk has a fixed size, currently 64 MiB, which cannot be changed. The second level is the "Slice". Slice size is variable, and a chunk may have multiple slices. The third level is the "Block". Like a chunk, a block has a fixed size: 4 MiB by default, which you can change when formatting a volume (see the following section). Finally, each block is optionally compressed and encrypted, then stored in object storage.
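To make the size arithmetic concrete, here is a minimal Python sketch that maps a byte offset in a file to a chunk and a block. It only illustrates the 64 MiB and 4 MiB figures quoted above. The function names are hypothetical, and the block index assumes the simplest case, where the chunk was written as one contiguous slice starting at the beginning of the chunk. It does not describe how JuiceFS names or stores objects.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # fixed chunk size stated above (64 MiB)
BLOCK_SIZE = 4 * 1024 * 1024   # default block size stated above (4 MiB)


def locate(offset, chunk_size=CHUNK_SIZE, block_size=BLOCK_SIZE):
    """Return (chunk index, offset inside the chunk, block index inside the chunk).

    Assumption: the chunk holds a single slice that starts at chunk offset 0,
    so blocks line up with multiples of block_size inside the chunk.
    """
    if offset < 0:
        raise ValueError("offset must be non-negative")
    chunk_index, offset_in_chunk = divmod(offset, chunk_size)
    block_index = offset_in_chunk // block_size
    return chunk_index, offset_in_chunk, block_index


def chunk_count(file_size, chunk_size=CHUNK_SIZE):
    """Number of chunks needed to cover file_size bytes (ceiling division)."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    return -(-file_size // chunk_size)
```

For example, `locate(200 * 1024 * 1024)` places byte offset 200 MiB in chunk 3, 8 MiB into that chunk, which is block 2 under the single-slice assumption. A 1 GiB file spans `chunk_count(1024 * 1024 * 1024)` = 16 chunks.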

You can download precompiled binaries from the releases page.

To build from source, first install Go 1.14+ and GCC 5.4+, then run the following commands:

$ git clone https://github.com/juicedata/juicefs.git

$ cd juicefs

$ make

For users in China, it's recommended to set GOPROXY to speed up compilation, e.g. Goproxy China.

A Redis server (>= 2.8) is needed for metadata; please follow the Redis Quick Start.

macFUSE is also needed for macOS.

The last thing you need is object storage. There are many options for object storage; a local disk is the easiest one to get started with.

Assuming you have a Redis server running locally, you can create a volume called test that uses it to store metadata:

$ ./juicefs format localhost test

It will create a volume with default settings. If the Redis server is not running locally, its address can be specified as a URL, for example redis://username:password@host:6379/1. The password can also be supplied through the environment variable REDIS_PASSWORD to keep it out of the command line options.

Note: Since Redis 6.0.0, the AUTH command accepts two arguments, i.e. username and password. If you use Redis < 6.0.0, just omit the username in the URL, e.g. redis://:password@host:6379/1.
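The two URL shapes above can be assembled programmatically. The following Python sketch is one way to do it. The helper name `redis_meta_url` is hypothetical, and percent-encoding the credentials is standard URL practice rather than something this document specifies, so treat it as an assumption and check it against your client if your password contains reserved characters.

```python
from urllib.parse import quote


def redis_meta_url(host, port=6379, db=1, password=None, username=None):
    """Build a redis:// metadata URL in the forms shown above.

    With a username (Redis >= 6.0.0): redis://username:password@host:port/db
    Without one (Redis < 6.0.0):      redis://:password@host:port/db
    A username without a password is ignored.
    """
    auth = ""
    if password is not None:
        # Assumption: reserved characters in credentials are percent-encoded.
        user = quote(username, safe="") if username else ""
        auth = f"{user}:{quote(password, safe='')}@"
    return f"redis://{auth}{host}:{port}/{db}"
```

Calling `redis_meta_url("host", password="password")` yields the pre-6.0.0 form `redis://:password@host:6379/1`. Passing `username="username"` as well yields `redis://username:password@host:6379/1`.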

As JuiceFS relies on object storage to store data, you can specify an object storage using --storage, --bucket, --access-key and --secret-key. By default, it uses a local directory as the object store. For all the options, please see ./juicefs format -h.

For details about how to set up different object storage, please read the guide.

Once a volume is formatted, you can mount it to a directory, which is called the mount point.

$ ./juicefs mount -d localhost ~/jfs

After that you can access the volume just like a local directory.

To get all options, just run ./juicefs mount -h.

If you want to mount JuiceFS automatically at boot, please read the guide.

The command reference lists all options of each subcommand.

Using JuiceFS on Kubernetes is easy; give it a try.

If you want to use JuiceFS in Hadoop, check the Hadoop Java SDK.

- Redis Best Practices

- Mount JuiceFS at Boot

- How to Setup Object Storage

- Cache Management

- Fault Diagnosis and Analysis

- FUSE Mount Options

- Sync Account on Multiple Hosts

- Using JuiceFS on Kubernetes

- Using JuiceFS on Windows

- S3 Gateway

JuiceFS passed all of the 8813 tests in the latest pjdfstest.

All tests successful.

Test Summary Report

-------------------

/root/soft/pjdfstest/tests/chown/00.t (Wstat: 0 Tests: 1323 Failed: 0)

TODO passed: 693, 697, 708-709, 714-715, 729, 733

Files=235, Tests=8813, 233 wallclock secs ( 2.77 usr 0.38 sys + 2.57 cusr 3.93 csys = 9.65 CPU)

Result: PASS

Besides the things covered by pjdfstest, JuiceFS provides:

- Close-to-open consistency. Once a file is closed, a subsequent open and read can see the data written before the close. Within the same mount point, a read can see all data written before it.

- Rename and all other metadata operations are atomic, guaranteed by Redis transactions.

- Open files remain accessible after being unlinked from the same mount point.

- Mmap is supported (tested with FSx).

- Fallocate with punch hole support.

- Extended attributes (xattr).

- BSD locks (flock).

- POSIX record locks (fcntl).
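The two lock types in the list above are ordinary POSIX interfaces, so applications use them on a JuiceFS mount the same way as on a local file system. The sketch below uses Python's standard `fcntl` module (Unix only). The helper names are hypothetical and nothing in it is JuiceFS-specific. It only shows what taking a BSD lock and a POSIX record lock looks like from application code.

```python
import fcntl
import os


def append_line_locked(path, line):
    """Append one line while holding an exclusive BSD lock (flock) on the file."""
    with open(path, "a") as f:
        fcntl.flock(f, fcntl.LOCK_EX)  # blocks until the lock is granted
        try:
            f.write(line.rstrip("\n") + "\n")
            f.flush()
            os.fsync(f.fileno())  # push the data out before releasing the lock
        finally:
            fcntl.flock(f, fcntl.LOCK_UN)


def update_header_locked(path, data):
    """Overwrite the first len(data) bytes under a POSIX record lock (fcntl)."""
    with open(path, "r+b") as f:
        # Lock only the byte range [0, len(data)) instead of the whole file.
        fcntl.lockf(f, fcntl.LOCK_EX, len(data), 0, os.SEEK_SET)
        try:
            f.seek(0)
            f.write(data)
            f.flush()
        finally:
            fcntl.lockf(f, fcntl.LOCK_UN, len(data), 0, os.SEEK_SET)
```

On a mounted volume, the path would point below the mount point, for instance a hypothetical `/jfs/shared.log`. Writers on different clients that call `append_line_locked` on it would then take turns rather than interleave their writes.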

JuiceFS provides a subcommand to run a few basic benchmarks to understand how it works in your environment:

$ ./juicefs bench /jfs

Written a big file (1024.00 MiB): (113.67 MiB/s)

Read a big file (1024.00 MiB): (127.12 MiB/s)

Written 100 small files (102.40 KiB): 151.7 files/s, 6.6 ms for each file

Read 100 small files (102.40 KiB): 692.1 files/s, 1.4 ms for each file

Stated 100 files: 584.2 files/s, 1.7 ms for each file

FUSE operation: 19333, avg: 0.3 ms

Update meta: 436, avg: 1.4 ms

Put object: 356, avg: 4.8 ms

Get object first byte: 308, avg: 0.2 ms

Delete object: 356, avg: 0.2 ms

Used: 23.4s, CPU: 69.1%, MEM: 147.0 MiB

A sequential read/write benchmark was performed on JuiceFS, EFS and S3FS using fio; here is the result:

It shows JuiceFS can provide 10X more throughput than the other two; read more details.

A simple metadata benchmark was performed on JuiceFS, EFS and S3FS using mdtest; here is the result:

It shows JuiceFS can provide significantly more metadata IOPS than the other two; read more details.

There is a virtual file called .accesslog in the root of JuiceFS that shows all operations and the time they take, for example:

$ cat /jfs/.accesslog

2021.01.15 08:26:11.003330 [uid:0,gid:0,pid:4403] write (17669,8666,4993160): OK <0.000010>

2021.01.15 08:26:11.003473 [uid:0,gid:0,pid:4403] write (17675,198,997439): OK <0.000014>

2021.01.15 08:26:11.003616 [uid:0,gid:0,pid:4403] write (17666,390,951582): OK <0.000006>

The last number on each line is the time (in seconds) the operation took. You can use this directly to debug and analyze performance issues, or try ./juicefs profile /jfs to monitor real-time statistics. Please run ./juicefs profile -h or refer to here to learn more about this subcommand.
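For a quick offline look at such a log, the lines can be aggregated with a short script. The Python sketch below is based only on the three sample lines shown above. The regular expression is an assumption about the line layout, the name `summarize` is hypothetical, and lines that do not match are skipped rather than guessed at.

```python
import re
from collections import defaultdict

# Assumed layout, taken from the sample lines above:
# <date> <time> [uid:U,gid:G,pid:P] <op> (<args>): <result> <<seconds>>
LINE_RE = re.compile(
    r"^(?P<ts>\S+ \S+) "
    r"\[uid:(?P<uid>\d+),gid:(?P<gid>\d+),pid:(?P<pid>\d+)\] "
    r"(?P<op>\w+) \((?P<args>.*?)\): (?P<result>.*) "
    r"<(?P<seconds>\d+(?:\.\d+)?)>$"
)


def summarize(lines):
    """Return {operation: (count, average seconds)} for the lines that parse."""
    totals = defaultdict(lambda: [0, 0.0])
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # unknown layout: skip instead of guessing
        entry = totals[match["op"]]
        entry[0] += 1
        entry[1] += float(match["seconds"])
    return {op: (count, total / count) for op, (count, total) in totals.items()}


SAMPLE = [
    "2021.01.15 08:26:11.003330 [uid:0,gid:0,pid:4403] write (17669,8666,4993160): OK <0.000010>",
    "2021.01.15 08:26:11.003473 [uid:0,gid:0,pid:4403] write (17675,198,997439): OK <0.000014>",
    "2021.01.15 08:26:11.003616 [uid:0,gid:0,pid:4403] write (17666,390,951582): OK <0.000006>",
]
```

Running `summarize(SAMPLE)` reports three `write` operations averaging about 10 microseconds each. To analyze a real log, pass it an open file object instead, since the function accepts any iterable of lines.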

- Amazon S3

- Google Cloud Storage

- Azure Blob Storage

- Alibaba Cloud Object Storage Service (OSS)

- Tencent Cloud Object Storage (COS)

- QingStor Object Storage

- Ceph RGW

- MinIO

- Local disk

- Redis

For the detailed list, see this document.

JuiceFS is considered beta quality, and the storage format is not stabilized yet. It is not recommended for deployment in production environments. Please test it with your use cases and give us feedback.

- Stabilize storage format

- Other databases for metadata

We use GitHub Issues to track community-reported issues. You can also contact the community to get answers.

Thank you for your contribution! Please refer to CONTRIBUTING.md for more information.

You are welcome to join the Discussions and the Slack channel to connect with JuiceFS team members and other users.

By default, JuiceFS collects anonymous usage data. It only collects core metrics (e.g. version number); no user data or other sensitive data is collected. You can review the related code here.

This data helps us understand how the community is using this project. You can disable reporting with the command line option --no-usage-report:

$ ./juicefs mount --no-usage-report

JuiceFS is open-sourced under GNU AGPL v3.0, see LICENSE.

The design of JuiceFS was inspired by Google File System, HDFS and MooseFS, thanks to their great work.

JuiceFS already supports many object storage services; please check the list first. If the object storage you need is not listed but is compatible with S3, you can treat it as S3. Otherwise, try reporting an issue.

JuiceFS cannot use Redis in cluster mode. It uses transactions to guarantee the atomicity of metadata operations, and transactions are not well supported in cluster mode. Sentinel or another HA solution for Redis is needed instead.

See "Redis Best Practices" for more information.

For how JuiceFS differs from other file systems, see "Comparison with Others".

For more FAQs, please see the full list.