A repository for generating salient co-speech gestures. This is the repository for the paper "Salient Co-Speech Gesture Synthesizing with Discrete Motion Representation".

conda create -f environment.yml python=3.8- Download dataset to

data/baijia_all - Run

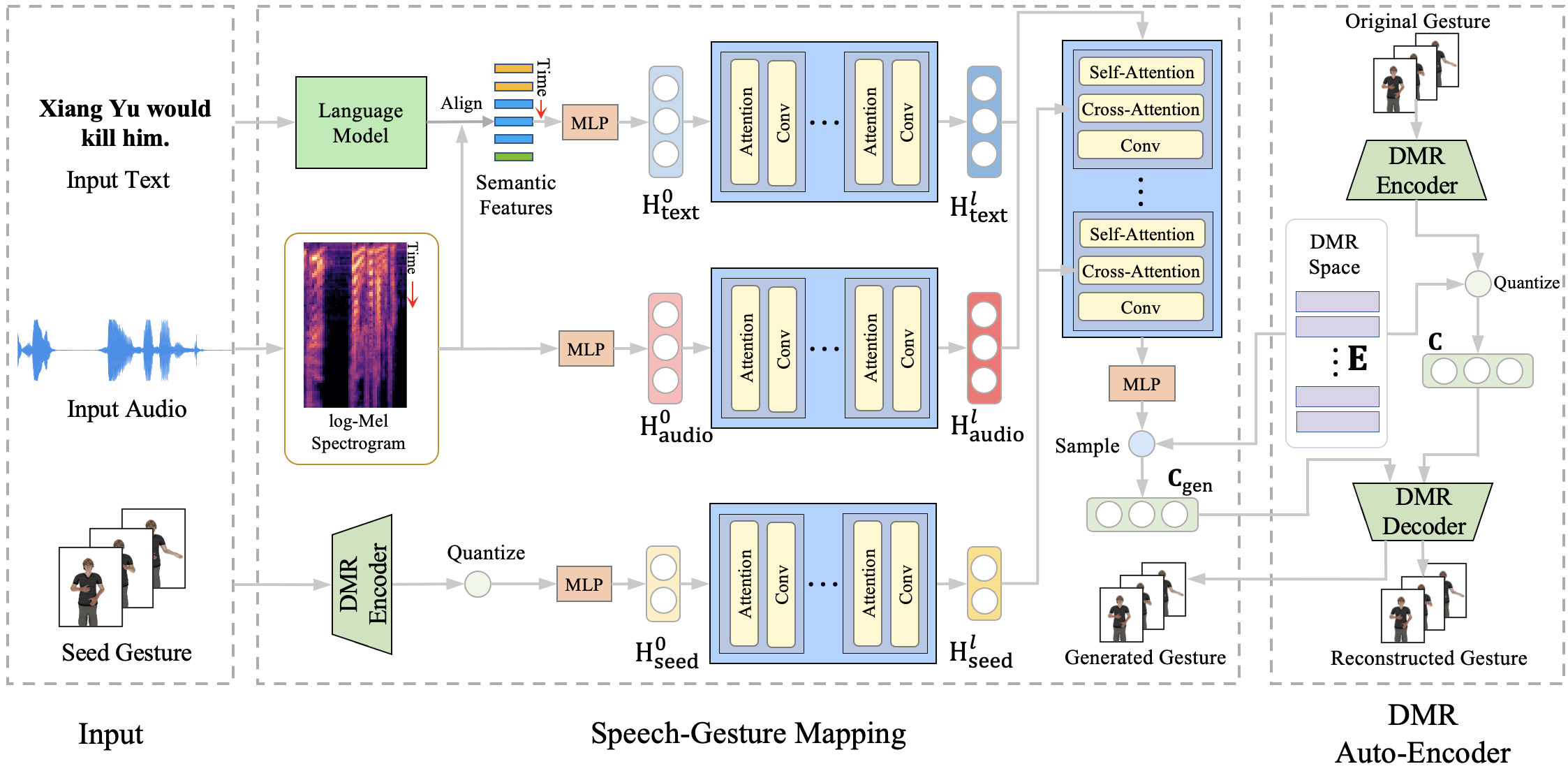

train_vae.py -c data/motion_vqvae.ymlto train Motion Auto-Encoder. - Run

train_transformer.py -c data/motion_vqvae.ymlto train Speech-Gesture Mapping network. - Run

evaluate_transformer.pyto evaluate FGD and MVD. - Run

inference_transformer.pyto evaluate SMS.
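The training and evaluation steps above can be chained in a small driver script. The following is a minimal sketch, assuming the scripts are launched with the current Python interpreter from the repository root. `PIPELINE`, `build_commands`, and `run_pipeline` are hypothetical names that are not part of the repository; the script names and arguments are copied from the steps above.

```python
import subprocess
import sys

# Commands copied from the steps above. Launching them through the current
# interpreter (sys.executable) is an assumption about how the scripts are run.
PIPELINE = [
    ["train_vae.py", "-c", "data/motion_vqvae.yml"],          # Motion Auto-Encoder
    ["train_transformer.py", "-c", "data/motion_vqvae.yml"],  # Speech-Gesture Mapping network
    ["evaluate_transformer.py"],                               # FGD and MVD
    ["inference_transformer.py"],                              # SMS
]


def build_commands(python=sys.executable):
    """Return the full argument list for each pipeline step, in order."""
    return [[python, *step] for step in PIPELINE]


def run_pipeline(dry_run=True):
    """Run each step in order, stopping at the first failure.

    With dry_run=True the commands are only printed, not executed.
    """
    for cmd in build_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a step exits non-zero.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_pipeline(dry_run=True)
```

Calling `run_pipeline(dry_run=False)` would execute the steps for real; the dry run is the default so the commands can be inspected first.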

We use lmdb to store the dataset. The data can be downloaded at link. After downloading, run `cat xa* > data.mdb` to concatenate the downloaded parts into a single `data.mdb` file.
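On systems without `cat`, the same concatenation can be done in Python. This is a minimal sketch using only the standard library; `merge_parts` is a hypothetical helper, not part of the repository, and it assumes the downloaded parts sort correctly by file name (as `xaa`, `xab`, ... do).

```python
from pathlib import Path
import shutil


def merge_parts(src_dir, out_path, pattern="xa*"):
    """Concatenate files matching `pattern` in name order, like `cat xa* > out_path`.

    Returns the list of part names in the order they were written.
    """
    parts = sorted(Path(src_dir).glob(pattern), key=lambda p: p.name)
    if not parts:
        raise FileNotFoundError(f"no files matching {pattern!r} in {src_dir}")
    with open(out_path, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                # Stream in chunks so large parts are not loaded into memory.
                shutil.copyfileobj(src, out)
    return [p.name for p in parts]
```

For example, `merge_parts(".", "data.mdb")` merges the parts found in the current directory.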

TODO