Official implementation (PyTorch) of the paper:

Evaluating Scalable Bayesian Deep Learning Methods for Robust Computer Vision, 2019 [[arXiv]](https://arxiv.org/abs/1906.01620) [project].

Fredrik K. Gustafsson, Martin Danelljan, Thomas B. Schön.

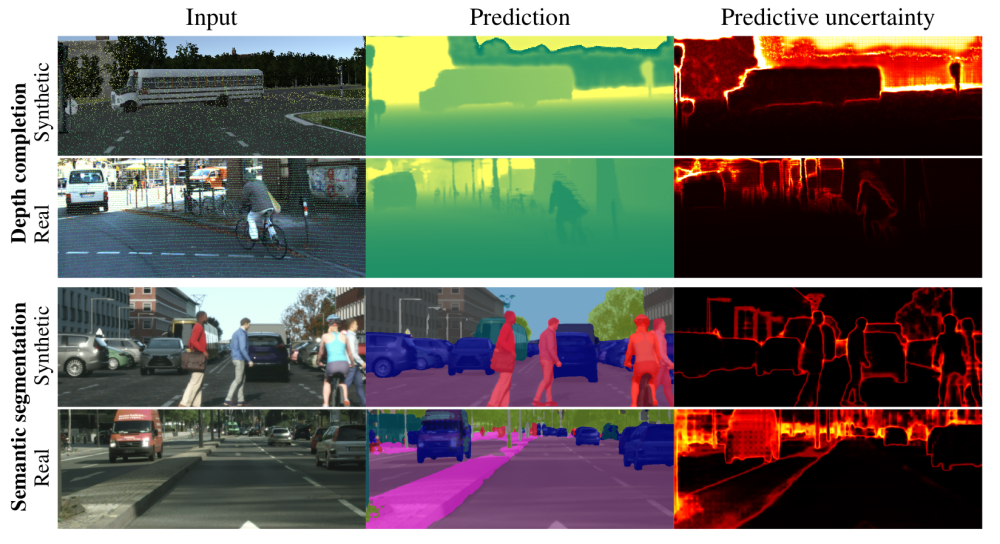

We propose an evaluation framework for predictive uncertainty estimation that is specifically designed to test the robustness required in real-world computer vision applications. Using the proposed framework, we perform an extensive comparison of the popular ensembling and MC-dropout methods on the tasks of depth completion and street-scene semantic segmentation. Our comparison suggests that ensembling consistently provides more reliable uncertainty estimates.

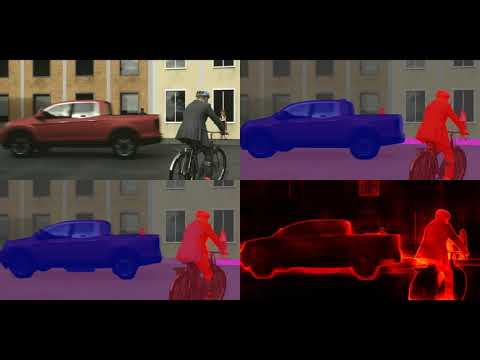

YouTube video with qualitative results:

If you find this work useful, please consider citing:

```bibtex
@article{gustafsson2019evaluating,
  title={Evaluating Scalable Bayesian Deep Learning Methods for Robust Computer Vision},
  author={Gustafsson, Fredrik K and Danelljan, Martin and Sch{\"o}n, Thomas B},
  journal={arXiv preprint arXiv:1906.01620},
  year={2019}
}
```

- The depthCompletion code is based on the implementation by @fangchangma found here.

- The segmentation code is based on the implementation by @PkuRainBow found here, which in turn utilizes inplace_abn by @mapillary.

The code has been tested on Ubuntu 16.04. Docker images are provided (see below).

depthCompletion:

- $ sudo docker pull fregu856/evaluating_bdl:pytorch_pytorch_0.4_cuda9_cudnn7_evaluating_bdl

- Create start_docker_image_toyProblems_depthCompletion.sh containing the following (my username on the server is fregu482, i.e., my home folder is /home/fregu482; you will have to modify this accordingly):

```bash
#!/bin/bash

# DEFAULT VALUES
GPUIDS="0"
NAME="toyProblems_depthCompletion_GPU"

NV_GPU="$GPUIDS" nvidia-docker run -it --rm --shm-size 12G \
        -p 5700:5700 \
        --name "$NAME""0" \
        -v /home/fregu482:/root/ \
        fregu856/evaluating_bdl:pytorch_pytorch_0.4_cuda9_cudnn7_evaluating_bdl bash
```

- Inside the container, /root/ is mapped to /home/fregu482, i.e., $ cd -- takes you to your regular home folder.

- To create more containers, change the lines GPUIDS="0", --name "$NAME""0" and -p 5700:5700 (each container needs its own GPU(s), a unique name and a free host port).

- General Docker usage:
    - To start the container: $ sudo sh start_docker_image_toyProblems_depthCompletion.sh
    - To commit changes to the image, open a new terminal window and run: $ sudo docker commit toyProblems_depthCompletion_GPU0 fregu856/evaluating_bdl:pytorch_pytorch_0.4_cuda9_cudnn7_evaluating_bdl
    - To detach from the container without stopping running code: Ctrl + P, Ctrl + Q
    - To get back into a running container: $ sudo docker attach toyProblems_depthCompletion_GPU0

- Download the KITTI depth completion dataset (data_depth_annotated.zip, data_depth_selection.zip and data_depth_velodyne.zip) and place it in /root/data/kitti_depth (/root/data/kitti_depth should contain the folders train, val and depth_selection).

- Create /root/data/kitti_raw and download the KITTI raw dataset using download_kitti_raw.py.

- Create /root/data/kitti_rgb. For each folder in /root/data/kitti_depth/train (e.g. 2011_09_26_drive_0001_sync), copy the corresponding folder in /root/data/kitti_raw and place it in /root/data/kitti_rgb/train.

- Download the virtual KITTI dataset (vkitti_1.3.1_depthgt.tar and vkitti_1.3.1_rgb.tar) and place it in /root/data/virtualkitti (/root/data/virtualkitti should contain the folders vkitti_1.3.1_depthgt and vkitti_1.3.1_rgb).

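The kitti_rgb step above can be scripted. The sketch below is a hypothetical helper, not part of the repository: it assumes that the drive folders fetched by download_kitti_raw.py (e.g. 2011_09_26_drive_0001_sync) sit somewhere below /root/data/kitti_raw, possibly inside a date folder, so it searches for matching folder names instead of relying on a fixed layout.

```python
import os
import shutil


def copy_rgb_folders(depth_train_dir, raw_dir, rgb_train_dir):
    """Hypothetical helper (not part of this repository).

    For every drive folder in depth_train_dir (e.g. 2011_09_26_drive_0001_sync),
    search raw_dir recursively for a folder with the same name and copy it into
    rgb_train_dir. Returns the sorted names of the folders that were found.
    """
    wanted = set(os.listdir(depth_train_dir))
    os.makedirs(rgb_train_dir, exist_ok=True)
    found = set()
    for root, dirs, _files in os.walk(raw_dir):
        for name in list(dirs):  # iterate over a copy, since dirs is pruned below
            if name in wanted and name not in found:
                dst = os.path.join(rgb_train_dir, name)
                if not os.path.exists(dst):  # skip folders copied in an earlier run
                    shutil.copytree(os.path.join(root, name), dst)
                found.add(name)
                dirs.remove(name)  # no need to walk inside a matched drive folder
    missing = sorted(wanted - found)
    if missing:
        print("Not found below", raw_dir, ":", missing)
    return sorted(found)


# Example, using the paths from the steps above:
# copy_rgb_folders("/root/data/kitti_depth/train", "/root/data/kitti_raw",
#                  "/root/data/kitti_rgb/train")
```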
- Example usage:

$ sudo sh start_docker_image_toyProblems_depthCompletion.sh

$ cd --

$ python evaluating_bdl/depthCompletion/ensembling_train_virtual.py

segmentation:

- $ sudo docker pull fregu856/evaluating_bdl:rainbowsecret_pytorch04_20180905_evaluating_bdl

- Create start_docker_image_segmentation.sh containing the following (my username on the server is fregu482, i.e., my home folder is /home/fregu482; you will have to modify this accordingly):

```bash
#!/bin/bash

# DEFAULT VALUES
GPUIDS="0,1"
NAME="segmentation_GPU"

NV_GPU="$GPUIDS" nvidia-docker run -it --rm --shm-size 12G \
        -p 5900:5900 \
        --name "$NAME""01" \
        -v /home/fregu482:/home/ \
        fregu856/evaluating_bdl:rainbowsecret_pytorch04_20180905_evaluating_bdl bash
```

- Inside the container, /home/ is mapped to /home/fregu482, i.e., $ cd home takes you to your regular home folder.

- To create more containers, change the lines GPUIDS="0,1", --name "$NAME""01" and -p 5900:5900 (each container needs its own GPU(s), a unique name and a free host port).

- General Docker usage:
    - To start the container: $ sudo sh start_docker_image_segmentation.sh
    - To commit changes to the image, open a new terminal window and run: $ sudo docker commit segmentation_GPU01 fregu856/evaluating_bdl:rainbowsecret_pytorch04_20180905_evaluating_bdl
    - To detach from the container without stopping running code: Ctrl + P, Ctrl + Q
    - To get back into a running container: $ sudo docker attach segmentation_GPU01

- Download resnet101-imagenet.pth from here and place it in the segmentation folder.

- Download the Cityscapes dataset and place it in /home/data/cityscapes (/home/data/cityscapes should contain the folders leftImg8bit and gtFine).

- Download the Synscapes dataset and place it in /home/data/synscapes (/home/data/synscapes should contain the folder img, which in turn should contain the folders rgb-2k and class).

- Run segmentation/utils/preprocess_synscapes.py. Among other things, this creates /home/data/synscapes_meta/train_img_ids.pkl and /home/data/synscapes_meta/val_img_ids.pkl by randomly selecting subsets of examples (the subsets used in the paper are found in segmentation/lists/synscapes).

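The random subset selection done by preprocess_synscapes.py can be illustrated as follows. This is a hypothetical sketch rather than the script itself: the function names, the seeding and the use of integer image IDs are assumptions, while the subset sizes (2975 train, 500 val) and the .pkl file names are taken from this README. To reproduce the paper, the lists in segmentation/lists/synscapes should be used instead of drawing new subsets.

```python
import os
import pickle
import random


def select_synscapes_subsets(img_ids, n_train=2975, n_val=500, seed=0):
    """Hypothetical sketch: draw disjoint random train/val subsets of image IDs."""
    if n_train + n_val > len(img_ids):
        raise ValueError("not enough images for the requested subset sizes")
    rng = random.Random(seed)  # local RNG, so the global random state is untouched
    shuffled = list(img_ids)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:n_train + n_val]


def save_subsets(train_ids, val_ids, meta_dir):
    """Write both ID lists as pickle files (file names as in this README)."""
    os.makedirs(meta_dir, exist_ok=True)
    for name, ids in (("train_img_ids.pkl", train_ids), ("val_img_ids.pkl", val_ids)):
        with open(os.path.join(meta_dir, name), "wb") as f:
            pickle.dump(ids, f)


# Example (assumes the Synscapes images are numbered 1..25000):
# train_ids, val_ids = select_synscapes_subsets(range(1, 25001))
# save_subsets(train_ids, val_ids, "/home/data/synscapes_meta")
```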
- Example usage:

$ sudo sh start_docker_image_segmentation.sh

$ cd home

$ /root/miniconda3/bin/python evaluating_bdl/segmentation/ensembling_train_syn.py

toyRegression:

- Example usage:

$ sudo sh start_docker_image_toyProblems_depthCompletion.sh

$ cd --

$ python evaluating_bdl/toyRegression/Ensemble-Adam/train.py

toyClassification:

- Example usage:

$ sudo sh start_docker_image_toyProblems_depthCompletion.sh

$ cd --

$ python evaluating_bdl/toyClassification/Ensemble-Adam/train.py

Documentation:

depthCompletion:

- Example usage:

$ sudo sh start_docker_image_toyProblems_depthCompletion.sh

$ cd --

$ python evaluating_bdl/depthCompletion/ensembling_train_virtual.py

criterion.py: Definitions of losses and metrics.

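criterion.py is only described as defining losses and metrics. As an illustration, the sketch below shows a heteroscedastic Gaussian negative log-likelihood, for a network that predicts a mean and a log-variance per pixel, together with the RMSE over pixels that have ground truth. That the depth completion loss has exactly this form, and that missing ground truth is encoded as 0, are assumptions; plain Python lists stand in for PyTorch tensors to keep the example self-contained.

```python
import math


def gaussian_nll(mean, log_var, target):
    """Average Gaussian negative log-likelihood over pixels with valid ground truth.

    Assumption: target == 0 marks a pixel without ground-truth depth.
    The constant 0.5 * log(2 * pi) is dropped, as is common for training losses.
    """
    terms = [
        0.5 * math.exp(-s) * (y - mu) ** 2 + 0.5 * s  # s = log(sigma^2)
        for mu, s, y in zip(mean, log_var, target)
        if y > 0
    ]
    return sum(terms) / len(terms)


def rmse(mean, target):
    """Root-mean-square error over pixels with valid ground truth (target > 0)."""
    sq = [(y - mu) ** 2 for mu, y in zip(mean, target) if y > 0]
    return math.sqrt(sum(sq) / len(sq))
```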
datasets.py: Definitions of datasets, for KITTI depth completion (KITTI) and virtualKITTI.

model.py: Definition of the CNN.

model_mcdropout.py: Definition of the CNN, with inserted dropout layers.

%%%%%

ensembling_train.py: Code for training M model.py models, on KITTI train.

ensembling_train_virtual.py: As above, but on virtualKITTI train.

ensembling_eval.py: Computes the loss and RMSE for a trained ensemble, on KITTI val. Also creates visualization images of the input data, ground truth, prediction and the estimated uncertainty.

ensembling_eval_virtual.py: As above, but on virtualKITTI val.

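A trained ensemble yields M predictions per pixel. Assuming each member outputs a Gaussian (a mean and a variance), a common way to combine them is to treat the ensemble as a uniformly weighted mixture and report the mixture's mean and variance; the variance then contains both the average predicted variance and the disagreement between members. Whether ensembling_eval.py combines the members exactly like this is an assumption of the sketch.

```python
def combine_gaussians(means, variances):
    """Mean and variance of a uniform mixture of M Gaussians (one pixel).

    means, variances: length-M sequences holding each member's prediction.
    """
    m = len(means)
    mix_mean = sum(means) / m
    # Law of total variance: average predicted variance + spread of the member means.
    mix_var = sum(variances) / m + sum((mu - mix_mean) ** 2 for mu in means) / m
    return mix_mean, mix_var
```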
ensembling_eval_auce.py: Computes the AUCE (mean +- std) for M = [1, 2, 4, 8, 16, 32] on KITTI val, based on a total of 33 trained ensemble members. Also creates calibration plots.

ensembling_eval_auce_virtual.py: As above, but on virtualKITTI val.

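The AUCE can be sketched as follows for Gaussian predictions: for each confidence level p, build the central prediction interval mu ± sigma · Φ⁻¹((1 + p)/2), measure the fraction of targets that fall inside it, and integrate |coverage - p| over p. The grid of 99 confidence levels, the trapezoidal rule and the plain-list inputs are assumptions; ensembling_eval_auce.py may discretize differently.

```python
from statistics import NormalDist


def auce(means, sigmas, targets, num_levels=99):
    """Area under the calibration error curve for Gaussian predictions (sketch)."""
    std_normal = NormalDist()
    step = 1.0 / (num_levels + 1)
    levels = [i * step for i in range(1, num_levels + 1)]  # p in (0, 1), endpoints excluded
    errors = []
    for p in levels:
        z = std_normal.inv_cdf(0.5 + p / 2)  # interval half-width in units of sigma
        inside = sum(abs(y - mu) <= z * s for mu, s, y in zip(means, sigmas, targets))
        coverage = inside / len(targets)
        errors.append(abs(coverage - p))
    # Trapezoidal rule over the uniform grid of confidence levels.
    return step * (sum(errors) - 0.5 * (errors[0] + errors[-1]))
```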
ensembling_eval_ause.py: Computes the AUSE (mean +- std) for M = [1, 2, 4, 8, 16, 32] on KITTI val, based on a total of 33 trained ensemble members. Also creates sparsification plots and sparsification error curves.

ensembling_eval_ause_virtual.py: As above, but on virtualKITTI val.

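A minimal AUSE sketch: pixels are removed in order of decreasing predicted sigma and the RMSE of the remaining pixels is recorded; the same is done for an oracle that removes pixels in order of decreasing true error; and the area between the two sparsification curves is integrated. The 100 removal steps, sigma as the uncertainty measure and the absence of any curve normalization are assumptions of this sketch, not details confirmed by this README.

```python
import math


def _rmse(errors):
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def sparsification_curve(errors, scores, num_steps):
    """RMSE of the pixels left after removing the fraction step / num_steps with
    the highest score, for step = 0, 1, ..., num_steps - 1."""
    n = len(errors)
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    curve = []
    for step in range(num_steps):
        kept = order[(step * n) // num_steps:]  # never empty for step < num_steps
        curve.append(_rmse([errors[i] for i in kept]))
    return curve


def ause(means, sigmas, targets, num_steps=100):
    """Area between the sparsification curve (ranked by predicted sigma) and the
    oracle curve (ranked by true absolute error), with RMSE as the error metric."""
    errors = [y - mu for mu, y in zip(means, targets)]
    by_sigma = sparsification_curve(errors, sigmas, num_steps)
    oracle = sparsification_curve(errors, [abs(e) for e in errors], num_steps)
    diff = [a - b for a, b in zip(by_sigma, oracle)]
    # Trapezoidal rule over the removed fractions 0, 1/num_steps, ..., (num_steps - 1)/num_steps.
    return (sum(diff) - 0.5 * (diff[0] + diff[-1])) / num_steps
```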
ensembling_eval_seq.py: Creates visualization videos (input data, ground truth, prediction and the estimated uncertainty) for a trained ensemble, on all sequences in KITTI val.

ensembling_eval_seq_virtual.py: As above, but on all sequences in virtualKITTI val.

%%%%%

mcdropout_train.py: Code for training M model_mcdropout.py models, on KITTI train.

mcdropout_train_virtual.py: As above, but on virtualKITTI train.

mcdropout_eval.py: Computes the loss and RMSE for a trained MC-dropout model with M forward passes, on KITTI val. Also creates visualization images of the input data, ground truth, prediction and the estimated uncertainty.

mcdropout_eval_virtual.py: As above, but on virtualKITTI val.

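MC-dropout obtains its M predictions by keeping dropout active at test time and running M stochastic forward passes. The sketch below illustrates this with a hypothetical one-layer linear model that applies inverted dropout (drop probability p, surviving units scaled by 1/(1 - p)) to its input, and summarizes the passes by their sample mean and variance. How mcdropout_eval.py enables dropout at test time, and whether it also combines per-pass predicted variances (which can be done like a uniform mixture, as for ensemble members), is not stated in this README.

```python
import random


def mc_dropout_predict(weights, x, p, num_passes, rng=None):
    """Hypothetical MC-dropout sketch for the linear model y = sum_j w_j * x_j.

    Dropout with drop probability p stays active at test time: each input unit
    is zeroed with probability p, otherwise scaled by 1 / (1 - p) (inverted
    dropout). Returns the sample mean and variance over num_passes passes.
    """
    rng = rng or random.Random()
    samples = []
    for _ in range(num_passes):
        y = 0.0
        for w, xj in zip(weights, x):
            if rng.random() >= p:  # unit kept in this forward pass
                y += w * xj / (1.0 - p)
        samples.append(y)
    mean = sum(samples) / num_passes
    var = sum((s - mean) ** 2 for s in samples) / num_passes
    return mean, var
```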
mcdropout_eval_auce.py: Computes the AUCE (mean +- std) for M = [1, 2, 4, 8, 16, 32] forward passes on KITTI val, based on a total of 16 trained MC-dropout models. Also creates calibration plots.

mcdropout_eval_auce_virtual.py: As above, but on virtualKITTI val.

mcdropout_eval_ause.py: Computes the AUSE (mean +- std) for M = [1, 2, 4, 8, 16, 32] forward passes on KITTI val, based on a total of 16 trained MC-dropout models. Also creates sparsification plots and sparsification error curves.

mcdropout_eval_ause_virtual.py: As above, but on virtualKITTI val.

mcdropout_eval_seq.py: Creates visualization videos (input data, ground truth, prediction and the estimated uncertainty) for a trained MC-dropout model with M forward passes, on all sequences in KITTI val.

mcdropout_eval_seq_virtual.py: As above, but on all sequences in virtualKITTI val.

segmentation:

- Example usage:

$ sudo sh start_docker_image_segmentation.sh

$ cd home

$ /root/miniconda3/bin/python evaluating_bdl/segmentation/ensembling_train_syn.py

models:

- model.py: Definition of the CNN.
- model_mcdropout.py: Definition of the CNN, with inserted dropout layers.
- aspp.py: Definition of the ASPP module.
- resnet_block.py: Definition of a ResNet block.

utils:

- criterion.py: Definition of the cross-entropy loss.
- preprocess_synscapes.py: Creates the Synscapes train (val) dataset by randomly selecting a subset of 2975 (500) examples, and resizes the labels to 1024 x 2048.
- utils.py: Helper functions for evaluation and visualization.

datasets.py: Definitions of datasets, for Cityscapes and Synscapes.

%%%%%

ensembling_train.py: Code for training M model.py models, on Cityscapes train.

ensembling_train_syn.py: As above, but on Synscapes train.

ensembling_eval.py: Computes the mIoU for a trained ensemble, on Cityscapes val. Also creates visualization images of the input image, ground truth, prediction and the estimated uncertainty.

ensembling_eval_syn.py: As above, but on Synscapes val.

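For segmentation, each ensemble member outputs a softmax distribution over the classes at every pixel. A standard way to combine them, sketched below for a single pixel, is to average the M distributions, predict the class with the highest averaged probability, and use the entropy of the averaged distribution as the uncertainty. That ensembling_eval.py uses entropy as its uncertainty measure is an assumption here.

```python
import math


def ensemble_pixel(member_probs):
    """Combine M per-pixel class distributions (each a list summing to 1).

    Returns (predicted class, averaged distribution, predictive entropy in nats).
    """
    m = len(member_probs)
    num_classes = len(member_probs[0])
    avg = [sum(probs[c] for probs in member_probs) / m for c in range(num_classes)]
    pred = max(range(num_classes), key=lambda c: avg[c])
    entropy = -sum(q * math.log(q) for q in avg if q > 0)  # 0 * log(0) treated as 0
    return pred, avg, entropy
```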
ensembling_eval_ause_ece.py: Computes the AUSE (mean +- std) and ECE (mean +- std) for M = [1, 2, 4, 8, 16] on Cityscapes val, based on a total of 26 trained ensemble members. Also creates sparsification plots, sparsification error curves and reliability diagrams.

ensembling_eval_ause_ece_syn.py: As above, but on Synscapes val.

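The ECE can be illustrated with the usual binned definition: pixels are grouped by confidence (the probability of the predicted class), and the ECE is the average over bins of |accuracy - mean confidence|, weighted by the fraction of pixels in each bin. The 10 equal-width bins in the sketch are an assumption; the number of bins used by ensembling_eval_ause_ece.py is not given in this README.

```python
def expected_calibration_error(confidences, predictions, labels, num_bins=10):
    """Binned ECE: sum over bins of (bin size / N) * |accuracy - mean confidence|."""
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, pred, label in zip(confidences, predictions, labels):
        # Confidence 1.0 goes into the last bin instead of an extra one.
        idx = min(int(conf * num_bins), num_bins - 1)
        bins[idx].append((conf, pred == label))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        accuracy = sum(correct for _, correct in members) / len(members)
        mean_conf = sum(conf for conf, _ in members) / len(members)
        ece += len(members) / n * abs(accuracy - mean_conf)
    return ece
```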
ensembling_eval_seq.py: Creates visualization videos (input image, prediction and the estimated uncertainty) for a trained ensemble, on the three demo sequences in Cityscapes.

ensembling_eval_seq_syn.py: Creates a visualization video (input image, ground truth, prediction and the estimated uncertainty) for a trained ensemble, showing the first 30 images in Synscapes val.

%%%%%

mcdropout_train.py: Code for training M model_mcdropout.py models, on Cityscapes train.

mcdropout_train_syn.py: As above, but on Synscapes train.

mcdropout_eval.py: Computes the mIoU for a trained MC-dropout model with M forward passes, on Cityscapes val. Also creates visualization images of the input image, ground truth, prediction and the estimated uncertainty.

mcdropout_eval_syn.py: As above, but on Synscapes val.

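The mIoU computed by ensembling_eval.py and mcdropout_eval.py is the mean over classes of the intersection over union between predicted and ground-truth labels. A minimal confusion-matrix sketch follows; using 255 as the ignore label (the common convention for Cityscapes train IDs) and skipping classes that occur in neither prediction nor ground truth are assumptions.

```python
def mean_iou(predictions, labels, num_classes, ignore_label=255):
    """Mean intersection over union from flat lists of predicted and true class IDs."""
    # confusion[t][p] counts pixels with true class t that were predicted as class p.
    confusion = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(predictions, labels):
        if t != ignore_label:
            confusion[t][p] += 1
    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp
        fp = sum(confusion[t][c] for t in range(num_classes)) - tp
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both prediction and ground truth
            ious.append(tp / denom)
    return sum(ious) / len(ious)
```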
mcdropout_eval_ause_ece.py: Computes the AUSE (mean +- std) and ECE (mean +- std) for M = [1, 2, 4, 8, 16] forward passes on Cityscapes val, based on a total of 8 trained MC-dropout models. Also creates sparsification plots, sparsification error curves and reliability diagrams.

mcdropout_eval_ause_ece_syn.py: As above, but on Synscapes val.

mcdropout_eval_seq.py: Creates visualization videos (input image, prediction and the estimated uncertainty) for a trained MC-dropout model with M forward passes, on the three demo sequences in Cityscapes.

mcdropout_eval_seq_syn.py: Creates a visualization video (input image, ground truth, prediction and the estimated uncertainty) for a trained MC-dropout model with M forward passes, showing the first 30 images in Synscapes val.

toyRegression:

- Example usage:

$ sudo sh start_docker_image_toyProblems_depthCompletion.sh

$ cd --

$ python evaluating_bdl/toyRegression/Ensemble-Adam/train.py

Ensemble-Adam:

- Ensembling by minimizing the MLE objective using Adam and random initialization.
- datasets.py: Definition of the training dataset.
- model.py: Definition of the feed-forward neural network.
- train.py: Code for training M models.
- eval.py: Creates a plot of the obtained predictive distribution and the HMC "ground truth" predictive distribution, for a set value of M. Also creates histograms for the model parameters.
- eval_plots.py: Creates plots of the obtained predictive distributions for different values of M.
- eval_kl_div.py: Computes the KL divergence between the obtained predictive distribution and the HMC "ground truth", for different values of M.

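eval_kl_div.py compares the obtained predictive distribution with the HMC "ground truth". Assuming both predictive densities are available on a common, evenly spaced grid of y values (for example as normalized histograms of samples at a given input x), KL(p || q) can be approximated by a Riemann sum of p log(p/q), as sketched below. The discretization, the KL direction and the helper names are assumptions rather than details taken from the script.

```python
import math


def kl_divergence_on_grid(p_density, q_density, dy):
    """Approximate KL(p || q) = integral of p(y) * log(p(y) / q(y)) dy.

    p_density, q_density: density values on the same evenly spaced grid (spacing dy).
    Grid points where p is 0 contribute nothing; q must be > 0 wherever p > 0.
    """
    return sum(p * math.log(p / q) * dy for p, q in zip(p_density, q_density) if p > 0)


def gaussian_pdf(y, mean, std):
    """Density of N(mean, std^2) at y, handy for testing the grid approximation."""
    return math.exp(-0.5 * ((y - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))
```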
Ensemble-MAP-Adam:

- Ensembling by minimizing the MAP objective using Adam and random initialization.

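The MLE and MAP objectives differ only by a prior term: the MAP objective is the negative log-posterior, i.e. the negative log-likelihood minus log p(θ). With a zero-mean isotropic Gaussian prior N(0, σ_p² I), which is an assumption here since the prior is not specified in this README, this amounts to adding an L2 penalty ||θ||² / (2σ_p²), as sketched below for a Gaussian likelihood with a predicted mean and log-variance.

```python
import math


def negative_log_likelihood(means, log_vars, targets):
    """Gaussian NLL summed over the training set (the MLE objective)."""
    return sum(
        0.5 * math.exp(-s) * (y - mu) ** 2 + 0.5 * s + 0.5 * math.log(2 * math.pi)
        for mu, s, y in zip(means, log_vars, targets)
    )


def map_objective(means, log_vars, targets, params, prior_std):
    """Negative log-posterior up to a constant: NLL + ||theta||^2 / (2 * prior_std^2).

    Assumption: prior p(theta) = N(0, prior_std^2 * I) over all network parameters,
    given here as a flat list of floats.
    """
    l2 = sum(w * w for w in params)
    return negative_log_likelihood(means, log_vars, targets) + l2 / (2 * prior_std ** 2)
```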
Ensemble-MAP-Adam-Fixed:

- Ensembling by minimizing the MAP objective using Adam and NO random initialization.

Ensemble-MAP-SGD:

- Ensembling by minimizing the MAP objective using SGD and random initialization.

Ensemble-MAP-SGDMOM:

- Ensembling by minimizing the MAP objective using SGDMOM (SGD with momentum) and random initialization.

MC-Dropout-MAP-02-Adam:

- MC-dropout by minimizing the MAP objective using Adam, p=0.2.

MC-Dropout-MAP-02-SGD:

- MC-dropout by minimizing the MAP objective using SGD, p=0.2.

MC-Dropout-MAP-02-SGDMOM:

- MC-dropout by minimizing the MAP objective using SGDMOM, p=0.2.

SGLD-256:

- Implementation of SGLD, trained for 256 times longer than each member of an ensemble.

SGLD-64:

- Implementation of SGLD, trained for 64 times longer than each member of an ensemble.

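SGLD turns gradient descent on the negative log-posterior U(θ) into a sampler by adding Gaussian noise whose variance equals the step size ε: θ ← θ - (ε/2)∇U(θ) + η, with η ~ N(0, ε). The sketch below applies this update to a hypothetical 1D Gaussian posterior with exact gradients (SGLD proper uses minibatch gradient estimates); the target, step size and burn-in are illustrative and unrelated to the settings of SGLD-64 and SGLD-256.

```python
import math
import random


def sgld_samples(grad_u, theta0, step_size, num_steps, burn_in, rng=None):
    """SGLD for a scalar parameter: theta <- theta - (eps / 2) * grad_u(theta) + eta,
    eta ~ N(0, eps). Returns the iterates after the first burn_in steps.

    grad_u: gradient of U(theta) = -log p(theta | data) up to a constant. Here it is
    exact; in SGLD proper it is a minibatch estimate.
    """
    rng = rng or random.Random()
    theta = theta0
    samples = []
    for t in range(num_steps):
        noise = rng.gauss(0.0, math.sqrt(step_size))  # standard deviation sqrt(eps)
        theta = theta - 0.5 * step_size * grad_u(theta) + noise
        if t >= burn_in:
            samples.append(theta)
    return samples


def grad_u_example(theta):
    """Hypothetical target: posterior N(2, 0.5^2), i.e. U(theta) = (theta - 2)^2 / 0.5."""
    return (theta - 2.0) / 0.25
```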
SGHMC-256:

- Implementation of SGHMC, trained for 256 times longer than each member of an ensemble.

SGHMC-64:

- Implementation of SGHMC, trained for 64 times longer than each member of an ensemble.

HMC:

- Implementation of HMC using Pyro.

Deterministic:

- Implementation of a fully deterministic model, i.e., direct regression.

toyClassification:

- Example usage:

$ sudo sh start_docker_image_toyProblems_depthCompletion.sh

$ cd --

$ python evaluating_bdl/toyClassification/Ensemble-Adam/train.py

Ensemble-Adam:

- Ensembling by minimizing the MLE objective using Adam and random initialization.
- datasets.py: Definition of the training dataset.
- model.py: Definition of the feed-forward neural network.
- train.py: Code for training M models.
- eval.py: Creates a plot of the obtained predictive distribution and the HMC "ground truth" predictive distribution, for a set value of M. Also creates histograms for the model parameters.
- eval_plots.py: Creates plots of the obtained predictive distributions for different values of M.
- eval_kl_div.py: Computes the KL divergence between the obtained predictive distribution and the HMC "ground truth", for different values of M.

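For toyClassification, the predictive distribution at an input x is a categorical distribution over the classes. Assuming eval_kl_div.py evaluates both the obtained and the HMC predictive distributions on a set of input points (the points and the KL direction are not stated in this README), the KL divergence can be averaged over those points as sketched below; the function names are hypothetical.

```python
import math


def categorical_kl(p, q):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def mean_predictive_kl(p_points, q_points):
    """Average of KL(p(y|x) || q(y|x)) over a list of input points x."""
    return sum(categorical_kl(p, q) for p, q in zip(p_points, q_points)) / len(p_points)
```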
Ensemble-Adam-Fixed:

- Ensembling by minimizing the MLE objective using Adam and NO random initialization.

Ensemble-MAP-Adam:

- Ensembling by minimizing the MAP objective using Adam and random initialization.

Ensemble-MAP-SGD:

- Ensembling by minimizing the MAP objective using SGD and random initialization.

Ensemble-MAP-SGDMOM:

- Ensembling by minimizing the MAP objective using SGDMOM (SGD with momentum) and random initialization.

MC-Dropout-MAP-01-Adam:

- MC-dropout by minimizing the MAP objective using Adam, p=0.1.

MC-Dropout-MAP-02-SGD:

- MC-dropout by minimizing the MAP objective using SGD, p=0.2.

MC-Dropout-MAP-02-SGDMOM:

- MC-dropout by minimizing the MAP objective using SGDMOM, p=0.2.

SGLD-256:

- Implementation of SGLD, trained for 256 times longer than each member of an ensemble.

SGLD-64:

- Implementation of SGLD, trained for 64 times longer than each member of an ensemble.

SGHMC-256:

- Implementation of SGHMC, trained for 256 times longer than each member of an ensemble.

SGHMC-64:

- Implementation of SGHMC, trained for 64 times longer than each member of an ensemble.

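SGHMC extends SGLD with a momentum variable and friction. The sketch below uses the momentum-style form of the update with learning rate η and friction α, and sets the gradient-noise estimate to zero: v ← (1 - α)v - η∇U(θ) + N(0, 2αη), θ ← θ + v. The 1D Gaussian target, exact gradients and hyperparameters are illustrative assumptions, not the settings behind SGHMC-64 and SGHMC-256.

```python
import math
import random


def sghmc_samples(grad_u, theta0, lr, friction, num_steps, burn_in, rng=None):
    """SGHMC for a scalar parameter (momentum form, gradient-noise estimate set to 0):

        v     <- (1 - friction) * v - lr * grad_u(theta) + N(0, 2 * friction * lr)
        theta <- theta + v

    Returns the iterates after the first burn_in steps.
    """
    rng = rng or random.Random()
    theta, v = theta0, 0.0
    noise_std = math.sqrt(2.0 * friction * lr)
    samples = []
    for t in range(num_steps):
        v = (1.0 - friction) * v - lr * grad_u(theta) + rng.gauss(0.0, noise_std)
        theta = theta + v
        if t >= burn_in:
            samples.append(theta)
    return samples


def grad_u_example(theta):
    """Hypothetical target: posterior N(2, 0.5^2), so grad U(theta) = (theta - 2) / 0.25."""
    return (theta - 2.0) / 0.25
```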
HMC:

- Implementation of HMC using Pyro.

Pretrained models:

depthCompletion:

- depthCompletion/trained_models/ensembling_virtual_0/checkpoint_40000.pth (obtained by running ensembling_train_virtual.py)
- depthCompletion/trained_models/mcdropout_virtual_0/checkpoint_40000.pth (obtained by running mcdropout_train_virtual.py)

segmentation:

- segmentation/trained_models/ensembling_0/checkpoint_40000.pth (obtained by running ensembling_train.py)
- segmentation/trained_models/ensembling_syn_0/checkpoint_40000.pth (obtained by running ensembling_train_syn.py)
- segmentation/trained_models/mcdropout_syn_0/checkpoint_60000.pth (obtained by running mcdropout_train_syn.py)