[:star: New :star:] Please head over to StyleGAN2.pytorch for my StyleGAN2 PyTorch implementation.

This repository contains the unofficial PyTorch implementation of the following paper:

A Style-Based Generator Architecture for Generative Adversarial Networks

Tero Karras (NVIDIA), Samuli Laine (NVIDIA), Timo Aila (NVIDIA)

http://stylegan.xyz/paper

Abstract: We propose an alternative generator architecture for generative adversarial networks, borrowing from style transfer literature. The new architecture leads to an automatically learned, unsupervised separation of high-level attributes (e.g., pose and identity when trained on human faces) and stochastic variation in the generated images (e.g., freckles, hair), and it enables intuitive, scale-specific control of the synthesis. The new generator improves the state-of-the-art in terms of traditional distribution quality metrics, leads to demonstrably better interpolation properties, and also better disentangles the latent factors of variation. To quantify interpolation quality and disentanglement, we propose two new, automated methods that are applicable to any generator architecture. Finally, we introduce a new, highly varied and high-quality dataset of human faces.

Features:

- Progressive Growing Training

- Exponential Moving Average

- Equalized Learning Rate

- PixelNorm Layer

- Minibatch Standard Deviation Layer

- Style Mixing Regularization

- Truncation Trick

- Using official TensorFlow pretrained weights

- Gradient Clipping

- Multi-GPU Training

- FP-16 Support

- Conditional GAN
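The layer-level techniques in the feature list above each reduce to a few lines of math. The sketch below is a framework-agnostic NumPy illustration of PixelNorm, the minibatch standard deviation layer, the equalized learning rate, and the exponential moving average of generator weights; it is not this repository's PyTorch code, and the function names and the `group_size=4` / `beta=0.999` defaults are assumptions chosen for illustration.

```python
import numpy as np


def pixel_norm(x, eps=1e-8):
    # PixelNorm: rescale each pixel's feature vector (the channel axis of an
    # [N, C, H, W] array) to unit mean square.
    return x / np.sqrt(np.mean(x ** 2, axis=1, keepdims=True) + eps)


def minibatch_stddev(x, group_size=4, eps=1e-8):
    # Minibatch standard deviation: append one feature map holding the average
    # standard deviation of the features within each group of samples.
    # Assumes the batch size N is divisible by the (clamped) group size.
    n, c, h, w = x.shape
    g = min(group_size, n)
    y = x.reshape(g, -1, c, h, w)              # [G, M, C, H, W]
    y = y - y.mean(axis=0, keepdims=True)      # center over the group axis
    y = np.sqrt((y ** 2).mean(axis=0) + eps)   # stddev over the group: [M, C, H, W]
    y = y.mean(axis=(1, 2, 3))                 # one scalar per group: [M]
    y = np.tile(y.reshape(-1, 1, 1, 1), (g, 1, h, w))  # back to [N, 1, H, W]
    return np.concatenate([x, y], axis=1)


def equalized_linear(x, weight, bias, gain=np.sqrt(2.0)):
    # Equalized learning rate: weights are initialized from N(0, 1) and
    # multiplied at runtime (not at init) by the He constant gain / sqrt(fan_in).
    fan_in = weight.shape[1]                   # weight: [out_features, in_features]
    return x @ (weight * (gain / np.sqrt(fan_in))).T + bias


def ema_update(shadow, params, beta=0.999):
    # Exponential moving average of the generator weights (the "shadow" copy),
    # updated in place after every generator step.
    for name, value in params.items():
        shadow[name] = beta * shadow[name] + (1.0 - beta) * value


rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 16, 4, 4))
print(minibatch_stddev(pixel_norm(feats)).shape)  # (8, 17, 4, 4)
```

The shadow (EMA) generator is the one usually used for sampling, which is why the resume command accepts a separate shadow-generator file.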

Requirements:

- yacs

- tqdm

- numpy

- torch

- torchvision

- tensorflow (optional, for ./convert.py)

Train from scratch:

python train.py --config configs/sample.yaml

Resume training from a checkpoint (start from 128x128):

python train.py --config configs/sample.yaml --start_depth 5 --generator_file [] [--gen_shadow_file] --discriminator_file [] --gen_optim_file [] --dis_optim_file []

Generate a style-mixing figure:

python generate_mixing_figure.py --config configs/sample.yaml --generator_file []

Thanks to the dataset provider: Copyright (c) 2018, seeprettyface.com. BUPT_GWY contributed the dataset.
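Resuming with `--start_depth 5` to "start from 128x128" suggests that depth 0 trains at 4x4 and each depth doubles the resolution. The NumPy sketch below illustrates that assumed mapping together with the fade-in blend used by progressive growing; the helper names (`depth_to_resolution`, `fade_alpha`, `fade_in`) are hypothetical and not taken from this repository.

```python
import numpy as np


def depth_to_resolution(depth, base=4):
    # Assumed convention: depth 0 trains at 4x4 and every extra depth doubles
    # the side length, so depth 5 -> 128x128.
    return base * 2 ** depth


def fade_alpha(step, fade_steps):
    # Linear ramp from 0 to 1 over the fade-in phase of a new resolution.
    return min(1.0, step / fade_steps)


def upsample2x(x):
    # Nearest-neighbour 2x upsampling of an [N, C, H, W] array.
    return x.repeat(2, axis=2).repeat(2, axis=3)


def fade_in(prev_rgb, new_rgb, alpha):
    # Blend the output of the newly added block with the upsampled output of
    # the previous resolution, so the new layers are introduced smoothly.
    return alpha * new_rgb + (1.0 - alpha) * upsample2x(prev_rgb)


print([depth_to_resolution(d) for d in range(9)])
# [4, 8, 16, 32, 64, 128, 256, 512, 1024]
```

Once `alpha` reaches 1, the upsampled low-resolution path no longer contributes and training continues at the new resolution alone.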

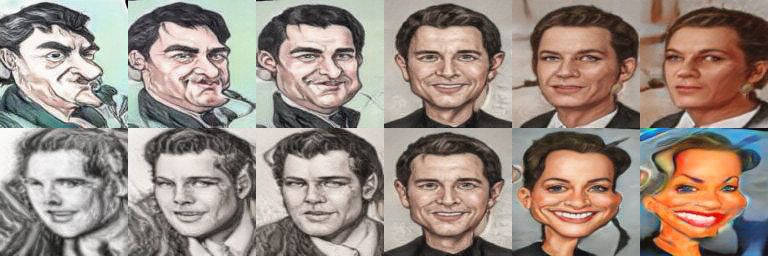
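Style mixing, which `generate_mixing_figure.py` visualizes and which the feature list uses as a regularizer, feeds two mapped latents into different layers of the synthesis network. The NumPy sketch below shows the crossover logic only; the single-layer `mapping` is a hypothetical stand-in for the real mapping network, and `mixing_prob=0.9` is an illustrative default. In the paper, styles taken from the coarse (low-resolution) layers carry pose and face shape, while the fine layers mostly carry the color scheme.

```python
import numpy as np


def mapping(z, weight):
    # Hypothetical stand-in for StyleGAN's 8-layer MLP mapping network f: Z -> W;
    # one linear layer + leaky ReLU keeps the sketch short.
    h = z @ weight
    return np.where(h > 0, h, 0.2 * h)


def mix_styles(w1, w2, num_layers, crossover):
    # Broadcast each latent to one copy per style input ([N, L, D]) and switch
    # from w1 to w2 at the crossover: layers [0, crossover) use w1, the rest w2.
    w1_layers = np.repeat(w1[:, None, :], num_layers, axis=1)
    w2_layers = np.repeat(w2[:, None, :], num_layers, axis=1)
    layer_idx = np.arange(num_layers)[None, :, None]
    return np.where(layer_idx < crossover, w1_layers, w2_layers)


def style_mixing_regularization(z1, z2, weight, num_layers, rng, mixing_prob=0.9):
    # With probability mixing_prob, pick a random crossover point in
    # [1, num_layers); otherwise every layer uses w1 (no mixing).
    w1, w2 = mapping(z1, weight), mapping(z2, weight)
    if rng.random() < mixing_prob:
        crossover = int(rng.integers(1, num_layers))
    else:
        crossover = num_layers
    return mix_styles(w1, w2, num_layers, crossover)


rng = np.random.default_rng(0)
weight = rng.standard_normal((512, 512)) / np.sqrt(512)
z1, z2 = rng.standard_normal((2, 4, 512))
# 18 style inputs = two per resolution from 4x4 to 1024x1024.
styles = style_mixing_regularization(z1, z2, weight, num_layers=18, rng=rng)
print(styles.shape)  # (4, 18, 512)
```

For a mixing figure, the crossover is fixed instead of random, so each row or column shows which resolutions were copied from the second source.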

Generate a truncation-trick figure:

python generate_truncation_figure.py --config configs/sample_cari2_128_truncation.yaml --generator_file cari2_128_truncation_gen.pth

Convert the official TensorFlow pretrained weights:

python convert.py --config configs/sample_ffhq_1024.yaml --input_file PATH/karras2019stylegan-ffhq-1024x1024.pkl --output_file ffhq_1024_gen.pth

Using weights transferred from the official TensorFlow repo.
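Part of transferring weights with `convert.py` is a tensor layout change between the two frameworks. The NumPy sketch below shows only that step for plain convolution and dense kernels; it is not the contents of `convert.py`, and it ignores transposed convolutions, biases, noise strengths, and variable-name mapping. Because the official StyleGAN code applies the equalized-learning-rate scale at runtime, the stored kernels can likely be copied without rescaling, assuming the PyTorch modules also scale at runtime.

```python
import numpy as np


def tf_conv_to_torch(w_tf):
    # tf.nn.conv2d kernels are [kh, kw, in_channels, out_channels];
    # torch.nn.Conv2d stores [out_channels, in_channels, kh, kw].
    return np.ascontiguousarray(np.transpose(w_tf, (3, 2, 0, 1)))


def tf_dense_to_torch(w_tf):
    # TF dense weights are [in_features, out_features];
    # torch.nn.Linear stores [out_features, in_features].
    return np.ascontiguousarray(w_tf.T)


# Sanity check: one output pixel computed with each layout gives the same result.
rng = np.random.default_rng(0)
w_tf = rng.standard_normal((3, 3, 2, 5))
patch = rng.standard_normal((2, 3, 3))  # [in_channels, kh, kw] input patch
tf_out = np.einsum("yxio,iyx->o", w_tf, patch)
torch_out = np.einsum("oiyx,iyx->o", tf_conv_to_torch(w_tf), patch)
print(np.allclose(tf_out, torch_out))  # True
```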

WebCaricatureDataset (128x128)
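The truncation trick rendered by `generate_truncation_figure.py` pulls each style vector toward the average latent, trading variety for sample quality: w' = w_avg + psi * (w - w_avg). The NumPy sketch below assumes styles are stored per layer as `[N, L, D]` and that `w_avg` is a moving average of mapped latents; `psi=0.7`, `cutoff=8`, and `beta=0.995` are illustrative defaults that, as far as I know, match the official StyleGAN generator, not necessarily this repository's config.

```python
import numpy as np


def truncate(w, w_avg, psi=0.7, cutoff=8):
    # Truncation in W: w' = w_avg + psi * (w - w_avg), applied only to the
    # first `cutoff` layers (the coarse styles); later layers are unchanged.
    num_layers = w.shape[1]                          # w: [N, L, D]
    layer_idx = np.arange(num_layers)[None, :, None]
    coefs = np.where(layer_idx < cutoff, psi, 1.0)
    return w_avg + coefs * (w - w_avg)


def update_w_avg(w_avg, w_batch, beta=0.995):
    # During training, w_avg tracks a moving average of the mapped latents
    # (w_batch: [N, D]).
    return beta * w_avg + (1.0 - beta) * w_batch.mean(axis=0)


w = np.full((1, 18, 512), 2.0)
out = truncate(w, np.zeros(512), psi=0.5)
# The first 8 layers are pulled halfway to w_avg (value 1.0); the other 10 stay at 2.0.
print(out[0, :, 0])
```

A truncation figure typically renders the same latent for several psi values; psi = 0 collapses every sample to the average image, and psi = 1 disables truncation.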

References:

- stylegan (official): https://github.com/NVlabs/stylegan

- pro_gan_pytorch: https://github.com/akanimax/pro_gan_pytorch

- pytorch_style_gan: https://github.com/lernapparat/lernapparat

Please feel free to open PRs / issues / suggestions here.

This code heavily uses NVIDIA's original StyleGAN code. We credit and acknowledge their work here. The original license is located in the base directory (file named LICENSE_ORIGINAL.txt).