Litti-ML is an extensible ML inference engine that natively understands model and feature metadata at inference time.

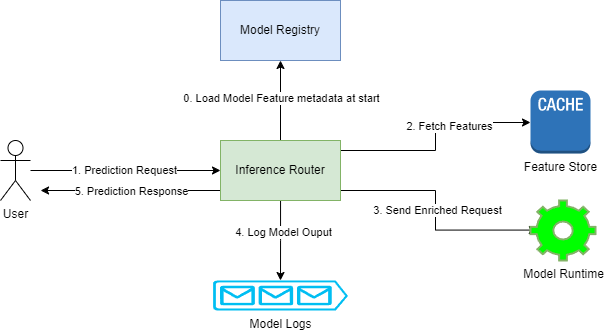

- The client sends a request to the inference router.

- The inference router already knows which features are required for inference, because it can query the management server at startup.

- The inference router queries the relevant feature stores and adds the feature values to the prediction request.

- The inference router forwards the enriched request to the prediction runtime.

- The prediction runtime performs the model computation and returns the result to the inference router.

- The inference router logs the model prediction and returns the response to the client.
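The request flow above can be sketched in a few lines of Java. This is only an illustrative sketch, not Litti-ML's actual API: the names `FeatureStore`, `PredictionRuntime`, `InferenceRouter`, and `handle` are hypothetical, and the in-memory lambdas stand in for real feature stores and model runtimes.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class InferenceFlowSketch {

    /** Hypothetical feature store: returns values for the requested features. */
    interface FeatureStore {
        Map<String, Double> fetch(String entityId, List<String> featureNames);
    }

    /** Hypothetical prediction runtime: computes a prediction from features. */
    interface PredictionRuntime {
        double predict(Map<String, Double> features);
    }

    /** Hypothetical router; the required feature names are supplied once at startup. */
    static class InferenceRouter {
        private final List<String> requiredFeatures;
        private final FeatureStore featureStore;
        private final PredictionRuntime runtime;
        final List<String> predictionLog = new ArrayList<>();

        InferenceRouter(List<String> requiredFeatures,
                        FeatureStore featureStore,
                        PredictionRuntime runtime) {
            this.requiredFeatures = requiredFeatures;
            this.featureStore = featureStore;
            this.runtime = runtime;
        }

        double handle(String entityId, Map<String, Double> requestFeatures) {
            // Enrich the client request with values from the feature store.
            Map<String, Double> enriched = new HashMap<>(requestFeatures);
            enriched.putAll(featureStore.fetch(entityId, requiredFeatures));
            // Forward the enriched request to the prediction runtime.
            double prediction = runtime.predict(enriched);
            // Log the prediction, then return the response.
            predictionLog.add(entityId + " -> " + prediction);
            return prediction;
        }
    }

    public static void main(String[] args) {
        // Stand-in for metadata obtained from the management server at startup.
        List<String> required = List.of("age", "balance");

        // Toy feature store: every requested feature has the value 1.0.
        FeatureStore store = (id, names) -> {
            Map<String, Double> values = new HashMap<>();
            for (String name : names) {
                values.put(name, 1.0);
            }
            return values;
        };

        // Toy model: the prediction is the sum of all feature values.
        PredictionRuntime runtime = features ->
                features.values().stream().mapToDouble(Double::doubleValue).sum();

        InferenceRouter router = new InferenceRouter(required, store, runtime);
        double result = router.handle("user-42", Map.of("amount", 3.0));
        System.out.println(result); // prints 5.0
    }
}
```

Keeping the feature store and the prediction runtime behind small interfaces mirrors the separation in the diagram: the router only orchestrates, so either side can be swapped without touching the request path.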

- Define specifications and add examples for multi-framework inference support for most JVM-based frameworks.

- Define specifications and add examples for multi-framework inference support for non-JVM-based frameworks.

- Define specifications for an extensible integration of inference feature stores.

- Define specifications for model and feature store inference telemetry for platform engineers.

- Install Maven.

- Install Docker.

- Build all modules and publish Docker images:

mvn clean install -am -Pbuild-docker

- Build only a single module image:

mvn clean install -pl :litti-management-ui -am -Pbuild-docker

- Start the service using the docker-compose.yaml file in the deployment/docker-compose folder.