You can now find us at CVPR 2020. Our live Q&A sessions are on June 18, 2020 @ 5pm - 7pm PDT and June 19, 2020 @ 5am - 7am PDT. We are looking forward to seeing you at CVPR!

CVPR 2020 is now over, and we thank you for all the interesting discussions! Our presentation video is available on YouTube. We will continue the development of the code and models in this repository, so stay tuned!

Blueprint Separable Convolutions (BSConv)

This repository provides code and trained models for the CVPR 2020 paper (official, arXiv):

Rethinking Depthwise Separable Convolutions: How Intra-Kernel Correlations Lead to Improved MobileNets

Daniel Haase*, Manuel Amthor*

Overview Results

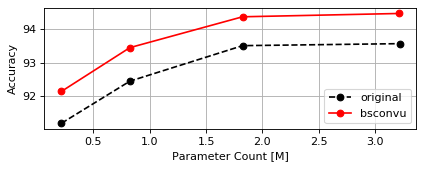

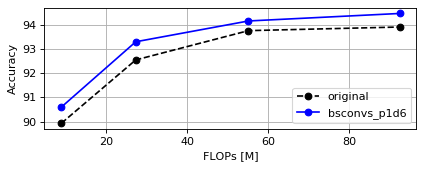

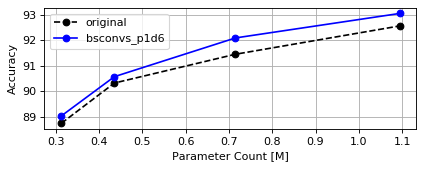

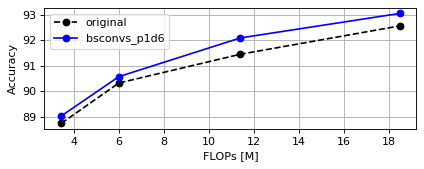

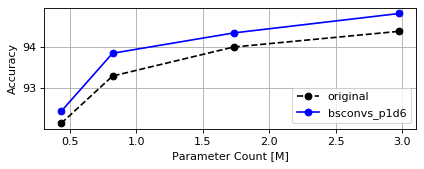

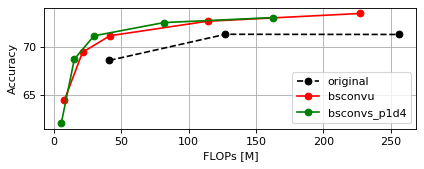

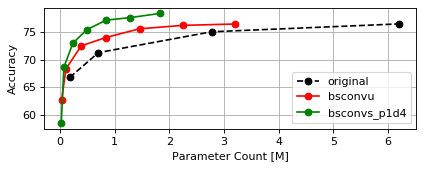

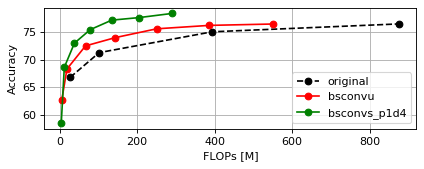

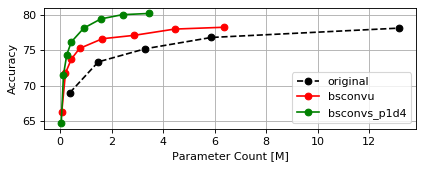

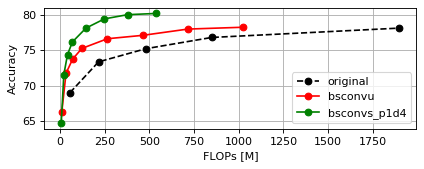

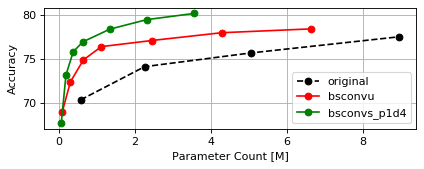

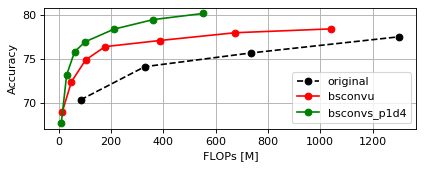

CIFAR10 - MobileNetV1, CIFAR10 - MobileNetV2, CIFAR10 - MobileNetV3-small, CIFAR10 - MobileNetV3-large, CIFAR10 - WRN-16, CIFAR10 - WRN-28, CIFAR10 - WRN-40, CIFAR100 - MobileNetV1, CIFAR100 - MobileNetV2, CIFAR100 - MobileNetV3-small, CIFAR100 - MobileNetV3-large, CIFAR100 - ResNets, CIFAR100 - WRN-16, CIFAR100 - WRN-28, CIFAR100 - WRN-40

Requirements, Installation, Usage, Change Log, Citation

CIFAR10 - MobileNetV1

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv1_w1 | 93.57 | 3.22 | 179.34 |
| cifar_mobilenetv1_w3d4 | 93.51 | 1.82 | 102.66 |
| cifar_mobilenetv1_w1d2 | 92.44 | 0.82 | 47.21 |
| cifar_mobilenetv1_w1d4 | 91.17 | 0.22 | 12.99 |
| cifar_mobilenetv1_w1_bsconvu | 94.48 | 3.22 | 254.64 |
| cifar_mobilenetv1_w3d4_bsconvu | 94.38 | 1.82 | 144.98 |
| cifar_mobilenetv1_w1d2_bsconvu | 93.45 | 0.82 | 65.98 |
| cifar_mobilenetv1_w1d4_bsconvu | 92.13 | 0.22 | 17.66 |
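The model names in the result tables appear to follow a fixed pattern. The sketch below decodes that pattern under two assumptions that are not stated in this section: a suffix such as `w3d4` is read as a width multiplier of 3/4, and `bsconvs_p1d6` is read as the BSConv-S variant with p = 1/6. The helper `parse_model_name` is hypothetical and not part of the `bsconv` package.

```python
import re
from fractions import Fraction

# Hypothetical helper (not part of the bsconv package). It decodes the naming
# pattern seen in the result tables under the assumptions stated above.
_FRACTION = r"(\d+)(?:d(\d+))?"


def _to_fraction(numerator, denominator):
    """Turn the captured digits ("3", "4") into Fraction(3, 4)."""
    return Fraction(int(numerator), int(denominator) if denominator else 1)


def parse_model_name(name):
    """Extract width multiplier, BSConv variant, and p from a model name."""
    info = {"width": None, "variant": "baseline", "p": None}

    # Width multiplier, e.g. "_w3d4" (assumed to mean 3/4) or "_w1".
    width = re.search(r"_w" + _FRACTION + r"(?:_|$)", name)
    if width:
        info["width"] = _to_fraction(*width.groups())

    # Unconstrained variant: the name ends in "_bsconvu".
    if name.endswith("_bsconvu"):
        info["variant"] = "bsconvu"

    # Subspace variant: "_bsconvs_p1d6" (assumed to mean p = 1/6).
    subspace = re.search(r"_bsconvs_p" + _FRACTION + r"$", name)
    if subspace:
        info["variant"] = "bsconvs"
        info["p"] = _to_fraction(*subspace.groups())

    return info


print(parse_model_name("cifar_mobilenetv1_w3d4_bsconvu"))
print(parse_model_name("cifar_mobilenetv3_small_w7d20_bsconvs_p1d6"))
```

Names without a `_w…` part, such as the WRN and ResNet entries, leave `width` as `None` in this sketch; their trailing number is not interpreted.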

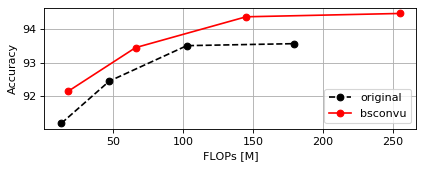

Model

Accuracy (top-1)

Params [M]

FLOPs [M]

cifar_mobilenetv2_w193.91

2.24

92.40

cifar_mobilenetv2_w3d493.76

1.36

55.13

cifar_mobilenetv2_w1d292.55

0.70

27.32

cifar_mobilenetv2_w1d489.93

0.25

8.97

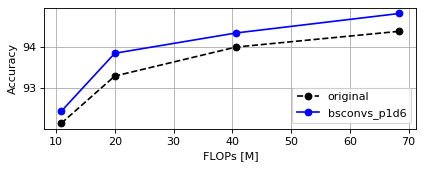

cifar_mobilenetv2_w1_bsconvs_p1d694.47

2.24

92.40

cifar_mobilenetv2_w3d4_bsconvs_p1d694.16

1.36

55.13

cifar_mobilenetv2_w1d2_bsconvs_p1d693.30

0.70

27.32

cifar_mobilenetv2_w1d4_bsconvs_p1d690.60

0.25

8.97

CIFAR10 - MobileNetV3-small

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv3_small_w1 | 92.57 | 1.09 | 18.48 |
| cifar_mobilenetv3_small_w3d4 | 91.46 | 0.72 | 11.40 |
| cifar_mobilenetv3_small_w1d2 | 90.33 | 0.44 | 6.00 |
| cifar_mobilenetv3_small_w7d20 | 88.75 | 0.31 | 3.45 |
| cifar_mobilenetv3_small_w1_bsconvs_p1d6 | 93.06 | 1.09 | 18.48 |
| cifar_mobilenetv3_small_w3d4_bsconvs_p1d6 | 92.10 | 0.72 | 11.40 |
| cifar_mobilenetv3_small_w1d2_bsconvs_p1d6 | 90.58 | 0.44 | 6.00 |
| cifar_mobilenetv3_small_w7d20_bsconvs_p1d6 | 89.04 | 0.31 | 3.45 |

CIFAR10 - MobileNetV3-large

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv3_large_w1 | 94.38 | 2.98 | 68.45 |
| cifar_mobilenetv3_large_w3d4 | 94.00 | 1.73 | 40.67 |
| cifar_mobilenetv3_large_w1d2 | 93.30 | 0.82 | 20.00 |
| cifar_mobilenetv3_large_w7d20 | 92.16 | 0.44 | 10.89 |
| cifar_mobilenetv3_large_w1_bsconvs_p1d6 | 94.81 | 2.98 | 68.45 |
| cifar_mobilenetv3_large_w3d4_bsconvs_p1d6 | 94.34 | 1.73 | 40.67 |
| cifar_mobilenetv3_large_w1d2_bsconvs_p1d6 | 93.85 | 0.82 | 20.00 |
| cifar_mobilenetv3_large_w7d20_bsconvs_p1d6 | 92.45 | 0.44 | 10.89 |

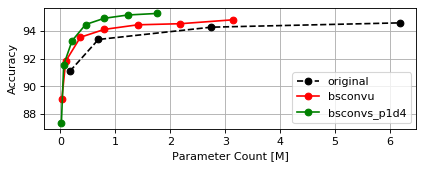

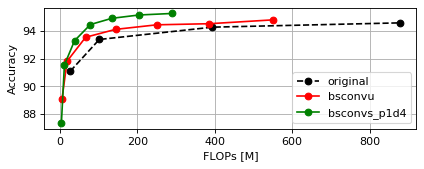

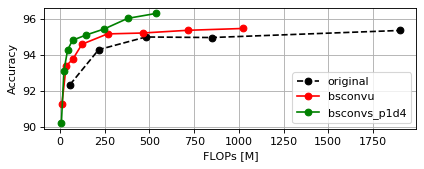

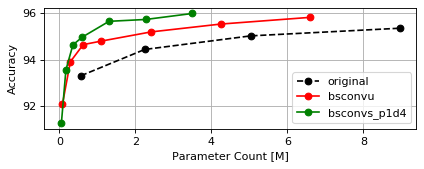

CIFAR10 - WideResNets (WRN-16)

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_wrn16_1 | 91.11 | 0.18 | 27.06 |
| cifar_wrn16_2 | 93.40 | 0.69 | 101.86 |
| cifar_wrn16_4 | 94.29 | 2.75 | 394.06 |
| cifar_wrn16_6 | 94.60 | 6.17 | 877.10 |
| cifar_wrn16_8 | 95.05 | 10.96 | 1550.99 |
| cifar_wrn16_10 | 95.03 | 17.12 | 2415.71 |
| cifar_wrn16_12 | 95.11 | 24.64 | 3471.28 |
| cifar_wrn16_1_bsconvu | 89.09 | 0.03 | 5.57 |
| cifar_wrn16_2_bsconvu | 91.83 | 0.10 | 18.74 |
| cifar_wrn16_4_bsconvu | 93.56 | 0.36 | 66.59 |
| cifar_wrn16_6_bsconvu | 94.13 | 0.80 | 143.80 |
| cifar_wrn16_8_bsconvu | 94.46 | 1.41 | 250.38 |
| cifar_wrn16_10_bsconvu | 94.54 | 2.19 | 386.31 |
| cifar_wrn16_12_bsconvu | 94.82 | 3.13 | 551.60 |
| cifar_wrn16_1_bsconvs_p1d4 | 87.34 | 0.02 | 4.01 |
| cifar_wrn16_2_bsconvs_p1d4 | 91.56 | 0.06 | 11.85 |
| cifar_wrn16_4_bsconvs_p1d4 | 93.31 | 0.21 | 38.00 |
| cifar_wrn16_6_bsconvs_p1d4 | 94.48 | 0.46 | 78.84 |
| cifar_wrn16_8_bsconvs_p1d4 | 94.93 | 0.80 | 134.35 |
| cifar_wrn16_10_bsconvs_p1d4 | 95.17 | 1.23 | 204.55 |
| cifar_wrn16_12_bsconvs_p1d4 | 95.28 | 1.75 | 289.42 |
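To make the trade-off in the WRN-16 results easier to read, the following sketch compares one baseline entry with its BSConv-S counterpart. The numbers are copied from the CIFAR10 WRN-16 results above; the `compare` helper and the dictionary layout are illustrative choices, not part of the repository.

```python
# Values copied from the CIFAR10 WRN-16 results above:
# top-1 accuracy, parameters in millions, FLOPs in millions.
baseline = {"name": "cifar_wrn16_12", "acc": 95.11, "params": 24.64, "flops": 3471.28}
bsconv_s = {"name": "cifar_wrn16_12_bsconvs_p1d4", "acc": 95.28, "params": 1.75, "flops": 289.42}


def compare(base, variant):
    """Return the accuracy difference and the reduction factors of a variant."""
    return {
        # Positive means the variant is more accurate than the baseline.
        "acc_delta": round(variant["acc"] - base["acc"], 2),
        # How many times fewer parameters / FLOPs the variant needs.
        "params_ratio": round(base["params"] / variant["params"], 1),
        "flops_ratio": round(base["flops"] / variant["flops"], 1),
    }


summary = compare(baseline, bsconv_s)
print(summary)
```

For this pair, the BSConv-S model is slightly more accurate while using roughly 14 times fewer parameters and about 12 times fewer FLOPs than the baseline.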

CIFAR10 - WideResNets (WRN-28)

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_wrn28_1 | 92.36 | 0.37 | 55.72 |
| cifar_wrn28_2 | 94.29 | 1.47 | 215.79 |
| cifar_wrn28_3 | 94.99 | 3.29 | 479.94 |
| cifar_wrn28_4 | 94.96 | 5.85 | 848.42 |
| cifar_wrn28_6 | 95.35 | 13.14 | 1898.38 |
| cifar_wrn28_8 | 95.73 | 23.35 | 3365.68 |
| cifar_wrn28_10 | 95.72 | 36.48 | 5250.31 |
| cifar_wrn28_12 | 95.54 | 52.52 | 7552.27 |
| cifar_wrn28_1_bsconvu | 91.28 | 0.05 | 10.09 |
| cifar_wrn28_2_bsconvu | 93.39 | 0.19 | 34.08 |
| cifar_wrn28_3_bsconvu | 93.77 | 0.42 | 71.44 |
| cifar_wrn28_4_bsconvu | 94.59 | 0.73 | 122.43 |
| cifar_wrn28_6_bsconvu | 95.16 | 1.61 | 265.31 |
| cifar_wrn28_8_bsconvu | 95.21 | 2.82 | 462.71 |
| cifar_wrn28_10_bsconvu | 95.36 | 4.39 | 714.64 |
| cifar_wrn28_12_bsconvu | 95.46 | 6.29 | 1021.10 |
| cifar_wrn28_1_bsconvs_p1d4 | 90.22 | 0.04 | 7.25 |
| cifar_wrn28_2_bsconvs_p1d4 | 93.13 | 0.12 | 21.47 |
| cifar_wrn28_3_bsconvs_p1d4 | 94.28 | 0.24 | 42.23 |
| cifar_wrn28_4_bsconvs_p1d4 | 94.81 | 0.41 | 69.82 |
| cifar_wrn28_6_bsconvs_p1d4 | 95.10 | 0.88 | 145.44 |
| cifar_wrn28_8_bsconvs_p1d4 | 95.44 | 1.53 | 248.32 |
| cifar_wrn28_10_bsconvs_p1d4 | 96.02 | 2.36 | 378.46 |
| cifar_wrn28_12_bsconvs_p1d4 | 96.29 | 3.37 | 535.87 |

CIFAR10 - WideResNets (WRN-40)

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_wrn40_1 | 93.30 | 0.56 | 84.37 |
| cifar_wrn40_2 | 94.44 | 2.24 | 329.73 |
| cifar_wrn40_3 | 95.03 | 5.04 | 735.78 |
| cifar_wrn40_4 | 95.36 | 8.95 | 1302.78 |
| cifar_wrn40_6 | 95.63 | 20.12 | 2919.66 |
| cifar_wrn40_8 | 95.58 | 35.75 | 5180.37 |
| cifar_wrn40_10 | 95.66 | 55.84 | 8084.90 |
| cifar_wrn40_1_bsconvu | 92.07 | 0.08 | 14.61 |
| cifar_wrn40_2_bsconvu | 93.91 | 0.29 | 49.41 |
| cifar_wrn40_3_bsconvu | 94.65 | 0.63 | 103.88 |
| cifar_wrn40_4_bsconvu | 94.80 | 1.09 | 178.27 |
| cifar_wrn40_6_bsconvu | 95.20 | 2.41 | 386.81 |
| cifar_wrn40_8_bsconvu | 95.54 | 4.24 | 675.05 |
| cifar_wrn40_10_bsconvu | 95.83 | 6.59 | 1042.98 |
| cifar_wrn40_1_bsconvs_p1d4 | 91.24 | 0.05 | 10.49 |
| cifar_wrn40_2_bsconvs_p1d4 | 93.55 | 0.17 | 31.08 |
| cifar_wrn40_3_bsconvs_p1d4 | 94.64 | 0.36 | 61.38 |
| cifar_wrn40_4_bsconvs_p1d4 | 94.98 | 0.61 | 101.64 |
| cifar_wrn40_6_bsconvs_p1d4 | 95.66 | 1.31 | 212.04 |
| cifar_wrn40_8_bsconvs_p1d4 | 95.74 | 2.27 | 362.29 |
| cifar_wrn40_10_bsconvs_p1d4 | 96.00 | 3.50 | 552.38 |

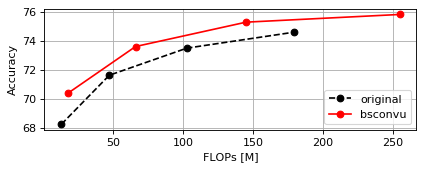

CIFAR100 - MobileNetV1

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv1_w1 | 74.58 | 3.31 | 179.43 |
| cifar_mobilenetv1_w3d4 | 73.48 | 1.89 | 102.72 |
| cifar_mobilenetv1_w1d2 | 71.61 | 0.87 | 47.25 |
| cifar_mobilenetv1_w1d4 | 68.23 | 0.24 | 13.01 |
| cifar_mobilenetv1_w1_bsconvu | 75.80 | 3.31 | 254.73 |
| cifar_mobilenetv1_w3d4_bsconvu | 75.27 | 1.89 | 145.04 |
| cifar_mobilenetv1_w1d2_bsconvu | 73.59 | 0.87 | 66.03 |
| cifar_mobilenetv1_w1d4_bsconvu | 70.37 | 0.24 | 17.68 |

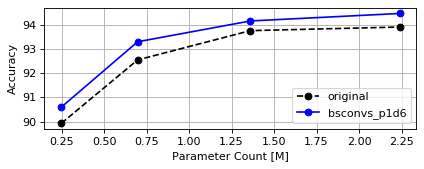

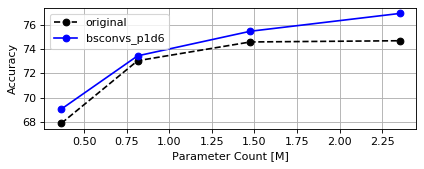

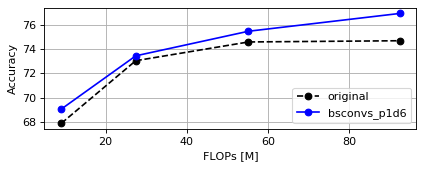

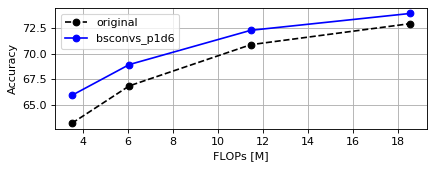

CIFAR100 - MobileNetV2

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv2_w1 | 74.67 | 2.35 | 92.51 |
| cifar_mobilenetv2_w3d4 | 74.57 | 1.48 | 55.24 |
| cifar_mobilenetv2_w1d2 | 73.03 | 0.81 | 27.43 |
| cifar_mobilenetv2_w1d4 | 67.89 | 0.36 | 9.08 |
| cifar_mobilenetv2_w1_bsconvs_p1d6 | 76.91 | 2.35 | 92.51 |
| cifar_mobilenetv2_w3d4_bsconvs_p1d6 | 75.45 | 1.48 | 55.24 |
| cifar_mobilenetv2_w1d2_bsconvs_p1d6 | 73.43 | 0.81 | 27.43 |
| cifar_mobilenetv2_w1d4_bsconvs_p1d6 | 69.06 | 0.36 | 9.08 |

CIFAR100 - MobileNetV3-small

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv3_small_w1 | 72.93 | 1.15 | 18.54 |
| cifar_mobilenetv3_small_w3d4 | 70.87 | 0.77 | 11.46 |
| cifar_mobilenetv3_small_w1d2 | 66.83 | 0.49 | 6.05 |
| cifar_mobilenetv3_small_w7d20 | 63.16 | 0.37 | 3.50 |
| cifar_mobilenetv3_small_w1_bsconvs_p1d6 | 73.93 | 1.15 | 18.54 |
| cifar_mobilenetv3_small_w3d4_bsconvs_p1d6 | 72.28 | 0.77 | 11.46 |
| cifar_mobilenetv3_small_w1d2_bsconvs_p1d6 | 68.92 | 0.49 | 6.05 |
| cifar_mobilenetv3_small_w7d20_bsconvs_p1d6 | 65.90 | 0.37 | 3.50 |

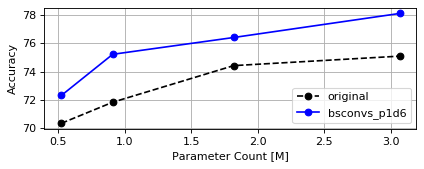

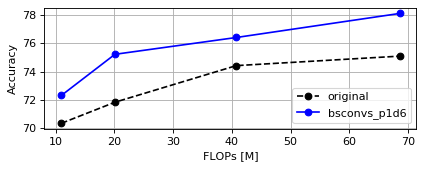

CIFAR100 - MobileNetV3-large

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_mobilenetv3_large_w1 | 75.09 | 3.07 | 68.54 |
| cifar_mobilenetv3_large_w3d4 | 74.42 | 1.82 | 40.75 |
| cifar_mobilenetv3_large_w1d2 | 71.83 | 0.91 | 20.09 |
| cifar_mobilenetv3_large_w7d20 | 70.34 | 0.52 | 10.98 |
| cifar_mobilenetv3_large_w1_bsconvs_p1d6 | 78.11 | 3.07 | 68.54 |
| cifar_mobilenetv3_large_w3d4_bsconvs_p1d6 | 76.41 | 1.82 | 40.75 |
| cifar_mobilenetv3_large_w1d2_bsconvs_p1d6 | 75.22 | 0.91 | 20.09 |
| cifar_mobilenetv3_large_w7d20_bsconvs_p1d6 | 72.31 | 0.52 | 10.98 |

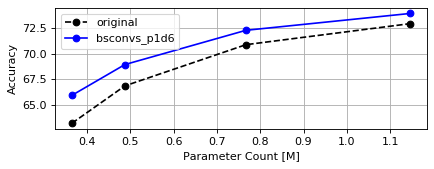

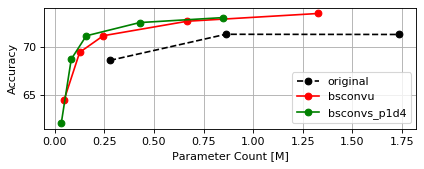

CIFAR100 - ResNets

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_resnet20 | 68.59 | 0.28 | 41.42 |
| cifar_resnet56 | 71.31 | 0.86 | 127.39 |
| cifar_resnet110 | 71.29 | 1.74 | 256.34 |
| cifar_resnet302 | 72.22 | 4.85 | 714.83 |
| cifar_resnet602 | 71.22 | 9.71 | 1431.22 |
| cifar_resnet20_bsconvu | 64.41 | 0.05 | 7.86 |
| cifar_resnet56_bsconvu | 69.43 | 0.13 | 21.42 |
| cifar_resnet110_bsconvu | 71.16 | 0.24 | 41.77 |
| cifar_resnet302_bsconvu | 72.67 | 0.67 | 114.12 |
| cifar_resnet602_bsconvu | 73.48 | 1.33 | 227.17 |
| cifar_resnet20_bsconvs_p1d4 | 62.03 | 0.03 | 5.66 |
| cifar_resnet56_bsconvs_p1d4 | 68.72 | 0.08 | 15.37 |
| cifar_resnet110_bsconvs_p1d4 | 71.15 | 0.16 | 29.93 |
| cifar_resnet302_bsconvs_p1d4 | 72.53 | 0.43 | 81.70 |
| cifar_resnet602_bsconvs_p1d4 | 73.05 | 0.85 | 162.60 |

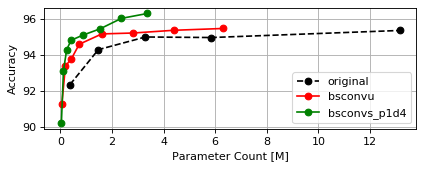

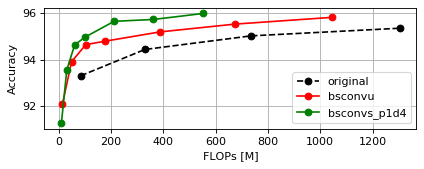

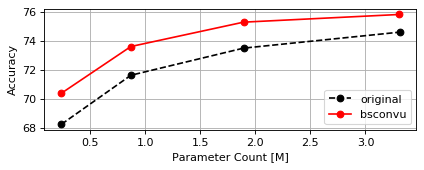

CIFAR100 - WideResNets (WRN-16)

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_wrn16_1 | 66.81 | 0.18 | 27.07 |
| cifar_wrn16_2 | 71.29 | 0.70 | 101.87 |
| cifar_wrn16_4 | 75.07 | 2.77 | 394.08 |
| cifar_wrn16_6 | 76.50 | 6.21 | 877.14 |
| cifar_wrn16_8 | 77.30 | 11.01 | 1551.03 |
| cifar_wrn16_10 | 77.28 | 17.17 | 2415.77 |
| cifar_wrn16_12 | 78.02 | 24.71 | 3471.34 |
| cifar_wrn16_1_bsconvu | 62.79 | 0.03 | 5.57 |
| cifar_wrn16_2_bsconvu | 68.33 | 0.11 | 18.75 |
| cifar_wrn16_4_bsconvu | 72.51 | 0.39 | 66.62 |
| cifar_wrn16_6_bsconvu | 74.02 | 0.84 | 143.84 |
| cifar_wrn16_8_bsconvu | 75.61 | 1.45 | 250.42 |
| cifar_wrn16_10_bsconvu | 76.23 | 2.24 | 386.36 |
| cifar_wrn16_12_bsconvu | 76.48 | 3.20 | 551.67 |
| cifar_wrn16_1_bsconvs_p1d4 | 58.48 | 0.02 | 4.02 |
| cifar_wrn16_2_bsconvs_p1d4 | 68.62 | 0.07 | 11.86 |
| cifar_wrn16_4_bsconvs_p1d4 | 73.01 | 0.24 | 38.03 |
| cifar_wrn16_6_bsconvs_p1d4 | 75.46 | 0.49 | 78.87 |
| cifar_wrn16_8_bsconvs_p1d4 | 77.18 | 0.84 | 134.40 |
| cifar_wrn16_10_bsconvs_p1d4 | 77.64 | 1.29 | 204.60 |
| cifar_wrn16_12_bsconvs_p1d4 | 78.39 | 1.82 | 289.49 |

CIFAR100 - WideResNets (WRN-28)

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_wrn28_1 | 69.00 | 0.38 | 55.72 |
| cifar_wrn28_2 | 73.38 | 1.48 | 215.80 |
| cifar_wrn28_3 | 75.25 | 3.31 | 479.96 |
| cifar_wrn28_4 | 76.85 | 5.87 | 848.44 |
| cifar_wrn28_6 | 78.18 | 13.18 | 1898.42 |
| cifar_wrn28_8 | 78.07 | 23.40 | 3365.72 |
| cifar_wrn28_10 | 78.58 | 36.54 | 5250.36 |
| cifar_wrn28_12 | 79.04 | 52.59 | 7552.34 |
| cifar_wrn28_1_bsconvu | 66.21 | 0.06 | 10.09 |
| cifar_wrn28_2_bsconvu | 71.78 | 0.20 | 34.09 |
| cifar_wrn28_3_bsconvu | 73.79 | 0.44 | 71.46 |
| cifar_wrn28_4_bsconvu | 75.29 | 0.75 | 122.45 |
| cifar_wrn28_6_bsconvu | 76.67 | 1.64 | 265.34 |
| cifar_wrn28_8_bsconvu | 77.15 | 2.87 | 462.76 |
| cifar_wrn28_10_bsconvu | 78.04 | 4.44 | 714.70 |
| cifar_wrn28_12_bsconvu | 78.30 | 6.36 | 1021.17 |
| cifar_wrn28_1_bsconvs_p1d4 | 64.65 | 0.04 | 7.26 |
| cifar_wrn28_2_bsconvs_p1d4 | 71.55 | 0.13 | 21.48 |
| cifar_wrn28_3_bsconvs_p1d4 | 74.42 | 0.26 | 42.25 |
| cifar_wrn28_4_bsconvs_p1d4 | 76.22 | 0.43 | 69.84 |
| cifar_wrn28_6_bsconvs_p1d4 | 78.18 | 0.92 | 145.47 |
| cifar_wrn28_8_bsconvs_p1d4 | 79.49 | 1.58 | 248.36 |
| cifar_wrn28_10_bsconvs_p1d4 | 80.09 | 2.42 | 378.52 |
| cifar_wrn28_12_bsconvs_p1d4 | 80.26 | 3.44 | 535.94 |

CIFAR100 - WideResNets (WRN-40)

| Model | Accuracy (top-1) | Params [M] | FLOPs [M] |
|---|---|---|---|
| cifar_wrn40_1 | 70.34 | 0.57 | 84.38 |
| cifar_wrn40_2 | 74.13 | 2.26 | 329.74 |
| cifar_wrn40_3 | 75.70 | 5.06 | 735.79 |
| cifar_wrn40_4 | 77.55 | 8.97 | 1302.81 |
| cifar_wrn40_6 | 77.41 | 20.15 | 2919.70 |
| cifar_wrn40_8 | 78.33 | 35.79 | 5180.42 |
| cifar_wrn40_10 | 78.49 | 55.90 | 8084.96 |
| cifar_wrn40_1_bsconvu | 68.98 | 0.09 | 14.61 |
| cifar_wrn40_2_bsconvu | 72.41 | 0.30 | 49.42 |
| cifar_wrn40_3_bsconvu | 74.91 | 0.64 | 103.90 |
| cifar_wrn40_4_bsconvu | 76.42 | 1.12 | 178.29 |
| cifar_wrn40_6_bsconvu | 77.12 | 2.44 | 386.85 |
| cifar_wrn40_8_bsconvu | 78.01 | 4.29 | 675.09 |
| cifar_wrn40_10_bsconvu | 78.45 | 6.64 | 1043.03 |
| cifar_wrn40_1_bsconvs_p1d4 | 67.66 | 0.06 | 10.49 |
| cifar_wrn40_2_bsconvs_p1d4 | 73.19 | 0.18 | 31.09 |
| cifar_wrn40_3_bsconvs_p1d4 | 75.83 | 0.37 | 61.40 |
| cifar_wrn40_4_bsconvs_p1d4 | 76.97 | 0.63 | 101.66 |
| cifar_wrn40_6_bsconvs_p1d4 | 78.42 | 1.34 | 212.07 |
| cifar_wrn40_8_bsconvs_p1d4 | 79.51 | 2.32 | 362.33 |
| cifar_wrn40_10_bsconvs_p1d4 | 80.21 | 3.56 | 552.44 |

Requirements

Python>=3.6

PyTorch>=1.0.0 (support for other frameworks will be added later)

Installation

pip install --upgrade bsconv

Usage

See the BSConv PyTorch usage readme for PyTorch usage details. Support for other frameworks will be added later.

Change Log

Please note that the code provided here is work-in-progress. Therefore, some features may be missing or may change between versions.

BSConv for PyTorch:

added support for more model architectures (see bsconv.pytorch.get_model)

added result tables and plots for ResNets, WRNs, MobileNets on CIFAR datasets

removed script bin/bsconv_pytorch_list_architectures.py, because bsconv.pytorch.get_model is more flexible now (see the BSConv PyTorch usage readme for available architectures)

BSConv for PyTorch:

added ready-to-use model definitions (MobileNetV1, MobileNetV2, MobileNetV3, ResNets and WRNs and their BSConv variants for CIFAR and ImageNet/fine-grained datasets)

added training script for CIFAR and ImageNet/fine-grained datasets

added class for the StanfordDogs dataset

BSConv for PyTorch:

removed activation and added option for normalization of PW layers in BSConv-S (issue #1) (API change)

added option for normalization of PW layers in BSConv-U (API change)

ensure that BSConv-S never uses more mid channels (= M') than input channels (M) and added parameter min_mid_channels (= M'_min) (API change)

added model profiler for parameter and FLOP counting

replacer now shows number of old and new model parameters
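The constraint on mid channels described in this change log entry can be illustrated with a short sketch. It assumes that M' is obtained by scaling the input channels M by the ratio p, raising the result to at least `min_mid_channels`, and then capping it at M. The function name `bsconvs_mid_channels` and the default values are assumptions for illustration; the actual implementation in the package may differ.

```python
import math


def bsconvs_mid_channels(in_channels, p=0.25, min_mid_channels=4):
    """Sketch of the mid-channel rule described in the change log.

    Assumed rule (not confirmed by this section):
      1. scale the input channels M by the ratio p and round up,
      2. enforce the lower bound M'_min (min_mid_channels),
      3. never exceed the number of input channels M.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    scaled = math.ceil(p * in_channels)
    return min(in_channels, max(min_mid_channels, scaled))


# Wide layer: plain scaling applies (64 * 0.25 = 16).
print(bsconvs_mid_channels(64))
# Narrow layer: the lower bound M'_min takes over (8 * 0.25 = 2 -> 4).
print(bsconvs_mid_channels(8))
# Very narrow layer: the cap at M wins over the lower bound (3 input channels).
print(bsconvs_mid_channels(3))
```

Applying the cap last is what guarantees that M' never exceeds M, even when `min_mid_channels` is larger than the number of input channels.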

first public version

BSConv for PyTorch:

modules BSConvU and BSConvS

replacers BSConvU_Replacer and BSConvS_Replacer
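As a rough illustration of why replacing standard convolutions with the two modules reduces the parameter counts reported in the result tables, the sketch below counts weights per layer. It assumes, following the paper's description, that BSConv-U is a 1x1 pointwise convolution followed by a KxK depthwise convolution, and that BSConv-S splits the pointwise step into two 1x1 convolutions through M' mid channels. Biases and normalization layers are ignored, and the function names are hypothetical, not part of the package.

```python
def standard_conv_params(in_channels, out_channels, kernel_size):
    """Weights of a regular KxK convolution: M * N * K * K."""
    return in_channels * out_channels * kernel_size * kernel_size


def bsconvu_params(in_channels, out_channels, kernel_size):
    """Assumed BSConv-U layout: 1x1 pointwise (M * N) + KxK depthwise (N * K * K)."""
    pointwise = in_channels * out_channels
    depthwise = out_channels * kernel_size * kernel_size
    return pointwise + depthwise


def bsconvs_params(in_channels, out_channels, kernel_size, mid_channels):
    """Assumed BSConv-S layout: two 1x1 pointwise steps (M * M' + M' * N)
    followed by a KxK depthwise convolution (N * K * K)."""
    pointwise = in_channels * mid_channels + mid_channels * out_channels
    depthwise = out_channels * kernel_size * kernel_size
    return pointwise + depthwise


# Example layer: M = 64 input channels, N = 128 output channels, 3x3 kernel,
# and M' = 16 mid channels for the subspace variant.
m, n, k, mid = 64, 128, 3, 16
print("standard:", standard_conv_params(m, n, k))
print("BSConv-U:", bsconvu_params(m, n, k))
print("BSConv-S:", bsconvs_params(m, n, k, mid))
```

For this example layer the counts are 73,728 weights for the standard convolution, 9,344 for the BSConv-U layout, and 4,224 for the BSConv-S layout.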

Citation

If you find this work useful in your own research, please cite the paper as:

@InProceedings{Haase_2020_CVPR,
    author = {Haase, Daniel and Amthor, Manuel},
    title = {Rethinking Depthwise Separable Convolutions: How Intra-Kernel Correlations Lead to Improved {MobileNets}},
    booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month = {June},
    year = {2020}
}