This assignment illustrates a way of finding the optimal hyperparameters of a Support Vector Classifier (SVC). The dataset used here is the Census Income Data Set from the UCI Machine Learning Repository.

The data contains 48,842 rows and the following 15 columns:

- age

- workclass

- fnlwgt

- education

- education_num

- marital_status

- occupation

- relationship

- race

- sex

- capital_gain

- capital_loss

- hours_per_week

- native_country

- salary
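To make the schema concrete, here is a minimal sketch that parses raw rows into dicts keyed by the column names listed above, using only Python's standard `csv` module. It assumes the comma-separated, header-less layout of the UCI `adult.data` file (a space after each comma) and assumes which columns are integers; `COLUMNS`, `NUMERIC` and `parse_rows` are hypothetical names, not the assignment's own code.

```python
import csv
import io

# Column names in the order listed above (assumed to match the raw file's order).
COLUMNS = [
    "age", "workclass", "fnlwgt", "education", "education_num",
    "marital_status", "occupation", "relationship", "race", "sex",
    "capital_gain", "capital_loss", "hours_per_week", "native_country",
    "salary",
]

# Columns assumed to hold integers; every other column is kept as a string.
NUMERIC = {"age", "fnlwgt", "education_num", "capital_gain",
           "capital_loss", "hours_per_week"}


def parse_rows(text):
    """Parse comma-separated, header-less rows into dicts keyed by column name."""
    # skipinitialspace drops the space that follows each comma.
    reader = csv.reader(io.StringIO(text), skipinitialspace=True)
    rows = []
    for fields in reader:
        if not fields:  # skip blank lines
            continue
        if len(fields) != len(COLUMNS):
            raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
        row = dict(zip(COLUMNS, fields))
        for name in NUMERIC:
            row[name] = int(row[name])
        rows.append(row)
    return rows


# Illustrative row in the raw comma-separated format, for demonstration only.
sample = ("39, State-gov, 77516, Bachelors, 13, Never-married, Adm-clerical, "
          "Not-in-family, White, Male, 2174, 0, 40, United-States, <=50K\n")
rows = parse_rows(sample)
print(rows[0]["age"], rows[0]["salary"])  # 39 <=50K
```

The `salary` column is the prediction target; the remaining 14 columns are the features.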

The dataset is split into training and testing sets 10 times (each split is referred to as a sample below), and for each sample the following SVC hyperparameters are tuned for best accuracy:

- Kernel - Selected from RBF, Polynomial, Linear and Sigmoid

- C (Regularisation parameter) - A random integer from 1 to 7

- Gamma (Kernel coefficient) - A random integer from -1 to 7. If the value is less than 1, gamma is instead set randomly to `auto` or `scale`. It is used only by the rbf, poly and sigmoid kernels.

- Degree - A random integer from 1 to 5. It is used only by the poly kernel and represents the degree of the polynomial kernel function.
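The sampling rules above can be sketched as a small function. This is a minimal sketch assuming uniform draws with inclusive bounds and scikit-learn's kernel strings; `sample_params` and `KERNELS` are hypothetical names, not taken from the original assignment. Gamma is omitted for the linear kernel and degree for every kernel except poly, mirroring the notes above.

```python
import random

# Kernel names as scikit-learn's SVC spells them.
KERNELS = ["rbf", "poly", "linear", "sigmoid"]


def sample_params(rng):
    """Draw one random SVC configuration following the rules listed above."""
    kernel = rng.choice(KERNELS)
    # randint includes both endpoints, so C is one of 1..7.
    params = {"kernel": kernel, "C": rng.randint(1, 7)}
    if kernel != "linear":
        # Gamma is only used by rbf, poly and sigmoid.
        gamma = rng.randint(-1, 7)
        # Values below 1 are replaced by one of the named heuristics.
        params["gamma"] = rng.choice(["auto", "scale"]) if gamma < 1 else gamma
    if kernel == "poly":
        # Degree is only used by the polynomial kernel.
        params["degree"] = rng.randint(1, 5)
    return params


rng = random.Random(0)
for _ in range(3):
    print(sample_params(rng))
```

The returned dict uses the keyword names of scikit-learn's `SVC` constructor (`kernel`, `C`, `gamma`, `degree`), so in practice a draw could be unpacked directly as `SVC(**params)`.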

The above hyperparameters are drawn at random from these ranges for 100 iterations per sample. The kernel and accuracy of the best configuration found for each sample are shown in the table below:

| Sample | Kernel | Accuracy |
|---|---|---|
| 1 | rbf | 0.976333 |
| 2 | poly | 0.978 |
| 3 | rbf | 0.981333 |
| 4 | rbf | 0.983333 |
| 5 | rbf | 0.981333 |
| 6 | poly | 0.980333 |
| 7 | poly | 0.982333 |
| 8 | rbf | 0.983667 |
| 9 | rbf | 0.980333 |
| 10 | rbf | 0.979333 |
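The table can be summarised programmatically. This sketch copies the rows above verbatim and picks the sample with the highest accuracy, counts how often each kernel won, and averages the accuracies; `RESULTS` is a hypothetical name for the copied data.

```python
from collections import Counter

# (sample, kernel, accuracy) rows copied from the table above.
RESULTS = [
    (1, "rbf", 0.976333), (2, "poly", 0.978), (3, "rbf", 0.981333),
    (4, "rbf", 0.983333), (5, "rbf", 0.981333), (6, "poly", 0.980333),
    (7, "poly", 0.982333), (8, "rbf", 0.983667), (9, "rbf", 0.980333),
    (10, "rbf", 0.979333),
]

# Row with the highest accuracy.
best_sample, best_kernel, best_acc = max(RESULTS, key=lambda row: row[2])
# How many samples each kernel won.
kernel_counts = Counter(kernel for _, kernel, _ in RESULTS)
# Mean of the best accuracies across the 10 samples.
mean_acc = sum(acc for _, _, acc in RESULTS) / len(RESULTS)

print(best_sample, best_kernel, best_acc)  # 8 rbf 0.983667
print(kernel_counts)                        # Counter({'rbf': 7, 'poly': 3})
print(round(mean_acc, 4))
```

rbf wins 7 of the 10 samples and poly the other 3; sample 8 has the highest accuracy.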

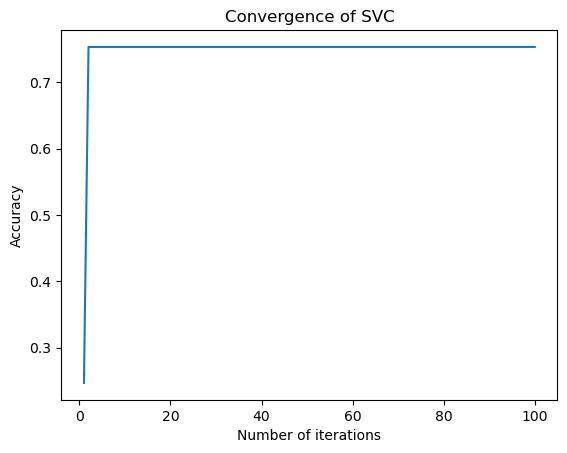

The following Convergence graph shows the accuracy of sample 8 (maximum accuracy) over the 100 iterations:
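A convergence curve for random search is commonly drawn as the best accuracy found so far after each iteration. Assuming that is what the graph plots (the write-up does not say), the curve is just a running maximum of the per-iteration accuracies, which `itertools.accumulate` computes directly. `convergence_curve` is a hypothetical helper, and the scores below are toy values, not results from this experiment.

```python
from itertools import accumulate


def convergence_curve(scores):
    """Best-so-far accuracy after each iteration (running maximum)."""
    return list(accumulate(scores, max))


# Toy per-iteration accuracies, NOT results from this experiment.
toy_scores = [0.71, 0.93, 0.88, 0.95, 0.90]
print(convergence_curve(toy_scores))  # [0.71, 0.93, 0.93, 0.95, 0.95]
```

The resulting list could be plotted against `range(1, len(scores) + 1)` with `matplotlib.pyplot.plot` to reproduce a graph of this kind.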

The best SVC parameters for the given dataset are:

- Kernel: rbf

- C: 0.9

- Gamma: 1

- Degree: not applicable (used only by the poly kernel)
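To show what gamma does for the selected kernel: scikit-learn defines the RBF kernel as `K(x, z) = exp(-gamma * ||x - z||^2)`, so with gamma = 1 the similarity between two points decays with their squared Euclidean distance, and a larger gamma makes each support vector's influence more local. The plain-Python function below is an illustrative sketch of that formula; `rbf_kernel` is a hypothetical name, not the scikit-learn implementation. Degree does not appear in the formula, which is why it is not applicable here.

```python
import math


def rbf_kernel(x, z, gamma=1.0):
    """RBF kernel exp(-gamma * ||x - z||^2) for two equal-length vectors."""
    if len(x) != len(z):
        raise ValueError("vectors must have the same length")
    # Squared Euclidean distance between the two points.
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


print(rbf_kernel([0.0, 0.0], [0.0, 0.0]))  # 1.0 (identical points)
print(rbf_kernel([0.0, 0.0], [1.0, 1.0]))  # exp(-2), about 0.1353
```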

These parameters gave a maximum accuracy of 0.95331491712.
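The overall procedure (10 train/test splits, each with 100 random hyperparameter draws, keeping the most accurate configuration per split) can be sketched end to end. This is a minimal sketch under stated assumptions: the 30% test fraction is an assumption (the write-up does not give the split ratio), and `random_split`, `random_search`, `toy_sampler` and `toy_evaluate` are hypothetical names. In a real run, `evaluate` would fit scikit-learn's `SVC(**params)` on the training rows and return `SVC.score` (mean accuracy) on the test rows; the toy stand-ins below only let the sketch run without scikit-learn.

```python
import random


def random_split(n_rows, test_fraction, rng):
    """Shuffle row indices and split them into train and test index lists."""
    idx = list(range(n_rows))
    rng.shuffle(idx)
    n_test = round(n_rows * test_fraction)
    return idx[n_test:], idx[:n_test]


def random_search(n_rows, sample_params, evaluate, n_samples=10, n_iter=100,
                  test_fraction=0.3, seed=0):
    """For each of n_samples random splits, try n_iter random configurations
    and keep the one with the highest test accuracy."""
    rng = random.Random(seed)
    best_per_sample = []
    for _ in range(n_samples):
        train_idx, test_idx = random_split(n_rows, test_fraction, rng)
        best_params, best_acc = None, float("-inf")
        for _ in range(n_iter):
            params = sample_params(rng)
            acc = evaluate(params, train_idx, test_idx)
            if acc > best_acc:  # keep the best configuration seen so far
                best_params, best_acc = params, acc
        best_per_sample.append((best_params, best_acc))
    return best_per_sample


# Toy stand-ins so the sketch runs without scikit-learn.
def toy_sampler(rng):
    return {"C": rng.randint(1, 7)}


def toy_evaluate(params, train_idx, test_idx):
    return 1.0 - abs(params["C"] - 4) / 10  # toy score that peaks at C = 4


results = random_search(1000, toy_sampler, toy_evaluate)
print([acc for _, acc in results])
```

Passing the sampler and evaluator in as functions keeps the search loop independent of the model, so the same loop could drive a different classifier or a different scoring metric.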