PyTorch implementation of Neural IMage Assessment (NIMA) by Hossein Talebi and Peyman Milanfar. You can learn more from this post on the Google Research Blog.

git clone https://github.com/truskovskiyk/nima.pytorch.git

cd nima.pytorch

virtualenv -p python3.6 env

source ./env/bin/activate

pip install -r requirements/linux_gpu.txt

Or you can just use the provided Dockerfile.

The model was trained on the AVA (Aesthetic Visual Analysis) dataset.

You can get it from here.

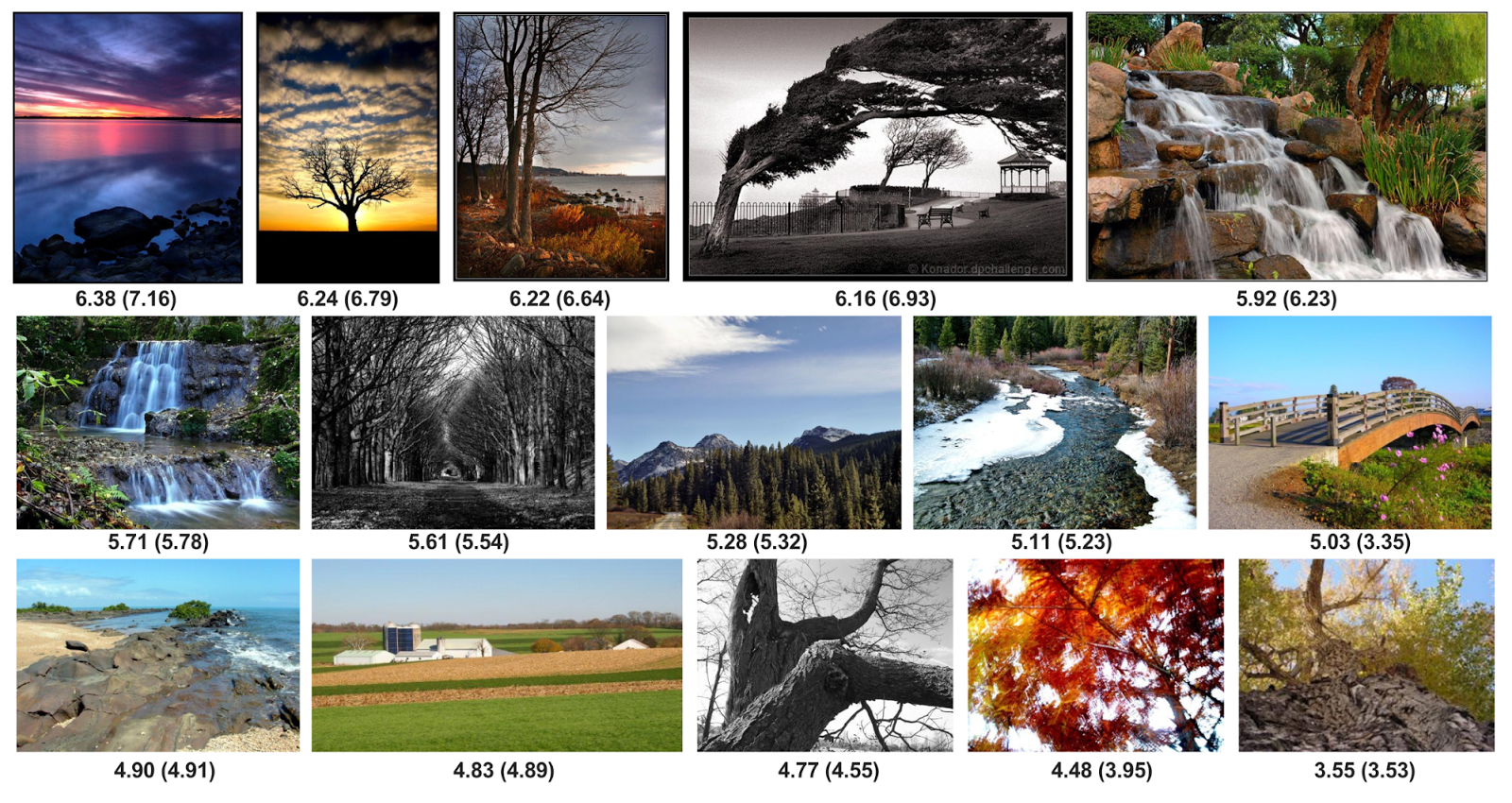

Here are some examples of images with theire scores

The model uses the MobileNetV2 architecture, as described in the paper Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation.
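In the NIMA paper, the base network's last layer is replaced by a 10-neuron fully connected layer with a softmax, so the model predicts a distribution over ratings 1 to 10 instead of a single number; the mean of that distribution serves as the aesthetic score. The plain-Python sketch below shows that final step, assuming the model's 10 raw outputs (logits) for one image are available as a list. `softmax` and `mean_and_std` are hypothetical helpers, not functions from this repository, and the logits are made-up illustration values.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw scores for the rating buckets into probabilities."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_and_std(probs: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard deviation of a distribution over ratings 1..N."""
    ratings = range(1, len(probs) + 1)
    mean = sum(r * p for r, p in zip(ratings, probs))
    variance = sum(p * (r - mean) ** 2 for r, p in zip(ratings, probs))
    return mean, math.sqrt(variance)


# Hypothetical logits for one image (illustration only, not real model output).
logits = [0.1, 0.3, 0.8, 1.5, 2.2, 2.0, 1.1, 0.4, 0.0, -0.3]
probs = softmax(logits)
mean, std = mean_and_std(probs)
print(f"score = {mean:.2f} +/- {std:.2f}")
```

Reporting the standard deviation alongside the mean is optional, but it indicates how much raters would be expected to disagree about the image.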

You can use this pretrained model, which achieves:

val_emd_loss = 0.079

test_emd_loss = 0.080

The model is deployed on Heroku at https://neural-image-assessment.herokuapp.com/. You can use it to test your own images, but note that it is a free service, so it cannot handle many requests. Here is a simple curl command to test the deployed model:

curl -X POST -F "file=@123.jpg" https://neural-image-assessment.herokuapp.com/api/get_scores

Please use our Swagger page for interactive testing.
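For scripted use, the same request can be built with Python's standard library. This is a sketch under assumptions: it mirrors the curl command (a multipart/form-data POST with a single `file` field), `build_score_request` is a hypothetical helper, and the response format of `/api/get_scores` is not documented here, so the usage example simply prints the raw response body.

```python
import mimetypes
import urllib.request
import uuid
from pathlib import Path

API_URL = "https://neural-image-assessment.herokuapp.com/api/get_scores"


def build_score_request(image_path: str, url: str = API_URL) -> urllib.request.Request:
    """Build a POST equivalent to `curl -X POST -F "file=@<image>" <url>` (hypothetical helper)."""
    path = Path(image_path)
    boundary = uuid.uuid4().hex  # random separator between multipart sections
    content_type = mimetypes.guess_type(path.name)[0] or "application/octet-stream"
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="file"; filename="{path.name}"\r\n'.encode(),
        f"Content-Type: {content_type}\r\n\r\n".encode(),
        path.read_bytes(),
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
    )


# Example (sends a network request; the free service may be slow or unavailable):
# req = build_score_request("123.jpg")
# with urllib.request.urlopen(req, timeout=30) as resp:
#     print(resp.read().decode("utf-8"))
```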

Clean and prepare dataset

export PYTHONPATH=.

python nima/cli.py prepare_dataset --path_to_ava_txt ./DATA/ava/AVA.txt \
--path_to_save_csv ./DATA/ava \
--path_to_images ./DATA/images/
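As a rough illustration of what preparing AVA involves (not this repository's actual code), each line of `AVA.txt` can be turned into an image id plus a normalized distribution of votes over ratings 1 to 10, dropping entries whose image file is missing. The sketch assumes the standard AVA layout of 15 whitespace-separated columns (index, image id, 10 vote counts, two semantic tag ids, challenge id) and that images are stored as `<image_id>.jpg`; `AvaRecord`, `parse_ava_line` and `iter_clean_ava` are hypothetical names.

```python
from pathlib import Path
from typing import Iterator, List, NamedTuple


class AvaRecord(NamedTuple):
    image_id: str
    distribution: List[float]  # fraction of votes for ratings 1..10


def parse_ava_line(line: str) -> AvaRecord:
    """Parse one AVA.txt line into an image id and a normalized vote distribution.

    Assumed layout (15 whitespace-separated columns): index, image id,
    10 vote counts for ratings 1..10, two semantic tag ids, challenge id.
    """
    fields = line.split()
    if len(fields) != 15:
        raise ValueError(f"expected 15 columns, got {len(fields)}: {line!r}")
    image_id = fields[1]
    counts = [int(c) for c in fields[2:12]]
    total = sum(counts)
    if total == 0:
        raise ValueError(f"image {image_id} has no votes")
    return AvaRecord(image_id, [c / total for c in counts])


def iter_clean_ava(path_to_ava_txt: str, path_to_images: str) -> Iterator[AvaRecord]:
    """Yield records whose image file exists (assumed naming: <image_id>.jpg)."""
    images_dir = Path(path_to_images)
    with open(path_to_ava_txt) as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            record = parse_ava_line(line)
            if (images_dir / f"{record.image_id}.jpg").exists():
                yield record
```

Normalizing the vote counts gives the ground-truth distribution that a distribution-matching loss compares against the model's softmax output.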

Train model

export PYTHONPATH=.

python nima/cli.py train_model --path_to_save_csv ./DATA/ava/ \
--path_to_images ./DATA/images \
--batch_size 16 \
--num_workers 2 \
--num_epoch 15 \
--init_lr 0.009 \
--experiment_dir_name firts0.009
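The `val_emd_loss` and `test_emd_loss` figures refer to the Earth Mover's Distance loss that the NIMA paper uses for training: for a ground-truth distribution p and a predicted distribution p̂ over N = 10 ordered ratings, EMD(p, p̂) = ((1/N) * sum over k of |CDF_p(k) - CDF_p̂(k)|^r)^(1/r), with r = 2 in the paper. The plain-Python sketch below implements that formula; it assumes this repository follows the paper's definition with r = 2 and averages the per-image loss over a batch. `emd_loss` and `batch_emd_loss` are hypothetical names.

```python
from itertools import accumulate
from typing import Sequence


def emd_loss(p_true: Sequence[float], p_pred: Sequence[float], r: float = 2.0) -> float:
    """Normalized EMD between two distributions over the same ordered buckets."""
    if len(p_true) != len(p_pred):
        raise ValueError("distributions must have the same number of buckets")
    cdf_true = list(accumulate(p_true))  # cumulative sums = CDF over ratings
    cdf_pred = list(accumulate(p_pred))
    n = len(cdf_true)
    total = sum(abs(a - b) ** r for a, b in zip(cdf_true, cdf_pred))
    return (total / n) ** (1.0 / r)


def batch_emd_loss(batch_true, batch_pred, r: float = 2.0) -> float:
    """Average per-image EMD over a batch (assumed reduction: plain mean)."""
    if len(batch_true) != len(batch_pred) or not batch_true:
        raise ValueError("batches must be non-empty and the same size")
    losses = [emd_loss(t, p, r) for t, p in zip(batch_true, batch_pred)]
    return sum(losses) / len(losses)
```

Because it compares cumulative distributions, EMD penalizes a prediction that puts mass on rating 9 more than one that puts it on rating 6 when the truth is rating 5, which plain cross-entropy over the 10 buckets would not.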

Use TensorBoard to track training progress:

tensorboard --logdir .

Validate the model on the validation and test datasets

export PYTHONPATH=.

python nima/cli.py validate_model --path_to_model_weight ./pretrain-model.pth \
--path_to_save_csv ./DATA/ava \
--path_to_images ./DATA/images \
--batch_size 16 \
--num_workers 4

Get scores for one image

export PYTHONPATH=.

python nima/cli.py get_image_score --path_to_model_weight ./pretrain-model.pth \
--path_to_image test_image.jpg

Contributions are welcome.

This project is licensed under the MIT License; see the LICENSE file for details.