The Engagement Meter is a web application that calculates and displays the engagement level of an audience observed by a webcam. It can also recognize attendees by associating their faces with individual user profiles.

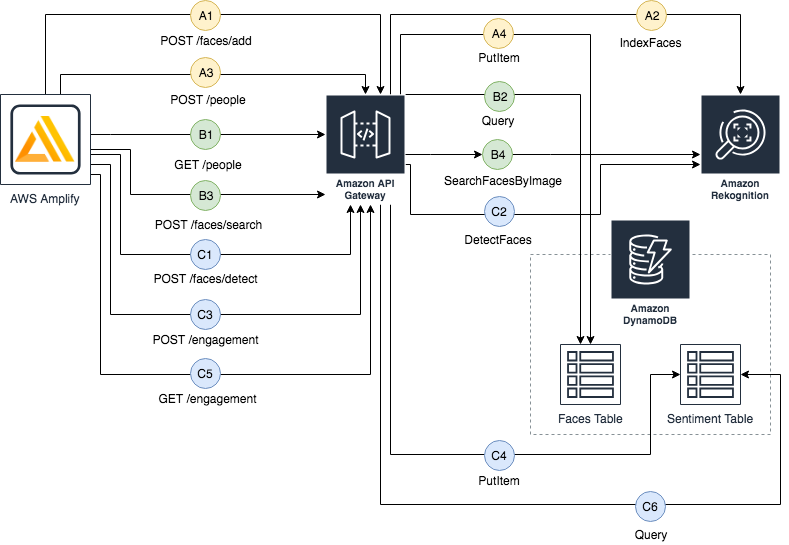

The Engagement Meter uses Amazon Rekognition for image and sentiment analysis, Amazon DynamoDB for storage, Amazon API Gateway and Amazon Cognito for the API, and Amazon S3, AWS Amplify, and React for the front-end layer.

There are three main user flows:

- the "add user" flow (A) is triggered when clicking the "Add user" button

- the "added users recognition" flow (B) and the "sentiment analysis" flow (C) are both triggered when clicking the "Start Rekognition" button and repeat until the "Stop Rekognition" button is clicked.

The diagram below represents the API calls performed by Amplify, which takes care of authenticating all the calls to the API Gateway using Cognito.

In the "add user" flow (A), Amplify makes a POST /faces/add request to the API Gateway, including the uploaded picture and an autogenerated unique identifier (known as ExternalImageId). The API Gateway then calls the IndexFaces action in Amazon Rekognition. After that, Amplify makes a POST /people request to the API Gateway, including the ExternalImageId and some extra metadata (Name and Job Title), and the API Gateway writes that data to the Faces table in Amazon DynamoDB. To learn more about IndexFaces, see the Rekognition documentation.
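The two calls in this flow can be sketched as plain request descriptions. This is only an illustration of how the same ExternalImageId ties the indexed face to the stored profile: the paths come from the description above, while the function name and the JSON field names (`externalImageId`, `image`, `name`, `jobTitle`) are assumptions, not the application's actual payload format.

```python
import base64
import uuid


def build_add_user_requests(image_bytes, name, job_title):
    """Describe the two requests of the "add user" flow.

    Field names in the bodies are hypothetical; only the paths are
    taken from the README.
    """
    # Autogenerated unique identifier shared by both requests.
    external_image_id = str(uuid.uuid4())

    add_face = {
        "method": "POST",
        "path": "/faces/add",
        "body": {
            "externalImageId": external_image_id,
            # Binary image data is base64-encoded so it fits in a JSON body.
            "image": base64.b64encode(image_bytes).decode("ascii"),
        },
    }
    add_person = {
        "method": "POST",
        "path": "/people",
        "body": {
            "externalImageId": external_image_id,
            "name": name,
            "jobTitle": job_title,
        },
    }
    return add_face, add_person


if __name__ == "__main__":
    face_req, person_req = build_add_user_requests(b"\x89PNG", "Ada", "Engineer")
    print(face_req["path"], person_req["path"])
```

Generating the identifier on the client means the second request does not need to wait for any value returned by the first one.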

In the "added users recognition" flow (B), Amplify makes a GET /people request to the API Gateway, which then queries the Faces table in Amazon DynamoDB. If any people have been registered, Amplify makes another call to POST /faces/search, including a frame captured from the webcam. The API Gateway then calls the SearchFacesByImage action in Amazon Rekognition. If any previously registered person is recognized, the service provides details about the matches, including each face's coordinates and confidence. In this case, the UI displays a welcome message showing the recognized users' names. To learn more about SearchFacesByImage, see the Rekognition documentation.
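As a rough sketch of the matching step, the function below joins a SearchFacesByImage-style response with the registered people to obtain the names for the welcome message. The `FaceMatches`, `Similarity`, `Face`, and `ExternalImageId` keys follow the Rekognition response format; the shape of the people records, the function name, and the similarity threshold are assumptions made for illustration.

```python
from __future__ import annotations


def recognized_names(search_response: dict, people: list[dict],
                     min_similarity: float = 80.0) -> list[str]:
    """Return the names of registered people found in a search response.

    `people` is assumed to be a list of records with "ExternalImageId"
    and "Name" keys (hypothetical shape of the GET /people result).
    """
    names_by_id = {p["ExternalImageId"]: p["Name"] for p in people}
    names: list[str] = []
    for match in search_response.get("FaceMatches", []):
        external_id = match["Face"].get("ExternalImageId")
        name = names_by_id.get(external_id)
        # Skip weak matches, unknown identifiers, and duplicates.
        if name and match["Similarity"] >= min_similarity and name not in names:
            names.append(name)
    return names


if __name__ == "__main__":
    people = [{"ExternalImageId": "id-1", "Name": "Ada"}]
    response = {"FaceMatches": [
        {"Similarity": 97.5, "Face": {"ExternalImageId": "id-1"}},
    ]}
    print(recognized_names(response, people))
```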

In the "sentiment analysis" flow (C), Amplify makes two parallel calls to the API Gateway (represented here sequentially for simplicity). First, Amplify makes a POST /faces/detect request with a frame captured from the webcam to the API Gateway, which then calls the DetectFaces action in Amazon Rekognition. If any face is detected, the service provides details about each detected face, including physical characteristics and sentiments. In that case, a short recap is shown on the UI for each detected person. Then Amplify makes a POST /engagement request with some of the recognized sentiments (Angry, Calm, Happy, Sad, Surprised) to the API Gateway, which writes that data to the Sentiment table in DynamoDB. In parallel, Amplify makes a GET /engagement request to the API Gateway, which then queries the Sentiment table in DynamoDB to retrieve an aggregate of all the sentiments recorded during the last hour, in order to calibrate and draw the meter. To learn more about DetectFaces, see the Rekognition documentation.
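The sentiment handling can be sketched in two small steps: picking the five tracked sentiments out of one DetectFaces face detail, and aggregating the records of the last hour. The `Emotions`, `Type`, and `Confidence` keys follow the Rekognition response format (emotions are only returned when the request asks for them through the `Attributes` parameter). The record shape, the function names, and the choice of a plain average as the aggregate are assumptions; the application may aggregate differently.

```python
from datetime import datetime, timedelta, timezone

# The five sentiments named in the README, in Rekognition's spelling.
TRACKED = ("ANGRY", "CALM", "HAPPY", "SAD", "SURPRISED")


def extract_sentiments(face_detail):
    """Pick the tracked sentiments from one "FaceDetails" entry."""
    scores = {e["Type"]: e["Confidence"] for e in face_detail.get("Emotions", [])}
    return {t.capitalize(): scores.get(t, 0.0) for t in TRACKED}


def aggregate_last_hour(records, now):
    """Average each sentiment over the records from the last hour.

    Each record is assumed to look like
    {"timestamp": datetime, "sentiments": {"Angry": ..., ...}}.
    """
    cutoff = now - timedelta(hours=1)
    recent = [r for r in records if r["timestamp"] >= cutoff]
    names = [t.capitalize() for t in TRACKED]
    if not recent:
        return {name: 0.0 for name in names}
    return {
        name: sum(r["sentiments"][name] for r in recent) / len(recent)
        for name in names
    }


if __name__ == "__main__":
    detail = {"Emotions": [{"Type": "HAPPY", "Confidence": 90.0},
                           {"Type": "CALM", "Confidence": 8.0}]}
    now = datetime.now(timezone.utc)
    record = {"timestamp": now, "sentiments": extract_sentiments(detail)}
    print(aggregate_last_hour([record], now))
```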

To deploy the sample application you will require an AWS account. If you don’t already have an AWS account, create one at https://aws.amazon.com by following the on-screen instructions. Your access to the AWS account must have IAM permissions to launch AWS CloudFormation templates that create IAM roles.

To use the sample application you will require a modern browser and a webcam.

The demo application is deployed as an AWS CloudFormation template.

Note

You are responsible for the cost of the AWS services used while running this sample deployment. There is no additional cost for using this sample. For full details, see the pricing pages for each AWS service you will be using in this sample. Prices are subject to change.

- Deploy the latest CloudFormation template by following the link below for your preferred AWS region:

- If prompted, log in using your AWS account credentials.

- You should see a screen titled "Create Stack" at the "Specify template" step. The fields specifying the CloudFormation template are pre-populated. Click the Next button at the bottom of the page.

- On the "Specify stack details" screen you may customize the following parameters of the CloudFormation stack:

  - Stack Name: (Default: EngagementMeter) This is the name used to refer to this stack in CloudFormation once deployed. The value must be 15 characters or fewer.

  - CollectionId: (Default: RekogDemo) AWS resources are named based on the value of this parameter. You must customize this if you are launching more than one instance of the stack within the same account.

  - CreateCloudFrontDistribution: (Default: false) Creates a CloudFront distribution for accessing the web interface of the demo. This must be enabled if S3 Block Public Access is enabled at an account level. Note: Creating a CloudFront distribution may significantly increase the deploy time (from approximately 5 minutes to over 30 minutes).

  When completed, click Next.

- Configure stack options if desired, then click Next.

- On the review screen, you must check the boxes for:

  - "I acknowledge that AWS CloudFormation might create IAM resources"

  - "I acknowledge that AWS CloudFormation might create IAM resources with custom names"

  These are required to allow CloudFormation to create a Role that grants access to the resources needed by the stack, and to name the resources dynamically.

- Click Create Change Set.

- On the Change Set screen, click Execute to launch your stack.

  - You may need to wait for the Execution status of the change set to become "AVAILABLE" before the "Execute" button becomes available.

- Wait for the CloudFormation stack to launch. Completion is indicated when the "Stack status" is "CREATE_COMPLETE".

  - You can monitor the stack creation progress in the "Events" tab.

- Note the URL displayed in the Outputs tab for the stack. This is used to access the application.
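For scripted deployments, the stack parameters and the completion check from the steps above could be modeled as in the sketch below. The parameter names, defaults, and the 15-character limit come from the steps above; the function names are hypothetical, and passing the boolean as the strings "true" and "false" is an assumption about the template. The list of `ParameterKey` and `ParameterValue` pairs is the shape the CloudFormation API expects for stack parameters.

```python
def build_stack_parameters(stack_name="EngagementMeter",
                           collection_id="RekogDemo",
                           create_cloudfront=False):
    """Validate the stack name and return CloudFormation-style parameters."""
    if not 1 <= len(stack_name) <= 15:
        raise ValueError("Stack name must be between 1 and 15 characters")
    return [
        {"ParameterKey": "CollectionId", "ParameterValue": collection_id},
        {
            "ParameterKey": "CreateCloudFrontDistribution",
            # Assumption: the template takes the flag as a string.
            "ParameterValue": "true" if create_cloudfront else "false",
        },
    ]


def is_deployed(stack_status):
    """Return True once the stack reports the status named in the steps."""
    return stack_status == "CREATE_COMPLETE"


if __name__ == "__main__":
    print(build_stack_parameters(collection_id="RekogDemo2"))
    print(is_deployed("CREATE_IN_PROGRESS"))
```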

The application is accessed using a web browser. The address is the URL output from the CloudFormation stack created during the Deployment steps.

- When you access the application, the browser will ask for permission to use your camera. You will need to click "Allow" for the application to work.

- Click "Add a new user" if you wish to add new profiles.

- Click "Start Rekognition" to start the engine. The app will start displaying information about the recognized faces and will calibrate the meter.

To remove the application, open the AWS CloudFormation Console, click the Engagement Meter project, right-click and select "Delete Stack". Your stack will take some time to be deleted. You can track its progress in the "Events" tab. When it is done, the status will change from "DELETE_IN_PROGRESS" to "DELETE_COMPLETE". It will then disappear from the list.

The contributing guidelines contain instructions on how to run the front-end locally and make changes to the back-end stack.

Contributions are more than welcome. Please read the code of conduct and the contributing guidelines.

This sample code is made available under a modified MIT license. See the LICENSE file.