Reference-aware automatic speech evaluation toolkit using self-supervised speech representations ([paper](https://arxiv.org/abs/2401.16812)).

Abstract:

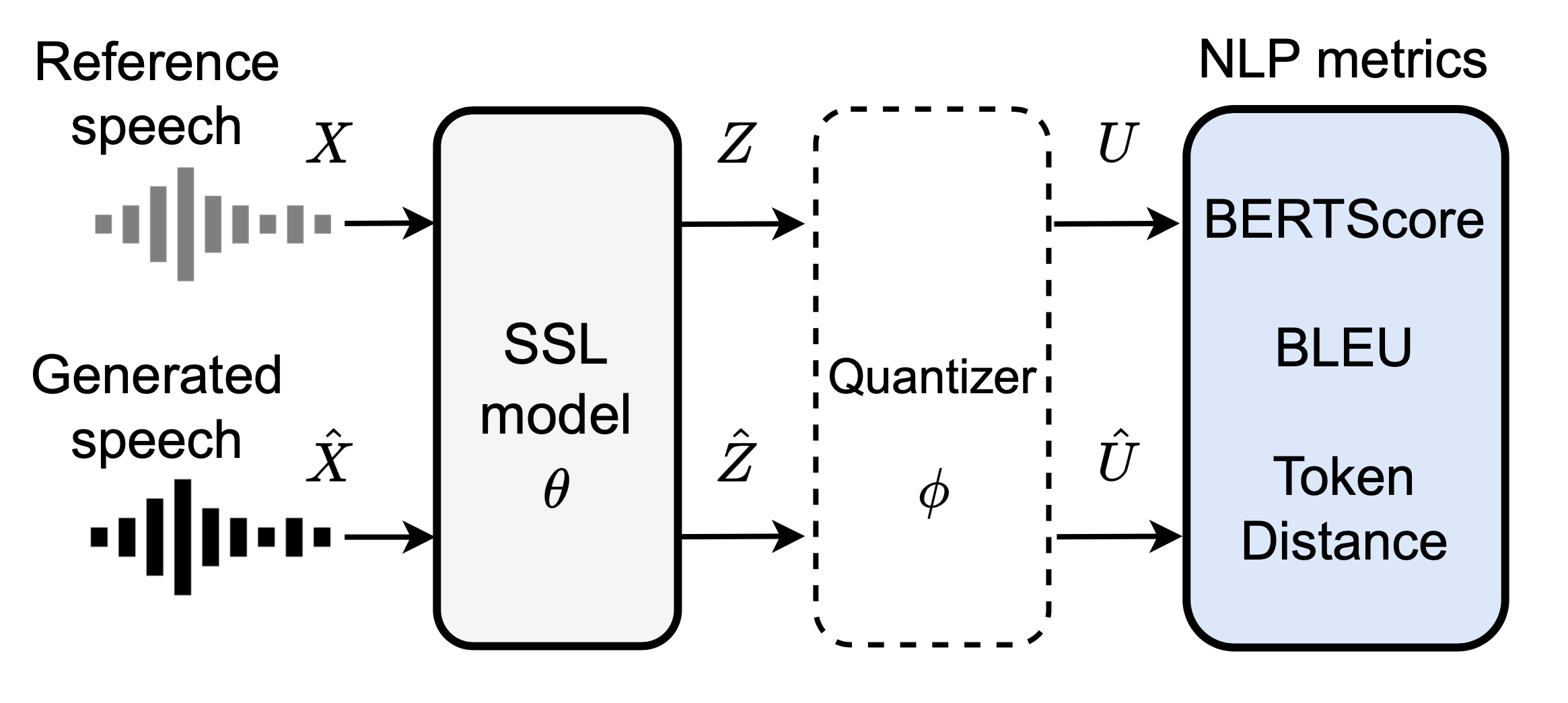

While subjective assessments have been the gold standard for evaluating speech generation, objective measures such as Mel Cepstral Distortion (MCD) and Mean Opinion Score (MOS) prediction models have also been used. Due to their cost efficiency, there is a need to establish objective measures that are highly correlated with human subjective judgments. This paper proposes reference-aware automatic evaluation methods for speech generation inspired by text generation metrics. The proposed SpeechBERTScore calculates the BERTScore for self-supervised speech feature sequences obtained from the generated speech and reference speech. We also propose to use self-supervised discrete speech tokens to compute objective measures such as SpeechBLEU. The experimental evaluations on synthesized speech show that our method correlates better with human subjective ratings than MCD and a state-of-the-art MOS prediction model. Furthermore, our method is found to be effective for noisy speech and has cross-lingual applicability.

To install discrete-speech-metrics, run the following. (You may need to install pypesq separately; see the project's Colab notebook for details.)

```shell
pip3 install git+https://github.com/Takaaki-Saeki/DiscreteSpeechMetrics.git
```

Alternatively, you can install the toolkit from a clone of the repository:

```shell
git clone https://github.com/Takaaki-Saeki/DiscreteSpeechMetrics.git
cd DiscreteSpeechMetrics
pip3 install .
```

The current DiscreteSpeechMetrics provides three new metrics: SpeechBERTScore, SpeechBLEU and SpeechTokenDistance. These metrics can be used even when the reference and generated speech have different sequence lengths.

NOTE: We recommend using SpeechBERTScore, as it showed the highest correlation with human subjective judgments in the paper's evaluation.

SpeechBERTScore calculates BERTScore on dense self-supervised speech features of the generated and reference speech. Usage with the best setting from the paper is as follows.
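Before the toolkit example, the core computation can be illustrated with a minimal NumPy sketch of greedy-matching BERTScore on two feature sequences of different lengths: each frame is matched to its most cosine-similar frame in the other sequence, precision averages these best matches over the generated frames, recall averages them over the reference frames, and F1 is their harmonic mean. The random arrays stand in for self-supervised features (such as WavLM layer outputs), and `bertscore_from_features` is a hypothetical name. This is not the toolkit's implementation, and it omits BERTScore's optional IDF weighting and baseline rescaling.

```python
import numpy as np


def bertscore_from_features(ref_feats: np.ndarray, gen_feats: np.ndarray):
    """Greedy-matching BERTScore between two frame-level feature sequences.

    ref_feats: (T_ref, D) reference frames; gen_feats: (T_gen, D) generated frames.
    T_ref and T_gen may differ. Returns (precision, recall, f1).
    """
    # L2-normalise every frame so that dot products become cosine similarities.
    ref = ref_feats / np.linalg.norm(ref_feats, axis=1, keepdims=True)
    gen = gen_feats / np.linalg.norm(gen_feats, axis=1, keepdims=True)
    sim = gen @ ref.T  # (T_gen, T_ref) cosine-similarity matrix
    # Precision: each generated frame is matched to its closest reference frame.
    precision = float(sim.max(axis=1).mean())
    # Recall: each reference frame is matched to its closest generated frame.
    recall = float(sim.max(axis=0).mean())
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


rng = np.random.default_rng(0)
ref_feats = rng.standard_normal((50, 1024))  # stand-in for 50 reference frames
gen_feats = rng.standard_normal((47, 1024))  # a different length is fine
print(bertscore_from_features(ref_feats, gen_feats))
# Identical inputs: all three scores are 1.0 up to floating-point rounding.
print(bertscore_from_features(ref_feats, ref_feats))
```

Because every frame is matched independently to its best counterpart, no explicit time alignment is needed, which is one reason such a score can compare sequences of different lengths.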

```python
import numpy as np
from discrete_speech_metrics import SpeechBERTScore

# Example reference and generated waveforms.
ref_wav = np.random.rand(10009)
gen_wav = np.random.rand(10003)

metrics = SpeechBERTScore(
    sr=16000,
    model_type="wavlm-large",
    layer=14,
    use_gpu=True)

precision, _, _ = metrics.score(ref_wav, gen_wav)
# precision: 0.957
```

SpeechBLEU calculates BLEU on discrete speech tokens of the generated and reference speech. Usage with the best setting from the paper is as follows.
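Before the toolkit example, the idea can be illustrated with a self-contained Python sketch that treats each token sequence like a sentence of words. It collapses consecutive repeated tokens (our assumption of what `remove_repetition=True` does) and computes unsmoothed sentence-level BLEU against a single reference, with uniform weights over n-gram orders up to 2 (assuming `n_ngram` is the maximum n-gram order) and the usual brevity penalty. The token IDs are made up rather than produced from HuBERT features, the helper names are hypothetical, and the toolkit's exact BLEU variant and smoothing may differ.

```python
import math
from collections import Counter
from itertools import groupby


def dedup(tokens):
    """Collapse runs of identical consecutive tokens, e.g. [5, 5, 3] -> [5, 3]."""
    return [tok for tok, _ in groupby(tokens)]


def ngrams(tokens, n):
    """Count all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(ref, hyp, max_n=2):
    """Unsmoothed sentence BLEU of hyp against a single ref, uniform weights."""
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hyp, n)
        ref_counts = ngrams(ref, n)
        # Clipped matches: a hyp n-gram counts at most as often as it occurs in ref.
        matches = sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
        total = sum(hyp_counts.values())
        if matches == 0 or total == 0:
            return 0.0
        log_precisions.append(math.log(matches / total))
    # Brevity penalty: discourages hypotheses shorter than the reference.
    bp = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return bp * math.exp(sum(log_precisions) / max_n)


ref_tokens = dedup([12, 12, 7, 7, 7, 90, 3, 3, 41, 41, 15])  # -> [12, 7, 90, 3, 41, 15]
gen_tokens = dedup([12, 7, 7, 90, 90, 3, 41, 8, 15, 15])     # -> [12, 7, 90, 3, 41, 8, 15]
print(sentence_bleu(ref_tokens, gen_tokens, max_n=2))        # sqrt(4/7), about 0.756
```

Here the deduplicated generated sequence matches 6 of its 7 unigrams and 4 of its 6 bigrams, and it is longer than the reference (so the brevity penalty is 1), giving sqrt(6/7 × 4/6) = sqrt(4/7).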

```python
import numpy as np
from discrete_speech_metrics import SpeechBLEU

# Example reference and generated waveforms.
ref_wav = np.random.rand(10009)
gen_wav = np.random.rand(10003)

metrics = SpeechBLEU(
    sr=16000,
    model_type="hubert-base",
    vocab=200,
    layer=11,
    n_ngram=2,
    remove_repetition=True,
    use_gpu=True)

bleu = metrics.score(ref_wav, gen_wav)
# bleu: 0.148
```

SpeechTokenDistance calculates character-level distance measures on discrete speech tokens of the generated and reference speech. Usage with the best setting from the paper is as follows.
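Before the toolkit example, the Jaro-Winkler measure used in this setting can be sketched on token lists by treating each discrete token as a character. Jaro similarity counts tokens that match within a sliding window and penalizes transpositions; the Winkler variant boosts pairs that share a prefix, here with the common textbook defaults of scale 0.1 and a prefix of at most 4 tokens (assumed, not taken from the toolkit). Jaro-Winkler is conventionally a similarity in [0, 1], with 1 for identical sequences; whether the toolkit reports it as-is or converts it into a distance is not stated here. Function names and token IDs are hypothetical.

```python
def jaro(s, t):
    """Jaro similarity between two token sequences (lists of ints or characters)."""
    if not s and not t:
        return 1.0
    if not s or not t:
        return 0.0
    # Tokens match only if equal and within this distance of each other.
    window = max(max(len(s), len(t)) // 2 - 1, 0)
    s_matched = [False] * len(s)
    t_matched = [False] * len(t)
    matches = 0
    for i, tok in enumerate(s):
        for j in range(max(0, i - window), min(len(t), i + window + 1)):
            if not t_matched[j] and t[j] == tok:
                s_matched[i] = t_matched[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Transpositions: matched tokens that appear in a different order, halved.
    s_seq = [tok for tok, m in zip(s, s_matched) if m]
    t_seq = [tok for tok, m in zip(t, t_matched) if m]
    transpositions = sum(a != b for a, b in zip(s_seq, t_seq)) / 2
    return (matches / len(s) + matches / len(t) + (matches - transpositions) / matches) / 3


def jaro_winkler(s, t, prefix_scale=0.1, max_prefix=4):
    """Jaro similarity boosted for sequences that share a common prefix."""
    sim = jaro(s, t)
    prefix = 0
    for a, b in zip(s[:max_prefix], t[:max_prefix]):
        if a != b:
            break
        prefix += 1
    return sim + prefix * prefix_scale * (1 - sim)


ref_tokens = [12, 7, 90, 3, 41, 15]
gen_tokens = [12, 7, 3, 90, 41, 8, 15]
print(jaro_winkler(ref_tokens, gen_tokens))
print(jaro_winkler(list("MARTHA"), list("MARHTA")))  # textbook example, about 0.961
```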

```python
import numpy as np
from discrete_speech_metrics import SpeechTokenDistance

# Example reference and generated waveforms.
ref_wav = np.random.rand(10009)
gen_wav = np.random.rand(10003)

metrics = SpeechTokenDistance(
    sr=16000,
    model_type="hubert-base",
    vocab=200,
    layer=6,
    distance_type="jaro-winkler",
    remove_repetition=False,
    use_gpu=True)

distance = metrics.score(ref_wav, gen_wav)
# distance: 0.548
```

MCD is a common metric for speech synthesis that measures how different two mel-cepstral sequences are. Dynamic time warping (DTW) is used to align the generated and reference features, which may have different sequence lengths. The implementation basically follows the evaluation script in ESPnet.
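Before the toolkit example, the computation can be sketched in NumPy on mel-cepstral matrices that are assumed to be already extracted; the toolkit and ESPnet derive them from waveforms, whereas the random arrays here are stand-ins. The sketch aligns the two sequences with plain DTW (Euclidean frame distance, O(T_ref × T_gen) time) and averages the per-frame distortion (10 / ln 10) × sqrt(2 × sum_d (c_d - c'_d)^2), in dB, over the aligned frame pairs. Dropping the 0th (energy) coefficient is a common convention that we assume here; the toolkit's feature extraction, DTW variant and treatment of c0 may differ, and the function names are hypothetical.

```python
import numpy as np


def dtw_path(x: np.ndarray, y: np.ndarray):
    """Return the DTW alignment path between frame sequences x (N, D) and y (M, D)."""
    n, m = len(x), len(y)
    # Pairwise Euclidean distances between all frames of x and y.
    dist = np.linalg.norm(x[:, None, :] - y[None, :, :], axis=-1)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost[i, j] = dist[i - 1, j - 1] + min(
                cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    # Backtrack from the last frame pair to recover the aligned pairs.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = np.argmin([cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1]])
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return path[::-1]


def mcd(ref_mcep: np.ndarray, gen_mcep: np.ndarray, exclude_c0: bool = True) -> float:
    """Mean mel-cepstral distortion (dB) over DTW-aligned frame pairs."""
    if exclude_c0:
        ref_mcep, gen_mcep = ref_mcep[:, 1:], gen_mcep[:, 1:]
    path = dtw_path(ref_mcep, gen_mcep)
    ref_idx, gen_idx = map(list, zip(*path))
    diff2 = np.sum((ref_mcep[ref_idx] - gen_mcep[gen_idx]) ** 2, axis=1)
    return float(np.mean(10.0 / np.log(10.0) * np.sqrt(2.0 * diff2)))


rng = np.random.default_rng(0)
ref_mcep = rng.standard_normal((80, 25))  # stand-in: 80 frames x 25 coefficients
gen_mcep = rng.standard_normal((75, 25))  # different number of frames
print(mcd(ref_mcep, gen_mcep))
print(mcd(ref_mcep, ref_mcep))  # identical inputs give 0.0
```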

```python
import numpy as np
from discrete_speech_metrics import MCD

# Example reference and generated waveforms.
ref_wav = np.random.rand(10009)
gen_wav = np.random.rand(10003)

metrics = MCD(sr=16000)
mcd = metrics.score(ref_wav, gen_wav)
# mcd: 0.724
```

Log F0 RMSE is a common metric for evaluating the prosody of synthetic speech; it calculates the root mean squared difference between the log F0 sequences of the generated and reference speech. DTW is used to align the generated and reference features, which may have different sequence lengths. The implementation basically follows the evaluation script in ESPnet.
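Before the toolkit example, here is a NumPy sketch of the metric on F0 contours that are assumed to be already extracted by a pitch tracker (short made-up arrays in Hz, with 0 marking unvoiced frames). As a simplification, it keeps only voiced frames, aligns their log F0 values with a 1-D DTW, and takes the root mean squared difference over the aligned pairs. ESPnet-style scripts may instead align on spectral features and handle voicing differently, and the function names are hypothetical.

```python
import numpy as np


def dtw_path_1d(x: np.ndarray, y: np.ndarray):
    """DTW alignment path between two 1-D sequences, using absolute difference."""
    n, m = len(x), len(y)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost[i, j] = abs(x[i - 1] - y[j - 1]) + min(
                cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    # Backtrack from the last pair, preferring the diagonal step on ties.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = np.argmin([cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1]])
        i, j = (i - 1, j - 1) if step == 0 else (i - 1, j) if step == 1 else (i, j - 1)
    return path[::-1]


def log_f0_rmse(ref_f0: np.ndarray, gen_f0: np.ndarray) -> float:
    """RMSE between DTW-aligned log F0 contours, using voiced frames (F0 > 0) only."""
    ref_log = np.log(ref_f0[ref_f0 > 0])
    gen_log = np.log(gen_f0[gen_f0 > 0])
    path = dtw_path_1d(ref_log, gen_log)
    diffs = np.array([ref_log[i] - gen_log[j] for i, j in path])
    return float(np.sqrt(np.mean(diffs ** 2)))


ref_f0 = np.array([0, 0, 120, 122, 125, 130, 128, 0, 110, 112, 0])  # Hz, 0 = unvoiced
gen_f0 = np.array([0, 118, 121, 126, 131, 0, 0, 109, 113, 115])
print(log_f0_rmse(ref_f0, gen_f0))
print(log_f0_rmse(ref_f0, ref_f0))  # identical contours give 0.0
```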

```python
import numpy as np
from discrete_speech_metrics import LogF0RMSE

# Example reference and generated waveforms.
ref_wav = np.random.rand(10009)
gen_wav = np.random.rand(10003)

metrics = LogF0RMSE(sr=16000)
logf0rmse = metrics.score(ref_wav, gen_wav)
# logf0rmse: 0.305
```

PESQ is a reference-aware objective metric for evaluating perceptual speech quality. It assumes that the generated and reference speech signals are time-aligned. PyPESQ is used internally.

```python
import numpy as np
from discrete_speech_metrics import PESQ

# Example reference and generated waveforms.
# PESQ expects time-aligned signals, so the two lengths must match.
ref_wav = np.random.rand(10000)
gen_wav = np.random.rand(10000)

metrics = PESQ(sr=16000)
pesq = metrics.score(ref_wav, gen_wav)
# pesq: 2.12
```

UTMOS is an automatic mean opinion score (MOS) prediction model that predicts the subjective MOS of generated speech. It does not require reference speech samples. SpeechMOS is used internally.

```python
import numpy as np
from discrete_speech_metrics import UTMOS

# Example generated waveform (no reference is needed).
gen_wav = np.random.rand(10003)

metrics = UTMOS(sr=16000)
utmos = metrics.score(gen_wav)
# utmos: 3.13
```

If you use SpeechBERTScore, SpeechBLEU or SpeechTokenDistance, please cite the following preprint.

```bibtex
@article{saeki2024spbertscore,
  title={{SpeechBERTScore}: Reference-Aware Automatic Evaluation of Speech Generation Leveraging NLP Evaluation Metrics},
  author={Takaaki Saeki and Soumi Maiti and Shinnosuke Takamichi and Shinji Watanabe and Hiroshi Saruwatari},
  journal={arXiv preprint arXiv:2401.16812},
  year={2024}
}
```

- Takaaki Saeki (The University of Tokyo, Japan)

- Soumi Maiti (Carnegie Mellon University, USA)

- Shinnosuke Takamichi (The University of Tokyo, Japan)

- Shinji Watanabe (Carnegie Mellon University, USA)

- Hiroshi Saruwatari (The University of Tokyo, Japan)