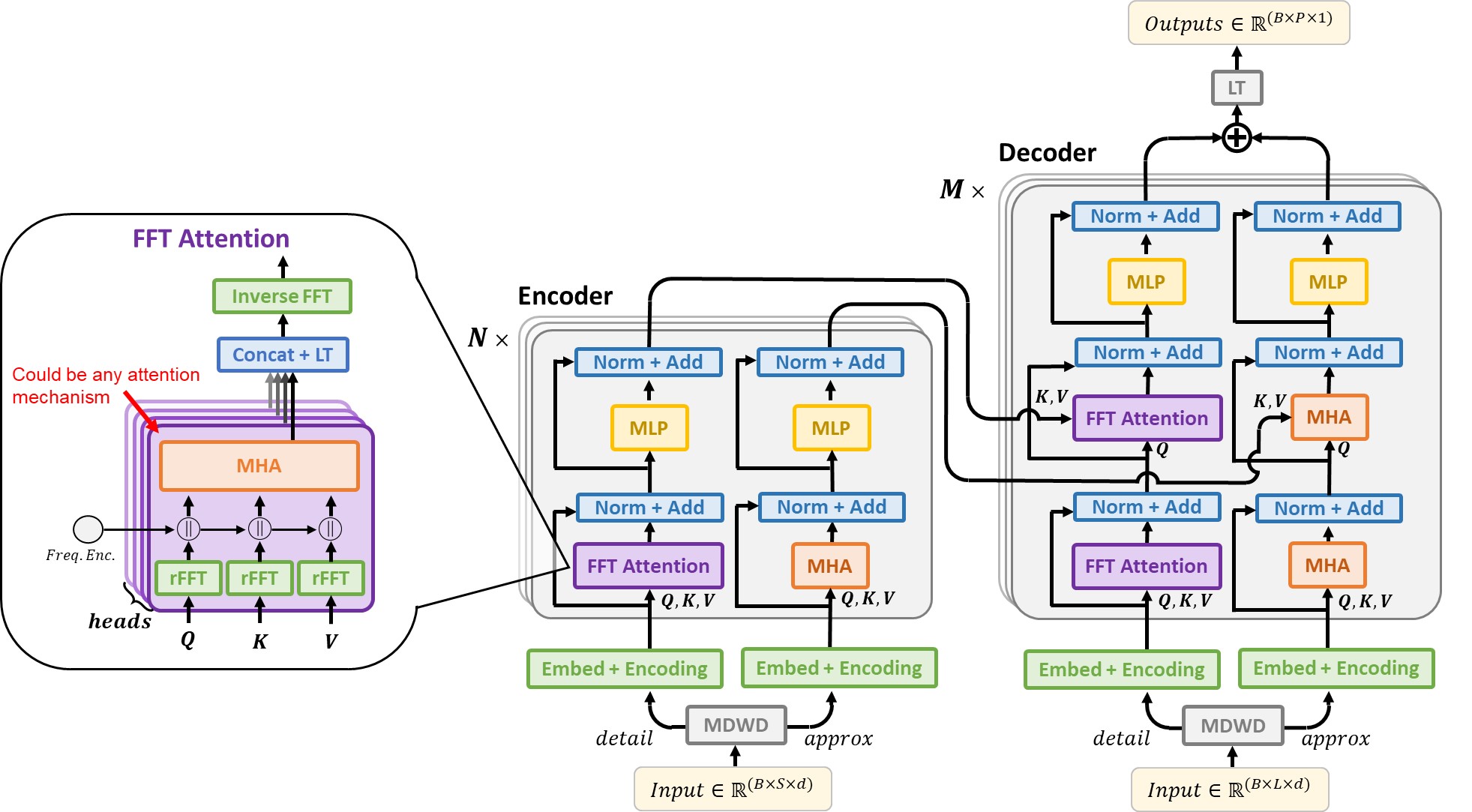

We propose the FFTransformer, a new variant of the Transformer architecture. It combines wavelet decomposition with an adapted Transformer consisting of two streams: one stream analyses periodic components in the frequency domain using an adapted attention mechanism based on the fast Fourier transform (FFT), while the other, similar to the vanilla Transformer, learns trend components. So far, the model has only been tested for spatio-temporal multi-step wind speed forecasting [paper]. This repository contains code for our proposed model, as well as the Transformer, LogSparse Transformer, Informer, Autoformer, LSTM, MLP and a persistence model. All architectures are also implemented in a spatio-temporal setting, where the respective models are used as update functions in GNNs. Scripts for running the models follow the same style as in the Autoformer repo.

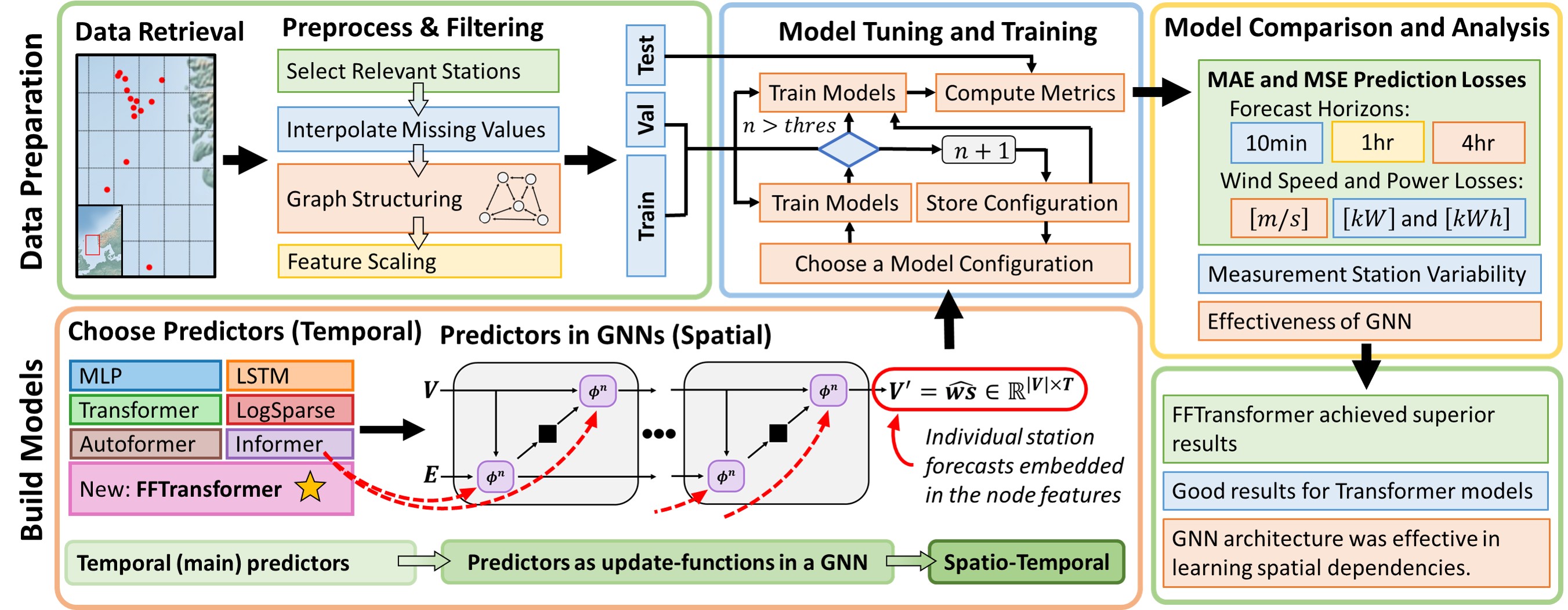

An overview of the methodology used in the paper can be seen in Fig. 1, and the FFTransformer model, shown for an encoder-decoder setting, in Fig. 2.

Figure 2. FFTransformer Model.
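To make the two-stream idea more concrete, the snippet below splits a toy series into a smooth trend part and an oscillating part and then inspects the oscillating part with an FFT. This is only a minimal sketch: it assumes a single-level Haar wavelet and uses NumPy, whereas the wavelet family, decomposition depth and attention mechanism in the actual model may differ. All function names (`haar_decompose`, `haar_reconstruct`, `split_trend_periodic`) are hypothetical and not part of this repository.

```python
import numpy as np


def haar_decompose(x):
    """Single-level Haar transform: return (approximation, detail) coefficients."""
    x = np.asarray(x, dtype=float)
    if x.size % 2:
        raise ValueError("series length must be even")
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2.0)  # low-pass: local averages
    detail = (even - odd) / np.sqrt(2.0)  # high-pass: local differences
    return approx, detail


def haar_reconstruct(approx, detail):
    """Inverse of haar_decompose."""
    even = (approx + detail) / np.sqrt(2.0)
    odd = (approx - detail) / np.sqrt(2.0)
    x = np.empty(even.size * 2)
    x[0::2], x[1::2] = even, odd
    return x


def split_trend_periodic(x):
    """Split a series into a trend part and a periodic part that sum to x."""
    approx, detail = haar_decompose(x)
    trend = haar_reconstruct(approx, np.zeros_like(detail))
    periodic = haar_reconstruct(np.zeros_like(approx), detail)
    return trend, periodic


# Toy "wind speed": slow linear drift plus a fast oscillation (assumed data).
t = np.arange(64)
series = 8.0 + 0.05 * t + 0.5 * np.cos(np.pi * t)

trend, periodic = split_trend_periodic(series)

# The periodic stream would be analysed in the frequency domain.
spectrum = np.abs(np.fft.rfft(periodic))
dominant = int(np.argmax(spectrum))  # index of the strongest frequency bin
```

Because the Haar transform is linear, `trend + periodic` recovers the original series, so nothing is lost by routing the two parts to separate streams.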

The dataset contains offshore meteorological measurements from the Norwegian continental shelf. The data was made available by the Norwegian Meteorological Institute and can be downloaded using the Frost API. A small subset of the full dataset is provided in `./dataset_example/WindData/dataset` to illustrate the data structures required by the wind data_loaders.
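As a rough starting point for fetching data, the sketch below builds a query URL for the Frost observations endpoint using only the standard library. It is not the download script used for the paper. The station ID `SN00000` is a placeholder, the element names and time range are illustrative assumptions, and `build_frost_query` is a hypothetical helper. To our knowledge Frost also requires a client ID for authentication, so please check the Frost documentation before sending requests.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Observations endpoint of the Frost API (verify against the Frost docs).
FROST_OBSERVATIONS_URL = "https://frost.met.no/observations/v0.jsonld"


def build_frost_query(sources, elements, start, end):
    """Return a query URL for the given stations, elements and time range.

    sources:  list of station IDs (placeholders in this example)
    elements: list of weather element names
    start/end: ISO dates delimiting the reference time interval
    """
    params = {
        "sources": ",".join(sources),
        "elements": ",".join(elements),
        "referencetime": f"{start}/{end}",
    }
    return f"{FROST_OBSERVATIONS_URL}?{urlencode(params)}"


url = build_frost_query(
    sources=["SN00000"],  # placeholder station ID, replace with a real one
    elements=["wind_speed", "wind_from_direction"],
    start="2020-01-01",
    end="2020-01-02",
)

# Decode the query string again to check what would be sent.
parsed = parse_qs(urlsplit(url).query)
```

The resulting `url` can be passed to any HTTP client together with your credentials. The response would then need to be converted into the structure shown in the example dataset.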

- Install Python 3.6 and the required packages in `./requirements.txt`.
- Either download the full dataset using the Frost API, use the tiny example dataset provided, or download your own time-series data (might require some additional functionality in `./data_provider/data_loader.py`, see Autoformer repo).
- Train models using the desired configuration set-up. You can simply write bash scripts in the same manner as here if desired.
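If you bring your own time series, the loader has to cut it into input and target windows. The sketch below shows one way to do this, assuming the `seq_len` / `label_len` / `pred_len` convention from the Autoformer repo, and uses the windows to evaluate a simple persistence forecast (repeat the last observed value). The helpers `make_windows` and `persistence_forecast` are hypothetical and are not the functions in `./data_provider/data_loader.py`.

```python
def make_windows(series, seq_len, label_len, pred_len):
    """Cut a series into (encoder_input, decoder_target) pairs.

    encoder_input:  seq_len past values
    decoder_target: the last label_len values of the input followed by
                    the pred_len future values to be predicted
    """
    if label_len > seq_len:
        raise ValueError("label_len must not exceed seq_len")
    n_windows = len(series) - seq_len - pred_len + 1
    windows = []
    for s_begin in range(max(n_windows, 0)):
        s_end = s_begin + seq_len
        r_begin = s_end - label_len
        r_end = s_end + pred_len
        windows.append((series[s_begin:s_end], series[r_begin:r_end]))
    return windows


def persistence_forecast(history, pred_len):
    """Persistence baseline: repeat the most recent observation."""
    return [history[-1]] * pred_len


# Toy series (assumed data): ten evenly increasing values.
series = [float(v) for v in range(10)]
windows = make_windows(series, seq_len=4, label_len=2, pred_len=3)

# Mean absolute error of the persistence baseline over all windows.
abs_errors = []
for enc_in, dec_target in windows:
    future = dec_target[-3:]  # the pred_len values after the input window
    forecast = persistence_forecast(enc_in, pred_len=3)
    abs_errors.extend(abs(f - y) for f, y in zip(forecast, future))
mae = sum(abs_errors) / len(abs_errors)
```

A baseline like this is useful as a sanity check before training the larger models on a new dataset.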

If you found this repo useful, please cite our paper:

@article{Bentsen2022forecasting,

title = {Spatio-temporal wind speed forecasting using graph networks and novel Transformer architectures},

journal = {Applied Energy},

volume = {333},

pages = {120565},

year = {2023},

issn = {0306-2619},

doi = {https://doi.org/10.1016/j.apenergy.2022.120565},

url = {https://www.sciencedirect.com/science/article/pii/S0306261922018220},

author = {Lars Ødegaard Bentsen and Narada Dilp Warakagoda and Roy Stenbro and Paal Engelstad},

}

If you have any questions or want to use the code, please contact l.o.bentsen@its.uio.no.

We appreciate the following GitHub repos for their publicly available code and methods:

https://github.com/thuml/Autoformer

https://github.com/zhouhaoyi/Informer2020

https://github.com/mlpotter/Transformer_Time_Series