Neural networks are becoming increasingly popular and are responsible for some of the most cutting-edge advancements in data science, including image and speech recognition. They have also reduced the need for the intensive and often time-consuming feature engineering that traditional supervised learning tasks require. In this lesson, we'll investigate the architecture of neural networks.

You will be able to:

- Explain what neural networks are and what they can achieve

- List the components of a neural network

- Explain forward propagation in a neural network

- Explain backward propagation and discuss how it is related to forward propagation

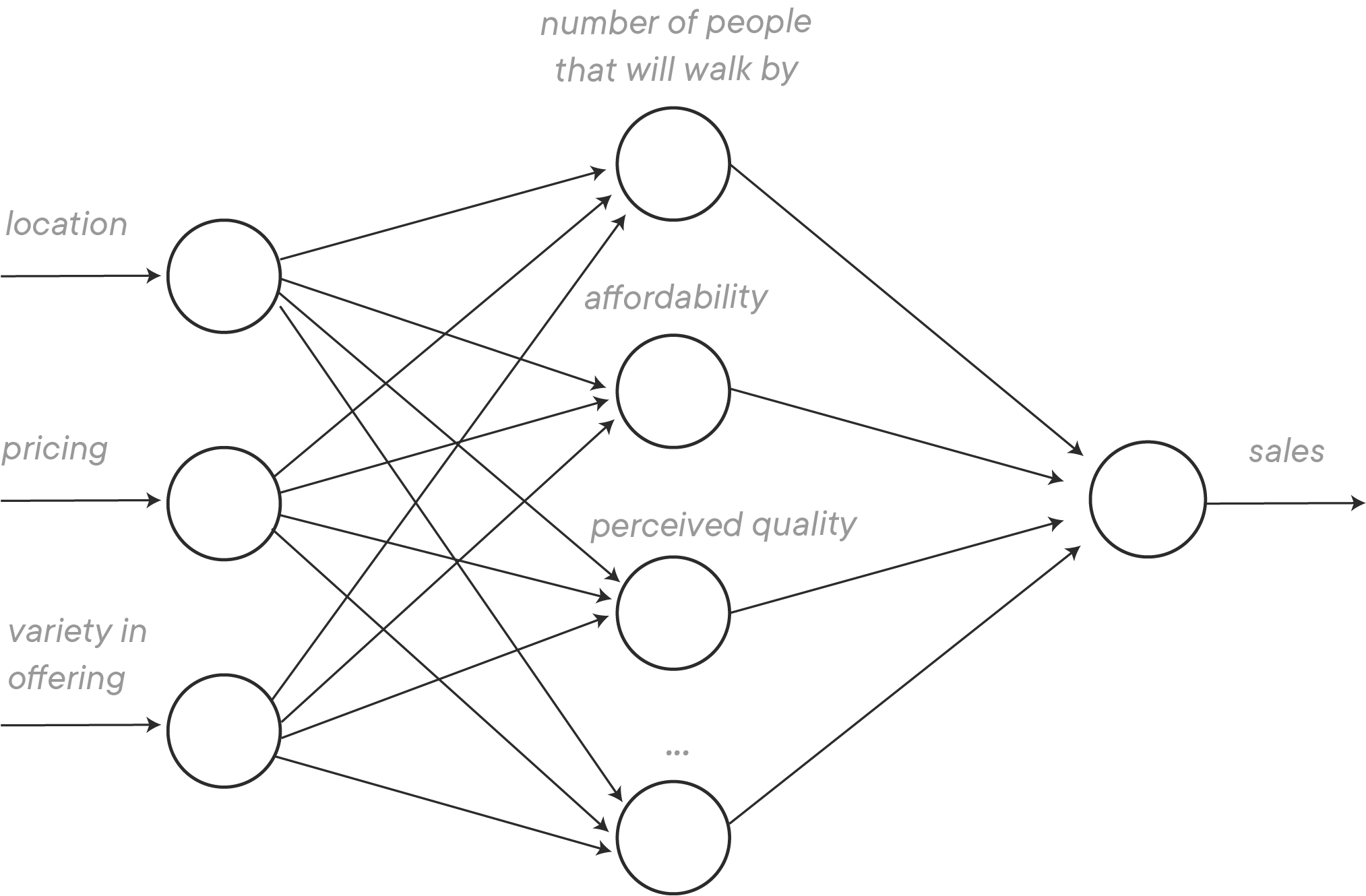

Let's start with an easy example to get an idea of what a neural network is. Imagine a city has 10 ice cream vendors. We would like to predict what the sales amount is for an ice cream vendor given certain input features. Let's say you have several features to predict the sales for each ice cream vendor: the location, the way the ice cream is priced, and the variety in the ice cream offerings.

Let's look at the input feature location. You know that one of the things that really affects sales is how many people walk by the ice cream shop, as these are all potential customers. And realistically, the volume of people passing by is largely driven by the location.

Next, let's look at the input feature pricing. How the ice cream is priced really tells us something about the affordability, which will affect sales as well.

Last, let's look at the variety in offering. When an ice cream shop offers a lot of different ice cream flavors, this might be perceived as a higher quality shop just because customers have more flavors to choose from (and might really like that!). On the other hand, pricing might also affect perceived quality: customers might feel that the quality is higher when the prices are too. This shows that several inputs might affect one "hidden feature", which is the name for the nodes in the so-called "hidden layer".

In reality, all features will be connected with all nodes in the hidden layer, and weights will be assigned to the edges (more about this later), as you can see in the network below. That's why networks like this are also referred to as densely connected neural networks.

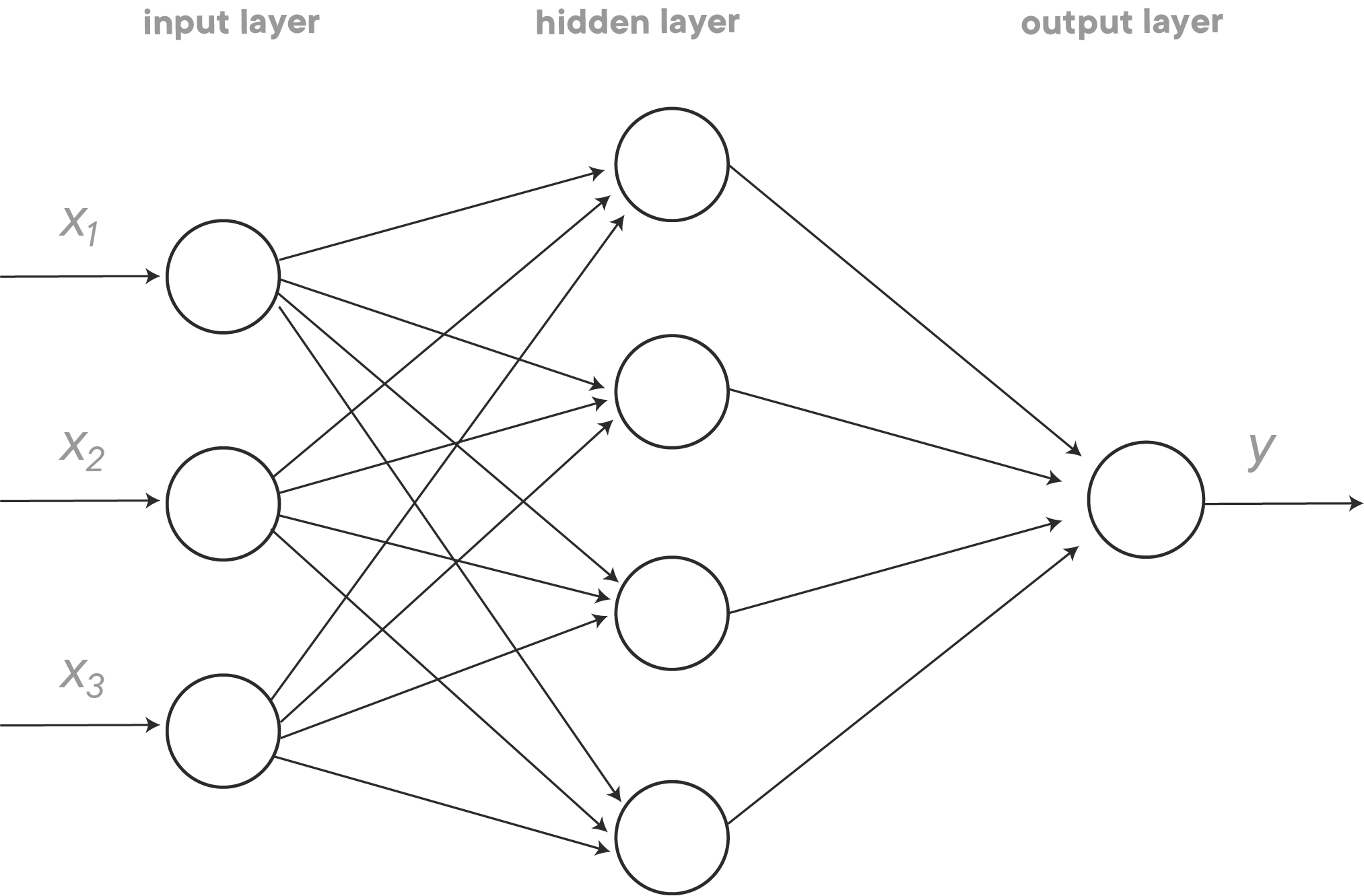

When we generalize this, a neural network looks like the configuration below.

As you can see, to implement a neural network, we need to feed it the inputs

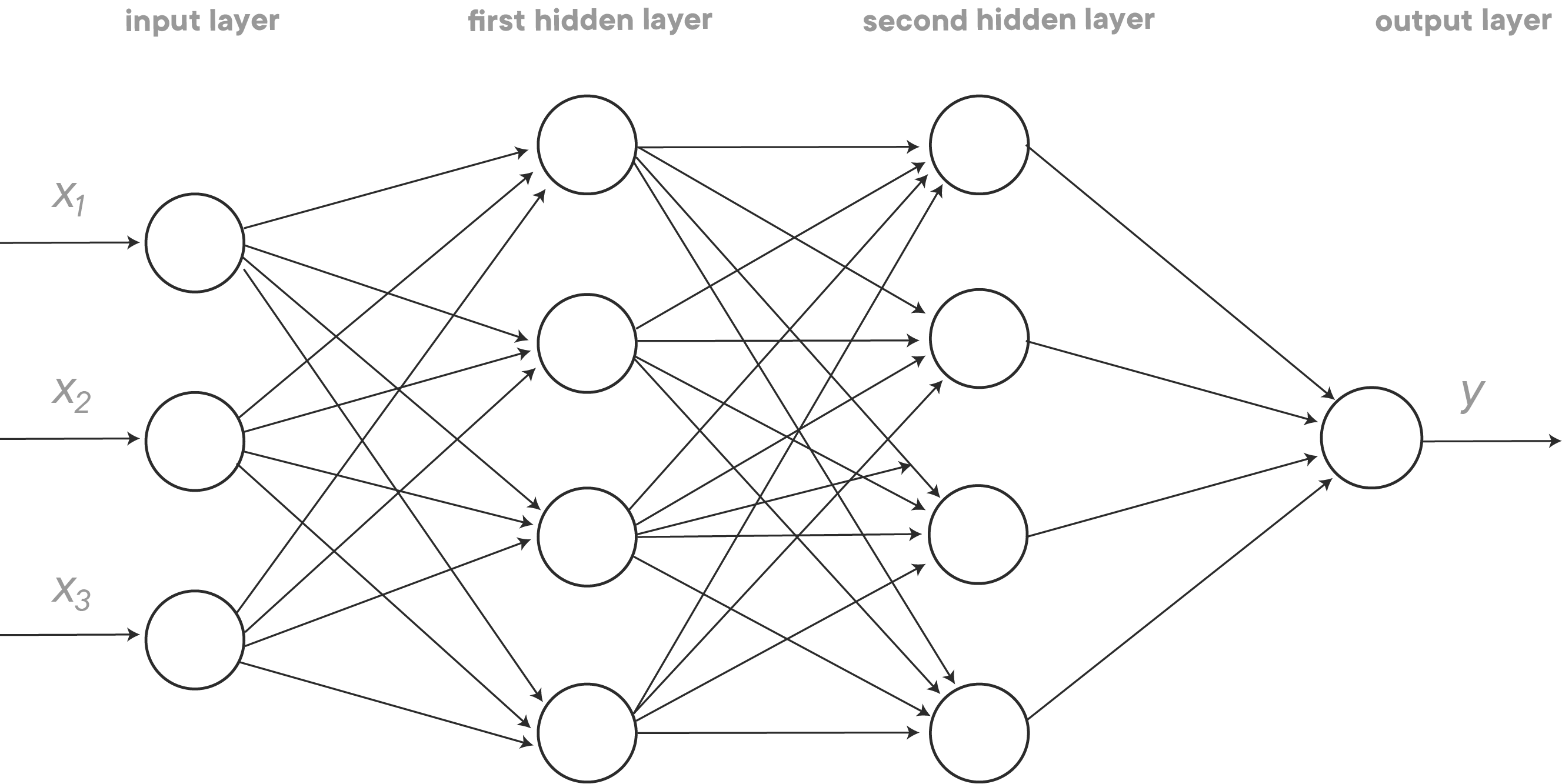

In our previous example, we have three input units, a hidden layer with four units, and one output unit. Notice that networks come in all shapes and sizes. This is only one example of what deep learning is capable of! The network described above can be extended almost endlessly:

- We can add more features (nodes) in the input layer

- We can add more nodes in the hidden layer. Also, we can simply add more hidden layers. This is what turns a neural network into a "deep" neural network (hence, deep learning)

- We can also have several nodes in the output layer
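To make the 3-4-1 network from the ice cream example concrete, here is a minimal NumPy sketch of one pass through a densely connected network. The feature values, the random weights, and the choice of a sigmoid in the hidden layer are all assumptions made for illustration; the lesson does not prescribe them.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical, already-scaled features for one vendor:
# location, pricing, variety (one column vector with 3 rows)
x = np.array([[0.8], [0.3], [0.5]])

# Every input is connected to every hidden node, so the hidden layer
# needs a 4 x 3 weight matrix plus one bias per hidden node.
W1 = rng.normal(size=(4, 3))
b1 = np.zeros((4, 1))

# The single output node is connected to all 4 hidden nodes.
W2 = rng.normal(size=(1, 4))
b2 = np.zeros((1, 1))

hidden = sigmoid(W1 @ x + b1)   # values of the 4 hidden features
output = W2 @ hidden + b2       # predicted sales (untrained, so meaningless)

n_params = W1.size + b1.size + W2.size + b2.size
print(hidden.shape, output.shape, n_params)
```

Adding input features, hidden nodes, or extra hidden layers only changes the shapes of these weight matrices, which is why the same recipe scales to much larger networks.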

And there is one more thing that makes deep learning extremely powerful: unlike many other statistical and machine learning techniques, deep learning can deal extremely well with unstructured data.

In the ice cream vendor example, the input features can be seen as structured data. The input features very much take the form of a "classical" dataset: observations are rows, features are columns. Examples of unstructured data, however, are images, audio files, text data, etc. Historically, and unlike humans, machines had a very hard time interpreting unstructured data. Deep learning has drastically improved machine performance on unstructured data!

To illustrate the power of deep learning, we describe some applications of deep learning below:

| Input (x) | Output (y) |
|---|---|
| Features of an ice cream shop | Sales |
| Pictures of cats vs dogs | Cat or dog? |
| Pictures of presidents | Which president is it? |
| Dutch text | English text |
| Audio files | Text |
| ... | ... |

Types of neural networks:

- Standard neural networks

- Convolutional neural networks (input = images, video)

- Recurrent neural networks (input = audio files, text, time series data)

- Generative adversarial networks

You'll see that there is quite a bit of theory and mathematical notation needed when using neural networks. We'll introduce all this for the first time by using an example. Imagine we have a dataset with images. Some of them have Santa in them, others don't. We'll train a neural network so it can detect whether Santa is in a picture or not.

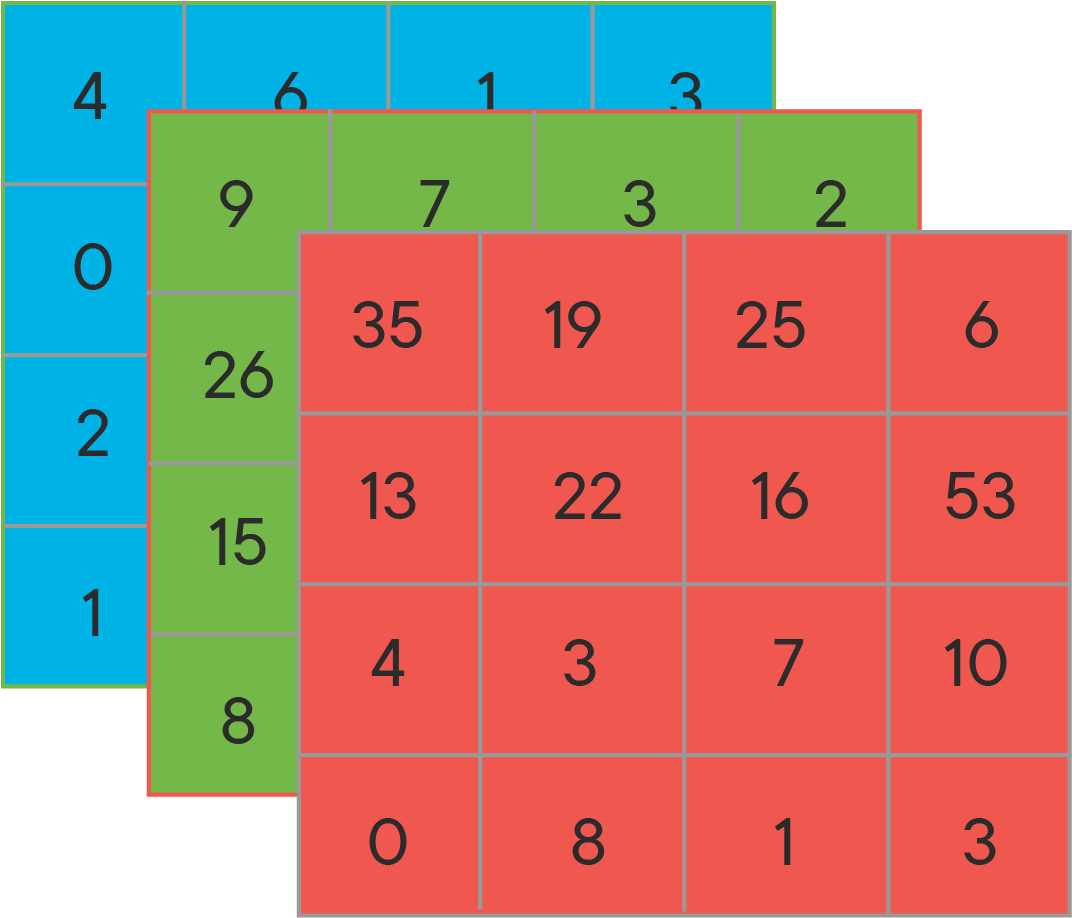

As mentioned before, this is the kind of problem where the input data is composed of images. Now how does Python read images? To store an image, your computer stores three matrices which correspond to three color channels: red, green, and blue (also referred to as RGB). The numbers in each of the three matrices correspond to the pixel intensity values in each of the three colors. The picture below denotes a hypothetical representation of a 4 x 4 pixel image (note that 4 x 4 is tiny; generally you'll have much bigger dimensions). Pixel intensity values are on the scale [0, 255].
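As a rough illustration of this storage format, the sketch below builds a 4 x 4 RGB image as a NumPy array and pulls out the three color matrices. The pixel values are random placeholders, not the values from the picture, and the height x width x channel layout is an assumption (it is the layout most Python image libraries return).

```python
import numpy as np

rng = np.random.default_rng(42)

# Placeholder 4 x 4 image: height x width x 3 color channels,
# with integer intensities on the scale [0, 255].
image = rng.integers(0, 256, size=(4, 4, 3), dtype=np.uint8)

# One 4 x 4 matrix per color channel
red = image[:, :, 0]
green = image[:, :, 1]
blue = image[:, :, 2]

print(image.shape)  # (4, 4, 3)
print(red)          # the 4 x 4 matrix of red intensities
```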

Having three matrices associated with one image, we'll need to modify this shape to get to one input feature vector. You'll want to "unrow" your input feature values into one so-called "feature vector". You should start with unrowing the red pixel matrix, then the green one, then the blue one. Unrowing the RGB matrices in the image above would result in:

$x = \begin{bmatrix} 35 \\ 19 \\ \vdots \\ 9 \\ 7 \\ \vdots \\ 4 \\ 6 \\ \vdots \end{bmatrix}$

The resulting feature vector is a matrix with one column and 4 x 4 x 3 = 48 rows. Let's introduce some more notation to formalize this all.
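The unrowing step can be sketched in NumPy as follows. The helper name `unrow` and the placeholder pixel values are hypothetical; the sketch assumes the image is stored as a height x width x channel array and follows the order described above (red first, then green, then blue).

```python
import numpy as np

def unrow(image):
    """Turn a (height, width, 3) image into one column vector.

    The red matrix is unrowed first (row by row), then green, then blue.
    """
    channels_first = np.transpose(image, (2, 0, 1))  # shape (3, height, width)
    return channels_first.reshape(-1, 1)             # shape (3 * height * width, 1)

# Placeholder 4 x 4 RGB image filled with the numbers 0..47
image = np.arange(48).reshape(4, 4, 3)

x = unrow(image)
print(x.shape)  # (48, 1)
```

Moving the channel axis to the front before reshaping is what guarantees that all red values come before any green value.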

Let's say you have one training sample. Your training set then looks like this:

Similarly, let's say the test set has

Note that the resulting matrix

$ \hspace{1.1cm} x^{(1)} \hspace{0.4cm} x^{(2)} \hspace{1.4cm} x^{(l)} $

The training set labels matrix has dimensions

where 1 means that the image contains a Santa, 0 means there is no Santa in the image.

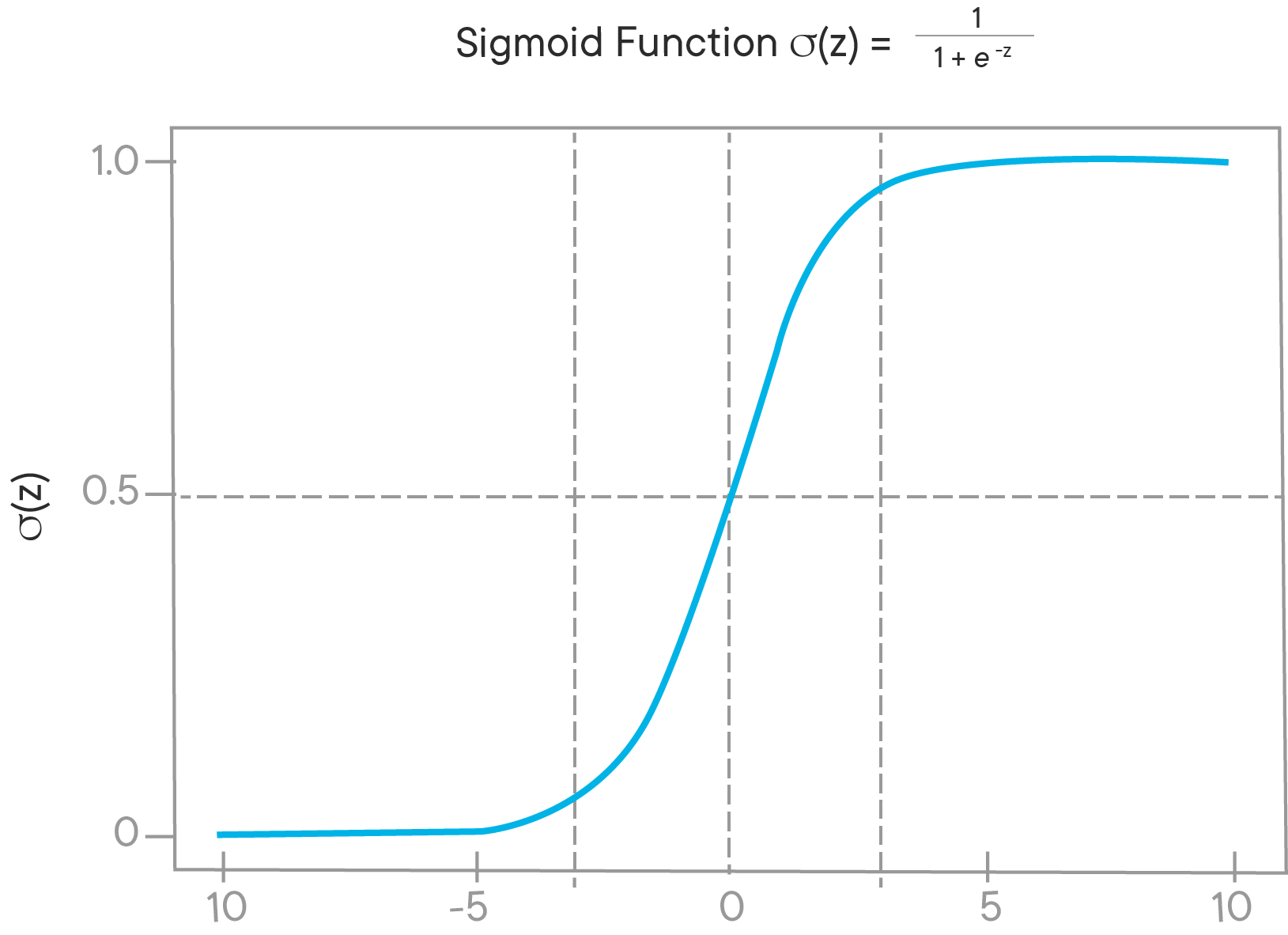

So how will we be able to predict whether $y$ is 0 or 1 for a certain image? You might remember from logistic regression models that the eventual predictor,

Formally, you'll denote that as $ \hat y = P(y=1 \mid x) $.

Remember that

We'll need some expression here in order to make a prediction. The parameters here are

This is why a transformation of

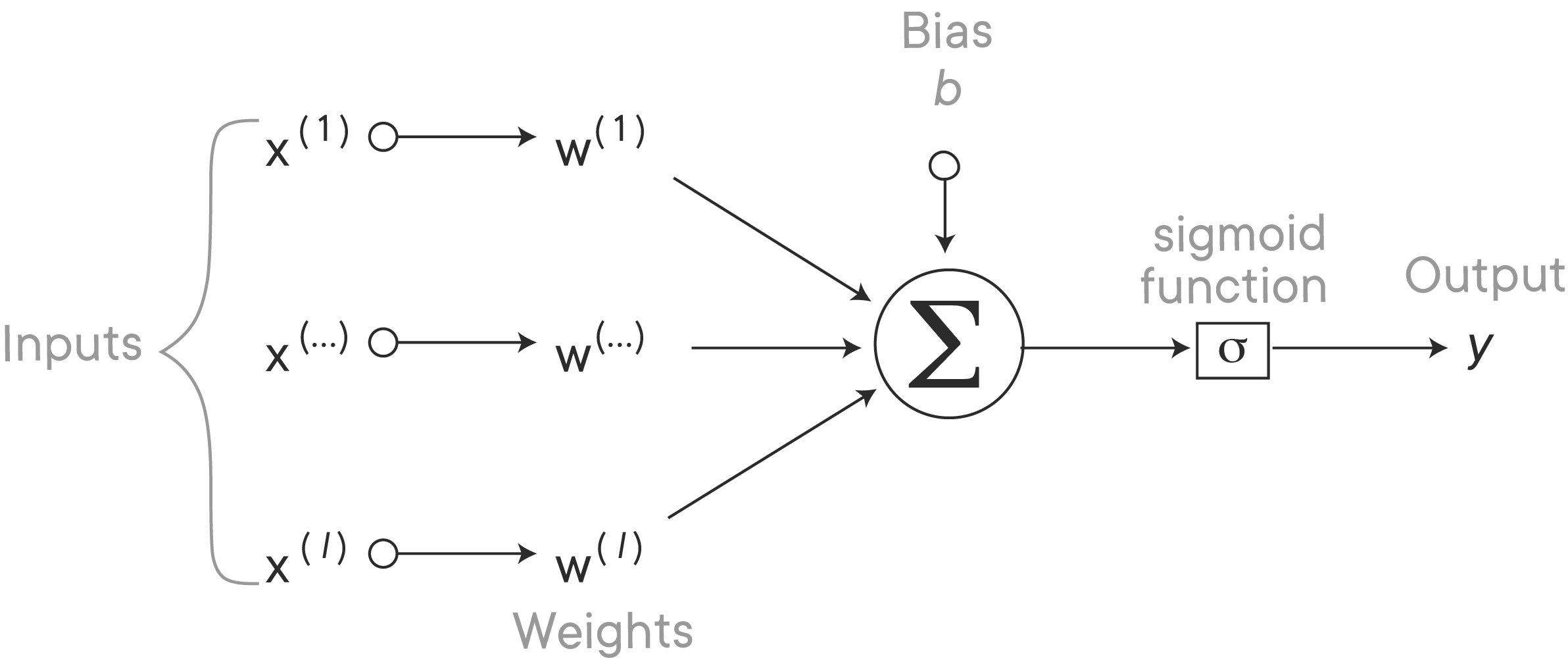
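The prediction step can be sketched in a few lines, assuming the linear score $z = w^T x + b$ and the sigmoid $\hat y = \sigma(z)$ that appear later in this lesson. The feature values, the scaling to [0, 1], and the zero initial parameters are placeholders chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Map any real number to a probability-like value in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

n = 48  # length of the unrowed 4 x 4 x 3 feature vector
rng = np.random.default_rng(1)

# Placeholder feature vector, scaled from [0, 255] to [0, 1] (an assumption)
x = rng.integers(0, 256, size=(n, 1)) / 255.0

# Parameters: one weight per feature plus a single bias term
w = np.zeros((n, 1))
b = 0.0

z = (w.T @ x + b).item()  # linear score, a single number
y_hat = sigmoid(z)        # estimate of P(y = 1 | x)

print(y_hat)  # 0.5, because all weights and the bias are zero
```

Without the sigmoid, $z$ could be any real number; the transformation is what lets $\hat y$ be read as a probability.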

Bringing all this together, the neural network can be represented as follows:

Problem statement: given that we have

The loss function is used to measure the inconsistency between the predicted value

In logistic regression the loss function is defined as

%matplotlib inline

from mpl_toolkits.mplot3d import Axes3D

import matplotlib.pyplot as plt

from matplotlib import cm

from matplotlib.ticker import LinearLocator, FormatStrFormatter

import numpy as np

fig = plt.figure()

ax = fig.gca(projection='3d')

# Generate data

X = np.arange(-5, 5, 0.1)

Y = np.arange(-5, 5, 0.1)

X, Y = np.meshgrid(X, Y)

R = X**2+ Y**2 + 6

# Plot the surface

surf = ax.plot_surface(X, Y, R, cmap=cm.coolwarm,

linewidth=0, antialiased=False)

# Customize the z axis

ax.set_zlim(0, 50)

ax.zaxis.set_major_locator(LinearLocator(10))

ax.zaxis.set_major_formatter(FormatStrFormatter('%.02f'))

ax.set_xlabel('w', fontsize=12)

ax.set_ylabel('b', fontsize=12)

ax.set_zlabel('J(w,b)', fontsize=12)

ax.set_yticklabels([])

ax.set_xticklabels([])

ax.set_zticklabels([])

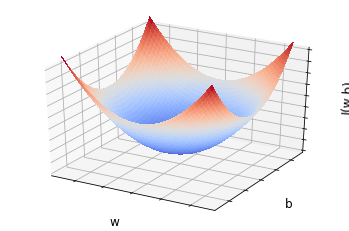

plt.show()Congratulations! You have gotten to the point where you have the expression for the cost function and the loss function. The step we have just taken is called forward propagation.
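As a small sketch of these two quantities, the code below assumes the usual logistic regression (cross-entropy) loss, $\mathcal{L}(\hat y, y) = -\left(y \log \hat y + (1-y) \log (1-\hat y)\right)$, which is consistent with the derivative used in the backpropagation steps of this lesson. Taking the cost as the average loss over all samples is also an assumption, and the labels and predictions are made up.

```python
import numpy as np

def loss(y_hat, y):
    """Cross-entropy loss for one prediction (or element-wise for arrays)."""
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

def cost(y_hat, y):
    """Average loss over all training samples."""
    return float(np.mean(loss(y_hat, y)))

# Made-up labels and predicted probabilities for four images
y = np.array([1, 0, 1, 0])
y_hat = np.array([0.9, 0.2, 0.6, 0.5])

print(loss(y_hat, y))  # one loss value per sample
print(cost(y_hat, y))  # a single number summarizing the fit
```

A confident correct prediction (0.9 for a label of 1) is penalized less than a hesitant one (0.6), which is the behavior you want from a loss function.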

The cost function takes a convex form, looking much like this plot here! The idea is that you'll start with some initial values of

Looking at

Remember that $ \displaystyle \frac{dJ(w)}{dw}$ and

What we have just explained here is called backpropagation. You need to take the derivatives to calculate the difference between the desired and calculated outcome, and repeat these steps until you get to the lowest possible cost value!
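To see the "repeat until you reach the lowest cost" idea in action, here is a minimal gradient descent sketch. It uses the bowl-shaped surface from the plot, $J(w,b) = w^2 + b^2 + 6$, as a stand-in for a real cost function; the starting point, the learning rate `alpha`, and the number of steps are arbitrary choices.

```python
def J(w, b):
    """Stand-in convex cost: the surface drawn in the 3D plot."""
    return w**2 + b**2 + 6

def gradient(w, b):
    """Partial derivatives of J with respect to w and b."""
    return 2 * w, 2 * b

w, b = 4.0, -3.0   # arbitrary starting values
alpha = 0.1        # learning rate (assumed value)

for step in range(100):
    dw, db = gradient(w, b)
    # Step against the gradient, i.e. downhill on the cost surface
    w = w - alpha * dw
    b = b - alpha * db

print(w, b, J(w, b))  # w and b end up very close to 0, J very close to 6
```

Because the surface is convex, every downhill step moves toward the single minimum at $w = 0$, $b = 0$, regardless of the starting point.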

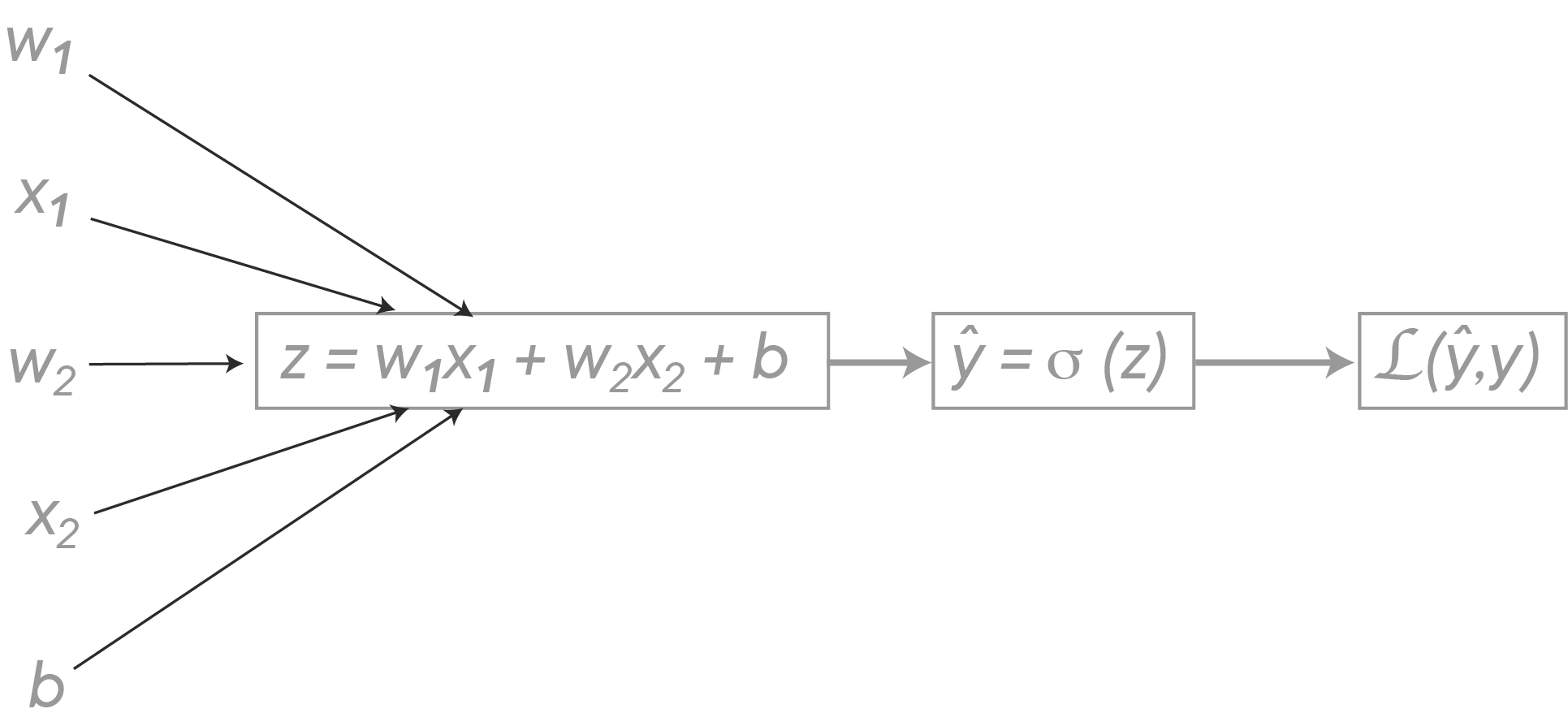

When using the chain rule, computation graphs are popular. Imagine there are just two features

You'll first want to compute the derivative to the loss with respect to

This will be explained in more detail in class, but what you need to know is that you backpropagate in three steps:

- First, you'll want to go from $\mathcal{L}(\hat y , y)$ to $\hat y = \sigma (z)$. You can do this by taking the derivative of $\mathcal{L}(\hat y , y)$ with respect to $\hat y$, and it can be shown that this is given by $\displaystyle \frac{d\mathcal{L}(\hat y , y)}{d \hat y} = \displaystyle \frac{-y}{\hat y}+\displaystyle \frac{1-y}{1-\hat y}$

- As a next step, you'll want to take the derivative with respect to $z$. It can be shown that $dz = \displaystyle\frac{d\mathcal{L}(\hat y , y)}{d z} = \hat y - y$. This derivative can also be written as $\displaystyle\frac{d\mathcal{L}}{d\hat y} \displaystyle\frac{d\hat y}{dz}$.

- Last, and this is where you want to get to, you need to derive $\mathcal{L}$ with respect to $w_1$, $w_2$ and $b$. It can be shown that: $dw_1 = \displaystyle\frac{d\mathcal{L}(\hat y , y)}{d w_1} = \displaystyle\frac{d\mathcal{L}(\hat y , y)}{d \hat y}\displaystyle\frac{d\hat y}{dz}\displaystyle\frac{dz}{d w_1} = x_1 dz$

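Similarly, it can be shown that:

$dw_2 = \displaystyle\frac{d\mathcal{L}(\hat y , y)}{d w_2} = x_2 dz$

and

$db = \displaystyle\frac{d\mathcal{L}(\hat y , y)}{d b} = dz$

with

$dz = \hat y - y$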
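The backpropagation formulas for a single training sample can be checked numerically. The sketch below assumes two features with $z = w_1 x_1 + w_2 x_2 + b$ and the cross-entropy loss, so that the same chain rule gives $dw_1 = x_1 dz$, $dw_2 = x_2 dz$ and $db = dz$ with $dz = \hat y - y$. It then compares those gradients with finite-difference estimates. All numbers are made up.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w1, w2, b, x1, x2, y):
    """Forward pass for one sample, returning the cross-entropy loss."""
    y_hat = sigmoid(w1 * x1 + w2 * x2 + b)
    return -(y * np.log(y_hat) + (1 - y) * np.log(1 - y_hat))

# One made-up training sample and made-up parameter values
x1, x2, y = 0.5, -1.2, 1.0
w1, w2, b = 0.3, 0.8, -0.1

# Backpropagation using the chain-rule results
y_hat = sigmoid(w1 * x1 + w2 * x2 + b)
dz = y_hat - y
dw1, dw2, db = x1 * dz, x2 * dz, dz

# Finite-difference estimates: nudge one parameter at a time
eps = 1e-6
num_dw1 = (loss(w1 + eps, w2, b, x1, x2, y) - loss(w1 - eps, w2, b, x1, x2, y)) / (2 * eps)
num_dw2 = (loss(w1, w2 + eps, b, x1, x2, y) - loss(w1, w2 - eps, b, x1, x2, y)) / (2 * eps)
num_db = (loss(w1, w2, b + eps, x1, x2, y) - loss(w1, w2, b - eps, x1, x2, y)) / (2 * eps)

print(dw1, num_dw1)
print(dw2, num_dw2)
print(db, num_db)
```

A numerical check like this is a common way to catch sign or indexing mistakes in a hand-derived gradient.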

Remember that this example just incorporates one training sample. Let's look at how this is done when you have multiple training samples! We basically want to compute the derivative of the overall cost function:

Let's have a look at how we will get to the minimization of the cost function. As mentioned before, we'll have to initialize some values.

Initialize

For each training sample

$ z^{(i)} = w^T x^ {(i)} +b $

Then, you'll need to make an update:

After that, update:

repeat until convergence!
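The whole loop can be sketched in vectorized NumPy as shown below. This is one possible reading of the steps above, not the lab's reference solution: the function name `train`, the zero initialization, the learning rate `alpha`, the fixed iteration count (used instead of a convergence test), and the toy dataset are all assumptions. Samples are stored as columns, matching the $x^{(i)}$ notation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, y, alpha=0.1, n_iter=1000):
    """Gradient descent for logistic regression.

    X has shape (n_features, n_samples); y has shape (1, n_samples).
    """
    n_features, n_samples = X.shape
    w = np.zeros((n_features, 1))   # initialize the parameters
    b = 0.0
    for _ in range(n_iter):
        z = w.T @ X + b             # forward propagation for all samples
        y_hat = sigmoid(z)
        dz = y_hat - y              # backpropagation, one value per sample
        dw = X @ dz.T / n_samples   # average gradient for each weight
        db = float(np.mean(dz))     # average gradient for the bias
        w = w - alpha * dw          # update step
        b = b - alpha * db
    return w, b

# Toy data: the label is 1 when the two features sum to a positive number
rng = np.random.default_rng(0)
X = rng.normal(size=(2, 200))
y = (X[0:1, :] + X[1:2, :] > 0).astype(float)

w, b = train(X, y)
predictions = sigmoid(w.T @ X + b) > 0.5
accuracy = float(np.mean(predictions == y))
print(accuracy)
```

Averaging the per-sample gradients is what turns the single-sample derivatives into the derivative of the overall cost function.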

In this lesson, you learned about the basics of neural networks, forward propagation, and backpropagation. We explained these new concepts using a logistic regression example. In the following lab, you'll learn how to do all this in Python.