A transformer-based encoder-decoder system that predicts a recipe (ingredients and cooking instructions) from an image of the dish.

References:

- Inverse Cooking: Recipe Generation from Food Images (Salvador et al., CVPR 2019): https://arxiv.org/pdf/1812.06164.pdf

- Effective Approaches to Attention-based Neural Machine Translation (Luong et al., EMNLP 2015): https://nlp.stanford.edu/pubs/emnlp15_attn.pdf

- Machine translation with encoder-decoder models (Analytics Vidhya, Medium): https://medium.com/analytics-vidhya/machine-translation-encoder-decoder-model-7e4867377161

Requirements:

- numpy

- scipy

- matplotlib

- nltk

- Pillow

- tqdm

- lmdb

- tensorflow

- tensorboardX

- PyTorch 0.4.1

Background concepts:

- Transformer

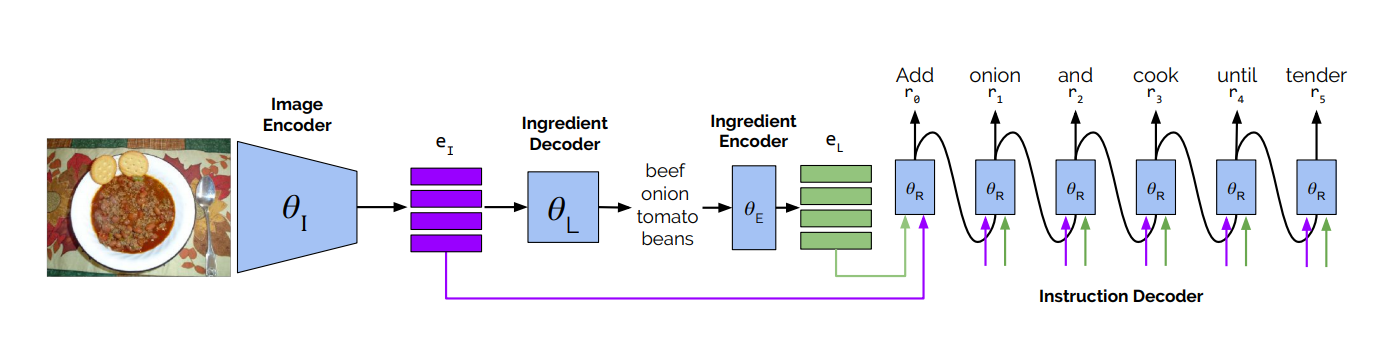

- Encoders and Decoders

- Attention networks

- RNNs

- LSTMs

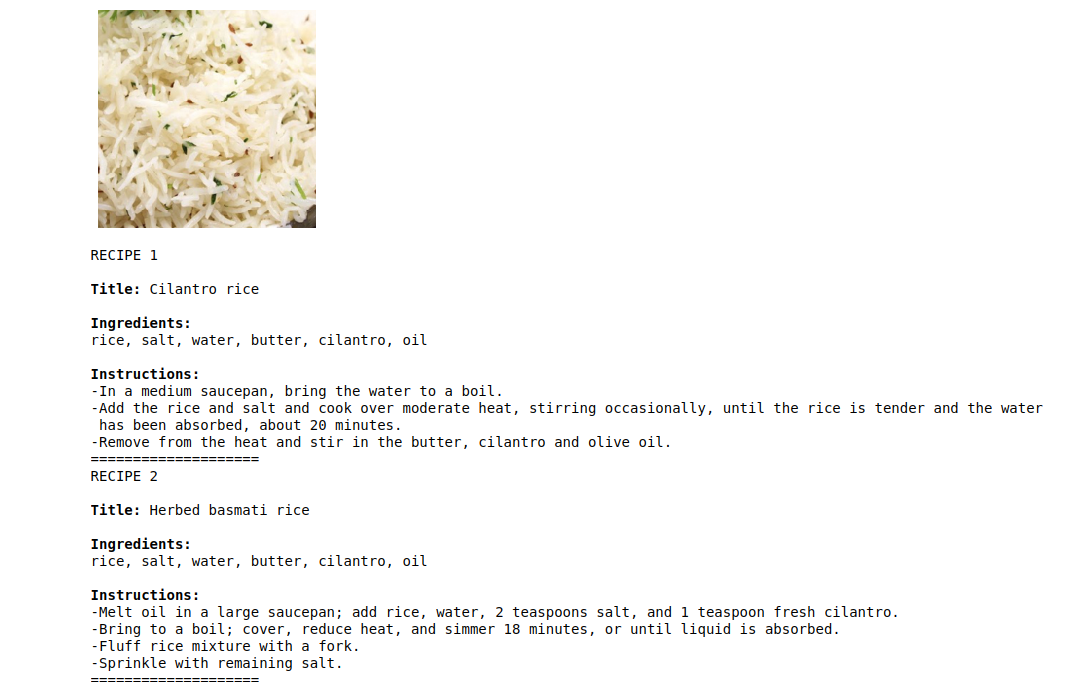
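Transformers are built on attention. As an illustration of the idea, here is a minimal pure-Python sketch of scaled dot-product attention for a single query vector. It is not code from this project (which would operate on batched PyTorch tensors), and the function names are hypothetical.

```python
import math


def softmax(scores):
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(query, keys, values):
    """Attend from one query vector over a sequence of key/value vectors.

    query:  list[float] of length d
    keys:   list of list[float], each of length d
    values: list of list[float], each of length d_v
    Returns (context vector, attention weights).
    """
    d = len(query)
    # Score each key by its dot product with the query, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # The context vector is the attention-weighted sum of the value vectors.
    d_v = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)]
    return context, weights


context, weights = scaled_dot_product_attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, context)
```

The query is most similar to the first key, so the first value vector receives the larger weight. The scaling by `sqrt(d)` keeps dot products from growing with the vector dimension, which would otherwise push the softmax toward a near one-hot distribution.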

The Recipe1M dataset is composed of 1,029,720 recipes scraped from cooking websites. It is split into 720,639 training, 155,036 validation and 154,045 test recipes, each containing a title, a list of ingredients, a list of cooking instructions and (optionally) an image.
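A quick sanity check that the three splits stated above account for the whole dataset, and what share each one represents:

```python
# Recipe1M split sizes as stated above.
splits = {"train": 720_639, "val": 155_036, "test": 154_045}

total = sum(splits.values())
print(total)  # 1029720

for name, n in splits.items():
    print(f"{name}: {n / total:.1%}")
```

The splits sum exactly to the 1,029,720 recipes in the dataset and correspond to roughly a 70/15/15 train/validation/test split.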

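Training is done in two stages. In the first stage, we pre-train the image encoder and the ingredient decoder. In the second stage, we train the ingredient encoder and the instruction decoder by minimizing the negative log-likelihood, adjusting θR and θE, the parameters of the instruction decoder and the ingredient encoder, respectively.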
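The second-stage objective is the negative log-likelihood of the ground-truth instruction tokens. A minimal pure-Python sketch of that loss for a single recipe, assuming the decoder has already produced a probability distribution over the vocabulary at every step (the function name and input layout are illustrative, not this repository's API):

```python
import math


def sequence_nll(step_probs, target_ids):
    """Negative log-likelihood of a target token sequence.

    step_probs: one probability distribution (list of floats over the
        vocabulary) per decoding step; assumed to come from a decoder fed
        the ground-truth prefix at each step (teacher forcing).
    target_ids: the ground-truth token index at each step.
    """
    if len(step_probs) != len(target_ids):
        raise ValueError("need one distribution per target token")
    # Sum of -log p(correct token) over all decoding steps.
    return -sum(math.log(probs[t]) for probs, t in zip(step_probs, target_ids))


# Toy example: a two-word vocabulary and a two-token target sequence.
loss = sequence_nll([[0.5, 0.5], [0.25, 0.75]], [0, 1])
print(loss)
```

Minimizing this value pushes up the probability the decoder assigns to each correct token. In PyTorch the same quantity is usually computed from raw logits with `torch.nn.CrossEntropyLoss`, which averages over tokens by default rather than summing as this sketch does.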

Pre-trained files:

- Ingredient vocabulary: https://dl.fbaipublicfiles.com/inversecooking/ingr_vocab.pkl

- Instruction vocabulary: https://dl.fbaipublicfiles.com/inversecooking/instr_vocab.pkl

- Pre-trained model checkpoint: https://dl.fbaipublicfiles.com/inversecooking/modelbest.ckpt
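The vocabularies map between token indices (what the model outputs) and words. The internal structure of the released pickles is not documented here, so the sketch below assumes they can be reduced to an index-to-word mapping and uses a tiny hypothetical vocabulary and `<end>` token so it runs offline. It shows how a sequence of predicted ingredient ids might be turned back into names.

```python
# Hypothetical toy vocabulary standing in for the downloaded ingr_vocab.pkl.
idx2word = {0: "<end>", 1: "flour", 2: "sugar", 3: "butter", 4: "eggs"}


def decode_ids(ids, idx2word, end_token="<end>"):
    """Map predicted token ids to words, stopping at the end token."""
    words = []
    for i in ids:
        word = idx2word[i]
        if word == end_token:
            break  # Anything after the end token is ignored.
        words.append(word)
    return words


print(decode_ids([1, 3, 0, 2], idx2word))  # ['flour', 'butter']
```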