Airflow pipeline for data reading, cleansing, aggregating, saving, and emailing the results!

Retail store client has number of store outlets located in different locations. From the transactions happening in various stores; the company wants to get 'Daily Profit Reports' on how its stores are performing in market.

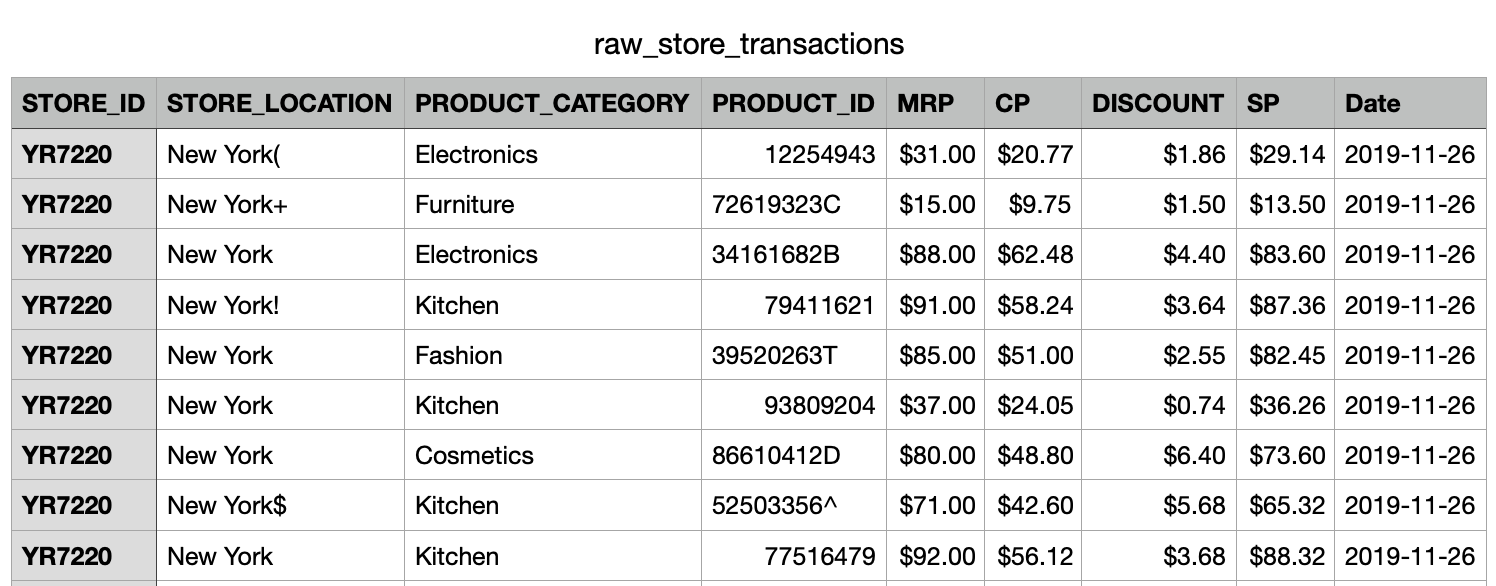

Daily CSV file are given containing transaction data of all stores.

The client wants us to generate daily reports, highlighting out of all locations, which location has made the highest profit? The profit of each individual store?

[Location wise profit - Individual store wise profit]

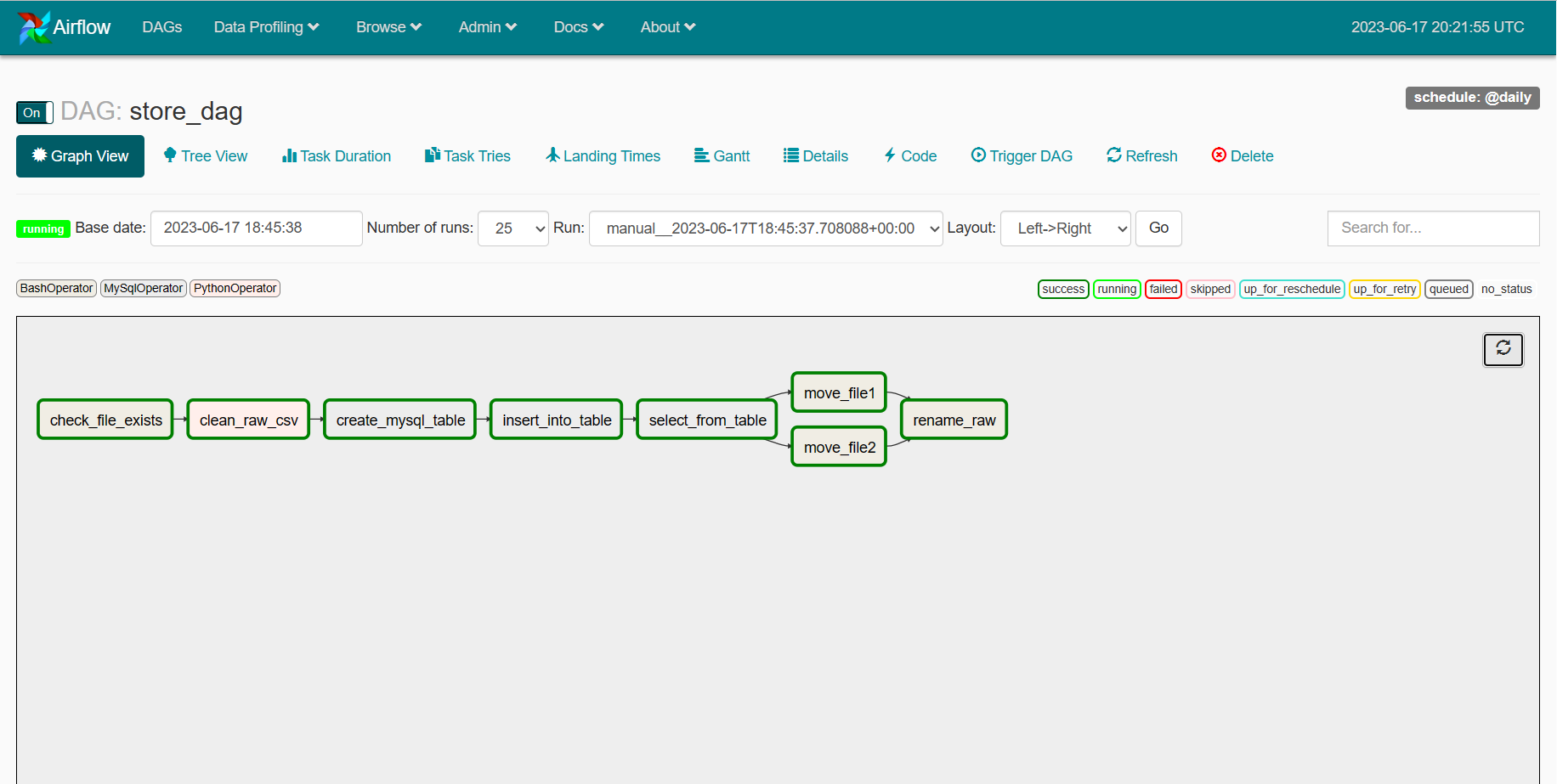

- Read the raw_store_transactions.csv

- Clean data

- Remove special charaters in the STORE_LOCATION column

- Remove special characters in the PRODUCT_ID column

- Create a MySQL table with proper schema for the cleaned store transaction data

- Insert the cleaned store transaction data to the created MySQL table

- Calculate store-wise and location-wise profit for yesterday and save the results in CSV files

- Rename the output files by adding target date . Rename the raw_store_transaction.csv file by adding yeserday date

-

Download and install Docker if you haven't yet. Docker Download

-

Clone the repo and go to the root directory

-

Open

docker-compose-localExecutor.ymland updateAIRFLOW__SMTP__SMTP_USERandAIRFLOW__SMTP__SMTP_PASSWORDwith the proper sender email -

Create a new image by run docker-compose file in terminal and installing necessary containers

➜ docker-compose -f ./docker-compose-localExecutor.yml up -d

-

Make sure proper containers are running

➜ docker ps

-

Open Airflow web server by visiting

https://localhost:8080 -

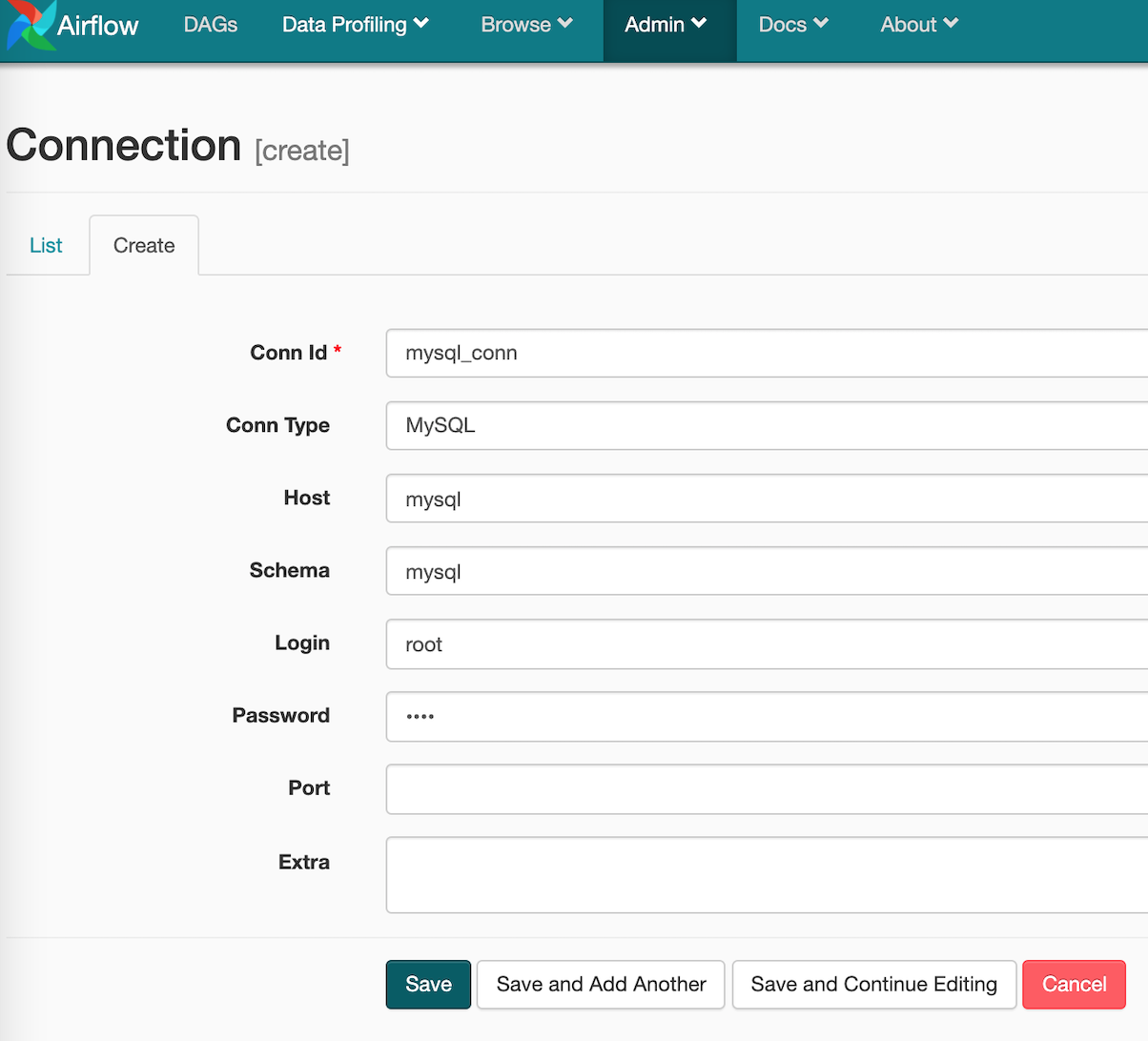

Create MySQL connection by going to Admin> Connections and typing the following information. You have to create connection whenever restarting the web server.

- To check the tables in MySQL, grab the mysql container id from

docker psand rundocker exec -it {container_id} bash.mysql -u root -p,use mysql, andshow tables; - Turn on the

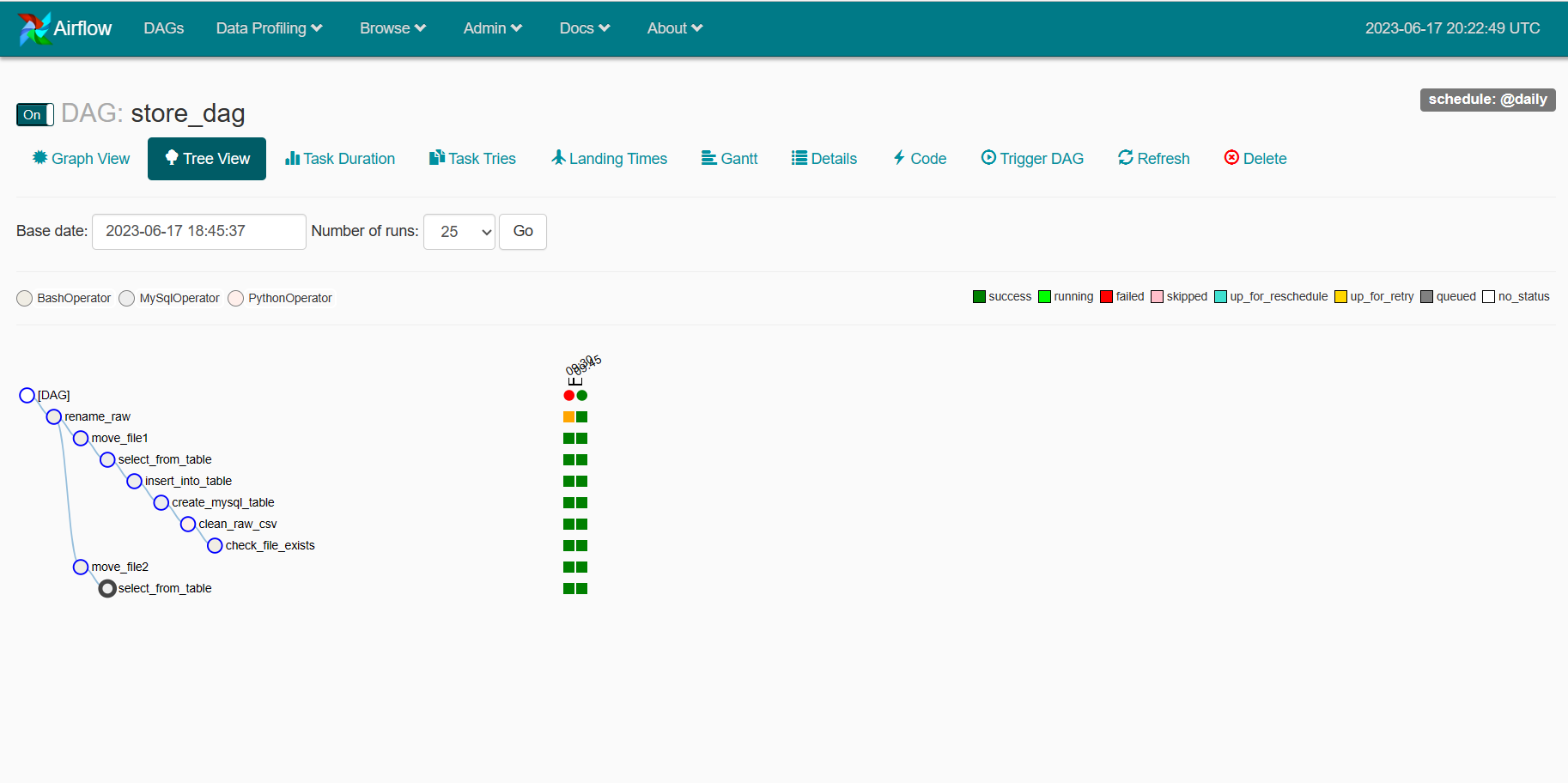

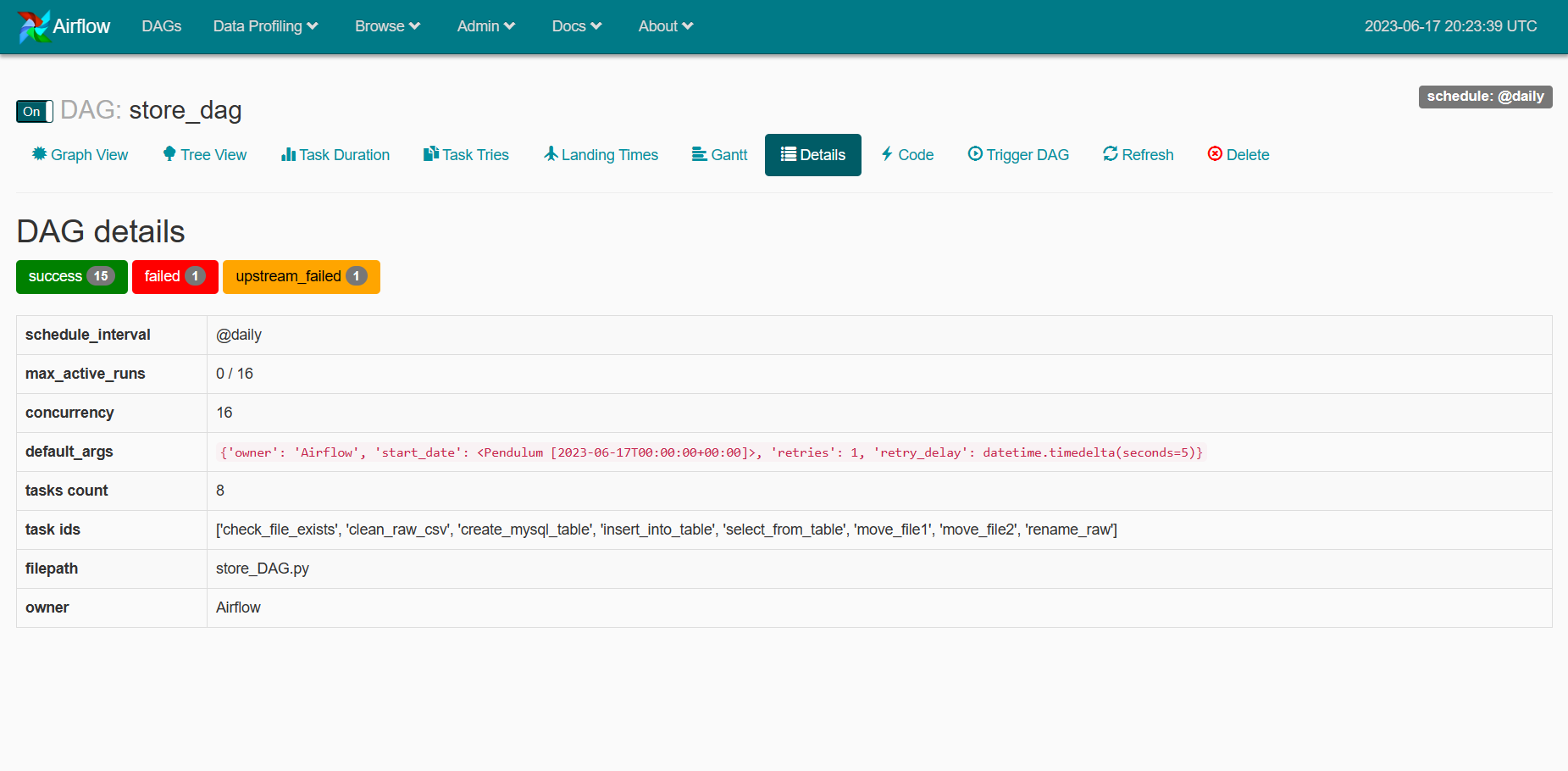

store_dagDAG and trigger the dag.